Deep Learning, The Curse of Dimensionality, and Autoencoders

Autoencoders are an extremely exciting new approach to unsupervised learning and for many machine learning tasks they have already surpassed the decades of progress made by researchers handpicking features.

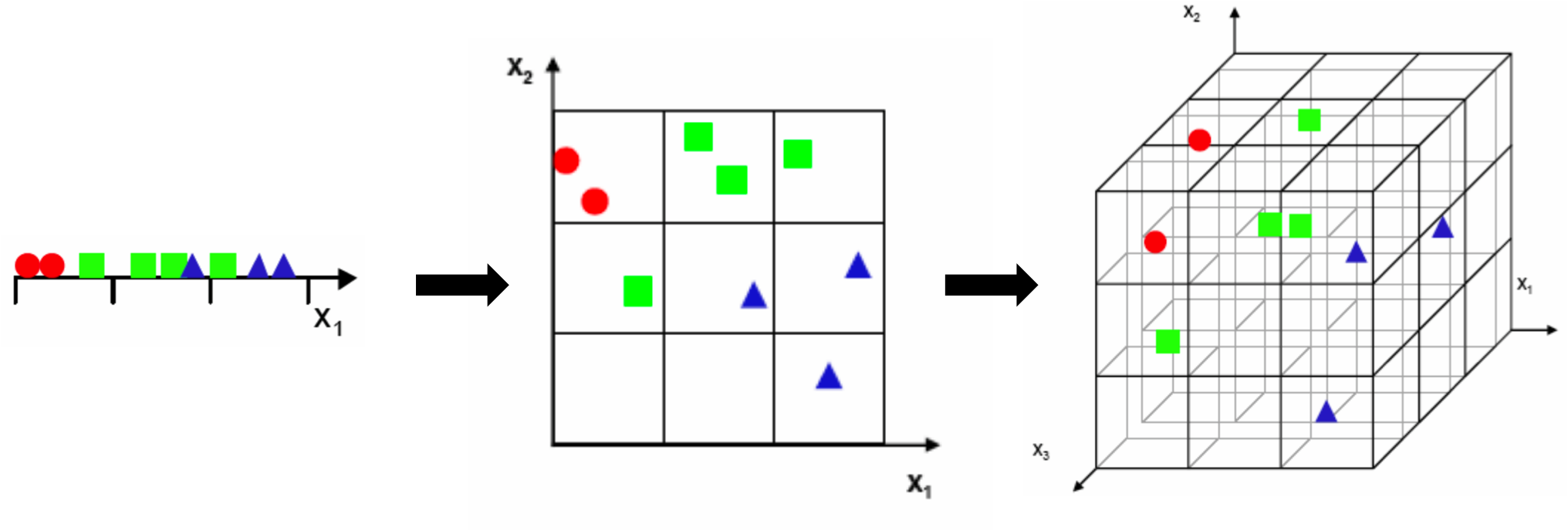

Understandably, we notice there's too much overlap between classes, so we decide to add another feature to improve separability. But we notice that if we keep the same number of samples, we get a 2D scatter plot that's really sparse, and it's really hard to ascertain any meaningful relationships without increasing the number of samples. If we move onto 3-dimensional inputs, it becomes even worse. Now we're trying to fill even more 3^{3} = 27 bins with the same number of examples. The scatter plot is nearly empty!

Of important note is that this is NOT an issue that can be easily solved by more effective computing or improved hardware! For many machine learning tasks, collecting training examples is the most time consuming part, so this mathematical result forces us to be careful about the dimensions we choose to analyze. If we are able to express our inputs in few enough dimensions, we might be able to turn an unfeasible problem into a feasible one!

Manual Feature Selection

Going back to the original problem of classifying facial expressions, it's quite clear that we don't need all 65,000 dimensions to classify an image. Specifically, there are certain key features that our brain automatically uses to detect emotion quickly. For example, the curve of the person's lips, the extent his brow is furrowed, and the shape of his eyes all help us determine that the man in the picture is feeling happy. It turns out that these features can be conveniently summarized by looking at the relative positioning of various facial keypoints, and the distances between them.

Obviously, this allows us to significantly reduce the dimensionality of our input (from 65,000 to about 60), but there are some limitations! Hand selection of features can take years and years of research to optimize. For many problems, the features that matter are not easy to express. For example, it becomes very difficult to select features for generalized object recognition, where the algorithm needs to tell apart birds, from faces, from cars, etc. So how do we extract the most information-rich dimensions from our inputs? This is answered by a field of machine learning that is called unsupervised learning. In the next section, we will be discussing the autoencoder, a unsupervised learning technique pioneered by Geoffrey Hinton that is based on neural networks. We'll briefly talk about how the autoencoder works and how it compares to traditional linear approaches such as principal component analysis (PCA) for computer vision and latent semantic analysis (LSA) for natural language processing.

A Brief Overview of the Autoencoder

An autoencoder is an unsupervised machine learning technique that utilizes a neural network to produce a low dimensional representation of a high dimensional input. Traditionally, dimensionality reduction depended on linear methods such as PCA, which finds the directions of maximal variance in high-dimensional data. By selecting only those axes that have the largest variance, PCA aims to capture the directions that contain the most information about the inputs, so we can express as much as possible with a minimal number of dimensions. The linearity of PCA, however, places significant limitations on the kinds of feature dimensions that can be extracted. Autoencoders overcome these limitations by exploiting the inherent nonlinearity of neural networks.