Deep Learning, The Curse of Dimensionality, and Autoencoders

Autoencoders are an extremely exciting new approach to unsupervised learning, and for many machine learning tasks they have already surpassed the decades of progress made by researchers handpicking features.

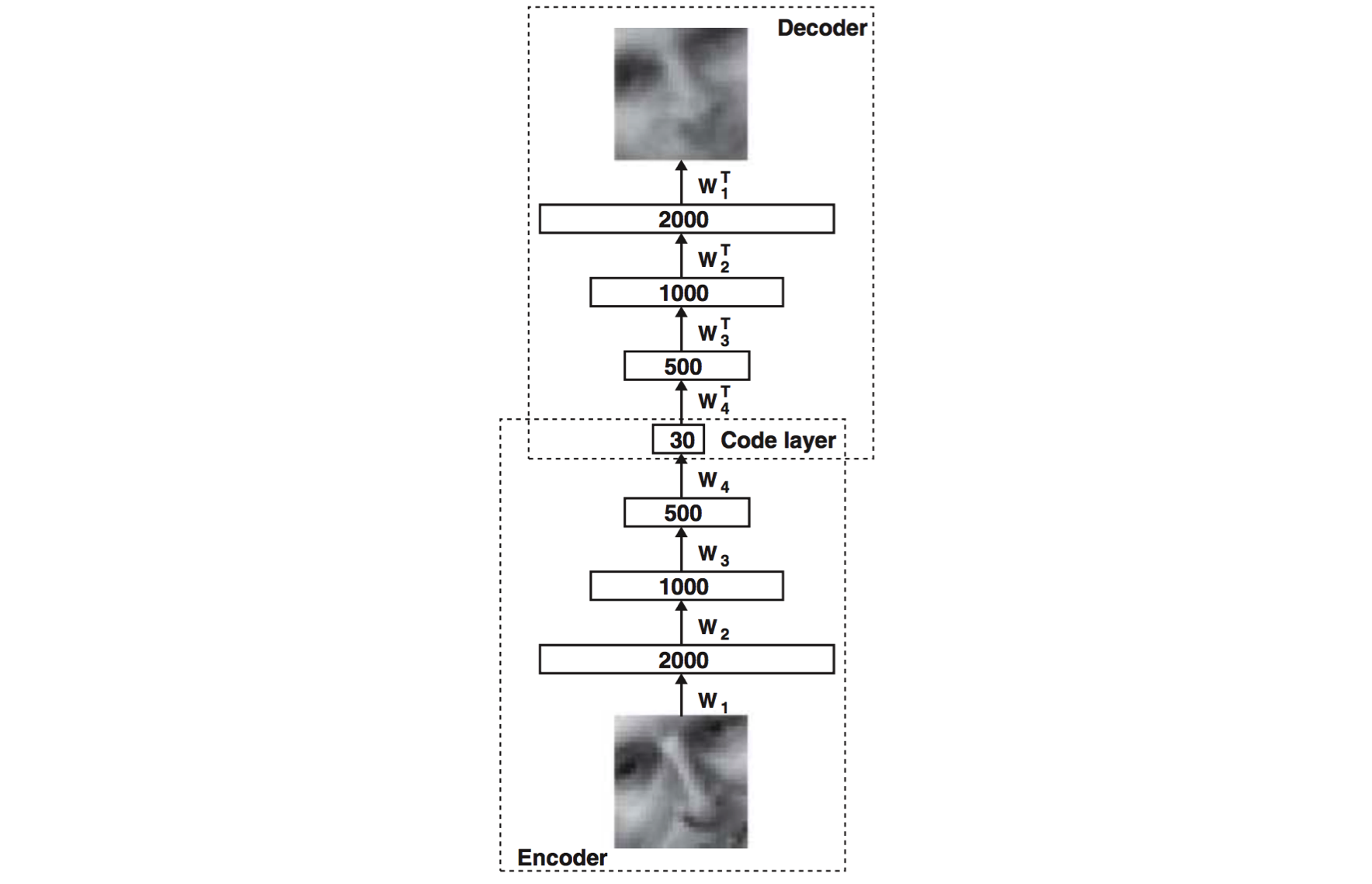

An autoencoder consists of two major parts, the encoder and the decoder networks. The encoder network is used during both training and deployment, while the decoder network is only used during training. The purpose of the encoder network is to discover a compressed representation of the given input. In this example, we are generating a 30-dimensional representation from a 2000-dimensional input. The purpose of the decoder network, which is just a mirror image of the encoder network, is to reconstruct the original input as closely as possible from that compressed representation. It is used during training in order to force the autoencoder to choose the most informative features in its compressed representation. The closer the reconstructed input is to the original, the better our compressed representation!
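To make the encoder/decoder split concrete, here is a minimal NumPy sketch. It is not the architecture from the figure: it assumes a single layer on each side (real autoencoders stack several), a tanh encoder, a linear decoder, random synthetic data, and plain gradient descent on the mean squared reconstruction error. Only the 2000 and 30 come from the example above; every name, the batch size, and the learning rate are illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(0)

N_INPUT, N_CODE = 2000, 30        # sizes taken from the example in the text
X = rng.random((64, N_INPUT))     # synthetic stand-in data (assumption)

# One layer per side for brevity; the decoder mirrors the encoder's shape.
params = {
    "W_enc": rng.normal(0.0, 0.01, (N_INPUT, N_CODE)),
    "b_enc": np.zeros(N_CODE),
    "W_dec": rng.normal(0.0, 0.01, (N_CODE, N_INPUT)),
    "b_dec": np.zeros(N_INPUT),
}

def encode(x, p):
    """Compress each 2000-dimensional row into a 30-dimensional code."""
    return np.tanh(x @ p["W_enc"] + p["b_enc"])

def decode(code, p):
    """Reconstruct the input from its code (needed only while training)."""
    return code @ p["W_dec"] + p["b_dec"]

def reconstruction_error(x, p):
    """Mean squared difference between the input and its reconstruction."""
    return float(np.mean((decode(encode(x, p), p) - x) ** 2))

def train_step(x, p, lr=0.5):
    """One step of gradient descent on the reconstruction error."""
    code = encode(x, p)
    x_hat = decode(code, p)
    g_out = 2.0 * (x_hat - x) / x.size                  # d(error) / d(x_hat)
    g_pre = (g_out @ p["W_dec"].T) * (1.0 - code ** 2)  # back through tanh
    grads = {
        "W_dec": code.T @ g_out,
        "b_dec": g_out.sum(axis=0),
        "W_enc": x.T @ g_pre,
        "b_enc": g_pre.sum(axis=0),
    }
    for name in p:
        p[name] -= lr * grads[name]

error_before = reconstruction_error(X, params)
for _ in range(20):
    train_step(X, params)
error_after = reconstruction_error(X, params)

codes = encode(X, params)   # at deployment, only the encoder is used
print(codes.shape)          # (64, 30)
print(error_before, error_after)
```

On random data like this there is little structure to compress, so the error only falls slightly; the point is the division of labor, with `decode` existing purely to give `encode` a training signal.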

So how does the autoencoder compare to its linear competitors? Let's take a look at a number of experiments performed by Hinton and Salakhutdinov when the technique debuted in 2006. First, we take a look at how well autoencoders can reconstruct their original input compared to PCA using 30 dimensions.

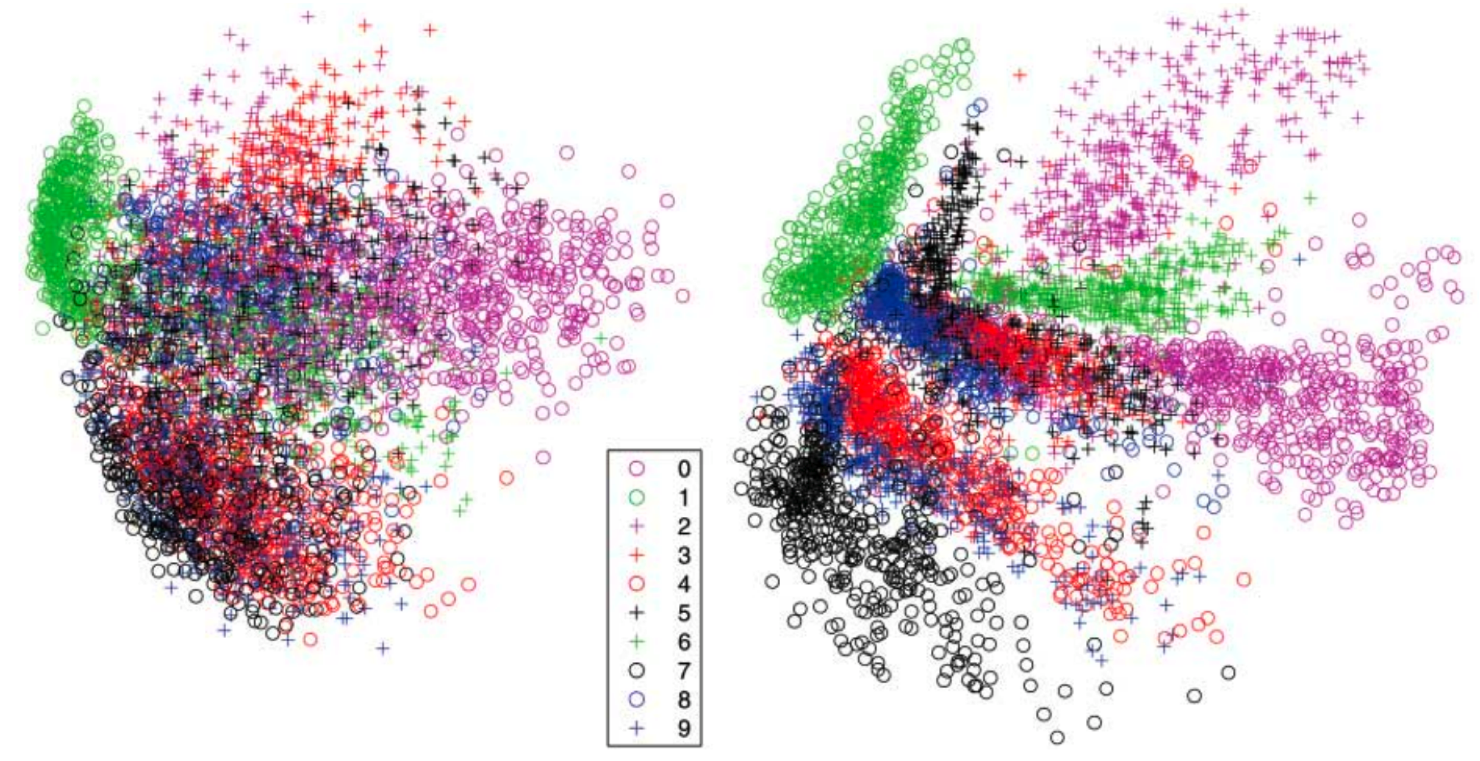

The reconstruction by the autoencoder is visibly better than the PCA output, which is a very promising result. We then explore whether autoencoders can significantly improve the separability of our dataset by comparing 2-dimensional codes produced by an autoencoder to a 2-dimensional representation generated by PCA on the MNIST handwritten digit dataset.

A comparison of separability of 2-dimensional codes generated by an autoencoder (right) and PCA (left) on the MNIST dataset

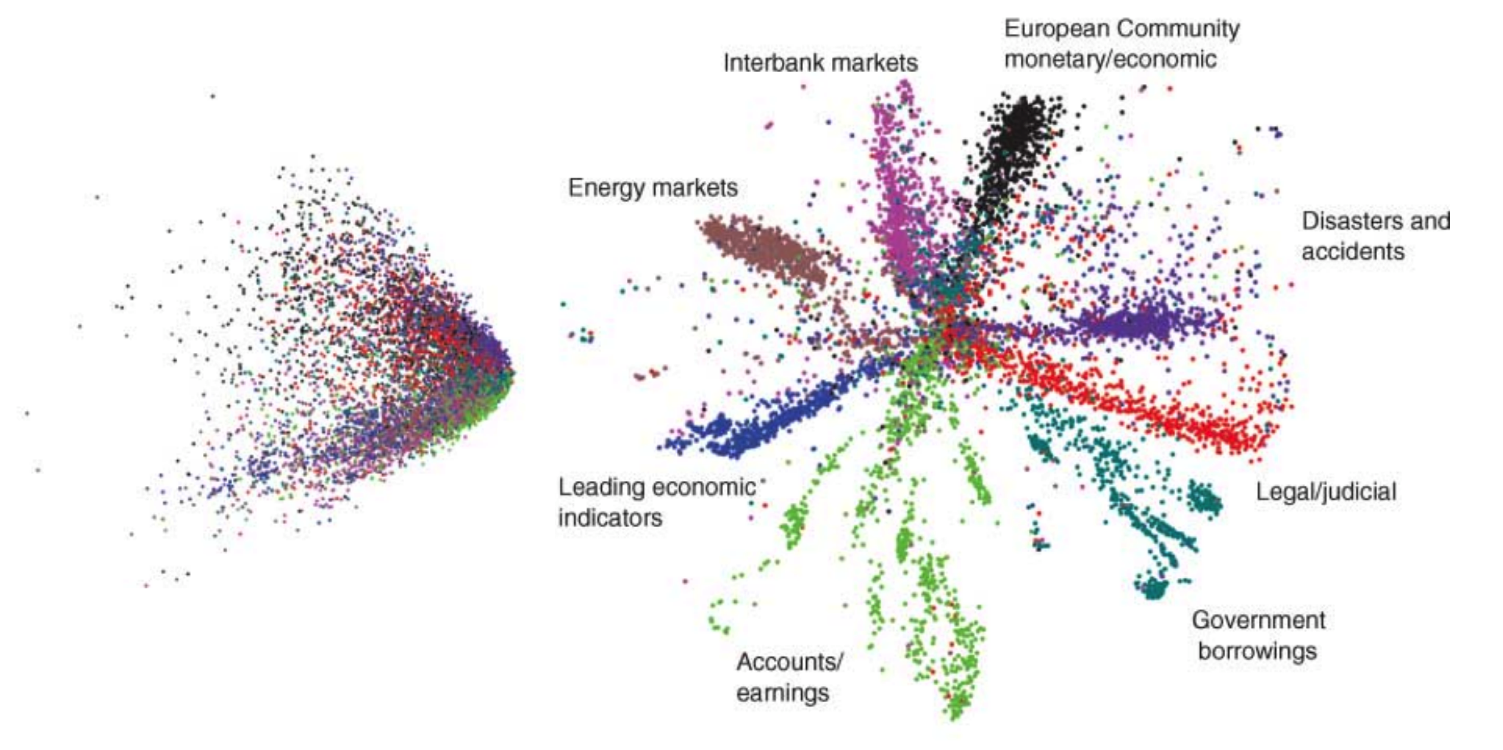
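For reference, the PCA side of the two comparisons above can be sketched in a few lines of NumPy. This is only an illustration of the baseline, not the code behind the figures: the data is random, the 784 columns are chosen to match the size of a 28x28 MNIST image, and the function names are made up for this example.

```python
import numpy as np

def pca_fit(X, n_components):
    """Return the data mean and the top principal directions (as rows)."""
    mean = X.mean(axis=0)
    # Rows of Vt are principal directions, ordered by decreasing variance.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:n_components]

def pca_encode(X, mean, components):
    """Project the data onto the principal directions (a linear 'encoder')."""
    return (X - mean) @ components.T

def pca_decode(codes, mean, components):
    """Map codes back to input space (a linear 'decoder')."""
    return codes @ components + mean

rng = np.random.default_rng(0)
X = rng.random((200, 784))   # synthetic stand-in for 28x28 images (assumption)

# 30-dimensional codes, as in the reconstruction comparison.
mean, components_30 = pca_fit(X, 30)
recon_30 = pca_decode(pca_encode(X, mean, components_30), mean, components_30)
error_30 = float(np.mean((recon_30 - X) ** 2))

# 2-dimensional codes, as in the separability comparison.
mean, components_2 = pca_fit(X, 2)
codes_2d = pca_encode(X, mean, components_2)
recon_2 = pca_decode(codes_2d, mean, components_2)
error_2 = float(np.mean((recon_2 - X) ** 2))

print(codes_2d.shape)        # (200, 2)
print(error_30, error_2)
```

Both the encoding and decoding steps here are linear maps, which is the restriction the autoencoder removes by using nonlinear layers.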

Finally, we see even more drastic improvements when we compare the autoencoder to latent semantic analysis (LSA), the gold-standard unsupervised learning technique for natural language.

A comparison of separability of 2-dimensional codes generated by an autoencoder (right) and LSA (left) on news stories

Conclusion

Autoencoders are an extremely exciting new approach to unsupervised learning, and for many machine learning tasks, they have already surpassed the decades of progress made by researchers handpicking features! We've covered a lot of ground, but we've still only seen the tip of the iceberg. In the next blog post, I'll go into much more depth on how autoencoders work, how we efficiently train them, and other clever optimizations (such as sparsity). If you're interested and would like to talk, please feel free to drop me a line at nkbuduma@gmail.com. I'm always excited to hear new perspectives.

Bio: Nikhil Buduma is a computer science student at MIT with deep interests in machine learning and the biomedical sciences. He is a two-time gold medalist at the International Biology Olympiad, a student researcher, and a “hacker.” He was selected as a finalist in the 2012 International BioGENEius Challenge for his research on the pertussis vaccine, and in 2014 received the Young Innovator Award from the Gordon and Betty Moore Foundation for using augmented reality to re-envision the traditional chemistry set.
