Apache Spark Key Terms, Explained

An overview of 13 core Apache Spark concepts, presented with focus and clarity in mind. A great beginner's overview of essential Spark terminology.

7. GraphX

GraphX is the component in Apache Spark for graphs and graph-parallel computation. At a high level, GraphX extends the Spark RDD via a Graph abstraction: a directed multigraph with properties attached to each vertex and edge. To support graph computation, GraphX exposes a set of fundamental operators (e.g., subgraph, joinVertices, and aggregateMessages) as well as an optimized variant of the Pregel API. In addition, GraphX includes a growing collection of graph algorithms and builders to simplify graph analytics tasks.
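To make the Graph abstraction a little more concrete, here is a minimal sketch in plain Python rather than in GraphX itself. It models a directed multigraph with vertex and edge properties and a message-aggregation step loosely modeled on the idea behind `aggregateMessages`. The `Edge` class, the `aggregate_messages` helper, and the sample data are all hypothetical names chosen for illustration, not the GraphX API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Edge:
    src: int
    dst: int
    attr: str  # property attached to the edge


# Vertex properties keyed by vertex id (hypothetical sample data).
vertices = {1: "alice", 2: "bob", 3: "carol"}

# A multigraph may hold parallel edges: 1 -> 2 appears twice,
# each time with a different edge property.
edges = [
    Edge(1, 2, "follows"),
    Edge(1, 2, "messages"),
    Edge(2, 3, "follows"),
    Edge(3, 1, "follows"),
]


def aggregate_messages(edges, send, merge):
    """For each edge, `send` returns (vertex_id, message) pairs; messages
    addressed to the same vertex are combined pairwise with `merge`."""
    inbox = {}
    for edge in edges:
        for vertex_id, message in send(edge):
            if vertex_id in inbox:
                inbox[vertex_id] = merge(inbox[vertex_id], message)
            else:
                inbox[vertex_id] = message
    return inbox


# In-degree: every edge sends the message 1 to its destination vertex.
in_degree = aggregate_messages(edges, lambda e: [(e.dst, 1)], lambda a, b: a + b)

for vertex_id, name in vertices.items():
    print(name, in_degree.get(vertex_id, 0))
```

The same send-and-merge shape can express other per-vertex aggregates (for example, collecting neighbor names) by swapping the two functions.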

8. Spark Streaming

Spark Streaming is an extension of the core Spark API that lets data engineers and data scientists process real-time data from sources including (but not limited to) Kafka, Flume, and Amazon Kinesis. The processed data can be pushed out to filesystems, databases, and live dashboards. Its key abstraction is a Discretized Stream, or DStream for short, which represents a stream of data divided into small batches. DStreams are built on RDDs, Spark’s core data abstraction, which allows Spark Streaming to integrate seamlessly with other Spark components such as MLlib and Spark SQL.

This unification of disparate data processing capabilities is the key reason behind Spark Streaming’s rapid adoption. It makes it very easy for developers to use a single framework to satisfy all their processing needs.
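The idea of a stream divided into small batches can be sketched in a few lines of plain Python. This is a conceptual illustration only, not the Spark Streaming API: the `discretize` helper, the one-second batch interval, and the sample events are assumptions made for the example, and each inner list merely stands in for one RDD of a DStream.

```python
from collections import Counter


def discretize(events, batch_interval):
    """Group (timestamp, value) events into consecutive batches, each covering
    `batch_interval` seconds. Intervals with no events are skipped here."""
    batches = {}
    for timestamp, value in events:
        batch_index = int(timestamp // batch_interval)
        batches.setdefault(batch_index, []).append(value)
    return [batches[index] for index in sorted(batches)]


# Hypothetical events: (arrival time in seconds, payload).
events = [
    (0.2, "spark"),
    (0.7, "kafka"),
    (1.1, "spark"),
    (1.9, "spark"),
    (2.4, "flume"),
]

# The same batch-style computation (a word count) is applied to every micro-batch.
word_counts_per_batch = [
    Counter(batch) for batch in discretize(events, batch_interval=1.0)
]

for index, counts in enumerate(word_counts_per_batch):
    print(index, dict(counts))
```

Because every micro-batch is an ordinary collection, any logic written for batch data can be reused on it unchanged, which is the property that lets DStreams share code with the rest of Spark.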

9. Structured Streaming

Introduced as part of Apache Spark 2.0, Structured Streaming is a high-level streaming API built on top of the Spark SQL engine. It is a declarative API that extends DataFrames and Datasets to support batch, interactive, and streaming queries. The advantage of this approach is that programmers can take their experience with static data sets (i.e., batch) and apply it directly to infinite data sets (i.e., streaming).
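One way to picture this is to write a query once and run it both over a static table and over rows that arrive in increments. The sketch below uses plain Python, not the Structured Streaming API; the `count_by_device` query, the row layout, and the two arrival chunks are hypothetical. It assumes an aggregation (a count) whose partial results can simply be added together.

```python
from collections import Counter


def count_by_device(rows):
    """The 'query': count rows per device. Works on any iterable of rows."""
    return Counter(row["device"] for row in rows)


static_table = [
    {"device": "a", "reading": 1},
    {"device": "b", "reading": 5},
    {"device": "a", "reading": 3},
]

# Batch: run the query once over all the data.
batch_result = count_by_device(static_table)

# Streaming: the same rows arrive in two chunks. Each chunk is treated as new
# rows appended to an unbounded table, and the result table is updated
# incrementally instead of being recomputed from scratch.
result_table = Counter()
snapshots = []
for new_rows in (static_table[:1], static_table[1:]):
    result_table.update(count_by_device(new_rows))
    snapshots.append(dict(result_table))

print(snapshots)
print(result_table == batch_result)
```

Once all rows have arrived, the incrementally maintained result matches the batch result, which is the sense in which batch experience carries over to streaming.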

10. Spark Packages

http://spark-packages.org is a community package index that tracks the growing number of open source packages and libraries that work with Apache Spark. Spark Packages makes it easy for users to find, discuss, rate, and install packages for any version of Spark, and for developers to contribute packages.

Spark Packages features integrations with various data sources, management tools, higher-level domain-specific libraries, machine learning algorithms, code samples, and other Spark content. Example packages include Spark-CSV (now included in Spark 2.0) and Spark ML integration packages such as GraphFrames and TensorFrames.

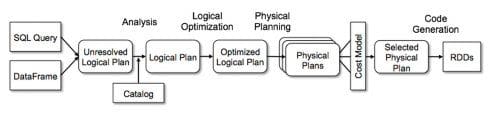

11. Catalyst Optimizer

Spark SQL is one of the most technically involved components of Apache Spark. It powers both SQL queries and the DataFrame API. At the core of Spark SQL is the Catalyst optimizer, which leverages advanced programming language features (e.g., Scala’s pattern matching and quasiquotes) in a novel way to build an extensible query optimizer.

Catalyst is based on functional programming constructs in Scala and was designed with two key purposes:

- Easily add new optimization techniques and features to Spark SQL

- Enable external developers to extend the optimizer (e.g. adding data source specific rules, support for new data types, etc.)

Catalyst also supports both rule-based and cost-based optimization.
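To give a feel for rule-based optimization, the sketch below applies a single constant-folding rule to a small expression tree. It is written in plain Python rather than Scala and is not Catalyst code: the `Literal`, `Attribute`, and `Add` node classes, the `fold_constants` rule, and the `transform_up` helper are simplified, hypothetical stand-ins, and `isinstance` checks play the role that pattern matching plays in Scala.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Literal:
    value: int


@dataclass(frozen=True)
class Attribute:
    name: str  # a column reference whose value is unknown at planning time


@dataclass(frozen=True)
class Add:
    left: object
    right: object


def fold_constants(node):
    """Rule: Add(Literal(a), Literal(b)) -> Literal(a + b). Other nodes pass through."""
    if (
        isinstance(node, Add)
        and isinstance(node.left, Literal)
        and isinstance(node.right, Literal)
    ):
        return Literal(node.left.value + node.right.value)
    return node


def transform_up(node, rule):
    """Apply `rule` to every node, children first, and return a new tree."""
    if isinstance(node, Add):
        node = Add(transform_up(node.left, rule), transform_up(node.right, rule))
    return rule(node)


# The expression x + (1 + 2)
expression = Add(Attribute("x"), Add(Literal(1), Literal(2)))
optimized = transform_up(expression, fold_constants)
print(optimized)
```

Each rule is a small function from tree to tree, so adding a new optimization in this style means writing another rule and passing it to the same traversal; this is the kind of extensibility the two design purposes above describe.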

For more information, please refer to Deep Dive into Spark SQL’s Catalyst Optimizer and the webinar Apache Spark DataFrames: Simple and Fast Analysis of Structured Data.

12. Tungsten

Tungsten is the codename for the umbrella project to change Apache Spark’s execution engine, with a focus on substantially improving memory and CPU efficiency for Spark applications and pushing performance closer to the limits of modern hardware. This effort includes the following initiatives:

- Memory Management and Binary Processing: leveraging application semantics to manage memory explicitly and eliminate the overhead of the JVM object model and garbage collection

- Cache-aware computation: algorithms and data structures to exploit memory hierarchy

- Code generation: using code generation to exploit modern compilers and CPUs

- No virtual function dispatches: eliminating virtual function calls removes per-call overhead, which can have a profound impact on performance when a call is dispatched billions of times.

- Intermediate data in memory vs CPU registers: Tungsten Phase 2 places intermediate data into CPU registers. Fetching data from CPU registers takes orders of magnitude fewer cycles than fetching it from memory.

- Loop unrolling and SIMD: Optimize Apache Spark’s execution engine to take advantage of modern compilers and CPUs’ ability to efficiently compile and execute simple for loops (as opposed to complex function call graphs).
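The code generation and virtual-dispatch points in the list above can be illustrated with a toy comparison. The sketch below is plain Python, not Spark internals: it evaluates the same filter, project, and sum query first through a chain of operator objects (one iterator call per operator per row) and then through a single flat loop whose source is generated and compiled at runtime. All class and function names are hypothetical, and Python cannot show the register or SIMD benefits, so this demonstrates only the structural difference.

```python
class Scan:
    """Leaf operator: yields the input rows."""

    def __init__(self, rows):
        self.rows = rows

    def __iter__(self):
        return iter(self.rows)


class Filter:
    """Passes through only the rows accepted by `predicate`."""

    def __init__(self, child, predicate):
        self.child = child
        self.predicate = predicate

    def __iter__(self):
        for row in self.child:
            if self.predicate(row):
                yield row


class Project:
    """Applies `function` to every row of its child."""

    def __init__(self, child, function):
        self.child = child
        self.function = function

    def __iter__(self):
        for row in self.child:
            yield self.function(row)


def operator_chain_sum(rows):
    """Operator-at-a-time plan: each row crosses several iterator boundaries."""
    plan = Project(Filter(Scan(rows), lambda x: x % 2 == 0), lambda x: x * 10)
    return sum(plan)


def generate_fused_sum(predicate_src, projection_src):
    """Generate and compile one flat loop that filters, projects, and sums.
    Both arguments are Python expressions over the row variable `x`."""
    source = (
        "def fused_sum(rows):\n"
        "    total = 0\n"
        "    for x in rows:\n"
        f"        if {predicate_src}:\n"
        f"            total += {projection_src}\n"
        "    return total\n"
    )
    namespace = {}
    exec(source, namespace)  # compile the generated source into a function
    return namespace["fused_sum"]


fused_sum = generate_fused_sum("x % 2 == 0", "x * 10")

rows = list(range(10))
print(operator_chain_sum(rows), fused_sum(rows))
```

The generated function keeps its running total in a local variable and contains no calls between operators, which is the shape of code that a compiler can keep in registers and unroll.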

For more information, please refer to Project Tungsten: Bringing Apache Spark Closer to Bare Metal, Deep Dive into Spark SQL’s Catalyst Optimizer, and Apache Spark as a Compiler: Joining a Billion Rows per Second on a Laptop.

13. Continuous Applications

In Apache Spark 2.0, adding structure to Spark through the high-level DataFrame and Dataset APIs enables a novel way to look at real-time streaming: treat a stream not as a stream but as either a static table of data (where you know all the data) or a continuous table of data (where new data is continuously arriving).

As such, you can build end-to-end continuous applications in which you issue the same queries against batch and real-time data, perform ETL, generate reports, and update or track specific data in the stream. This combination of batch and real-time query capabilities over a structured stream is a distinctive offering; not many streaming engines offer it yet.
