Automated Data Science & Machine Learning: An Interview with the Auto-sklearn Team

Automated Data Science & Machine Learning: An Interview with the Auto-sklearn Team

This is an interview with the authors of the recent winning KDnuggets Automated Data Science and Machine Learning blog contest entry, which provided an overview of the Auto-sklearn project. Learn more about the authors, the project, and automated data science.

KDnuggets recently ran an Automated Data Science and Machine Learning blog contest, which garnered numerous entries and lots of appreciation for the winning posts and a pair of honorable mentions.

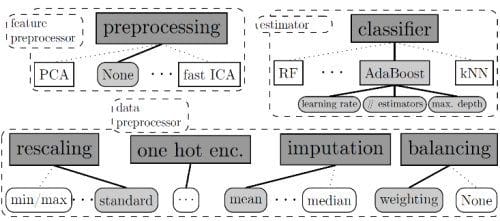

The winning post, titled Contest Winner: Winning the AutoML Challenge with Auto-sklearn, written by Matthias Feurer, Aaron Klein, and Frank Hutten, all of the University of Freiburg, provides an overview of Auto-sklearn, an open-source Python tool that automatically determines effective machine learning pipelines for classification and regression datasets. The project is built around the successful scikit-learn library and won the recent AutoML challenge.

Given the popularity of the post, we asked the authors if they would be interested in answering a few followup questions on themselves, their project, and automated data science in general. What follows is the result of this conversation.

Matthew Mayo: First off, congratulations on winning the KDnuggets Automated Data Science and Machine Learning Blog Contest, with your entry outlining your project Auto-sklearn. What if we start by having you introduce the members of the team and provide a little information on each of your backgrounds?

Matthias Feurer: I am a 2nd year PhD student in Frank’s group, working on hyperparameter optimization and automated machine learning. Mostly, I’m interested in optimizing pre-defined machine learning pipelines. I started working for Frank during my Master’s studies, being annoyed by hyperparameter tuning in most of my study projects up to that point.

Aaron Klein: I am also a 2nd year PhD student working on automated deep learning. Like Matthias, I was a master student at the University of Freiburg before I joined Frank’s group.

Frank Hutter: I’m an Assistant Professor in Computer Science at the University of Freiburg, with main interests in artificial intelligence, machine learning, and automated algorithm design. Before moving to Freiburg, I spent nine years at the University of British Columbia in Vancouver, Canada.

All: Besides the three of us (who wrote the blog post for the KDnuggets Blog Contest), the team for our winning submission also consisted of a few more PhD students and postdocs from the University of Freiburg: Katharina Eggensperger, Jost Tobias Springenberg, Hector Mendoza, Manuel Blum, Stefan Falkner, and Marius Lindauer.

The post was very informative and described Auto-sklearn quite well. Is there anything additional of note you would like our readers to know about Auto-sklearn, or any developments that have occurred since this post? Is there anything you can share about future development plans?

One short-term goal is regression, where we can do a lot more. In the long term we would like Auto-sklearn to become a flexible extension of scikit-learn, which helps users optimize their machine learning pipelines. We also want to do lots more work along the lines of Auto-Net, and want to speed up the optimization process dramatically by more reasoning across datasets, across subsets of data, and over time (for anytime algorithms).

To what extent do you think machine learning and data science can be automated, and what degree of human interaction will be required for so-called fully automated systems?

While there are several approaches for tuning the hyperparameters of machine learning pipelines, so far there is only little work on discovering new pipeline building blocks. Auto-sklearn uses a predefined set of preprocessors and classifiers in a fixed order. An efficient way to also come up with new pipelines would be helpful. One can of course continue this line of thinking and try to automate the discovery of new algorithms as done in several recent papers, such as Learning to learn by gradient descent by gradient descent. Humans can also still tune hyperparameters better than automated methods when machine learning models are very expensive to train, such as state-of-the-art deep neural networks for large datasets. We’re working on ways to transform human expert heuristics into fully formalized algorithms; e.g., our Fabolas approach optimizes the hyperparameters of a neural network on small subsets of the data to speed up learning about the best hyperparameters for the full data set.

Considering the previous question, will data scientists be unemployed anytime soon? Or, if too drastic an idea, will the current hype surrounding data scientists be tempered by automation in the near future, and if so, to what degree?

Certainly not. All the methods of automated machine learning are developed to support data scientists, not to replace them. Such methods can free the data scientist from nasty, complicated tasks (like hyperparameter optimization) that can be solved better by machines. But analysing and drawing conclusions still has to be done by human experts -- and in particular data scientists who know the application domain will remain extremely important. We do believe, though, that automation will make individual data scientists a lot more productive, so this might indeed affect the number of data scientists needed to do the job.

What, if anything, can data scientists do to avoid being rendered obsolete? The question, of course, being directed toward adding value as opposed to being mischievous.

It will always take data scientists to analyze and interpret the results of a statistical analysis -- so for young graduates starting data science jobs such skills might be more future-proof than some others (e.g., manual hyperparameter tuning to get the most out of your neural network).

As you have been active in machine learning competitions in the past, do you have any interesting tips, tricks, or insights to share?

Automation and careful resampling strategies. Automation allows to run a lot of experiments, while resampling strategies such as careful cross-validation are needed to prevent against overfitting. It's also often important to go in with an open mind and just let the data speak about which method works best on which dataset.

Finally, where do you think machine learning technology will be in 5 years?

It is hard to predict what will happen in the future, especially in a field that’s changing as quickly as machine learning. E.g., five years ago not many foresaw the rise of deep learning. But we’re fairly confident that machine learning will be used ever more and will be embedded in commercial tools everyone uses.

Thank you for your time. I'm sure that your time is at a premium, and we appreciate you taking a few moments for our readers.

Related:

Automated Data Science & Machine Learning: An Interview with the Auto-sklearn Team

Automated Data Science & Machine Learning: An Interview with the Auto-sklearn Team