4 Cognitive Bias Key Points Data Scientists Need to Know

Cognitive biases are inherently problematic in a variety of fields, including data science. Can they be mitigated? A solid understanding of cognitive biases is the best weapon, and this overview aims to help provide it.

Cognitive biases are hugely important when dealing with data.

Wikipedia offers the following definition of cognitive bias:

Cognitive biases are tendencies to think in certain ways that can lead to systematic deviations from a standard of rationality or good judgment, and are often studied in psychology and behavioral economics.

A few specific examples of how cognitive biases can (and do) interfere in the real world include:

- Voters and politicians who don't understand science, but think they do, doubt climate change because it still snows in the winter (Dunning–Kruger effect)

- Confirmation bias prevented pollsters from believing any data showing that Donald Trump could win the 2016 US presidential election

These examples are drawn from politics, but cognitive biases are problematic and intensely studied in fields ranging from economics to business to artificial intelligence. Given the nature of the work, they are a concern in data science as well.

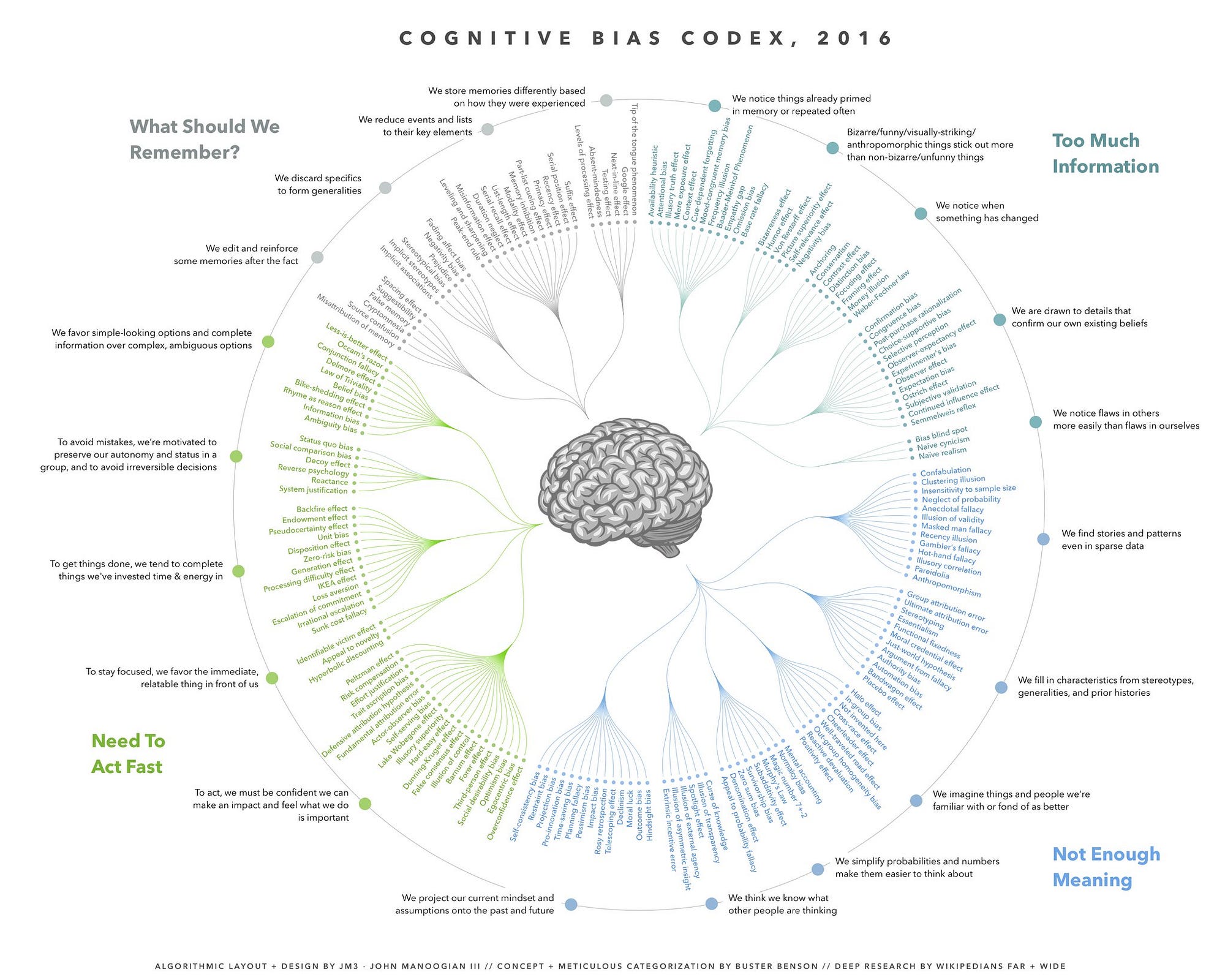

Wikipedia has a fairly extensive article listing cognitive biases. Lots of cognitive biases. Like, more than 170 of them.

But because the Wikipedia article simply lists this massive collection of biases, without categorization or other meaningful presentation, Buster Benson, Senior Product Manager at Slack Technologies, Inc. and cognitive bias enthusiast, spent some of his time grouping, analyzing, and summarizing them. He states that his goal was to make it easier to get something useful out of this collection, rather than simply memorizing it by rote. He then kindly shared the results, including a much more manageable write-up, which he fittingly called the Cognitive bias cheat sheet.

The most impressive part of his work is that it links the biases to 4 problems that they help humans solve. After all, cognitive biases do not exist in a vacuum; they arose over the course of evolution to serve a specific, if flawed, purpose or set of purposes.

Accordingly, Benson offers 4 key takeaways for readers, which he describes as the "four problems that biases help us address."

1. Information overload sucks, so we aggressively filter. Noise becomes signal.

In order to avoid drowning in information overload, our brains need to skim and filter insane amounts of information and quickly, almost effortlessly, decide which few things in that firehose are actually important and call those out.

2. Lack of meaning is confusing, so we fill in the gaps. Signal becomes a story.

In order to construct meaning out of the bits and pieces of information that come to our attention, we need to fill in the gaps, and map it all to our existing mental models.

3. Need to act fast lest we lose our chance, so we jump to conclusions. Stories become decisions.

In order to act fast, our brains need to make split-second decisions that could impact our chances for survival, security, or success, and feel confident that we can make things happen.

4. This isn’t getting easier, so we try to remember the important bits. Decisions inform our mental models of the world.

And in order to keep doing all of this as efficiently as possible, our brains need to remember the most important and useful bits of new information and inform the other systems so they can adapt and improve over time, but no more than that.

Benson then lays out what he calls four truths, which identify the ways in which our solutions to the four problems above are problematic in their own right.

- We don’t see everything. Some of the information we filter out is actually useful and important.

- Our search for meaning can conjure illusions. We sometimes imagine details that were filled in by our assumptions, and construct meaning and stories that aren’t really there.

- Quick decisions can be seriously flawed. Some of the quick reactions and decisions we jump to are unfair, self-serving, and counter-productive.

- Our memory reinforces errors. Some of the stuff we remember for later just makes all of the above systems more biased, and more damaging to our thought processes.

Now, I'm certain I don't need to draw a line between what Benson writes above, in both his four problems and his four truths, and its applicability to data science. In a sense, "data science" could be seen as an effort to battle such biases; unfortunately, because data scientists are generally human (at this point), bias still has the opportunity to creep back into the actual processes of data science. This is something that can never be "solved," but it can be mitigated to some degree with adequate understanding.

As a bit of a bonus, John Manoogian III created a graphic, the Cognitive Bias Codex, in response to Benson's article, and Benson later added it to the article after the fact.
