Artificial Intelligence and Speech Recognition for Chatbots: A Primer

Bot bots bots... Read this overview of how artificial intelligence and natural language processing are contributing to chatbot development, and where it all goes from here.

Conversational User Interfaces (CUIs) are at the heart of the current wave of AI development. Although many applications and products out there are simply “Mechanical Turks” (machines that pretend to be automated while a hidden person actually does all the work), there have been many interesting advances in speech recognition from both the symbolic and the statistical learning approaches.

In particular, deep learning is drastically expanding the abilities of bots compared with traditional NLP (e.g., bag-of-words clustering, TF-IDF) and is giving rise to the concept of “conversation-as-a-platform”, which is disrupting the apps market.
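For readers unfamiliar with the “traditional NLP” baseline, here is a minimal pure-Python sketch of bag-of-words TF-IDF weighting. It assumes whitespace tokenization, raw term counts as TF, and one common smoothed IDF variant, ln((1 + N) / (1 + df)) + 1. The example utterances are invented for illustration.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase whitespace tokenization (real systems use proper tokenizers)."""
    return text.lower().split()


def tf_idf(docs: list[str]) -> list[dict[str, float]]:
    """Return one {term: weight} dict per document.

    tf  = raw count of the term in the document
    idf = ln((1 + N) / (1 + df)) + 1, a smoothed inverse document frequency
    """
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    # Document frequency: number of documents containing each term at least once.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    idf = {term: math.log((1 + n_docs) / (1 + count)) + 1 for term, count in df.items()}
    return [
        {term: count * idf[term] for term, count in Counter(tokens).items()}
        for tokens in tokenized
    ]


utterances = [
    "book a table for two",
    "book a flight to london",
    "what is the weather in london",
]
for weights in tf_idf(utterances):
    # Show the three highest-weighted terms of each utterance.
    print(sorted(weights.items(), key=lambda kv: -kv[1])[:3])
```

Because word order is discarded, “book a flight to london” and “london to book a flight” receive identical vectors. This is one of the limitations that deep sequence models address.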

The smartphone screen is currently the most expensive real estate you can buy per square centimeter (even more expensive than the price per square meter of houses in Beverly Hills), and it is not hard to envision that a bot serving as the single interface will make this area almost worthless.

None of this would be possible, though, without heavy investment in speech recognition research. Deep Reinforcement Learning (DRL) has been the boss in town for the past few years, and it has been fed by human feedback. However, I personally believe that we will soon move toward B2B (bot-to-bot) training for a very simple reason: the reward structure. Humans spend time training their bots only if they are adequately compensated for their effort.
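The reward-structure argument can be made concrete with a deliberately simplified sketch. Instead of deep RL, a bot chooses among a few canned responses with an epsilon-greedy multi-armed bandit and updates its value estimates from a scalar reward. The `feedback` function is a hypothetical stand-in for whoever supplies the reward: a paid human rater or, in the bot-to-bot scenario, another bot. Swapping one trainer for the other leaves the learning loop unchanged.

```python
import random


class EpsilonGreedyResponder:
    """Chooses among candidate responses and learns their average reward."""

    def __init__(self, responses: list[str], epsilon: float = 0.1, seed: int = 0):
        self.responses = responses
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = [0] * len(responses)
        self.values = [0.0] * len(responses)  # running mean reward per response

    def choose(self) -> int:
        # Explore with probability epsilon, otherwise exploit the best estimate.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.responses))
        return max(range(len(self.responses)), key=lambda i: self.values[i])

    def update(self, index: int, reward: float) -> None:
        # Incremental mean: v <- v + (r - v) / n
        self.counts[index] += 1
        self.values[index] += (reward - self.values[index]) / self.counts[index]


def feedback(response: str) -> float:
    """Hypothetical trainer (human or bot): rewards only the on-topic reply."""
    return 1.0 if response == "Sure, which city are you flying to?" else 0.0


bot = EpsilonGreedyResponder(
    ["I like turtles.", "Sure, which city are you flying to?", "Error 42."]
)
for _ in range(500):
    i = bot.choose()
    bot.update(i, feedback(bot.responses[i]))

best = max(range(len(bot.responses)), key=lambda i: bot.values[i])
print(bot.responses[best])
```

The only thing the loop needs from the trainer is a number per interaction. A bot can produce that number far more cheaply and tirelessly than a human, which is the economic point behind bot-to-bot training.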

This is not a new concept, and Li Deng (Microsoft) and his group are well aware of it. He provides a great threefold classification of AI bots:

- Bots that look around for information;

- Bots that look around for information to complete a specific task;

- Bots with social abilities and tasks (which he calls social bots or chatbots).

For the first two, the reward structure is fairly easy to define, while for the third it is more complex, which makes this class harder to tackle today.
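To see why the first two classes are easier, consider a reward for a task-completion bot. Success is observable (was the table booked? was the answer found?), so the reward can combine a large bonus for success, a penalty for failure, and a small per-turn cost. The shape below follows that idea, but the specific values (twice `max_turns` for success, `-max_turns` for failure, -1 per turn) are illustrative assumptions. Nothing comparably crisp exists for judging whether a social conversation “went well”.

```python
from dataclasses import dataclass


@dataclass
class Dialogue:
    turns: int        # number of exchanges before the dialogue ended
    task_done: bool   # e.g. the restaurant was booked or the answer was found


def task_reward(d: Dialogue, max_turns: int = 20) -> float:
    """Illustrative reward for a task-completion bot (values are assumptions).

    +2 * max_turns on success, -max_turns on failure, and -1 per turn so that
    shorter successful dialogues score higher than longer ones.
    """
    outcome = 2 * max_turns if d.task_done else -max_turns
    return outcome - d.turns


print(task_reward(Dialogue(turns=5, task_done=True)))   # quick success
print(task_reward(Dialogue(turns=8, task_done=False)))  # failure
```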

Once this third class is fully implemented, though, we will find ourselves living in a world where machines communicate among themselves and with humans in the same way. In this world, the bot-to-bot business model will be commonplace, and the ecosystem will be populated by two types of bots: master bots and follower bots.

I believe that research in speech recognition will compound, and so will the technology stacks in this specific space. As a result, some players will create “universal” bots (master bots) that everyone else will use as gateways for their (peripheral) interfaces and applications. The upside of this centralized (and almost monopolistic) scenario, however, is that despite the two levels of complexity, we won’t face the black-box issue affecting the deep learning movement today, because bots (whether master or follower) will communicate with each other in plain English rather than in a programming language.
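The master/follower idea can be sketched as a router that forwards plain-English requests to registered follower bots and receives plain-English answers back, so every hop stays human-readable. `MasterBot`, the keyword-based routing, and the two stub followers are hypothetical illustrations, not an existing framework. A real master bot would presumably use a learned intent classifier rather than keyword matching.

```python
from typing import Callable

FollowerBot = Callable[[str], str]  # takes and returns plain English


class MasterBot:
    """Routes plain-English requests to follower bots and logs every exchange."""

    def __init__(self) -> None:
        self.followers: dict[str, FollowerBot] = {}
        self.transcript: list[str] = []  # human-readable audit trail

    def register(self, keyword: str, bot: FollowerBot) -> None:
        self.followers[keyword] = bot

    def ask(self, request: str) -> str:
        self.transcript.append(f"user -> master: {request}")
        for keyword, bot in self.followers.items():
            if keyword in request.lower():
                self.transcript.append(f"master -> {keyword} bot: {request}")
                reply = bot(request)
                self.transcript.append(f"{keyword} bot -> master: {reply}")
                return reply
        return "Sorry, I don't know which bot can help with that."


def weather_bot(request: str) -> str:
    """Stub follower: always gives the same forecast."""
    return "It will be sunny in London tomorrow."


def taxi_bot(request: str) -> str:
    """Stub follower: always confirms a pickup."""
    return "A taxi will pick you up in 10 minutes."


master = MasterBot()
master.register("weather", weather_bot)
master.register("taxi", taxi_bot)
print(master.ask("What's the weather like tomorrow?"))
print("\n".join(master.transcript))
```

Every entry in `transcript` is ordinary English, so anyone can audit what the bots said to each other. That auditability is the transparency argument made above.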

Image Credit: https://blog.dlvrit.com/2016/05/facebook-messenger-chatbots/

The challenges toward Master Bots

Traditionally, we can think of deep learning models for speech recognition as either retrieval-based models or generative models. The former use heuristics to draw answers from a set of predefined responses given some input and context, while the latter generate new responses from scratch each time.
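Here is a retrieval-based bot in its simplest form. The user input and each stored prompt are represented as bag-of-words count vectors. The bot picks the stored prompt with the highest cosine similarity and returns its predefined response, falling back to a default reply below a threshold. The response table and the 0.2 threshold are made up for illustration. Production retrieval systems typically replace count vectors with learned neural encoders, whereas a generative model would decode a brand-new reply token by token.

```python
import math
from collections import Counter

# Hypothetical predefined (prompt, response) pairs.
RESPONSES = [
    ("hello there", "Hi! How can I help you today?"),
    ("what are your opening hours", "We are open from 9am to 6pm, Monday to Friday."),
    ("how do i reset my password", "Click 'Forgot password' on the login page."),
]


def bag_of_words(text: str) -> Counter:
    """Count lowercase tokens, ignoring question marks."""
    return Counter(text.lower().replace("?", "").split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)  # Counter returns 0 for missing terms
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(user_input: str, threshold: float = 0.2) -> str:
    """Return the predefined response whose prompt best matches the input."""
    query = bag_of_words(user_input)
    scored = [(cosine(query, bag_of_words(prompt)), reply) for prompt, reply in RESPONSES]
    best_score, best_reply = max(scored, key=lambda pair: pair[0])
    return best_reply if best_score >= threshold else "Sorry, I didn't understand that."


print(retrieve("What are the opening hours?"))
```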

The state of the art in speech recognition has advanced considerably since 2012, with deep Q-networks (DQNs), deep belief networks (DBNs), long short-term memory (LSTM) RNNs, Gated Recurrent Units (GRUs), sequence-to-sequence learning (Sutskever et al., 2014), and Tensor Product Representations (for a great overview of speech recognition, see Deng and Li, 2013).

So, if breakthroughs in deep reinforcement learning have improved our understanding of machine cognition, what is preventing us from building the perfect social bot? There are at least a couple of things I can think of.

First of all, machine translation is still in its infancy. Google recently introduced Google Neural Machine Translation (GNMT), a significant leap forward in the field, with a newer version even enabling zero-shot translation (between language pairs it was never trained on).
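Google's multilingual NMT work achieved zero-shot translation with a simple input-side convention. One shared model is trained on many language pairs, and an artificial token such as `<2es>` is prepended to each source sentence to say which language to produce. The sketch below shows only that tagging convention plus a hypothetical helper that flags which requested pairs would be zero-shot given the training pairs. The translation model itself is not shown, and the language codes and training set are illustrative.

```python
def tag_source(sentence: str, target_lang: str) -> str:
    """Prepend the artificial target-language token, e.g. '<2es> Hello'."""
    return f"<2{target_lang}> {sentence}"


def is_zero_shot(src: str, tgt: str, trained_pairs: set[tuple[str, str]]) -> bool:
    """Hypothetical helper: a pair is zero-shot if it never appeared in training,
    although both languages did appear in some trained pair."""
    seen_langs = {lang for pair in trained_pairs for lang in pair}
    return (src, tgt) not in trained_pairs and src in seen_langs and tgt in seen_langs


# Illustrative training set: Portuguese<->English and English<->Spanish only.
trained = {("pt", "en"), ("en", "pt"), ("en", "es"), ("es", "en")}
print(tag_source("Bom dia", "es"))        # prints "<2es> Bom dia"
print(is_zero_shot("pt", "es", trained))  # prints "True": no pt -> es data was seen
```

Because the target language is just another input token, the same model can be asked for a pair it never saw during training. Whether the output is any good is an empirical question that the tagging trick alone does not answer.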

Second, speech recognition is still mainly a supervised process. We might need to put more effort into unsupervised learning and, eventually, into better integrating symbolic and neural representations.

Furthermore, there are many nuances of human speech recognition that we cannot yet fully embed in a machine. MetaMind is doing great work in this space and recently introduced the Joint Many-Task (JMT) model and the Dynamic Coattention Network (DCN). The former is an end-to-end trainable model that allows collaboration between different layers. The latter is a network that reads through documents while building an internal representation of them, conditioned on the question it is trying to answer.
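The DCN's “representation conditioned on the question” comes from its coattention encoder. An affinity matrix between every document word and every question word is normalized in both directions, producing attention-weighted summaries of the document for each question word and of the question for each document word. The sketch below is a stripped-down, pure-Python version of that step only. It assumes the document and question encodings are already given as matrices whose columns are word vectors, and it omits the LSTM encoders, sentinel vectors, final BiLSTM fusion, and dynamic pointing decoder of the actual model. The toy numbers are arbitrary.

```python
import math


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: list[list[float]]) -> list[list[float]]:
    return [list(row) for row in zip(*a)]


def softmax_columns(m: list[list[float]]) -> list[list[float]]:
    """Normalize each column into a probability distribution."""
    normalized = []
    for col in transpose(m):
        peak = max(col)  # subtract the max for numerical stability
        exps = [math.exp(x - peak) for x in col]
        total = sum(exps)
        normalized.append([e / total for e in exps])
    return transpose(normalized)


def coattention(d: list[list[float]], q: list[list[float]]) -> list[list[float]]:
    """d: l x m document encoding, q: l x n question encoding (columns = words)."""
    affinity = matmul(transpose(d), q)          # m x n: doc-word/question-word scores
    a_q = softmax_columns(affinity)             # per question word: weights over doc words
    a_d = softmax_columns(transpose(affinity))  # per doc word: weights over question words
    c_q = matmul(d, a_q)                        # l x n: document summary per question word
    return matmul(q + c_q, a_d)                 # (2l) x m: stack [q; c_q], then attend


doc = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.5]]   # l = 2 features, m = 3 document words
question = [[1.0, 0.0], [0.0, 1.0]]        # l = 2 features, n = 2 question words
for row in coattention(doc, question):
    print([round(x, 3) for x in row])
```

Each column of the result describes one document word in terms of the question. This is the sense in which the network reads the document “with the question in mind”.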

Finally, the automatic speech recognition (ASR) engines created so far have either lacked personality or completely ignored the spatiotemporal context. These are two essential aspects of a general CUI, and only a few works have tackled them to date (Yao et al., 2015; Li et al., 2016).

Image Credit: http://www.assafelovic.com/

How is the market distributed?

This was not originally intended to be part of this article, but I found it useful to go quickly through the main players in the space in order to understand the importance of speech recognition in business contexts.

The history of bots goes back to Eliza (1966, the first bot ever) and Parry (1972), through ALICE and Clever in the nineties, to Microsoft Xiaoice more recently, but the field has evolved a lot over the last two to three years.

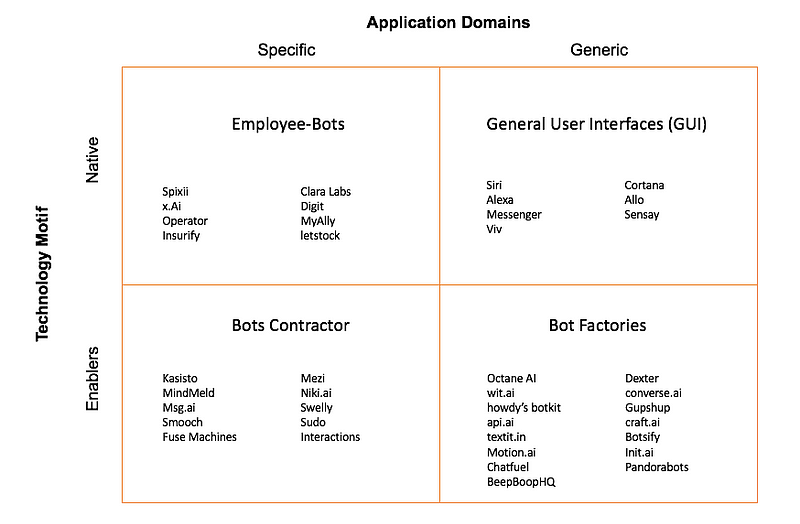

I like to think about this market in terms of a 2-by-2 matrix: bots can be classified as native or enablers, designed for either specific or generic applications. The boundaries of this classification are only roughly drawn, and some companies actually operate at the intersection of two quadrants:

Bots Classification Matrix

Following this classification, we can identify four different types of startups:

- Employee-Bots: bots created within a specific industry or area of application. They are stand-alone frameworks that do not require extra training and are ready to plug and play;

- General User Interfaces: these are native applications that represent the purest aspiration to a general conversational interface;

- Bots Contractors: bots that are “hired” for specific purposes but were created as generalists. Usually cheaper and less specialized than their Employee-Bot siblings, they live in a sort of symbiosis with the parent application. It can be useful to think of this class as functional bots rather than industry experts (the first class);

- Bots Factories: startups that facilitate the creation of your own bot.

A few (non-exhaustive) examples of companies operating in each group have been provided, and it is clear that this market is becoming crowded and highly profitable.

Final food for thought

It is an exciting time to be working on deep learning for speech recognition. Not only the research community but also the market is quickly recognizing the importance of the field as an essential step toward the development of artificial general intelligence (AGI).

The current state of ASR and bots reflects very well the distinction between narrow AI and general intelligence, and I believe we should carefully manage the expectations of both investors and customers. I am also convinced that this is not a space in which everyone will get a slice of the pie: a few players will eat most of the market. Still, it moves so quickly that it is really hard to make predictions about it.

References

Deng, L., Li, X. (2013). “Machine Learning Paradigms for Speech Recognition: An Overview”. IEEE Transactions on Audio, Speech, and Language Processing, 21(5).

Li, J., Galley, M., Brockett, C., Spithourakis, G., Gao, J., Dolan, W. B. (2016). “A Persona-Based Neural Conversation Model”. ACL (1).

Sutskever, I., Vinyals, O., Le, Q. (2014). “Sequence to Sequence Learning with Neural Networks”. NIPS 2014: 3104–3112.

Yao, K., Zweig, G., Peng, B. (2015). “Attention with Intention for a Neural Network Conversation Model”. CoRR abs/1510.08565.

Bio: Francesco Corea is a Decision Scientist and Data Strategist based in London, UK.

Original. Reposted with permission.
