Data Science, Machine Learning: Main Developments in 2017 and Key Trends in 2018

Data Science, Machine Learning: Main Developments in 2017 and Key Trends in 2018

The leading experts in the field on the main Data Science, Machine Learning, Predictive Analytics developments in 2017 and key trends in 2018.

This year we again asked a distinguished panel of leaders of the field

Among main themes were AI and Deep Learning - both real progress and hype, Machine Learning, Security, Quantum Computing, AlphaGo Zero, and more. Read the opinions of Kirk D. Borne, Tom Davenport, Jill Dyche, Bob E. Hayes, Carla Gentry, Gregory Piatetsky-Shapiro, GP Pulipaka, Rexer Analytics team (Paul Gearan, Heather Allen, and Karl Rexer), Eric Siegel, Jeff Ullman, and Jen Underwood.

Read also Big Data: Main Developments in 2017 and Key Trends in 2018 and last year predictions.

Have a different opinion? Comment at the end of the post.

Kirk D. Borne, @KirkDBorne, The Principal Data Scientist at BoozAllen, PhD Astrophysicist. Top Data Science and Big Data Influencer.

In 2017 we saw Big Data give way to AI at center stage of the technology hype cycle. This excessive media and practitioner attention on AI included positive news (increasingly powerful machine learning algorithms and AI applications in numerous industries, including automotive, medical imaging, security, customer service, entertainment, financial services) and negative news (threats of machines taking our jobs and taking over our world). We also witnessed a growth in value-producing innovations around data, including greater use of APIs, as-a-Service offerings, data science platforms, deep learning, and cloud machine learning services from the major providers. Specialized applications of data, machine learning, and AI included machine intelligence, prescriptive analytics, journey sciences, behavior analytics, and IoT.

In 2017 we saw Big Data give way to AI at center stage of the technology hype cycle. This excessive media and practitioner attention on AI included positive news (increasingly powerful machine learning algorithms and AI applications in numerous industries, including automotive, medical imaging, security, customer service, entertainment, financial services) and negative news (threats of machines taking our jobs and taking over our world). We also witnessed a growth in value-producing innovations around data, including greater use of APIs, as-a-Service offerings, data science platforms, deep learning, and cloud machine learning services from the major providers. Specialized applications of data, machine learning, and AI included machine intelligence, prescriptive analytics, journey sciences, behavior analytics, and IoT.

In 2018 we should see increased momentum to move beyond the AI hype. It will be time for proving AI's value, measuring its ROI, and making it actionable. The places where we will see these developments are not too different from the focus areas in 2017, including process automation, machine intelligence, customer service, hyper-personalization, and workforce transformation. We will also witness a growing maturity in IoT, including greater security features, modular platforms, APIs to access sensor data streams, and edge analytics interfaces. Also, we may see digital twins becoming more mainstream in the manufacturing, utilities, engineering, and construction industries. I also believe that in 2018 more practitioners will rise to the challenge to communicate the positive benefits of AI to a skeptical public.

Tom Davenport, is the Distinguished Professor of IT and Management at Babson College, the co-founder of the International Institute for Analytics, a Fellow of the MIT Initiative on the Digital Economy, and a Senior Advisor to Deloitte Analytics.

Primary Developments of 2017:

Jill Dyche, @jilldyche, Vice President @SASBestPractice. Best-selling business book author.

Everyone and their brother has an artificial intelligence or machine learning offering these days. 2017 proved that bright shiny objects were newly-burnished, and vendors-many of which weren't even what I call "AI-adjacent" -were prepared to buff and shine their product sets. The irony is that because of their newness, many of these vendors might well leapfrog established offerings.

2018 will see a rise in business conversations and use cases around AI/ML. Why? Because managers, most of whom are business people with problems to solve, don't care whether neural networks struggle with sparse data. They're not interested in lexical inference challenges with natural language processing. Instead they want to accelerate their supply chains, know what customers will do/buy/say next, and simply tell a computer what they want to know. It's prescriptive analytics writ large, and the vendors who can deliver it with as little adoption friction as possible can rule the world.

2018 will see a rise in business conversations and use cases around AI/ML. Why? Because managers, most of whom are business people with problems to solve, don't care whether neural networks struggle with sparse data. They're not interested in lexical inference challenges with natural language processing. Instead they want to accelerate their supply chains, know what customers will do/buy/say next, and simply tell a computer what they want to know. It's prescriptive analytics writ large, and the vendors who can deliver it with as little adoption friction as possible can rule the world.

Carla Gentry, Data Scientist at Analytical Solution, @data_nerd.

2017 was the year everyone started talking about Machine learning, AI and Predictive Analytics, unfortunately, a lot of these companies / vendors were "buzzword hopping" and don't really have the background to do, as they advertise.... It's very confusing if you check out any of the above mentioned "hot topics" on Twitter you'll find a bevy of posts from the same people who were talking about social media marketing last year! Experience in these fields require TIME and TALENT, not just "calls to action" and buzz... as always, EXPERIENCE DOES MATTER!

2017 was the year everyone started talking about Machine learning, AI and Predictive Analytics, unfortunately, a lot of these companies / vendors were "buzzword hopping" and don't really have the background to do, as they advertise.... It's very confusing if you check out any of the above mentioned "hot topics" on Twitter you'll find a bevy of posts from the same people who were talking about social media marketing last year! Experience in these fields require TIME and TALENT, not just "calls to action" and buzz... as always, EXPERIENCE DOES MATTER!

I see 2018 as the year where we look to the leaders in Data Science and Predictive Analytics, not because it's trendy but because it can make a HUGE difference in your business. Predictive hiring can save millions in turnover and attrition; AI and Machine Learning can do in seconds what you used to do in days! We agree technology can take us to new heights, but let's also remember to be HUMAN. You have a commitment to do no harm as a data scientist or an algorithm writer, if not by law, by humanity and ethical practice to be transparent and unbiased

Bob E. Hayes @bobehayes, Researcher and writer, publisher of Business Over Broadway, holds a PhD in industrial-organizational psychology.

The practice of data science and machine learning capabilities are increasingly being adopted across a wide range of industries and applications.

In 2017, we saw great advancements in AI capabilities. While prior deep learning models required massive amounts of data to teach the algorithm, the use of neural nets and reinforcement learning shows that data sets are not needed to create high-performing algorithms. DeepMind employed these techniques and created Alpha Go Zero, an algorithm that outperformed prior algorithms, by simply playing itself.

As the adoption of AI continues to grow in areas like criminal justice, finance, education and the workplace, we will need to establish standards for algorithms to assess their inaccuracy and bias. The interest in the social implications of AI will continue to grow (see here and here), including establishing rules of when AI can be used (e.g., avoid the "black box" of decision-making) and understanding how deep learning algorithms arrive at their decisions.

Security breaches will continue to climb, even in companies that were born of the Internet age (e.g., imgur, Uber). Consequently, we will see efforts at overhauling security approaches, increasing the visibility of Blockchain (a virtual ledger) as a viable way of improving how companies secure data of their constituencies.

Security breaches will continue to climb, even in companies that were born of the Internet age (e.g., imgur, Uber). Consequently, we will see efforts at overhauling security approaches, increasing the visibility of Blockchain (a virtual ledger) as a viable way of improving how companies secure data of their constituencies.

Gregory Piatetsky-Shapiro, President KDnuggets, Data Scientist, co-founder of KDD conferences and SIGKDD, professional organization for Knowledge Discovery and Data Mining.

Main developments in 2017:

Dr. GP (Ganapathi) Pulipaka, @gp_pulipaka, is CEO and Chief Data Scientist at DeepSingularity LLC.

Machine Learning, Deep Learning, Data Science Developments, 2017

Paul Gearan, Heather Allen & Karl Rexer, principals at Rexer Analytics, a leading data mining and advanced analytics consulting firm.

The promise of ubiquitous and effective use of Business Intelligence software by those without research or analytic backgrounds still faces many hurdles. Inroads certainly have been made with software like Tableau, IBM's Watson, Microsoft Power BI and others. However, in data collected in 2017 by Rexer Analytics as part of our report on the practices of data science professionals, only a little over half of respondents indicated that self-service tools are being used by people outside of their data science teams. When these tools are being used, about 60% of the time challenges are reported, with the most common themes being failure to understand the analytic process and the misinterpretation of results.

For 2018, the goal of actualizing the promise of these "Citizen Data Science" tools to expand the use and power of analytics to produce valid and meaningful results is of paramount importance. As is often the case (in our experience), an integrated multi-disciplinary team approach still works best: it is important to provide non-analytically trained staff and executives with the tools to explore and visualize their hypotheses. But it is equally important for teams to develop models and interpret findings in concert with a data science professional who is trained to understand the applications and limitations of the specific analytic techniques.

For 2018, the goal of actualizing the promise of these "Citizen Data Science" tools to expand the use and power of analytics to produce valid and meaningful results is of paramount importance. As is often the case (in our experience), an integrated multi-disciplinary team approach still works best: it is important to provide non-analytically trained staff and executives with the tools to explore and visualize their hypotheses. But it is equally important for teams to develop models and interpret findings in concert with a data science professional who is trained to understand the applications and limitations of the specific analytic techniques.

Eric Siegel, @predictanalytic, Founder, Predictive Analytics World conference series.

In 2017, three high-velocity trends in machine learning continued full speed and I expect they will in 2018 as well. Two are great, and one is the rose's inevitable thorn:

1) Business applications of machine learning continue to widen in their adoption across sectors -- such as in marketing, financial risk, fraud detection, workforce optimization, manufacturing, and healthcare. To see in one glance this wide spectrum of activities, and which leading companies are realizing value, take a look at the conference agenda for Predictive Analytics World's June 2018 event in Las Vegas, the first "Mega-PAW" and the only PAW Business of the year (in the US).

2) Deep learning has blossomed, both in buzz and in actual value. This relatively new set of advanced neural network methods scales machine learning to a new level of potential -- namely, achieving high performance for large-signal input problems, such as for the classification of images (self-driving cars, medical images), sound (speech recognition, speaker recognition), text (document classification), and even "standard" business problems, e.g., by processing high dimensional clickstreams. To help catalyze its commercial deployment across industry sectors, we're launching Deep Learning World alongside PAW Vegas 2018.

3) Unfortunately, artificial intelligence is still becoming even more overhyped and "oversouled" (this pun's credit to Eric King of The Modeling Agency :). Although the ill-defined term AI is sometimes used by expert practitioners to refer specifically to machine learning, more often it is used by analytics vendors and journalists to imply capabilities that are patently unrealistic and to cultivate expectations that are more fantasy than real life. Just because "any sufficiently advanced technology is indistinguishable from magic," as Arthur C. Clarke famously posited, that does not mean any and all "magic" we imagine or include in science fiction can or will eventually be achieved by technology. You've got the logic reversed. That AI will attain a will of its own that might maliciously or recklessly incur an existential threat to humanity is a ghost story -- one that furthers the anthropomorphization (or even deification) of machines many vendors seem to hope will improve sales. Friends, colleagues, and countrymen, I urge you to ease off on the "AI" stuff. It's adding noise and confusion, and will eventually incur backlash, as all "vaporware" sales do.

Jeff Ullman, the Stanford W. Ascherman Professor of Computer Science (Emeritus). His interests include database theory, database integration, data mining, and education using the information infrastructure.

I was recently at a meeting where I was reunited with two of my oldest colleagues, John Hopcroft and Al Aho. (Editor: see classic textbook Data Structures and Algorithms, by Aho, Hopcroft, and Ullman). I didn't have much to say in my talk that was new, but both Al and John are looking at things that could be of real interest to the KDnuggets readers.

John (Hopcroft) talked about analysis of deep-learning algorithms. He has done some experiments where he looks at the behavior of network nodes in a series of trainings on the same data in different orders (or similar experiments). He identifies cases where SOME node of each resulting network does essentially the same thing. There are other cases where you can't map nodes to nodes, but small sets of nodes in one network have the same effect as another set of nodes in another network. This work is in its infancy, but I think a prediction that might hold up for next year is:

Then, Al Aho talked about quantum computing. There is a lot of investment by some of the biggest companies on the planet, e.g., IBM, Microsoft, Google, in building quantum computers. There are many different approaches to these devices, but the thing that Al was excited about was the work of one of his former students at Microsoft, who is building a suite of compilers and simulators to allow the design of quantum algorithms and testing them, not on a real machine, which doesn't really exist, but on a simulator. This reminds me of the work in the 1980's on integrated-circuit design. There we also had compilers from high-level languages to circuits, which were then simulated, rather than fabricated (at least at first). The advantage was that you could try out different algorithms without the huge expense of building the physical circuit. Of course in the quantum world, it is not just "slow and expensive" but possibly "not possible at all," we just don't know at this point. Al actually shares my skepticism that quantum computing is going to be real any time soon, but there is no question that money will be invested, and algorithms designed. For example, Al points out that this past year there was interesting progress on more efficient quantum algorithms for linear algebra, which if brought to fruition would surely interest data scientists. So here's another prediction:

I'll add one more prediction of my own, far more mundane:

Jen Underwood, @idigdata, founder of Impact Analytix, LLC, is a recognized analytics industry expert, with a unique blend of product management, design and over 20 years of "hands-on" development of data warehouses, reporting, visualization and advanced analytics solutions.

When I look back at 2017, I will remember it fondly as the year when intelligent analytics platforms emerged. From analytics bots to automated machine learning, there has been a plethora of sophisticated, intelligent automation capabilities coming to life across every aspect of data science. Data integration and data preparation platforms have become smart enough to plug-and-play data sources, self-repair when errors occur in data pipelines, and even self-manage maintenance or data quality tasks based on knowledge learned from human interactions. Augmented analytics offerings have started to deliver on the promise of democratizing machine learning. Lastly, automated machine learning platforms with pre-packaged best-practice algorithm design blueprints and partially automated feature engineering capabilities have rapidly become game-changers in the digital era analytics arsenal.

Next year I expect to see automated artificial intelligence seamlessly unified into more analytics and decision-making processes. As organizations adapt, I anticipate numerous concerns to be raised around knowing how automated decisions are made and learning how to responsibly guide these systems in our imperfect world. Looming EU General Data Protection Regulation compliance deadlines will further elevate our need to open up analytics black boxes, ensure proper use, and dutifully govern personal data.

Next year I expect to see automated artificial intelligence seamlessly unified into more analytics and decision-making processes. As organizations adapt, I anticipate numerous concerns to be raised around knowing how automated decisions are made and learning how to responsibly guide these systems in our imperfect world. Looming EU General Data Protection Regulation compliance deadlines will further elevate our need to open up analytics black boxes, ensure proper use, and dutifully govern personal data.

Related:

What were the main developments in Data Science, Machine Learning, Predictive Analytics and what key trends you expect in 2018?

Among main themes were AI and Deep Learning - both real progress and hype, Machine Learning, Security, Quantum Computing, AlphaGo Zero, and more. Read the opinions of Kirk D. Borne, Tom Davenport, Jill Dyche, Bob E. Hayes, Carla Gentry, Gregory Piatetsky-Shapiro, GP Pulipaka, Rexer Analytics team (Paul Gearan, Heather Allen, and Karl Rexer), Eric Siegel, Jeff Ullman, and Jen Underwood.

Read also Big Data: Main Developments in 2017 and Key Trends in 2018 and last year predictions.

Have a different opinion? Comment at the end of the post.

Kirk D. Borne, @KirkDBorne, The Principal Data Scientist at BoozAllen, PhD Astrophysicist. Top Data Science and Big Data Influencer.

In 2017 we saw Big Data give way to AI at center stage of the technology hype cycle. This excessive media and practitioner attention on AI included positive news (increasingly powerful machine learning algorithms and AI applications in numerous industries, including automotive, medical imaging, security, customer service, entertainment, financial services) and negative news (threats of machines taking our jobs and taking over our world). We also witnessed a growth in value-producing innovations around data, including greater use of APIs, as-a-Service offerings, data science platforms, deep learning, and cloud machine learning services from the major providers. Specialized applications of data, machine learning, and AI included machine intelligence, prescriptive analytics, journey sciences, behavior analytics, and IoT.

In 2017 we saw Big Data give way to AI at center stage of the technology hype cycle. This excessive media and practitioner attention on AI included positive news (increasingly powerful machine learning algorithms and AI applications in numerous industries, including automotive, medical imaging, security, customer service, entertainment, financial services) and negative news (threats of machines taking our jobs and taking over our world). We also witnessed a growth in value-producing innovations around data, including greater use of APIs, as-a-Service offerings, data science platforms, deep learning, and cloud machine learning services from the major providers. Specialized applications of data, machine learning, and AI included machine intelligence, prescriptive analytics, journey sciences, behavior analytics, and IoT.

In 2018 we should see increased momentum to move beyond the AI hype. It will be time for proving AI's value, measuring its ROI, and making it actionable. The places where we will see these developments are not too different from the focus areas in 2017, including process automation, machine intelligence, customer service, hyper-personalization, and workforce transformation. We will also witness a growing maturity in IoT, including greater security features, modular platforms, APIs to access sensor data streams, and edge analytics interfaces. Also, we may see digital twins becoming more mainstream in the manufacturing, utilities, engineering, and construction industries. I also believe that in 2018 more practitioners will rise to the challenge to communicate the positive benefits of AI to a skeptical public.

Tom Davenport, is the Distinguished Professor of IT and Management at Babson College, the co-founder of the International Institute for Analytics, a Fellow of the MIT Initiative on the Digital Economy, and a Senior Advisor to Deloitte Analytics.

Primary Developments of 2017:

- Enterprise AI goes mainstream: Many large, established companies have AI or machine learning initiatives underway. Some have over 50 projects employing various technologies. Most of these are "low hanging fruit" projects with relatively limited objectives. There is a tendency to move away from large vendors with "transformative" offerings, and toward open source, do-it-yourself types of projects. Of course this means that firms must hire or develop high levels of data science skills.

- Machine learning is applied to data integration: The oldest challenge in analytics and data management is now being addressed by machine learning. Labor-intensive approaches to integrating and curating data are being replaced-or at least augmented-by "probabilistic matching" of similar data elements across different databases. The use of this tool-augmented by workflow and crowdsourcing of experts-can reduce the amount of time to integrate data tenfold.

Conservative firms embrace open source: The most traditionally conservative firms in industries like banking, insurance, and health care are now actively embracing open source analytical, AI, and data management software. Some are actively discouraging employees from using proprietary tools; others leave it up to individual choice. Cost is one reason for the shift, but increased performance and appealing to recent university hires are more common reasons.

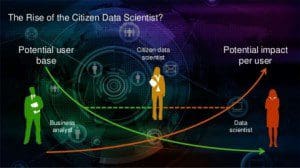

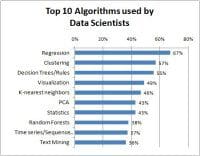

We have entered the "post algorithmic" era: Historically analysts and data scientists needed to have considerable knowledge of which algorithm to use for what purpose. But automation of analytical and machine learning processes has made it possible for analyses to consider a hundred or more different algorithms. What matters is how well a model-or an ensemble of models-performs. This of course has enabled the rise of the "citizen data scientist." There may eventually be horror stories from this development, but none appear to have cropped up yet.

- The appeal of the standalone AI startup begins to wane: Stoked by venture capital funding, hundreds of AI startups have been founded in the past couple of years. Most address a relatively narrow problem. Even if they work effectively, however, integration with existing processes and systems is the primary challenge for most organizations. As a result, established companies are exhibiting a preference for either developing their own AI "microservices" that are relatively easy to integrate, or purchasing from vendors who have embedded AI content into their transaction systems.

Jill Dyche, @jilldyche, Vice President @SASBestPractice. Best-selling business book author.

Everyone and their brother has an artificial intelligence or machine learning offering these days. 2017 proved that bright shiny objects were newly-burnished, and vendors-many of which weren't even what I call "AI-adjacent" -were prepared to buff and shine their product sets. The irony is that because of their newness, many of these vendors might well leapfrog established offerings.

2018 will see a rise in business conversations and use cases around AI/ML. Why? Because managers, most of whom are business people with problems to solve, don't care whether neural networks struggle with sparse data. They're not interested in lexical inference challenges with natural language processing. Instead they want to accelerate their supply chains, know what customers will do/buy/say next, and simply tell a computer what they want to know. It's prescriptive analytics writ large, and the vendors who can deliver it with as little adoption friction as possible can rule the world.

2018 will see a rise in business conversations and use cases around AI/ML. Why? Because managers, most of whom are business people with problems to solve, don't care whether neural networks struggle with sparse data. They're not interested in lexical inference challenges with natural language processing. Instead they want to accelerate their supply chains, know what customers will do/buy/say next, and simply tell a computer what they want to know. It's prescriptive analytics writ large, and the vendors who can deliver it with as little adoption friction as possible can rule the world.

Carla Gentry, Data Scientist at Analytical Solution, @data_nerd.

2017 was the year everyone started talking about Machine learning, AI and Predictive Analytics, unfortunately, a lot of these companies / vendors were "buzzword hopping" and don't really have the background to do, as they advertise.... It's very confusing if you check out any of the above mentioned "hot topics" on Twitter you'll find a bevy of posts from the same people who were talking about social media marketing last year! Experience in these fields require TIME and TALENT, not just "calls to action" and buzz... as always, EXPERIENCE DOES MATTER!

2017 was the year everyone started talking about Machine learning, AI and Predictive Analytics, unfortunately, a lot of these companies / vendors were "buzzword hopping" and don't really have the background to do, as they advertise.... It's very confusing if you check out any of the above mentioned "hot topics" on Twitter you'll find a bevy of posts from the same people who were talking about social media marketing last year! Experience in these fields require TIME and TALENT, not just "calls to action" and buzz... as always, EXPERIENCE DOES MATTER!

I see 2018 as the year where we look to the leaders in Data Science and Predictive Analytics, not because it's trendy but because it can make a HUGE difference in your business. Predictive hiring can save millions in turnover and attrition; AI and Machine Learning can do in seconds what you used to do in days! We agree technology can take us to new heights, but let's also remember to be HUMAN. You have a commitment to do no harm as a data scientist or an algorithm writer, if not by law, by humanity and ethical practice to be transparent and unbiased

Bob E. Hayes @bobehayes, Researcher and writer, publisher of Business Over Broadway, holds a PhD in industrial-organizational psychology.

The practice of data science and machine learning capabilities are increasingly being adopted across a wide range of industries and applications.

In 2017, we saw great advancements in AI capabilities. While prior deep learning models required massive amounts of data to teach the algorithm, the use of neural nets and reinforcement learning shows that data sets are not needed to create high-performing algorithms. DeepMind employed these techniques and created Alpha Go Zero, an algorithm that outperformed prior algorithms, by simply playing itself.

As the adoption of AI continues to grow in areas like criminal justice, finance, education and the workplace, we will need to establish standards for algorithms to assess their inaccuracy and bias. The interest in the social implications of AI will continue to grow (see here and here), including establishing rules of when AI can be used (e.g., avoid the "black box" of decision-making) and understanding how deep learning algorithms arrive at their decisions.

Security breaches will continue to climb, even in companies that were born of the Internet age (e.g., imgur, Uber). Consequently, we will see efforts at overhauling security approaches, increasing the visibility of Blockchain (a virtual ledger) as a viable way of improving how companies secure data of their constituencies.

Security breaches will continue to climb, even in companies that were born of the Internet age (e.g., imgur, Uber). Consequently, we will see efforts at overhauling security approaches, increasing the visibility of Blockchain (a virtual ledger) as a viable way of improving how companies secure data of their constituencies.

Gregory Piatetsky-Shapiro, President KDnuggets, Data Scientist, co-founder of KDD conferences and SIGKDD, professional organization for Knowledge Discovery and Data Mining.

Main developments in 2017:

AlphaGo Zero was probably the Most Significant Research Advance in AI in 2017

- Increasing automation of Data Science, with more tools offering automated Machine Learning platforms

- The growth of AI hype and expectations was even faster than the growth in AI and Deep Learning successes

- GDPR (European General Data Protection Regulation), which becomes enforceable on May 25, 2018, will have a major effect on Data Science, with requirements that include the right to explanation (can your Deep Learning-based method explain why this person was denied credit?), and prevention of bias and discrimination.

- Google DeepMind team will follow up on the amazing result of AlphaGo Zero and achieve another superhuman performance on a task where only a few years ago many people thought it was impossible to do by computer.

(note: next DeepMind breakthrough happened already in December 2017, with AlphaZero mastering chess in just 4 hours, with the same self-playing learning program reaching superhuman performance in chess, Go, and shogi) - We will see more self-driving car (and truck) progress, including first-time problems (like Las Vegas self-driving shuttle that did not know to move out of the way) being resolved (it will move out of the way next time).

- The AI bubble will continue but we will see signs of shake-out and consolidation.

Dr. GP (Ganapathi) Pulipaka, @gp_pulipaka, is CEO and Chief Data Scientist at DeepSingularity LLC.

Machine Learning, Deep Learning, Data Science Developments, 2017

- A novel form of reinforcement learning was introduced by AlphaGo Zero in which AlphaGo becomes its own teacher without human intervention and historical datasets.

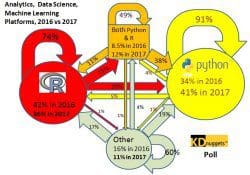

Python (1.65 M GitHub pushes), Java (2.32 M GitHub pushes), and R (163,807 GitHub pushes) are the most sought-after programming languages for 2017.

- Processing large-scale big data to perform neural networking functions on CPUs could translate to massive power costs over a long arc of time. Google released second generation TPUs. The precision design engineering on TPUs includes attaching a co-processor to the common PCIe bus with instructions to handle traffic with multiplier accumulators (MACs) to reuse the value from the register for mathematical calculations and save billions of dollars on energy.

- Nvidia released Volta-architecture based Tesla GPUs for supercharging deep learning and machine learning at a peak rate of 120 teraflops of performance per each GPU.

- Moving away from the hype of D-Wave quantum annealing computers to 20 qubit capable quantum computing machines with QISKit quantum programming stack in Python.

- McAfree Labs 2018 threats research report shows that, adversarial machine learning will be implemented for network intrusion detection, fraud detection, spam detection, and malware detection in the field of cybersecurity at extreme machine speeds in serverless environments.

- HPE to develop dot product engine and rollout their own neural network chips for high performance computing with inference from deep neural networks, convolutional neural networks, and recurrent neural networks.

The future of quantum machine learning hinges on qubits with 10 or more states and 100s of dimensions as opposed to qubits that can only adopt two possible states. There will be a number of microchips manufactured with qubits which will create spectacularly powerful quantum computers.

- The barriers for IoT and edge computing with machine learning will be lowered in 2018. Geospatial intelligence will leapfrog with breakthrough algorithms applied on mobile phones, RFID sensors, UAVs, drones, and satellites.

- Self-supervised learning and autonomous learning will power robotics with novel deep learning techniques for control tasks that enable the robots to interact with their surrounding terrestrial environments and in underwater settings.

Paul Gearan, Heather Allen & Karl Rexer, principals at Rexer Analytics, a leading data mining and advanced analytics consulting firm.

The promise of ubiquitous and effective use of Business Intelligence software by those without research or analytic backgrounds still faces many hurdles. Inroads certainly have been made with software like Tableau, IBM's Watson, Microsoft Power BI and others. However, in data collected in 2017 by Rexer Analytics as part of our report on the practices of data science professionals, only a little over half of respondents indicated that self-service tools are being used by people outside of their data science teams. When these tools are being used, about 60% of the time challenges are reported, with the most common themes being failure to understand the analytic process and the misinterpretation of results.

For 2018, the goal of actualizing the promise of these "Citizen Data Science" tools to expand the use and power of analytics to produce valid and meaningful results is of paramount importance. As is often the case (in our experience), an integrated multi-disciplinary team approach still works best: it is important to provide non-analytically trained staff and executives with the tools to explore and visualize their hypotheses. But it is equally important for teams to develop models and interpret findings in concert with a data science professional who is trained to understand the applications and limitations of the specific analytic techniques.

For 2018, the goal of actualizing the promise of these "Citizen Data Science" tools to expand the use and power of analytics to produce valid and meaningful results is of paramount importance. As is often the case (in our experience), an integrated multi-disciplinary team approach still works best: it is important to provide non-analytically trained staff and executives with the tools to explore and visualize their hypotheses. But it is equally important for teams to develop models and interpret findings in concert with a data science professional who is trained to understand the applications and limitations of the specific analytic techniques.

Eric Siegel, @predictanalytic, Founder, Predictive Analytics World conference series.

In 2017, three high-velocity trends in machine learning continued full speed and I expect they will in 2018 as well. Two are great, and one is the rose's inevitable thorn:

1) Business applications of machine learning continue to widen in their adoption across sectors -- such as in marketing, financial risk, fraud detection, workforce optimization, manufacturing, and healthcare. To see in one glance this wide spectrum of activities, and which leading companies are realizing value, take a look at the conference agenda for Predictive Analytics World's June 2018 event in Las Vegas, the first "Mega-PAW" and the only PAW Business of the year (in the US).

2) Deep learning has blossomed, both in buzz and in actual value. This relatively new set of advanced neural network methods scales machine learning to a new level of potential -- namely, achieving high performance for large-signal input problems, such as for the classification of images (self-driving cars, medical images), sound (speech recognition, speaker recognition), text (document classification), and even "standard" business problems, e.g., by processing high dimensional clickstreams. To help catalyze its commercial deployment across industry sectors, we're launching Deep Learning World alongside PAW Vegas 2018.

3) Unfortunately, artificial intelligence is still becoming even more overhyped and "oversouled" (this pun's credit to Eric King of The Modeling Agency :). Although the ill-defined term AI is sometimes used by expert practitioners to refer specifically to machine learning, more often it is used by analytics vendors and journalists to imply capabilities that are patently unrealistic and to cultivate expectations that are more fantasy than real life. Just because "any sufficiently advanced technology is indistinguishable from magic," as Arthur C. Clarke famously posited, that does not mean any and all "magic" we imagine or include in science fiction can or will eventually be achieved by technology. You've got the logic reversed. That AI will attain a will of its own that might maliciously or recklessly incur an existential threat to humanity is a ghost story -- one that furthers the anthropomorphization (or even deification) of machines many vendors seem to hope will improve sales. Friends, colleagues, and countrymen, I urge you to ease off on the "AI" stuff. It's adding noise and confusion, and will eventually incur backlash, as all "vaporware" sales do.

Jeff Ullman, the Stanford W. Ascherman Professor of Computer Science (Emeritus). His interests include database theory, database integration, data mining, and education using the information infrastructure.

I was recently at a meeting where I was reunited with two of my oldest colleagues, John Hopcroft and Al Aho. (Editor: see classic textbook Data Structures and Algorithms, by Aho, Hopcroft, and Ullman). I didn't have much to say in my talk that was new, but both Al and John are looking at things that could be of real interest to the KDnuggets readers.

John (Hopcroft) talked about analysis of deep-learning algorithms. He has done some experiments where he looks at the behavior of network nodes in a series of trainings on the same data in different orders (or similar experiments). He identifies cases where SOME node of each resulting network does essentially the same thing. There are other cases where you can't map nodes to nodes, but small sets of nodes in one network have the same effect as another set of nodes in another network. This work is in its infancy, but I think a prediction that might hold up for next year is:

The careful analysis of deep-learning networks will advance the understanding of how deep learning really works, its uses and pitfalls.

Then, Al Aho talked about quantum computing. There is a lot of investment by some of the biggest companies on the planet, e.g., IBM, Microsoft, Google, in building quantum computers. There are many different approaches to these devices, but the thing that Al was excited about was the work of one of his former students at Microsoft, who is building a suite of compilers and simulators to allow the design of quantum algorithms and testing them, not on a real machine, which doesn't really exist, but on a simulator. This reminds me of the work in the 1980's on integrated-circuit design. There we also had compilers from high-level languages to circuits, which were then simulated, rather than fabricated (at least at first). The advantage was that you could try out different algorithms without the huge expense of building the physical circuit. Of course in the quantum world, it is not just "slow and expensive" but possibly "not possible at all," we just don't know at this point. Al actually shares my skepticism that quantum computing is going to be real any time soon, but there is no question that money will be invested, and algorithms designed. For example, Al points out that this past year there was interesting progress on more efficient quantum algorithms for linear algebra, which if brought to fruition would surely interest data scientists. So here's another prediction:

Quantum computing, including algorithms of interest in data science will receive a lot more attention in the next years, even if true quantum computers able to operate at a sufficiently large scale never materialize or are decades away.

I'll add one more prediction of my own, far more mundane:

- The trend of moving from Hadoop to Spark will continue, eventually causing people to pretty much forget Hadoop.

Jen Underwood, @idigdata, founder of Impact Analytix, LLC, is a recognized analytics industry expert, with a unique blend of product management, design and over 20 years of "hands-on" development of data warehouses, reporting, visualization and advanced analytics solutions.

When I look back at 2017, I will remember it fondly as the year when intelligent analytics platforms emerged. From analytics bots to automated machine learning, there has been a plethora of sophisticated, intelligent automation capabilities coming to life across every aspect of data science. Data integration and data preparation platforms have become smart enough to plug-and-play data sources, self-repair when errors occur in data pipelines, and even self-manage maintenance or data quality tasks based on knowledge learned from human interactions. Augmented analytics offerings have started to deliver on the promise of democratizing machine learning. Lastly, automated machine learning platforms with pre-packaged best-practice algorithm design blueprints and partially automated feature engineering capabilities have rapidly become game-changers in the digital era analytics arsenal.

Next year I expect to see automated artificial intelligence seamlessly unified into more analytics and decision-making processes. As organizations adapt, I anticipate numerous concerns to be raised around knowing how automated decisions are made and learning how to responsibly guide these systems in our imperfect world. Looming EU General Data Protection Regulation compliance deadlines will further elevate our need to open up analytics black boxes, ensure proper use, and dutifully govern personal data.

Next year I expect to see automated artificial intelligence seamlessly unified into more analytics and decision-making processes. As organizations adapt, I anticipate numerous concerns to be raised around knowing how automated decisions are made and learning how to responsibly guide these systems in our imperfect world. Looming EU General Data Protection Regulation compliance deadlines will further elevate our need to open up analytics black boxes, ensure proper use, and dutifully govern personal data.

Related:

- Big Data: Main Developments in 2017 and Key Trends in 2018

- Data Science, Predictive Analytics Main Developments in 2016 and Key Trends for 2017

- 2017 Predictions

Data Science, Machine Learning: Main Developments in 2017 and Key Trends in 2018

Data Science, Machine Learning: Main Developments in 2017 and Key Trends in 2018 Conservative firms embrace open source:

The most traditionally conservative firms in industries like banking, insurance, and health care are now actively embracing open source analytical, AI, and data management software. Some are actively discouraging employees from using proprietary tools; others leave it up to individual choice. Cost is one reason for the shift, but increased performance and appealing to recent university hires are more common reasons.

Conservative firms embrace open source:

The most traditionally conservative firms in industries like banking, insurance, and health care are now actively embracing open source analytical, AI, and data management software. Some are actively discouraging employees from using proprietary tools; others leave it up to individual choice. Cost is one reason for the shift, but increased performance and appealing to recent university hires are more common reasons.

We have entered the "post algorithmic" era:

Historically analysts and data scientists needed to have considerable knowledge of which algorithm to use for what purpose. But automation of analytical and machine learning processes has made it possible for analyses to consider a hundred or more different algorithms. What matters is how well a model-or an ensemble of models-performs. This of course has enabled the rise of the "citizen data scientist." There may eventually be horror stories from this development, but none appear to have cropped up yet.

We have entered the "post algorithmic" era:

Historically analysts and data scientists needed to have considerable knowledge of which algorithm to use for what purpose. But automation of analytical and machine learning processes has made it possible for analyses to consider a hundred or more different algorithms. What matters is how well a model-or an ensemble of models-performs. This of course has enabled the rise of the "citizen data scientist." There may eventually be horror stories from this development, but none appear to have cropped up yet.

Python (1.65 M GitHub pushes), Java (2.32 M GitHub pushes), and R (163,807 GitHub pushes) are the most sought-after programming languages for 2017.

Python (1.65 M GitHub pushes), Java (2.32 M GitHub pushes), and R (163,807 GitHub pushes) are the most sought-after programming languages for 2017.

The future of quantum machine learning hinges on qubits with 10 or more states and 100s of dimensions as opposed to qubits that can only adopt two possible states. There will be a number of microchips manufactured with qubits which will create spectacularly powerful quantum computers.

The future of quantum machine learning hinges on qubits with 10 or more states and 100s of dimensions as opposed to qubits that can only adopt two possible states. There will be a number of microchips manufactured with qubits which will create spectacularly powerful quantum computers.