How Much Mathematics Does an IT Engineer Need to Learn to Get Into Data Science?

When I started diving deep into these exciting subjects (by self-study), I quickly discovered that I either did not know, had only a rudimentary idea about, or had mostly forgotten some essential mathematics from my undergraduate studies.

Disclaimer and Prologue

First, the disclaimer: I am not an IT engineer :-) I work in the field of semiconductors, specifically high-power semiconductors, as a technology development engineer whose day job consists primarily of dealing with semiconductor physics, finite-element simulation of silicon fabrication processes, or electronic circuit theory. There is, of course, some mathematics in this endeavor, but for better or worse, I don’t need to dabble in the kind of mathematics that is necessary for a data scientist.

However, I have many friends in the IT industry, and I have observed a great many traditional IT engineers who are enthusiastic about learning and contributing to the exciting fields of data science and machine learning/artificial intelligence. I am dabbling in this field myself to learn some tricks of the trade that I can apply to semiconductor device or process design. But when I started diving deep into these exciting subjects (by self-study), I quickly discovered that I either did not know, had only a rudimentary idea about, or had mostly forgotten some essential mathematics from my undergraduate studies. In this LinkedIn article, I ramble about it...

Now, I have a Ph.D. in Electrical Engineering from a reputed US university, and still I felt that, without a refresher in some essential mathematics, my preparation for getting a solid grasp of machine learning or data science techniques was incomplete. Meaning no disrespect to an IT engineer, I must say that the very nature of his/her job and long training generally leaves him/her distanced from the world of applied mathematics. (S)he may be dealing with a lot of data and information on a daily basis, but there may not be an emphasis on rigorous modeling of that data. Often, there is immense time pressure, and the emphasis is on ‘use the data for your immediate need and move on’ rather than on deep probing and scientific exploration of the same. Unfortunately, data science should always be about the science (not data), and following that thread, certain tools and techniques become indispensable.

These tools and techniques (modeling a process, physical or informational, by probing the underlying dynamics; rigorously estimating the quality of the data source; training one’s sense for identifying the hidden pattern in a stream of information; or understanding clearly the limitations of a model) are the hallmarks of a sound scientific process.

They are often taught in advanced graduate-level courses in an applied science/engineering discipline. Or, one can imbibe them through high-quality graduate-level research work in a similar field. Unfortunately, even a decade-long career in traditional IT (DevOps, database, or QA/testing) will fall short of rigorously imparting this kind of training. There is, simply, no need.

The Times They Are a-Changin’

Until now.

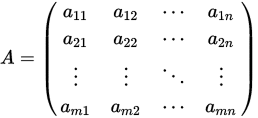

You see, in most cases, impeccable knowledge of SQL queries, a clear sense of the overarching business need, and an idea of the general structure of the corresponding RDBMS are good enough for any IT engineer worth his/her salt to perform the extract-transform-load cycle and thereby generate value for the company. But what happens if someone drops by and starts asking weird questions like “is your artificially synthesized test data set random enough?” or “how would you know if the next data point is within the 3-sigma limit of the underlying distribution of your data?” Even the occasional quip from the computer science graduate/nerd in the next cubicle, that the computational load of any meaningful mathematical operation on a table of data (aka a matrix) grows non-linearly with the size of the table, i.e. the number of rows and columns, can be exasperating and confusing.

And these types of questions are growing in frequency and urgency, simply because data is the new currency.

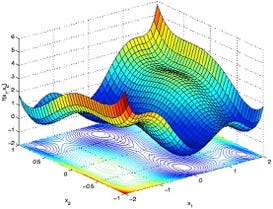

Executives, technical managers, and decision-makers are no longer satisfied with just the dry description of a table obtained by traditional ETL tools. They want to see the hidden pattern, they yearn to feel the subtle interaction between the columns, and they would like to get the full descriptive and inferential statistics that may help in predictive modeling and in extending the projection power of the data set far beyond the immediate range of values that it contains.

Today’s data must tell a story, or sing a song if you like. However, to listen to its beautiful tune, one must be versed in the fundamental notes of the music, and those are mathematical truths.

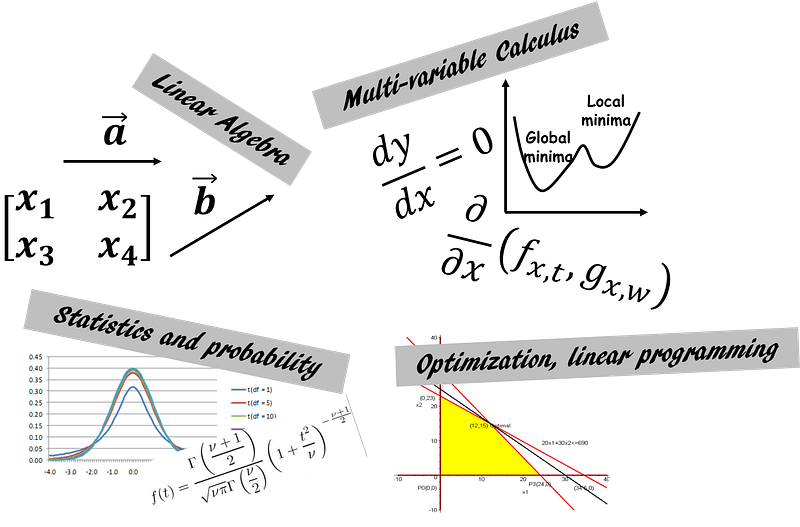

Without further ado, let us come to the crux of the matter. What are the essential topics/sub-topics of mathematics that an average IT engineer must study/refresh if (s)he wants to enter the field of business analytics/data science/data mining? I’ll show my idea in the following chart.

Basic Algebra, Functions, Set theory, Plotting, Geometry

It is always a good idea to start at the root. The edifice of modern mathematics is built upon some key foundations: set theory, functional analysis, number theory, etc. From an applied mathematics learning point of view, we can simplify the study of these topics through some concise modules (in no particular order):

a) set theory basics, b) real and complex numbers and basic properties, c) polynomial functions, exponential, logarithms, trigonometric identities, d) linear and quadratic equations, e) inequalities, infinite series, binomial theorem, f) permutation and combination, g) graphing and plotting, Cartesian and polar co-ordinate systems, conic sections, h) basic geometry and theorems, triangle properties.

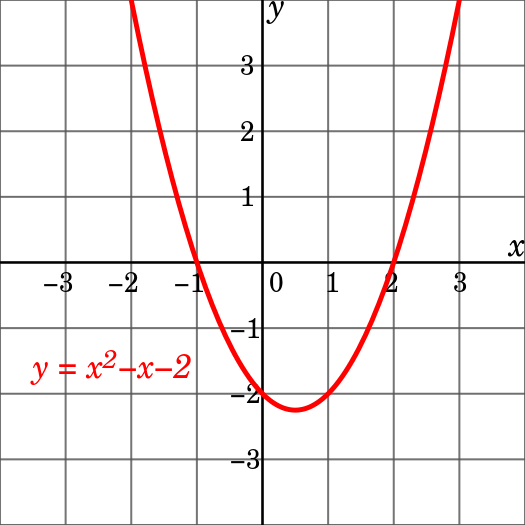

Calculus

Sir Isaac Newton wanted to explain the behavior of heavenly bodies. But he did not have a good enough mathematical tool to describe his physical concepts. So he invented this branch of mathematics (or an early form of what we use today) while hiding away on his countryside farm from the plague outbreak in urban England. Since then, it has been considered the gateway to advanced learning in any analytical study: pure or applied science, engineering, social science, economics, ...

Not surprisingly then, the concepts and applications of calculus pop up in numerous places in the field of data science or machine learning. The most essential topics to be covered are as follows:

a) functions of a single variable, limit, continuity and differentiability, b) mean value theorems, indeterminate forms and L’Hôpital’s rule, c) maxima and minima, d) product and chain rule, e) Taylor’s series, f) fundamental and mean value theorems of integral calculus, g) evaluation of definite and improper integrals, h) Beta and Gamma functions, i) functions of two variables, limit, continuity, partial derivatives, j) basics of ordinary and partial differential equations.
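To make a couple of these items concrete, here is a minimal sketch (my own illustration, with hypothetical function names) that approximates a derivative numerically and compares it against the chain rule, then checks that the derivative vanishes at the minimum of a simple quadratic. The central-difference step size `h` is an assumed value, not a tuned one.

```python
import math

def derivative(f, x, h=1e-5):
    """Approximate f'(x) with a central difference (h is an assumed step size)."""
    return (f(x + h) - f(x - h)) / (2 * h)

def g(x):
    """Composite function g(x) = sin(x^2), a standard chain-rule example."""
    return math.sin(x ** 2)

def g_prime_chain_rule(x):
    """Chain rule: d/dx sin(x^2) = cos(x^2) * 2x."""
    return math.cos(x ** 2) * 2 * x

def f(x):
    """A quadratic with its minimum at x = 3."""
    return (x - 3) ** 2 + 1

x0 = 1.3
numeric = derivative(g, x0)
analytic = g_prime_chain_rule(x0)
print("numeric vs. chain rule agree:", abs(numeric - analytic) < 1e-6)

# At a minimum of a smooth function the first derivative is (approximately) zero.
print("slope at the minimum is ~0:", abs(derivative(f, 3.0)) < 1e-6)
```

The same idea, a slope that tells you which way is downhill, is what gradient-based training of machine learning models relies on.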

Linear Algebra

Got a new friend suggestion on Facebook? A long lost professional contact suddenly added you on LinkedIn? Amazon suddenly recommended an awesome romance-thriller for your next vacation reading? Or Netflix dug up for you that little-known gem of a documentary which just suits your taste and mood?

Doesn’t it feel good to know that if you learn the basics of linear algebra, you are empowered with knowledge of the basic mathematical object that is at the heart of all these exploits by the high and mighty of the tech industry?

At least, you will know the basic properties of the mathematical structure that controls what you shop for on Target, how you drive using Google Maps, which song you listen to on Pandora, or whose room you rent on Airbnb.

The essential topics to study are (not an ordered or exhaustive list by any means):

a) basic properties of matrices and vectors: scalar multiplication, linear transformation, transpose, conjugate, rank, determinant, b) inner and outer products, c) matrix multiplication rule and various algorithms, d) matrix inverse, e) special matrices: square matrix, identity matrix, triangular matrix, idea about sparse and dense matrices, unit vectors, symmetric matrix, Hermitian, skew-Hermitian and unitary matrices, f) matrix factorization concept/LU decomposition, Gaussian/Gauss-Jordan elimination, solving the linear system of equations Ax=b, g) vector space, basis, span, orthogonality, orthonormality, linear least squares, h) singular value decomposition, i) eigenvalues, eigenvectors, and diagonalization.
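As one small illustration of item (f), here is a sketch of solving Ax=b by Gaussian elimination with partial pivoting, written in plain Python so that every step is visible. The function name, the singularity tolerance, and the 3x3 example system are my own assumptions for illustration; in real work you would normally reach for a tested linear algebra library instead.

```python
def solve_linear_system(A, b):
    """Solve Ax = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    # Build the augmented matrix [A | b] from copies, so the inputs stay untouched.
    M = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(A, b)]

    for col in range(n):
        # Partial pivoting: bring up the row with the largest entry in this column.
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:  # assumed tolerance for "singular"
            raise ValueError("matrix is singular or nearly singular")
        M[col], M[pivot] = M[pivot], M[col]

        # Eliminate every entry below the pivot.
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]

    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        known = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - known) / M[i][i]
    return x

# Hypothetical example system whose exact solution is x = 2, y = 3, z = -1.
A = [[2, 1, -1],
     [-3, -1, 2],
     [-2, 1, 2]]
b = [8, -11, -3]
x = solve_linear_system(A, b)
print([round(v, 6) for v in x])
```

Notice the three nested loops in the elimination step: the work grows roughly with the cube of the matrix size, which is exactly the kind of non-linear growth in computational load that the next-cubicle nerd keeps quipping about.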

Here is a nice Medium article on what you can accomplish with linear algebra.

Statistics and Probability

Only death and taxes are certain, and for everything else there is the normal distribution.

The importance of having a solid grasp of the essential concepts of statistics and probability cannot be overstated in a discussion about data science. Many practitioners in the field actually call machine learning nothing but statistical learning. I followed the widely known “An Introduction to Statistical Learning” while working on my first MOOC in machine learning and immediately realized the conceptual gaps I had in the subject. To plug those gaps, I started taking other MOOCs focused on basic statistics and probability, and reading up/watching videos on related topics. The subject is vast and endless, and therefore focused planning is critical to cover the most essential concepts. I am trying to list them as best as I can, but I fear this is the area where I will fall short by the widest margin.

a) data summaries and descriptive statistics, central tendency, variance, covariance, correlation, b) probability: basic idea, expectation, probability calculus, Bayes’ theorem, conditional probability, c) probability distribution functions: uniform, normal, binomial, chi-square, Student’s t-distribution, central limit theorem, d) sampling, measurement, error, random numbers, e) hypothesis testing, A/B testing, confidence intervals, p-values, f) ANOVA, g) linear regression, h) power, effect size, testing means, i) research studies and design-of-experiment.
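Two of these ideas fit in a few lines of Python, so here is a rough sketch using only the standard library. The first function answers the “is the next data point within the 3-sigma limit?” question from earlier, under the assumption that the data is roughly normally distributed. The second applies Bayes’ theorem to a made-up screening test. All the numbers and function names are hypothetical, chosen only for illustration.

```python
import statistics

def within_three_sigma(sample, new_point):
    """True if new_point lies within mean +/- 3 sample standard deviations."""
    mean = statistics.mean(sample)
    sigma = statistics.stdev(sample)  # sample (n - 1) standard deviation
    return abs(new_point - mean) <= 3 * sigma

def posterior(prior, sensitivity, false_positive_rate):
    """Bayes' theorem: P(condition | positive test)."""
    evidence = sensitivity * prior + false_positive_rate * (1 - prior)
    return sensitivity * prior / evidence

# Hypothetical measurements with mean 10.0 and standard deviation 0.2.
readings = [9.8, 10.1, 10.0, 9.9, 10.2, 10.0, 9.7, 10.3]
print(within_three_sigma(readings, 10.4))  # inside the 3-sigma band
print(within_three_sigma(readings, 12.0))  # far outside the band

# Hypothetical test: 1% prevalence, 95% sensitivity, 5% false positive rate.
print(round(posterior(0.01, 0.95, 0.05), 3))  # about 0.161
```

The second result is the classic surprise: even with a fairly accurate test, a positive result for a rare condition leaves only about a 16% chance that the condition is actually present.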

Here is a nice article on the necessity of statistics knowledge for a data scientist.

Special Topics: Optimization theory, Algorithm analysis

These topics are a little different from the traditional discourse in applied mathematics, as they are mostly relevant to, and most widely used in, specialized fields of study: theoretical computer science, control theory, or operations research. However, a basic understanding of these powerful techniques can be so fruitful in the practice of machine learning that they are worth mentioning here.

For example, virtually every machine learning algorithm/technique aims to minimize some kind of estimation error subject to various constraints. That, right there, is an optimization problem, which is generally solved by techniques such as linear programming, gradient descent, or their relatives. On the other hand, it is always a deeply satisfying and insightful experience to understand a computer algorithm’s time complexity, which becomes extremely important when the algorithm is applied to a large data set. In this era of big data, where a data scientist is routinely expected to extract, transform, and analyze billions of records, (s)he must be extremely careful about choosing the right algorithm, as it can make all the difference between amazing performance and abject failure. The general theory and properties of algorithms are best studied in a formal computer science course, but to understand how their time complexity (i.e. how much time the algorithm will take to run for a given size of data) is analyzed and calculated, one must have a rudimentary familiarity with mathematical concepts such as dynamic programming or recurrence equations. A familiarity with the technique of proof by mathematical induction can be extremely helpful too.
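To show what “minimizing an estimation error” can look like in practice, here is a minimal sketch that fits a straight line to a handful of points by gradient descent on the mean squared error. Note that this uses gradient descent rather than linear programming, simply because it is short and common in machine learning. The data, learning rate, and step count are assumed values picked for this toy example, not recommendations.

```python
def fit_line_gradient_descent(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w*x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Estimation error of the current line at every data point.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of the mean squared error w.r.t. w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step downhill, against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Hypothetical noise-free data generated from y = 2x + 1.
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]
w, b = fit_line_gradient_descent(xs, ys)
print(round(w, 4), round(b, 4))  # close to 2 and 1
```

Each step touches every data point once, so the cost of one pass grows linearly with the number of records. Multiply that by the number of steps and by billions of rows, and you can see why the time-complexity question matters.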

Epilogue

Scared? A mind-bending list of topics to learn just as a prerequisite? Fear not, you will learn on the go and as needed. But the goal is to keep the windows and doors of your mind open and welcoming.

There is even a concise MOOC to get you started. Note, this is a beginner-level course for refreshing your high-school or freshman-year level knowledge. And here is a summary article on the 15 best math courses for data science on KDnuggets.

But you can be assured that, after refreshing these topics, many of which you may have studied in your undergraduate years, or even learning new concepts, you will feel so empowered that you will definitely start to hear the hidden music that the data sings. And that’s called a big leap towards becoming a data scientist…

#datascience, #machinelearning, #information, #technology, #mathematics

If you have any questions or ideas to share, please contact the author at tirthajyoti[AT]gmail.com. You can also check the author’s GitHub repositories for other fun code snippets in Python, R, or MATLAB and machine learning resources. You can also follow me on LinkedIn.

Bio: Tirthajyoti Sarkar is a semiconductor technologist, machine learning/data science zealot, Ph.D. in EE, blogger and writer.

Original. Reposted with permission.

