Does Deep Learning Have Deep Flaws?

A recent study of neural networks found that for every correctly classified image, one can generate an "adversarial", visually indistinguishable image that will be misclassified. This suggests potential deep flaws in all neural networks, possibly including the human brain.

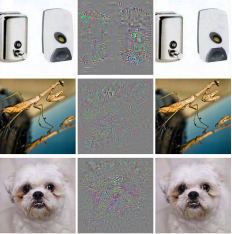

Here are three pairs of images. Can you spot the differences between the left column and the right column?

I suppose not. To human eyes they are identical, but not to deep neural networks. In fact, the networks classify the images on the left correctly and misclassify the ones on the right. Interesting, isn’t it?

A recent study by researchers from Google, New York University and the University of Montreal found this flaw in every deep neural network it examined.

The study presents two counterintuitive properties of deep neural networks.

1. It is the space, rather than the individual units, that contains the semantic information in the high layers of neural networks. In other words, a random combination of high-layer units can be as semantically meaningful as any single unit.

The figures below compare the natural basis with a random basis for a convolutional neural network trained on ImageNet, using images from the ImageNet validation set.

For the natural basis (upper images), the activation of a single hidden unit is treated as a feature, and we look for the input images that maximize the activation of that feature. These features can be interpreted as meaningful variations in the input domain. However, the experiments show that random directions in the activation space (lower images) are just as semantically interpretable.

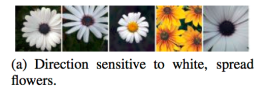

Take white flower recognition as an example. Traditionally, one would say that images with a black circle and a fan-shaped white region are the ones most likely to be classified as white flowers. However, it turns out that if we pick a random basis instead, the images that respond most strongly can be semantically interpreted in a similar way.
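The comparison can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' code: the `activations` list is a hypothetical stand-in (random numbers) for the hidden-layer activations of a set of images, and the helper names are invented for this example. For a given direction, the sketch returns the images whose activation vector has the largest projection onto it. A natural basis vector selects a single unit, while a random direction mixes all of them.

```python
import random


def top_images_along_direction(activations, direction, k=3):
    """Return the indices of the k images whose activation vector
    has the largest projection (dot product) onto `direction`."""
    scores = [sum(a * d for a, d in zip(act, direction)) for act in activations]
    return sorted(range(len(activations)), key=lambda i: scores[i], reverse=True)[:k]


def natural_basis_vector(dim, unit):
    """Direction that picks out one hidden unit (1 at `unit`, 0 elsewhere)."""
    return [1.0 if j == unit else 0.0 for j in range(dim)]


def random_direction(dim, rng):
    """Random unit-length direction in the activation space."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = sum(x * x for x in v) ** 0.5
    return [x / norm for x in v]


rng = random.Random(0)
# Hypothetical data: 100 "images", each described by 8 hidden-unit activations.
activations = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(100)]

top_natural = top_images_along_direction(activations, natural_basis_vector(8, unit=2))
top_random = top_images_along_direction(activations, random_direction(8, rng))
print("natural basis:", top_natural)
print("random direction:", top_random)
```

With a real network, one would replace `activations` with the actual hidden-layer outputs and then inspect the selected images by eye, which is where the semantic interpretation comes in.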

2. A network may misclassify an image after a certain imperceptible perturbation is applied to it. The perturbations are found by adjusting the pixel values to maximize the prediction error.
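To make the idea of "adjusting the pixel values to maximize the prediction error" concrete, here is a minimal sketch on a toy model. It makes several assumptions that are not from the study: the classifier is a tiny logistic regression with made-up weights, the "image" has only four pixels, and the search uses small signed-gradient steps rather than the optimization procedure used in the paper. All names and numbers are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic-regression classifier."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(v):
    return (v > 0) - (v < 0)


def adversarial_perturbation(weights, bias, x, label, step=0.01, max_steps=100):
    """Nudge each pixel in the direction that increases the loss,
    stopping as soon as the predicted class no longer matches `label`."""
    x_adv = list(x)
    for _ in range(max_steps):
        p = predict_proba(weights, bias, x_adv)
        if (p >= 0.5) != (label == 1):
            break  # the classifier is now wrong
        # Gradient of the cross-entropy loss with respect to the input.
        grad = [(p - label) * w for w in weights]
        # Small signed step, keeping pixel values inside [0, 1].
        x_adv = [min(1.0, max(0.0, xi + step * sign(g)))
                 for xi, g in zip(x_adv, grad)]
    return x_adv


# Hypothetical toy classifier and input (assumptions for illustration only).
weights, bias = [2.0, -3.0, 1.5, -1.0], 0.1
x, label = [0.6, 0.2, 0.5, 0.3], 1

x_adv = adversarial_perturbation(weights, bias, x, label)
print("original prob of class 1:   ", predict_proba(weights, bias, x))
print("adversarial prob of class 1:", predict_proba(weights, bias, x_adv))
print("largest pixel change:       ", max(abs(a - b) for a, b in zip(x, x_adv)))
```

In this four-pixel toy the change needed to flip the prediction is quite visible. In a linear model like this one, each pixel's small change adds to the total shift in the score, so an input with many thousands of pixels would need a much smaller change per pixel to get the same effect.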

In the authors' words: "For all the networks we studied (MNIST, QuocNet, AlexNet), for each sample, we always manage to generate very close, visually indistinguishable, adversarial examples that are misclassified by the original network."

The examples below show correctly predicted samples (left), adversarial examples (right), and the differences between them magnified 10x (center). The two columns look the same to a human but are completely different to a neural network.

This is a remarkable finding. Shouldn’t neural networks be immune to such small perturbations? It calls the continuity and stability of deep neural networks into question: the smoothness assumption no longer holds for them.

What’s more surprising is that the same perturbation can cause a different network, trained on a different subset of the data, to misclassify the same image. This means that adversarial examples are somewhat universal.

This result may change how we manage training and validation datasets and edge cases. Experiments support the idea that keeping a pool of adversarial examples and mixing it into the original training set improves generalization. Adversarial examples for the higher layers seem to be more useful than those for the lower layers.
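The idea of keeping a pool of adversarial examples and mixing it into the training set can be sketched as follows. This is a toy illustration under assumptions of our own, not the authors' training procedure: the model is a logistic regression trained with plain stochastic gradient descent, the data are two synthetic 2-D clusters, adversarial examples are made with a single signed-gradient step on the inputs only (not on higher layers), and every function name and hyperparameter is hypothetical.

```python
import math
import random


def sigmoid(z):
    z = max(-60.0, min(60.0, z))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sgd_epoch(w, b, data, lr=0.1):
    """One pass of stochastic gradient descent on the cross-entropy loss."""
    for x, y in data:
        err = predict_proba(w, b, x) - y
        w = [wi - lr * err * xi for wi, xi in zip(w, x)]
        b -= lr * err
    return w, b


def make_adversarial(w, b, x, y, eps=0.1):
    """One signed-gradient step that increases the loss on (x, y).
    The label y is kept unchanged."""
    err = predict_proba(w, b, x) - y
    return [xi + eps * ((err * wi > 0) - (err * wi < 0)) for xi, wi in zip(x, w)]


def train_with_adversarial_pool(data, epochs=20, eps=0.1, seed=0):
    rng = random.Random(seed)
    w, b = [0.0] * len(data[0][0]), 0.0
    pool = []  # adversarial examples collected so far
    for _ in range(epochs):
        # Craft adversarial versions of the training set against the current model.
        pool.extend((make_adversarial(w, b, x, y, eps), y) for x, y in data)
        # Mix the pool into the original training set and train on both.
        mixed = data + pool
        rng.shuffle(mixed)
        w, b = sgd_epoch(w, b, mixed)
    return w, b, pool


# Hypothetical toy data: two well-separated clusters in 2-D.
rng = random.Random(1)
data = ([([rng.gauss(2.0, 0.5), rng.gauss(2.0, 0.5)], 1) for _ in range(20)]
        + [([rng.gauss(-2.0, 0.5), rng.gauss(-2.0, 0.5)], 0) for _ in range(20)])

w, b, pool = train_with_adversarial_pool(data)
accuracy = sum((predict_proba(w, b, x) >= 0.5) == (y == 1) for x, y in data) / len(data)
print("pool size:", len(pool), "training accuracy:", accuracy)
```

The sketch only shows the bookkeeping: adversarial examples are regenerated against the current model each epoch and accumulated in a pool. Whether this improves generalization on a real task has to be measured on held-out data.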

The implications do not stop there.

The authors claim:

Although the set of adversarial negatives is dense, its probability is extremely low, so it is rarely observed in the test set.

But “rare” does not mean “never”. Imagine AI systems with such blind spots being applied to crime detection, security devices or banking systems. And if we keep digging deeper, do such adversarial images exist for our own brains? Scary, isn’t it?

Ran Bi is a master's student in the Data Science program at New York University. She has worked on several projects in machine learning, deep learning and big data analytics during her studies at NYU. With an undergraduate background in Financial Engineering, she is also interested in business analytics.
