Why the Data Scientist and Data Engineer Need to Understand Virtualization in the Cloud

This article covers the value of understanding the virtualization constructs for the data scientist and data engineer as they deploy their analysis onto all kinds of cloud platforms. Virtualization is a key enabling layer of software for these data workers to be aware of and to achieve optimal results from.

Isolation and Security

Separating one team’s work from another’s is important so that they can achieve the SLAs the business needs. Separation in the virtualization sphere is done through use of groupings of servers and virtual machines in their own confined resource pools – so as to limit their effect on external workloads.

Example: Different teams at a health care company use a Hortonworks cluster for certain analysis jobs, while running alongside a MapR cluster on the same infrastructure, for other analytic purposes.

Distributed firewalls in purpose-built virtual machines can be deployed to exclude access to certain clusters and data from others that happen to be on the same infrastructure. Because these distributed firewalls are in virtual machines, they can move with the workload and are not confined to physical boundaries.

Performance

Data Scientists are tasked with getting to the answer to their queries within a time boundary. Performance of the systems that execute their work is of prime importance to this community.

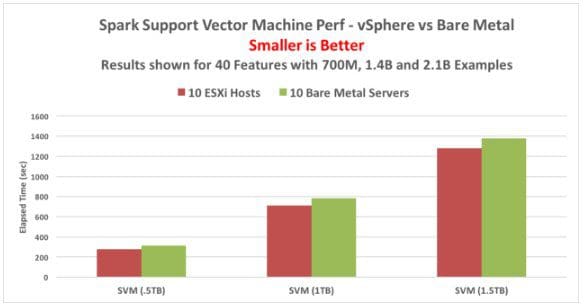

To test the performance of data science programs on virtual machines, VMware engineers executed a set of Machine Learning (ML) algorithms on Spark clusters, where each node in the cluster is held in a virtual machine. These tests were also executed on the same servers without virtualization present and the result of the testing were compared. Here is one example of the results.

These types of systems train a model with a large set of example data to begin with, so that the model will recognize certain patterns in the data. These example sets were in the 700 million to 2.1 billion entry range in the above setup. Each example had 40 features or different aspects of one record of the data (such as the amount of the transaction and the expiry date for a credit card purchase)

The model, once trained on a large number of examples, is then presented with a new transaction instance. This could be a credit card transaction or a customer’s application for a new credit card for example. The analysis model is asked to classify that particular transaction as a good one or a fraudulent one. Using statistical techniques, the model gives a binary answer to this question. This type of analysis is called a binary classification. Several other kinds of machine learning algorithms were tested also.

What the results show is that these ML algorithms, operating in virtual machines on vSphere, present as good performance and in some cases better performance than the equivalent bare metal implementation of the same test code. A full technical description of these workloads and the results seen is given in http://www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/techpaper/bigdata-perf-vsphere6.pdf

Virtualization Choices for Cloud Deployments

The technical choices that are open to the Data Scientist/Data Engineer and the infrastructure management team as they begin their deployment of analytics infrastructure software in the cloud are highlighted here.

- Sizing the virtual machines correctly

- Placement of the virtual machines onto the most appropriate host servers

- Configuration of different software roles within the virtual machines

- Assigning groups of computing resources to different teams

- Rapid provisioning

- Monitoring applications at the infrastructure level

Sizing Virtual Machines

You choose the amount of memory, number of virtual CPUs, storage layout and networking configuration for a virtual machine at creation time – and you can change it later if you need to. These are all software constructs rather than hardware ones, so they are more open to change. In big data practice so far, we have seen a minimum value of 4 vCPUs and a general rule of thumb value of 16 vCPUs for higher-end virtual machines running Hadoop/Spark type workloads. Applications that can make use of more than 16 vCPUs are rare but still feasible. Those would for code that is highly parallelized with significant numbers of concurrent threads within them, for example.

The amount of memory required on a virtual machine can be as large as your physical memory, although we see configured memory at 256Gb or 512Gb values for contemporary workloads. Basic operation for the classic Hadoop worker nodes can fit within a 128Gb memory boundary for many workloads. These sizes will be determined by the application platform architects, but it is also valuable for the end user, the data scientist and data engineer, to understand the constraints that apply to any one virtual machine. Given the hardware capacity, these numbers can be expanded in short order to see whether the work completes faster under new conditions.

Placement of the Virtual Machines onto Servers

In some cloud environments, you get to make the choice about fitting virtual machine onto host servers and the racks that contain them; in others you don’t. Where you are offered this choice, you can make decisions that will optimize your application’s performance. For example, in private clouds, you can choose to not overcommit the physical memory or physical CPU power of your servers with too many virtual machines or too large a configuration of virtual machine resources. Avoiding this over-commitment of physical resources generally helps the performance of those applications that are resource intensive, such as data analysis ones.

A corollary question here is how many virtual machines there should be on any one physical host server. In our testing work with virtualization, two to four suitably-sized virtual machines per server can yield good results as a starting point – and this number can be increased as experience grows with the platform and the workload. Where there is visibility to the server hardware type and configuration available to you, then NUMA boundaries should be respected in sizing your virtual machines, so as to get the absolute best performance from the machine. The virtualization best practices document mentioned above has detailed technical advice in this area.

Different Software Roles in the Virtual Machines

In most contemporary distributed data platforms, the software process roles are separated into “master” and “worker” nodes. The controlling processes run on the former and the executors that drive individual parts of the work are run in the workers. You create a template virtual machine (a “golden master”) for each of these different roles and then copy or clone that virtual machine depending on how many instances of that role you need. There may be an option to install the analysis software components themselves into the template virtual machine and thereby save on repeating that installation when it comes to instantiation of the clones or members of the cluster. These nodes are customizable with new software services at any time in the lifetime of that cluster, through the vendors’ management tools. We can likewise de-commission certain roles from the cluster in the same way, such as when we need fewer workers to carry out the analysis work.

Assigning Groups of Computing Resources to Different Teams

An inherent value of the virtualization approach for the cloud is the pooling of the hardware resources, so that parts of the total CPU/memory aggregate power can be carved out and dedicated for specific purposes and teams. Virtualization includes the concept of a “resource pool” with the capability to reserve a set compute and memory power for this purpose (across several machines). One department’s set of virtual machines lives within the confined space of its resource pool while a separate department lives in another. These may be adjacent to each other physically. When needed, more compute resources can be allocated to one or the other dynamically. This gives the manager of a data science department that has competing requests from his/her teams much more control over the allocations of resources, depending on the needs and relative importance of each team’s project work.

Rapid Provisioning

The creation of a set of virtual machines for a data scientists’ use is of course much more rapid than providing them with new physical machines, yielding better time to productive work. The further flexibility for expansion and contraction of the population of virtual machines in that set is even more important. In larger deployments of analytic applications that we have seen, rapid expansion occurs in the initial stages, going from 20 nodes (virtual machines) to over 300 nodes in a single cluster. As time moves on and new types of workload appear, that large infrastructure may contract, so as to fit a new one beside it on the same hardware collection, though separated into a different resource pool. That type of flexibility is a function of virtualization. The strict ownership boundaries that delineated private collections of servers for individual teams are broken down here.

Monitoring Applications at the Infrastructure Level

The data scientist/engineer wants to know why the query is taking so long to complete. We know this phenomenon well from the history of databases of various kinds. SQL engines are among the most popular layers on top of big data infrastructure today – indeed, almost every vendor has got one. There is a constant need to find the inefficient or poorly structured query that is hogging the system. Virtual machines and resource pools provide isolation of that rogue query to begin with. But further to that, if the cloud provider allows it, being able to see the effects of your code at the infrastructure level provides valuable data towards tracing its effects and optimizing its execution time.

Cloud Infrastructure is on the Move

In our opening remarks, we mentioned public, private and hybrid clouds. There is a wide interest in using common infrastructure and management tools across these types of clouds. When these base elements are the same for private and public cloud, then applications can live on either, as best fits the business need. In fact, the application can move from one to the other in an orderly fashion, without having to change. The lines will blur for the data scientist/engineer as a particular analysis work project may start off as an experiment in a public cloud and as it matures, it migrates to a private cloud for optimization of its performance. The reverse move may also take place. Announcements were made in late 2016 that the full VMware Software Defined Data Center portfolio will execute in AWS as a service. This common infrastructure across private and public cloud extends the flexibility and choice for the data scientist/data engineer. Analysis workloads on the VMware Cloud on AWS may now reach into S3 storage on AWS in a local fashion, within a common data center, thus bringing down the latency of access for data.

In this article, we have seen the value of understanding the virtualization constructs for the data scientist and data engineer as they deploy their analysis onto all kinds of cloud platforms. Virtualization is a key enabling layer of software for these data workers to be aware of and to achieve optimal results from.

Big Data/Hadoop on VMware vSphere - Reference Materials

Deployment Guides:

- Virtualizing Hadoop - a Deployment Guide

www.vmware.com/bde Under Resources -> Getting Started, or more directly:

www.vmware.com/files/pdf/products/vsphere/Hadoop-Deployment-Guide-USLET.pdf - Deploying Virtualized Cloudera CDH on vSphere using Isilon Storage - Technical Guide from EMC/Isilon

hsk-cdh.readthedocs.org/en/latest/hsk.html#deploy-a-cloudera-hadoop-cluster

or find the latest version at community.emc.com/docs/DOC-26892 - Deploying Virtualized Hortonworks HDP on vSphere using Isilon Storage - Technical Guide from EMC/Isilon

hsk-hwx.readthedocs.org/en/latest/hsk.html#deploy-a-hortonworks-hadoop-cluster-with-isilon-for-hdfs

or as above community.emc.com/docs/DOC-26892

Reference Architectures:

- Cloudera Reference Architecture - Isilon version

www.cloudera.com/content/cloudera/en/documentation/reference-architecture/latest/PDF/cloudera_ref_arch_vmware_isilon.pdf - Cloudera Reference Architecture – Direct Attached Storage version

www.cloudera.com/content/cloudera/en/documentation/reference-architecture/latest/PDF/cloudera_ref_arch_vmware_local_storage.pdf - Big Data with Cisco UCS and EMC Isilon: Building a 60 Node Hadoop Cluster (using Cloudera)

www.cisco.com/c/dam/en/us/td/docs/unified_computing/ucs/UCS_CVDs/Cisco_UCS_and_EMC_Isilon-with-Cloudera_CDH5.pdf - Deploying Hortonworks Data Platform (HDP) on VMware vSphere – Technical Reference Architecture

hortonworks.com/wp-content/uploads/2014/02/1514.Deploying-Hortonworks-Data-Platform-VMware-vSphere-0402161.pdf

Case Studies:

- Adobe Deploys Hadoop-as-a-Service on VMware vSphere

www.vmware.com/files/pdf/products/vsphere/VMware-vSphere-Adobe-Deploys-HAAS-CS.pdf - Virtualizing Hadoop in Large-Scale Infrastructures – technical white paper by EMC

community.emc.com/docs/DOC-41473 - Skyscape Cloud Services Deploys Hadoop in the Cloud on vSphere

www.vmware.com/files/pdf/products/vsphere/VMware-vSphere-Skyscape-Cloud-Services-Deploys-Hadoop-Cloud.pdf - Virtualizing Big Data at VMware IT – Starting Out at Small Scale

blogs.vmware.com/vsphere/2015/11/virtualizing-big-data-at-vmware-it-starting-out-at-small-scale.html

Performance:

- Big Data Performance on vSphere® 6 – Best Practices for Optimizing Virtualized Big Data Applications (2016)

www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/techpaper/bigdata-perf-vsphere6.pdf - Virtualized Hadoop Performance with VMware vSphere® 6 on High-Performance Servers (2015)

www.vmware.com/resources/techresources/10452 - Virtualized Hadoop Performance with VMware vSphere 5.1

www.vmware.com/resources/techresources/10360

There are some very useful best practices in the above two technical papers. - A Benchmarking Case Study of Virtualized Hadoop Performance on vSphere 5

www.vmware.com/resources/techresources/10222 - Transaction Processing Council – TPCx-HS Benchmark Results (Cloudera on VMware performance, submitted by Dell)

www.tpc.org/tpcx-hs/results/tpcxhs_results.asp - ESG Lab Review: VCE vBlock Systems with EMC Isilon for Enterprise Hadoop

www.esg-global.com/lab-reports/esg-lab-review-vce-vblock-systems-with-emc-isilon-for-enterprise-hadoop/

labs.vmware.com/flings/big-data-extensions-for-vsphere-standard-edition

Other vSphere Features and Big Data:

- Protecting Hadoop with VMware vSphere 5 Fault Tolerance

www.vmware.com/files/pdf/techpaper/VMware-vSphere-Hadoop-FT.pdf - Toward an Elastic Elephant – Enabling Hadoop for the Cloud

labs.vmware.com/vmtj/toward-an-elastic-elephant-enabling-hadoop-for-the-cloud - Apache Flume and Apache Scoop Data Ingestion to Apache Hadoop Clusters on VMware vSphere

www.vmware.com/files/pdf/products/vsphere/VMware-vSphere-Data-Ingestion-Solution-Guide.pdf - Hadoop Virtualization Extensions (HVE):

www.vmware.com/files/pdf/Hadoop-Virtualization-Extensions-on-VMware-vSphere-5.pdf

VMware CTO and Cloudera CTO Interview videos and Adobe Reference video:

hwww.youtube.com/playlist?list=PL9MeVsU0uG662xAjTB8XSD83GXSDuDzuk

Bio: Justin Murray (@johjustinmurray) is a Technical Marketing Architect at VMware in Palo Alto, CA. He has worked in various technical and alliances roles at VMware since 2007. Justin works with VMware’s customers and field engineering to create guidelines and best practices for using virtualization technology for big data. He has spoken at a variety of conferences on these subjects and has published blogs, white papers and other materials in this field.

Related: