37 Reasons why your Neural Network is not working

Over the course of many debugging sessions, I’ve compiled my experience along with the best ideas around in this handy list. I hope they will be useful to you.

III. Implementation issues

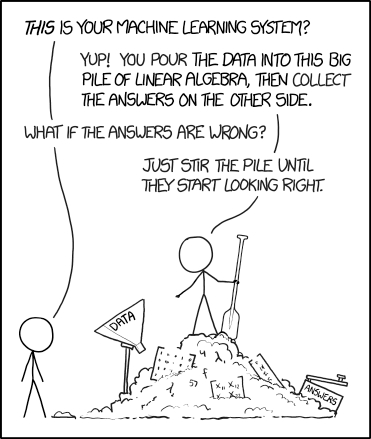

Credit: https://xkcd.com/1838/

16. Try solving a simpler version of the problem

This will help with finding where the issue is. For example, if the target output is an object class and coordinates, try limiting the prediction to object class only.

17. Look for correct loss “at chance”

Again from the excellent CS231n: Initialize with small parameters, without regularization. For example, if we have 10 classes, performing at chance means we will get the correct class 10% of the time. Since the Softmax loss is the negative log probability of the correct class, the initial loss should be close to -ln(0.1) = 2.302.

After this, try increasing the regularization strength, which should increase the loss.
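A quick way to run this check is to compare the loss on the first batch against -ln(1/C) for C classes. The plain-Python sketch below uses a hand-written, numerically stable softmax cross-entropy; the function name and the tiny Gaussian logits (a stand-in for the output of a freshly initialized network) are illustrative assumptions.

```python
import math
import random

def softmax_cross_entropy(logits, target):
    """Negative log softmax probability of the target class (numerically stable)."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

num_classes = 10
expected_loss = -math.log(1.0 / num_classes)  # about 2.302 for 10 classes

# Stand-in for a freshly initialized network: logits near zero give near-uniform probabilities
rng = random.Random(0)
batch = [([rng.gauss(0.0, 1e-3) for _ in range(num_classes)], rng.randrange(num_classes))
         for _ in range(32)]
initial_loss = sum(softmax_cross_entropy(z, t) for z, t in batch) / len(batch)

print(f"expected {expected_loss:.4f}, observed {initial_loss:.4f}")
```

If the observed value is far from the expected one, the initialization scale or the loss computation is a good first suspect.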

18. Check your loss function

If you implemented your own loss function, check it for bugs and add unit tests. Often, my loss would be slightly incorrect and hurt the performance of the network in a subtle way.
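As an illustration of such unit tests, here is a hypothetical hand-written binary cross-entropy with a few property checks: a known value at p = 0.5, near-zero loss for perfect predictions, symmetry under flipping labels and probabilities, and a large penalty for confident mistakes. These are examples of what to test, not an exhaustive suite; in a real project they would live in your test runner (e.g. pytest).

```python
import math

def binary_cross_entropy(probs, labels, eps=1e-12):
    """Mean binary cross-entropy for predicted probabilities and 0/1 labels (hypothetical custom loss)."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp so log never sees exactly 0
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)

def test_binary_cross_entropy():
    # Known value: p = 0.5 gives ln 2 whatever the label
    assert abs(binary_cross_entropy([0.5], [1]) - math.log(2)) < 1e-12
    assert abs(binary_cross_entropy([0.5], [0]) - math.log(2)) < 1e-12
    # Perfect predictions give (almost) zero loss
    assert binary_cross_entropy([1.0, 0.0], [1, 0]) < 1e-6
    # Symmetry: flipping both p -> 1 - p and y -> 1 - y leaves the loss unchanged
    a = binary_cross_entropy([0.8, 0.3], [1, 0])
    b = binary_cross_entropy([0.2, 0.7], [0, 1])
    assert abs(a - b) < 1e-12
    # A confident wrong prediction is penalized heavily
    assert binary_cross_entropy([0.01], [1]) > 4.0

test_binary_cross_entropy()
print("all loss tests passed")
```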

19. Verify loss input

If you are using a loss function provided by your framework, make sure you are passing to it what it expects. For example, in PyTorch I would mix up NLLLoss and CrossEntropyLoss: the former expects log-probabilities (e.g. the output of a log-softmax), while the latter expects raw, unnormalized scores (logits) and applies the log-softmax itself.
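The mismatch is easy to reproduce without a framework. The plain-Python sketch below imitates the documented semantics of the two PyTorch losses for a single example; the helper names are hypothetical and are not PyTorch's API. Feeding log-probabilities to the NLL-style loss matches cross-entropy on raw logits, while feeding raw logits to NLL, or probabilities to cross-entropy, silently produces a different number.

```python
import math

def log_softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum_exp for z in logits]

def softmax(logits):
    return [math.exp(v) for v in log_softmax(logits)]

def nll_loss(log_probs, target):
    """Mimics NLLLoss for one example: expects log-probabilities."""
    return -log_probs[target]

def cross_entropy(logits, target):
    """Mimics CrossEntropyLoss for one example: expects raw logits."""
    return nll_loss(log_softmax(logits), target)

logits = [2.0, 0.5, -1.0]
target = 0

correct_a = cross_entropy(logits, target)          # logits -> CrossEntropyLoss
correct_b = nll_loss(log_softmax(logits), target)  # log-softmax -> NLLLoss
wrong_a = nll_loss(logits, target)                 # raw logits -> NLLLoss: just -logit
wrong_b = cross_entropy(softmax(logits), target)   # probabilities -> CrossEntropyLoss: softmax applied twice

print(correct_a, correct_b, wrong_a, wrong_b)
```

Neither wrong combination raises an error, which is why this bug tends to show up only as a network that trains poorly.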

20. Adjust loss weights

If your loss is composed of several smaller loss functions, make sure their magnitudes relative to each other are correct. This might involve testing different combinations of loss weights.
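One way to see whether one term dominates is to log each weighted term's share of the total. The sketch below uses a hypothetical helper and made-up loss values for a classification-plus-box-regression setup; the weights are illustrative, not recommendations.

```python
def weighted_total(losses, weights):
    """Return the weighted sum of the loss terms and each term's share of that sum."""
    weighted = {name: weights[name] * value for name, value in losses.items()}
    total = sum(weighted.values())
    shares = {name: value / total for name, value in weighted.items()}
    return total, shares

# Made-up values: the box term is in squared pixels, so it is orders of magnitude larger
losses = {"classification": 2.3, "box_regression": 450.0}

total_equal, shares_equal = weighted_total(losses, {"classification": 1.0, "box_regression": 1.0})
total_scaled, shares_scaled = weighted_total(losses, {"classification": 1.0, "box_regression": 0.005})

print(shares_equal)   # box_regression makes up over 99% of the total
print(shares_scaled)  # the two terms now contribute roughly equally
```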

21. Monitor other metrics

Sometimes the loss is not the best predictor of whether your network is training properly. If you can, use other metrics like accuracy.
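For example, loss and accuracy can move independently. In the toy predictions below (made-up softmax outputs for three examples at two points in training), the mean cross-entropy roughly halves while accuracy stays at 2/3, because the predictions only become more confident without changing which class wins.

```python
import math

def mean_cross_entropy(probs, targets):
    return -sum(math.log(p[t]) for p, t in zip(probs, targets)) / len(targets)

def accuracy(probs, targets):
    # Compare the argmax of each probability vector with the target class
    hits = sum(max(range(len(p)), key=p.__getitem__) == t for p, t in zip(probs, targets))
    return hits / len(targets)

targets = [0, 1, 1]
early = [[0.60, 0.40], [0.45, 0.55], [0.70, 0.30]]
later = [[0.90, 0.10], [0.20, 0.80], [0.55, 0.45]]

for name, probs in (("early", early), ("later", later)):
    print(name, round(mean_cross_entropy(probs, targets), 3), accuracy(probs, targets))
```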

22. Test any custom layers

Did you implement any of the layers in the network yourself? Check and double-check to make sure they are working as intended.

23. Check for “frozen” layers or variables

Check if you unintentionally disabled gradient updates for some layers/variables that should be learnable.

24. Increase network size

Maybe the expressive power of your network is not enough to capture the target function. Try adding more layers or more hidden units in fully connected layers.

25. Check for hidden dimension errors

If your input looks like (k, H, W) = (64, 64, 64) it’s easy to miss errors related to wrong dimensions. Use weird numbers for input dimensions (for example, different prime numbers for each dimension) and check how they propagate through the network.
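Here is a small illustration, assuming NumPy and a hypothetical helper that converts a (k, H, W) array to channels-last (H, W, k). The buggy version swaps H and W. With the (64, 64, 64) input the output shape is identical either way, while distinct primes (3, 5, 7) make the mistake visible in the shape.

```python
import numpy as np

def to_channels_last(x):
    """Intended behavior: (k, H, W) -> (H, W, k)."""
    return np.transpose(x, (1, 2, 0))

def to_channels_last_buggy(x):
    # Bug: the axis order puts W before H, producing (W, H, k)
    return np.transpose(x, (2, 1, 0))

x_square = np.zeros((64, 64, 64))
print(to_channels_last(x_square).shape, to_channels_last_buggy(x_square).shape)
# both (64, 64, 64): the bug is invisible

x_prime = np.zeros((3, 5, 7))  # k=3, H=5, W=7
print(to_channels_last(x_prime).shape, to_channels_last_buggy(x_prime).shape)
# (5, 7, 3) vs (7, 5, 3): the mismatch exposes the bug
```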

26. Explore Gradient checking

If you implemented backpropagation by hand, gradient checking (comparing your analytic gradients with numerical estimates) makes sure that it works as it should. More info: 1 2 3.
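A minimal sketch of the idea in plain Python, assuming a toy scalar function in place of a real loss: compare a hand-derived gradient with a centered finite-difference estimate and look at their relative error. As a rough guide from CS231n, a relative error below about 1e-7 is reassuring, while values around 1e-2 or larger usually mean the gradient is wrong.

```python
import math

def loss(w):
    # Toy stand-in for a loss: sum of w_i^2 * sin(w_i)
    return sum(wi * wi * math.sin(wi) for wi in w)

def analytic_grad(w):
    # Hand-derived: d/dw (w^2 sin w) = 2w sin w + w^2 cos w
    return [2 * wi * math.sin(wi) + wi * wi * math.cos(wi) for wi in w]

def buggy_grad(w):
    # Deliberately wrong: the w^2 cos w term is missing
    return [2 * wi * math.sin(wi) for wi in w]

def numerical_grad(f, w, h=1e-5):
    grad = []
    for i in range(len(w)):
        w_plus, w_minus = list(w), list(w)
        w_plus[i] += h
        w_minus[i] -= h
        grad.append((f(w_plus) - f(w_minus)) / (2 * h))  # centered difference
    return grad

def relative_error(a, b):
    diff = math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))
    scale = math.sqrt(sum(x * x for x in a)) + math.sqrt(sum(y * y for y in b))
    return diff / max(scale, 1e-12)

w = [0.3, -1.2, 2.5]
num = numerical_grad(loss, w)
print("correct:", relative_error(analytic_grad(w), num))  # tiny
print("buggy:  ", relative_error(buggy_grad(w), num))     # large
```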

IV. Training issues

Credit: http://carlvondrick.com/ihog/

27. Solve for a really small dataset

Overfit a small subset of the data and make sure it works. For example, train with just 1 or 2 examples and see if your network can learn to differentiate these. Move on to more samples per class.
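As a toy version of this sanity check, the plain-Python sketch below fits a logistic-regression "network" to two made-up examples with plain gradient descent. With a correctly wired model and loss, the training loss should approach zero; if your real network cannot manage the equivalent on one or two examples, suspect the implementation first.

```python
import math

# Two made-up examples with different labels
X = [[1.0, 2.0], [-1.5, 0.5]]
y = [1, 0]

w, b, lr = [0.0, 0.0], 0.0, 0.5

def predict(x):
    z = w[0] * x[0] + w[1] * x[1] + b
    return 1.0 / (1.0 + math.exp(-z))  # sigmoid

for step in range(1000):
    grad_w, grad_b, loss = [0.0, 0.0], 0.0, 0.0
    for x, t in zip(X, y):
        p = predict(x)
        loss += -math.log(p) if t == 1 else -math.log(1.0 - p)
        err = p - t  # derivative of binary cross-entropy w.r.t. the pre-sigmoid output
        grad_w = [grad_w[0] + err * x[0], grad_w[1] + err * x[1]]
        grad_b += err
    n = len(X)
    w = [w[0] - lr * grad_w[0] / n, w[1] - lr * grad_w[1] / n]
    b -= lr * grad_b / n
    loss /= n
    if step % 200 == 0:
        print(step, round(loss, 4))

print("final loss:", loss, "predictions:", [round(predict(x), 3) for x in X])
```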

28. Check weights initialization

If unsure, use Xavier or He initialization. Also, your initialization might be leading you to a bad local minimum, so try a different initialization and see if it helps.
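For reference, the usual formulas are sketched below in plain Python: Glorot/Xavier uniform draws from U(-a, a) with a = sqrt(6 / (fan_in + fan_out)), and He normal draws from N(0, 2 / fan_in). The function names and the list-of-lists weight layout are illustrative; frameworks ship their own versions (in PyTorch, `torch.nn.init.xavier_uniform_` and `torch.nn.init.kaiming_normal_`). Changing the random seed is a cheap way to try a different initialization.

```python
import math
import random

def xavier_uniform(fan_in, fan_out, rng):
    # Glorot/Xavier uniform: U(-a, a) with a = sqrt(6 / (fan_in + fan_out))
    a = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-a, a) for _ in range(fan_in)] for _ in range(fan_out)]

def he_normal(fan_in, fan_out, rng):
    # He (Kaiming) normal, intended for ReLU layers: std = sqrt(2 / fan_in)
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]

def empirical_std(matrix):
    values = [v for row in matrix for v in row]
    mean = sum(values) / len(values)
    return math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))

fan_in, fan_out = 256, 128
rng = random.Random(42)  # change the seed to try a different initialization
W_xavier = xavier_uniform(fan_in, fan_out, rng)
W_he = he_normal(fan_in, fan_out, rng)

# Target std: sqrt(2 / (fan_in + fan_out)) for Xavier, sqrt(2 / fan_in) for He
print(empirical_std(W_xavier), math.sqrt(2.0 / (fan_in + fan_out)))
print(empirical_std(W_he), math.sqrt(2.0 / fan_in))
```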

29. Change your hyperparameters

Maybe you are using a particularly bad set of hyperparameters. If feasible, try a grid search.
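A grid search is a loop over the Cartesian product of candidate values. In the sketch below, `train_and_evaluate` is a hypothetical placeholder (a toy function with a known minimum) standing in for a real training run that returns a validation loss; the grid values are illustrative.

```python
import itertools
import math

def train_and_evaluate(lr, batch_size, weight_decay):
    """Hypothetical placeholder for a real training run; returns a validation loss.
    This toy version is smallest at lr=1e-3, batch_size=64, weight_decay=1e-4."""
    return ((math.log10(lr) + 3) ** 2
            + (math.log2(batch_size) - 6) ** 2 / 10
            + (math.log10(weight_decay) + 4) ** 2 / 5)

grid = {
    "lr": [1e-4, 1e-3, 1e-2],
    "batch_size": [32, 64, 128],
    "weight_decay": [1e-5, 1e-4, 1e-3],
}

results = []
for values in itertools.product(*grid.values()):
    params = dict(zip(grid.keys(), values))
    results.append((train_and_evaluate(**params), params))

best_loss, best_params = min(results, key=lambda r: r[0])
print(f"tried {len(results)} configurations; best {best_params} with loss {best_loss:.3f}")
```

The number of runs grows multiplicatively (here 3 × 3 × 3 = 27), which is why a full grid is only feasible for a handful of hyperparameters.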

30. Reduce regularization

Too much regularization can cause the network to underfit badly. Reduce regularization such as dropout, batch norm, weight/bias L2 regularization, etc. In the excellent “Practical Deep Learning for coders” course, Jeremy Howard advises getting rid of underfitting first. This means you overfit the training data sufficiently, and only then address overfitting.

31. Give it time

Maybe your network needs more time to train before it starts making meaningful predictions. If your loss is steadily decreasing, let it train some more.

32. Switch from Train to Test mode

In some frameworks, layers like Batch Norm and Dropout behave differently during training and testing. Switching to the appropriate mode might help your network to predict properly.
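To see why the mode matters, here is a plain-Python sketch of inverted dropout (the variant that rescales during training, as PyTorch's `nn.Dropout` does). In training mode it zeroes units at random and scales the survivors; in evaluation mode it passes inputs through unchanged. In PyTorch the switch is `model.train()` / `model.eval()`; the `dropout` function here is an illustrative stand-in, not a framework API.

```python
import random

def dropout(x, p, training, rng):
    """Inverted dropout: in training mode, zero each unit with probability p and scale
    the survivors by 1 / (1 - p); in evaluation mode, return the input unchanged."""
    if not training or p == 0.0:
        return list(x)
    keep = 1.0 - p
    return [v / keep if rng.random() < keep else 0.0 for v in x]

rng = random.Random(0)
x = [1.0] * 10_000

train_out = dropout(x, p=0.5, training=True, rng=rng)
eval_out = dropout(x, p=0.5, training=False, rng=rng)

print("train: fraction zeroed", train_out.count(0.0) / len(train_out),
      "mean", sum(train_out) / len(train_out))
print("eval:  identical to input", eval_out == x)
```

The rescaling keeps the expected activation the same in both modes, but a model accidentally left in training mode at test time still gives a different, random prediction on every call.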

33. Visualize the training

- Monitor the activations, weights, and updates of each layer. Make sure their magnitudes match. For example, the magnitude of the updates to the parameters (weights and biases) should be roughly 1e-3 times the magnitude of the parameters themselves.

- Consider a visualization library like TensorBoard or Crayon. In a pinch, you can also print weights/biases/activations.

- Be on the lookout for layer activations with a mean much larger than 0. Try Batch Norm or ELUs.

- Deeplearning4j points out what to expect in histograms of weights and biases:

“For weights, these histograms should have an approximately Gaussian (normal) distribution, after some time. For biases, these histograms will generally start at 0, and will usually end up being approximately Gaussian (One exception to this is for LSTM). Keep an eye out for parameters that are diverging to +/- infinity. Keep an eye out for biases that become very large. This can sometimes occur in the output layer for classification if the distribution of classes is very imbalanced.”

- Check layer updates, they should have a Gaussian distribution.
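The update-to-parameter ratio from the first bullet is cheap to compute from a layer's parameters before and after an optimizer step. The plain-Python sketch below uses a made-up weight vector, made-up gradients, and a plain SGD step; the ~1e-3 target is a rule of thumb, not a hard threshold.

```python
import math

def l2_norm(v):
    return math.sqrt(sum(x * x for x in v))

def update_ratio(params_before, params_after):
    """||update|| / ||params||; works for any optimizer because it uses the step actually taken."""
    update = [a - b for a, b in zip(params_after, params_before)]
    return l2_norm(update) / max(l2_norm(params_before), 1e-12)

weights = [0.5, -0.3, 0.8, 0.1]  # made-up layer weights
grads = [0.2, -0.1, 0.4, 0.05]   # made-up gradients

for lr in (1.0, 1e-2, 1e-5):
    stepped = [w - lr * g for w, g in zip(weights, grads)]  # one plain SGD step
    print(f"lr={lr:g}: update/param ratio = {update_ratio(weights, stepped):.1e}")
```

A ratio far above 1e-3 hints that the learning rate may be too high; far below, that it may be too low.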

34. Try a different optimizer

Your choice of optimizer shouldn’t prevent your network from training unless you have selected particularly bad hyperparameters. However, the proper optimizer for a task can be helpful in getting the most training in the shortest amount of time. The paper which describes the algorithm you are using should specify the optimizer. If not, I tend to use Adam or plain SGD with momentum.

Check this excellent post by Sebastian Ruder to learn more about gradient descent optimizers.

35. Exploding / Vanishing gradients

- Check layer updates, as very large values can indicate exploding gradients. Gradient clipping may help.

- Check layer activations. From Deeplearning4j comes a great guideline: “A good standard deviation for the activations is on the order of 0.5 to 2.0. Significantly outside of this range may indicate vanishing or exploding activations.”
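Both checks can be sketched in plain Python. Clipping by global norm rescales all gradients together when their combined L2 norm exceeds a threshold, which preserves the update direction; PyTorch provides this as `torch.nn.utils.clip_grad_norm_`. The nested-list gradient layout, the helper names, and the example numbers are illustrative, and the 0.5 to 2.0 range is the Deeplearning4j guideline quoted above.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Scale every gradient by the same factor so that the global L2 norm is at most max_norm.
    Returns the (possibly) clipped gradients and the norm before clipping."""
    total_norm = math.sqrt(sum(g * g for layer in grads for g in layer))
    scale = min(1.0, max_norm / (total_norm + 1e-12))
    return [[g * scale for g in layer] for layer in grads], total_norm

def activation_std_ok(activations, low=0.5, high=2.0):
    """Check a layer's activation standard deviation against the 0.5 to 2.0 guideline."""
    mean = sum(activations) / len(activations)
    std = math.sqrt(sum((a - mean) ** 2 for a in activations) / len(activations))
    return low <= std <= high, std

grads = [[3.0, 4.0], [0.0, 12.0]]  # made-up per-layer gradients; global norm is 13
clipped, norm_before = clip_by_global_norm(grads, max_norm=1.3)
print(norm_before, clipped)  # 13.0, every entry scaled by about 0.1

print(activation_std_ok([0.01, -0.02, 0.015, -0.005]))  # tiny spread: possible vanishing activations
```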

36. Increase/Decrease Learning Rate

A low learning rate will cause your model to converge very slowly.

A high learning rate will quickly decrease the loss in the beginning but might have a hard time finding a good solution.

Play around with your current learning rate by multiplying it by 0.1 or 10.
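The effect is easy to see on a toy problem. This plain-Python sketch runs gradient descent on f(x) = x^2 (gradient 2x), starting from x = 1, at a base learning rate and at 0.1× and 10× that value; the base rate of 0.15 is an arbitrary choice for illustration. On this particular function any rate above 1.0 diverges outright, an extreme case of a learning rate that is too high.

```python
def descend(lr, steps=50, x0=1.0):
    """Gradient descent on f(x) = x**2; returns the distance to the minimum at 0 after `steps` updates."""
    x = x0
    for _ in range(steps):
        x -= lr * 2 * x  # gradient of x**2 is 2x
    return abs(x)

base_lr = 0.15
for lr in (base_lr * 0.1, base_lr, base_lr * 10):
    print(f"lr={lr:g}: distance from minimum after 50 steps = {descend(lr):.2e}")
```

The smallest rate is still far from the minimum after 50 steps, the base rate gets very close, and the largest rate blows up.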

37. Overcoming NaNs

Getting a NaN (Not-a-Number) is a much bigger issue when training RNNs (from what I hear). Some approaches to fix it:

- Decrease the learning rate, especially if you are getting NaNs in the first 100 iterations.

- NaNs can arise from division by zero or from taking the natural log of zero or of a negative number.

- Russell Stewart has great pointers on how to deal with NaNs.

- Try evaluating your network layer by layer and see where the NaNs appear.
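For the last suggestion, a small helper can run an input through the layers one at a time and report the first one whose output is not finite. The sketch below assumes NumPy and uses a made-up three-step "network" whose log step receives zero and negative inputs; clamping the input of the log with a small epsilon (1e-8 here, an arbitrary choice) removes the non-finite values.

```python
import numpy as np

def first_nonfinite_layer(layers, x):
    """Apply (name, fn) layers in order; return (index, name) of the first layer whose
    output contains NaN or +/-inf, or None if every output is finite."""
    for i, (name, fn) in enumerate(layers):
        x = fn(x)
        if not np.all(np.isfinite(x)):
            return i, name
    return None

def unsafe_log(v):
    return np.log(v)  # log(0) -> -inf, log(negative) -> NaN

def safe_log(v, eps=1e-8):
    return np.log(np.clip(v, eps, None))  # clamp away from zero and negatives

def build(log_fn):
    return [
        ("affine", lambda v: 2.0 * v - 1.0),
        ("log", log_fn),
        ("relu", lambda v: np.maximum(v, 0.0)),
    ]

x = np.array([0.2, 0.5, 0.9])  # the affine step maps these to [-0.6, 0.0, 0.8]
with np.errstate(divide="ignore", invalid="ignore"):  # silence NumPy's warnings for the demo
    print(first_nonfinite_layer(build(unsafe_log), x))  # (1, 'log')
print(first_nonfinite_layer(build(safe_log), x))        # None
```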

Bio: Slav Ivanov is an Entrepreneur & ML Practitioner in Sofia, Bulgaria. He blogs about Machine Learning at https://blog.slavv.com . He previously built http://postplanner.com .

Original. Reposted with permission.
