PySpark SQL Cheat Sheet: Big Data in Python

PySpark is a Spark Python API that exposes the Spark programming model to Python - With it, you can speed up analytic applications. With Spark, you can get started with big data processing, as it has built-in modules for streaming, SQL, machine learning and graph processing.

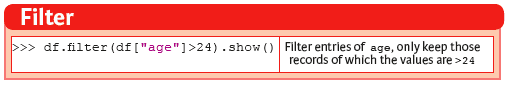

Filter

Filtering your Spark DataFrames is extremely easy: you use the filter() method on your DataFrame. Do make sure to pass in the condition on which you’re filtering!

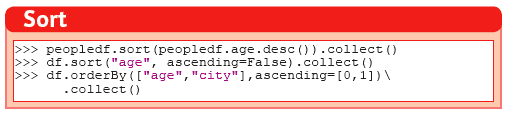

Sort

Also sorting your Spark DataFrame is easy peasy: make use of the sort() and orderBy() methods, in combination with collect() to retrieve your sorted DataFrame.

Remember: while show() prints the first n rows to the console, collect() returns all the records as a list of Row. Make sure that you use the latter when only when you’re working on small DataFrames, exactly because of what you have just read: the use of this method will make sure that all records are returned and will move all records back from the executor to the driver.

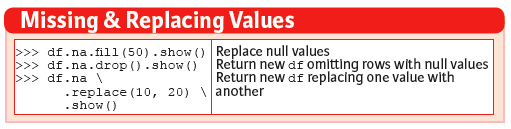

Missing & Replacing Values

To handle missing values, you can replace, fill or drop them - you can use the replace(), fill() and drop() methods to do this.

Note: the replace() method takes two arguments, namely, the value that you want to be replacing and the value with which you want to replace the original value.

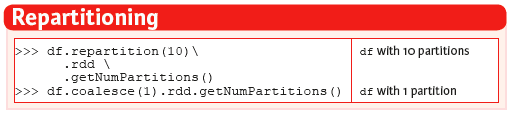

Repartitioning

Repartitioning is something that you’ll find yourself doing from time to time. You can use this method to increase or decrease the number of partitions in your DataFrame. However, you might want to consider the way you’re doing this operation. Consider the following examples:

You see that both the repartition() and coalesce() methods are used to repartition the DataFrame.

Note that, while repartition() creates equal-sized data partitions by means of a full data shuffle, coalesce() avoids this full shuffle by combining existing partitions.

Running SQL Queries Programmatically

Registering DataFrames as Views

You register Spark DataFrames as views so that you can query it using “real” SQL queries (and not the variants with methods which you saw earlier in this article).

The first step in querying your Spark DataFrames with “real” SQL is, of course, registering your DataFrames as views. When you’re doing this, it’s important to keep the scope of your project in mind - as the name already suggests, createTempView() creates a temporary view, while createGlobalTempView() creates a global temporary view. The latter is shared among all sessions and is kept alive until the Spark application terminates, while the former is session-scoped and will disappear if the session that creates it terminates.

Note that you can also use createOrReplaceTempView() to create a temporary view if there is none or replace one if there is already a view present.

Query Views

Now that you have made some views, as in the example above, where you made the views “people” and “customer”, you can use the sql() function on a SparkSession to run SQL queries programmatically - The result of this operation will be another DataFrame, as you can see in the examples above.

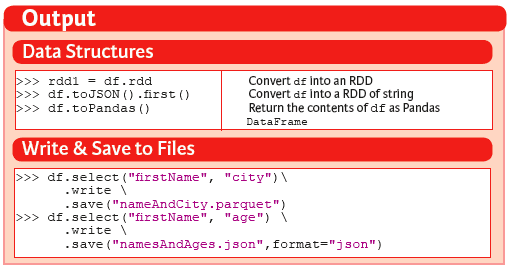

Output

Lastly, there’s the output to consider: your analyses will always have some results and you’ll want to save these results to another file, a Pandas DataFrame, … This section will cover some of the options that you have available to do this.

Data Structures

As already mentioned above, you can convert your Spark DataFrame into an RDD, a Pandas DataFrame, an RDD of string, … There are many possible data structures to which you can convert your Spark DataFrame but the three listed in the image above are probably the most common ones to be used.

Write & Save to Files

Writing and saving the results of your analysis is of key importance whenever you’re doing data science - The same holds, of course, when you’re working with big data. You’ll want to save your results to JSON or parquet files.

Remember: parquet files have a columnar file format available to any project in the Hadoop ecosystem. It doesn’t matter which data processing framework, data model or programming language you’re using! Parquet files provide efficient data compression and encoding schemes with enhanced performance to handle complex data in bulk. JSON or JavaScript Object Notation files, on the other hand, have an open-standard file format that uses human-readable text to transmit data objects consisting of attribute–value pairs and array data types (or any other serializable value).

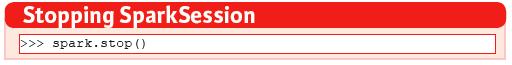

Stopping SparkSession

Before you leave, don’t forget to stop your SparkSession! Remember that you have assigned your SparkSession to the spark variable - You’ll need this exact variable and the stop() method to correctly stop your SparkSession.

This is just the beginning of your journey with Spark SQL. We hope that you don’t forget to keep your cheat sheet handy, of course, when you continue learning more about this exciting technology, its applications and its possibilities.

DataCamp is an online interactive education platform that that focuses on building the best learning experience specifically for Data Science. Our courses on R, Python, SQL, and Data Science are built around a certain topic, and combine video instruction with in-browser coding challenges so that you can learn by doing. You can start every course for free, whenever you want, wherever you want.

Karlijn Willems is a data science journalist and writes for the DataCamp community, focusing on data science education, the latest news and the hottest trends. She holds degrees in Literature and Linguistics and Information Management.

Related: