Neural Networks – an Intuition

Neural networks are among the most powerful algorithms used in machine learning and artificial intelligence. We attempt to outline their similarities with the human brain and how intuition plays a big part in this.

By Prateek Karkare, Research Associate at Nanyang Technological University.

Humans have always been fascinated by nature. The flight of birds led us to invent airplanes, shark skin inspired faster swimsuits, and numerous other machines draw their inspiration from nature. Today we are in the era of building intelligent machines, and there is no better inspiration than the brain. Humans are particularly blessed by evolution with a brain capable of performing the most complex tasks.

Since the early 1940s, scientists have been trying to build mathematical models and algorithms that mimic the computations performed inside the brain. Of course, we are still far from achieving the kind of feats the brain performs, but we are getting there.

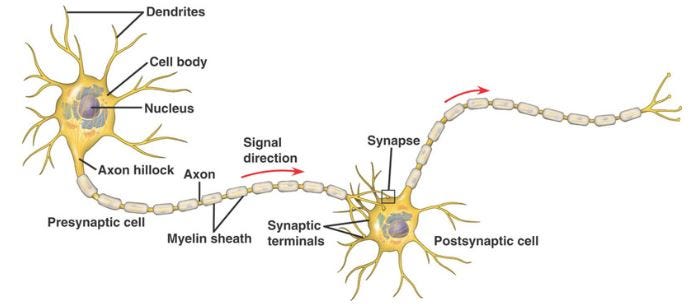

Several simplified learning models have been proposed in the quest to build intelligent machines, and the most popular among them is the artificial neural network (ANN), or simply a neural network. Neural networks are among the most powerful algorithms used in machine learning and artificial intelligence today. As the name suggests, the model draws inspiration from the neurons in our brain and the way they are connected. Let us take a quick peek inside our brain.

Source: http://biomedicalengineering.yolasite.com/neurons.php

Neurons are connected inside our brain as depicted in the picture above. This picture shows only 2 neurons connected to each other. In reality, thousands of neurons connect to a single neuron’s cell body through dendrites; on average, a single neuron connects to 10,000 other neurons. Let us simplify this picture to make an artificial neural network model.

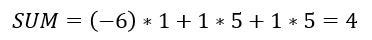

A multi-layer neural network

For now, assume that the cell body just holds a number, and that each connection arrow describes the direction of data flow and how strongly one neuron is connected to another. Don’t worry about what “strongly” means here; we will see that in a while. We have taken some inspiration from biology about neurons and their connectivity. Let’s see how this helps in doing some of the tasks our brain does.

Perceptron

Source: https://www.javascripttuts.com

Well, that’s Megatron.

The perceptron is a machine learning algorithm invented by Frank Rosenblatt in 1957. It is a linear classifier; you can read about what a linear classifier and a classification algorithm are here. Let’s look at a very small classification example: let us try to build a machine that identifies whether an object is a cricket ball or not.

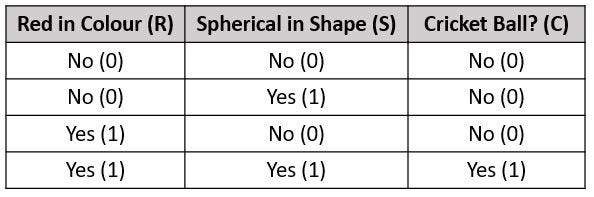

Let us arbitrarily choose some properties of this ball.

- It is red; we will call this property R of the ball

- It is spherical; we will call this property S of the ball

Depending on these arbitrarily chosen properties, also known as features, we will classify the object as a cricket ball or not. The table above tells us that if an object is red and spherical, it is a cricket ball; in all other cases, it is not. Let’s see how to do this with a neural network.

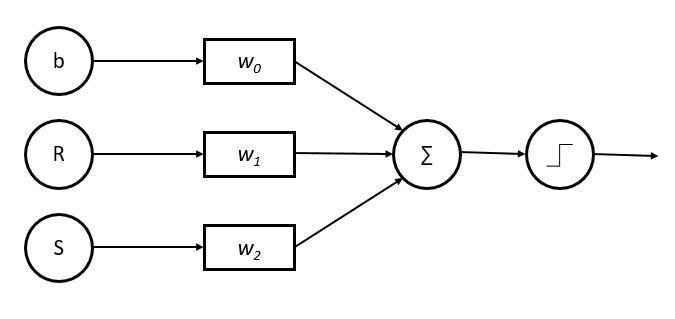

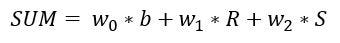

To build our super tiny brain, which can identify a cricket ball, we will take a neuron that just adds up its inputs and outputs the sum.

Breaking down the above figure: b, R and S are the input neurons, or simply the inputs to the network; w0, w1 and w2 are the strengths of their connections to the middle neuron, which sums up its weighted inputs. Here b is a constant called the bias. The last step, the rightmost neuron, is just a function called the activation function, which outputs 1 if its input is positive and 0 if its input is negative. Mathematically it looks like this:

If SUM > 0, Output = 1 (Yes); if SUM < 0, Output = 0 (No).

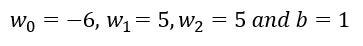
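Written out with the symbols from the figure, the computation can be reconstructed as follows. This is a sketch based on the description above; it assumes that each input is multiplied by its connection strength before summing, and that the case SUM = 0, which the text leaves unspecified, maps to 0.

$$
\text{SUM} = w_0\, b + w_1\, R + w_2\, S,
\qquad
\text{Output} =
\begin{cases}
1 & \text{if } \text{SUM} > 0 \\
0 & \text{otherwise}
\end{cases}
$$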

Let us look at how this helps in classifying our cricket ball. We choose some arbitrary numbers for our connection strengths w and our constant b. How we actually arrive at those values, that is, how the weights are learned by training the neural network, is a topic for part 2 of this series. For now let’s just assume we got these numbers from somewhere.

Let us plug in these values

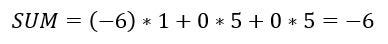

Case 1: When the object is neither a sphere (S=0) nor red (R=0)

The SUM<0 which means the output is 0 or No. Our perceptron says that this is not a cricket ball.

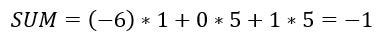

Case 2: When the object is a sphere (S=1) but not red (R=0)

The SUM<0 which means the output is 0 or No, not a cricket ball.

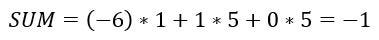

Case 3: When the object is not a sphere (S=0) but is red (R=1)

The SUM<0 which means the output is 0 or No, not a cricket ball.

Case 4: When the object is a sphere (S=1) and red (R=1)

The SUM>0 which means the output is 1. Et Voila! Our perceptron says it is a cricket ball.
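The four cases above can be checked with a few lines of Python. This is a minimal sketch, not the article's exact setup: the weights `W0`, `W1`, `W2` and the bias input `b = 1` are hypothetical values picked for illustration (the article's own numbers live in its figure), and a sum of exactly 0 is treated as "No".

```python
def perceptron(r, s, w0, w1, w2, b=1.0):
    """Weighted sum of the inputs followed by a step activation."""
    total = w0 * b + w1 * r + w2 * s
    # Step activation: 1 for a positive sum, 0 otherwise
    # (mapping a sum of exactly 0 to 0 is an assumption).
    return 1 if total > 0 else 0


# Hypothetical weights chosen for illustration only;
# they are NOT the values used in the article's figure.
W0, W1, W2 = -1.5, 1.0, 1.0


def classify_all():
    """Run the perceptron on every combination of R (red) and S (spherical)."""
    return {(r, s): perceptron(r, s, W0, W1, W2)
            for r in (0, 1) for s in (0, 1)}


if __name__ == "__main__":
    for (r, s), out in classify_all().items():
        label = "cricket ball" if out == 1 else "not a cricket ball"
        print(f"R={r} S={s} -> {out} ({label})")
```

With these assumed weights, only the red and spherical object produces a positive sum (−1.5 + 1 + 1 = 0.5), so it is the only one classified as a cricket ball.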

This is the most rudimentary idea behind a neural network. We connect a lot of these perceptrons in a particular manner, and what we get is a neural network. I’ve oversimplified the idea a little, but it still captures the essence of a perceptron.

Multi-layer Perceptron

We take this idea of a perceptron and stack many of them together to create layers of neurons; the result is called a multi-layer perceptron (MLP) or a neural network.

In our simplified perceptron model we used just a step function for the output. In practice, a lot of different transformations, or activation functions, are used.
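To make this concrete, here is a small sketch of the step function next to three activation functions that are commonly used in practice: sigmoid, tanh and ReLU. The article itself does not name these three, so treat the selection as an illustrative assumption rather than a list the author had in mind.

```python
import math


def step(x):
    """The step function from the perceptron example: 1 if positive, else 0."""
    return 1.0 if x > 0 else 0.0


def sigmoid(x):
    """Squashes any real number smoothly into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """Squashes any real number smoothly into the range (-1, 1)."""
    return math.tanh(x)


def relu(x):
    """Rectified linear unit: passes positive values, clips negatives to 0."""
    return max(0.0, x)


if __name__ == "__main__":
    for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
        print(f"x={x:+.1f}  step={step(x):.0f}  sigmoid={sigmoid(x):.3f}  "
              f"tanh={tanh(x):+.3f}  relu={relu(x):.1f}")
```

Unlike the step function, the other three change gradually with their input, which is one reason they are easier to work with when a network is trained.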

We chose our features, red color and spherical shape, manually and rather arbitrarily, but hand-picking features is not always practical for more complex tasks. The MLP addresses this problem to some extent. The inputs to an MLP could simply be the pixel values of an image; the hidden layer then combines and transforms these pixel values to create features. Essentially, each layer of perceptrons transforms the data and sends it to the following layer.

A 28 pixel x 28 pixel image of a handwritten digit

Suppose our MLP is trying to identify a digit by looking at images of handwritten digits similar to the one above. With an MLP we can pass the image’s pixel values directly to the input layer, without extracting any features from it first. The hidden layer then combines and transforms these pixels to create some features. Each neuron in the hidden layer tries to learn some feature as part of the training process. For instance, neuron 1 in the hidden layer might learn to respond to horizontal edges in the image, neuron 2 might respond to vertical edges, and so on. Now if the input image has a horizontal edge and a vertical edge together, neurons 1 and 2 in the hidden layer would respond, and the output neuron would combine these two features and might say it is a 7, since a 7 roughly consists of a vertical edge and a horizontal edge.
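The flow of data through such a network can be sketched in plain Python. Everything specific here is an assumption made for illustration: the weights are random and untrained, the "image" is random noise standing in for a real 28 x 28 digit, the hidden layer has 16 neurons, the output layer has 10 neurons (one per digit), and sigmoid is used as the hidden activation. The point is only to show how pixels become hidden features and then output scores.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum per neuron plus its bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def random_layer(n_in, n_out, rng):
    """Untrained layer with small random weights (hypothetical values)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
               for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def forward(pixels, hidden_layer, output_layer):
    """Pixels -> hidden features -> one score per digit."""
    hidden = [sigmoid(z) for z in dense(pixels, *hidden_layer)]
    scores = dense(hidden, *output_layer)
    return hidden, scores


rng = random.Random(0)
hidden_layer = random_layer(28 * 28, 16, rng)   # 784 pixels -> 16 features
output_layer = random_layer(16, 10, rng)        # 16 features -> 10 digits

pixels = [rng.random() for _ in range(28 * 28)]  # stand-in for a real image
hidden, scores = forward(pixels, hidden_layer, output_layer)
predicted = max(range(10), key=lambda d: scores[d])

print("hidden features:", len(hidden))
print("output scores:", len(scores))
print("digit with the highest score:", predicted)
```

Because the weights are random, the predicted digit is meaningless; training is what would turn those hidden features into something like the edge detectors described above.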

Of course, MLPs don’t always learn features that make obvious sense; each layer is essentially a transformation of the input data. For example, an MLP with 16 neurons in its hidden layer that takes a handwritten digit image as input learns features something like this:

Each square represents the features learned by one neuron in the hidden layer

As you can see, the patterns look more or less random and are hard to interpret. This inference process and the transformations inside an MLP are best explained in the YouTube series by 3Blue1Brown, What is a Neural Network. I highly encourage you to go to this channel and watch the videos to get an intuition about neural networks. A nice tool for visualizing some of what a neural network does is TensorFlow Playground, and if you have some background in linear algebra, the visualizations in this blog are nice.

Neural Networks Today and Tomorrow

So what tasks can a neural network perform apart from identifying a cricket ball or a handwritten digit? Today neural networks are deployed for a wide range of tasks: image recognition, voice recognition, natural language processing, time series analysis and many other applications. Image classification is one particular field where neural networks have become the de facto algorithm. On some image classification benchmarks, neural networks have matched or surpassed human accuracy. Of course, there are a lot of sophisticated techniques and math behind building such high-fidelity neural networks.

Source: xkcd.com

Neural networks are everywhere. Companies deploy them to recommend which video you might like to watch on YouTube and to recognize your voice and commands when you speak to Siri, Google Now or Alexa. Neural networks are now generating their own paintings and pieces of music. Still, there are a lot of challenges. Neural networks can be huge, with hundreds of layers and hundreds of neurons in each layer, which makes computation a big challenge for current hardware. Training neural networks is another huge challenge: several GPUs are employed together to train these networks, and training can take several hours to days.
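A rough way to see why size matters is to count the numbers a network has to store and update. The sketch below assumes plain fully connected layers, where every neuron has one weight per input plus one bias; the layer sizes are hypothetical examples, not figures from any particular production network.

```python
def count_parameters(layer_sizes):
    """Weights plus biases for a fully connected network.

    layer_sizes lists the number of neurons per layer, input layer first.
    """
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# The small digit network: 784 pixels -> 16 hidden -> 10 outputs.
small = count_parameters([784, 16, 10])

# A hypothetical deep network: 100 hidden layers of 500 neurons each.
large = count_parameters([784] + [500] * 100 + [10])

print(f"small network: {small:,} parameters")
print(f"large network: {large:,} parameters")
```

Every one of those parameters takes part in each prediction and is adjusted repeatedly during training, which is where the demand for hardware comes from.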

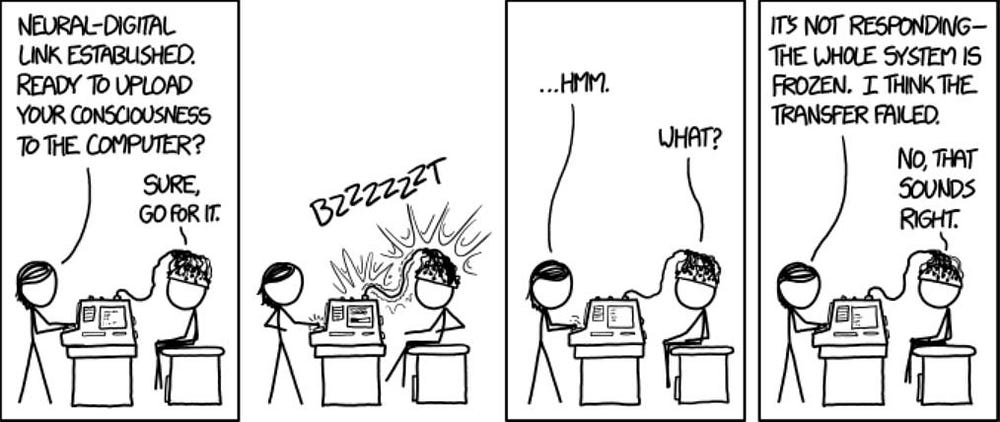

But they are the present and the future in our quest for building machines with intelligence and capabilities as sophisticated as the human brain. We are still far away from making an artificial brain which can someday “download” all of your consciousness creating a machine copy of yourself. But for now that is just science fiction!

Source: xkcd.com

Neural networks are a vast subject, and the idea behind this article is to spark enough curiosity to give you a small impetus to go and explore the topic. So go and click those YouTube and TensorFlow links in the article and have fun learning!

Look out for part 2 of this series, on how to train your D̶r̶a̶g̶o̶n NN.

Bio: Prateek Karkare is an Electrical engineer in training with a keen interest in Physics, Computer Sciences and Biology.

Original. Reposted with permission.
