Do you remember the first time you wrote SQL queries to analyse your data? Most of the time you probably just wanted to see the “top selling products” or the “count of product visits by week”. Why write SQL queries when you could simply ask what you have in mind in natural language?

This is now possible thanks to recent advances in NLP. You can not only use large language models (LLMs) as they are but also teach them new skills. This is called transfer learning: you use a pretrained model as a starting point, and even with a small labeled dataset you can get much better performance than by training on that data alone.

In this tutorial we are going to apply transfer learning to T5, Google's text-to-text generation model, using our custom data so that it can convert basic questions into SQL queries. We will add a new task to T5 called: translate English to SQL. At the end of this article you will have a trained model that can translate the following sample query:

Cars built after 2020 and manufactured in Italy

into the following SQL query:

SELECT name FROM cars WHERE location = 'Italy' AND date > 2020

You can find the Gradio demo here and the Layer Project here.

Build the Training Data

Unlike the common language to language translation datasets, we can build custom English to SQL translation pairs programmatically with the help of templates. Now, time to come up with some templates:

Next, we build a function that uses these templates to generate our dataset.
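As a rough illustration of template-based generation, here is a minimal sketch. The schema, the templates, and the `generate_pairs` name are all hypothetical and are not taken from the project; the Layer decorator is left out so the snippet runs on its own.

```python
import random

# Hypothetical schema: for each table, the column we select and the
# columns we can filter on, with a few sample values for each.
SCHEMA = {
    "cars": {"select": "name", "filters": {"location": ["Italy", "Germany"]}},
    "products": {"select": "title", "filters": {"category": ["toys", "books"]}},
}

# Hypothetical (English, SQL) template pairs sharing the same placeholders.
TEMPLATES = [
    ("Show me the {select} of all {table}",
     "SELECT {select} FROM {table}"),
    ("{table} where {column} is {value}",
     "SELECT {select} FROM {table} WHERE {column} = '{value}'"),
    ("How many {table} have {column} equal to {value}",
     "SELECT COUNT(*) FROM {table} WHERE {column} = '{value}'"),
]


def generate_pairs(n, seed=0):
    """Return n English/SQL pairs by filling random templates."""
    rng = random.Random(seed)  # seeded so the dataset is reproducible
    pairs = []
    for _ in range(n):
        table = rng.choice(sorted(SCHEMA))
        spec = SCHEMA[table]
        column = rng.choice(sorted(spec["filters"]))
        value = rng.choice(spec["filters"][column])
        english, sql = rng.choice(TEMPLATES)
        fields = {"table": table, "select": spec["select"],
                  "column": column, "value": value}
        # str.format ignores placeholders a template does not use.
        pairs.append({"query": english.format(**fields),
                      "sql": sql.format(**fields)})
    return pairs
```

Because both sides of a pair are filled from the same fields, the English question and the SQL query always stay consistent with each other, which is what makes programmatic generation practical here.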

The build_dataset function is decorated with Layer's @dataset decorator, so we can pass it to Layer easily with:

layer.run([build_dataset])

Once the run is complete, we can start building our custom dataset loader to fine tune T5.

Create Dataloader

Our dataset loader is a PyTorch Dataset implementation specific to our custom-built dataset.
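A minimal sketch of such a dataset class might look like the following. It assumes the rows are dictionaries with `query` and `sql` keys, that the tokenizer follows the Hugging Face calling convention, and that the task prefix is spelled `translate English to SQL: `; the class name and these details are illustrative assumptions, not the project's actual code.

```python
try:
    from torch.utils.data import Dataset
except ImportError:  # lets the sketch be imported without PyTorch installed
    Dataset = object

# Assumed spelling of the task prefix prepended to every question.
TASK_PREFIX = "translate English to SQL: "


class EnglishToSqlDataset(Dataset):
    """Map-style dataset that yields tokenized question/SQL pairs."""

    def __init__(self, pairs, tokenizer, max_length=64):
        self.pairs = pairs          # list of {"query": ..., "sql": ...}
        self.tokenizer = tokenizer  # e.g. a Hugging Face T5 tokenizer
        self.max_length = max_length

    def __len__(self):
        return len(self.pairs)

    def _encode(self, text):
        # Pad and truncate to a fixed length so examples can be batched.
        return self.tokenizer(
            text,
            max_length=self.max_length,
            padding="max_length",
            truncation=True,
            return_tensors="pt",
        )

    def __getitem__(self, index):
        pair = self.pairs[index]
        source = self._encode(TASK_PREFIX + pair["query"])
        target = self._encode(pair["sql"])
        # The tokenizer returns a batch of one; drop that leading dimension.
        # Padded label positions are often set to -100 so the loss ignores
        # them; that step is omitted here for brevity.
        return {
            "input_ids": source["input_ids"].squeeze(0),
            "attention_mask": source["attention_mask"].squeeze(0),
            "labels": target["input_ids"].squeeze(0),
        }
```

Passing the tokenizer in as an argument keeps the class independent of how the tokenizer is loaded, so the same dataset works whether it comes from Layer or from a local checkpoint.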

Finetune T5

Our dataset is ready and registered to Layer. Now we are going to develop the fine tuning logic. Decorate the function with @model and pass it to Layer. This trains the model on Layer infra and registers it under our project.

Here we use three separate Layer decorators:

- @model: Tells Layer that this function trains an ML model.

- @fabric: Tells Layer the computation resources (CPU, GPU, etc.) needed to train the model. T5 is a big model and we need a GPU to fine tune it. Here is a list of the available fabrics you can use with Layer.

- @pip_requirements: The Python packages needed to fine tune our model.
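The decorated training function is not reproduced here. As a rough idea of what the core fine-tuning logic inside such a function could look like, here is a generic training loop sketch. It assumes a Hugging Face style model whose forward call returns an object with a `loss` attribute when `labels` are supplied, and batches with `input_ids`, `attention_mask` and `labels` keys; the function name and the hyperparameters in the comments are illustrative assumptions.

```python
def train_one_epoch(model, dataloader, optimizer, device="cpu"):
    """Run one pass over the data and return the mean training loss."""
    model.train()  # enable training-mode behaviour such as dropout
    total_loss = 0.0
    num_batches = 0
    for batch in dataloader:
        # Supplying labels makes the model compute the loss itself.
        outputs = model(
            input_ids=batch["input_ids"].to(device),
            attention_mask=batch["attention_mask"].to(device),
            labels=batch["labels"].to(device),
        )
        loss = outputs.loss
        optimizer.zero_grad()  # clear gradients from the previous step
        loss.backward()        # backpropagate
        optimizer.step()       # update the weights
        total_loss += loss.item()
        num_batches += 1
    return total_loss / max(num_batches, 1)


# Possible wiring (assumptions: checkpoint name, learning rate, batch size):
#   from transformers import T5ForConditionalGeneration
#   from torch.optim import AdamW
#   from torch.utils.data import DataLoader
#   model = T5ForConditionalGeneration.from_pretrained("t5-small")
#   optimizer = AdamW(model.parameters(), lr=3e-4)
#   loader = DataLoader(train_dataset, batch_size=16, shuffle=True)
#   mean_loss = train_one_epoch(model, loader, optimizer)
```

Returning the mean loss per epoch gives a single number that can be logged as a metric after each pass, which is the kind of value a loss curve is built from.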

Now we can just pass the tokenizer and model training functions to Layer to train our model on a remote GPU instance.

layer.run([build_tokenizer, build_model], debug=True)

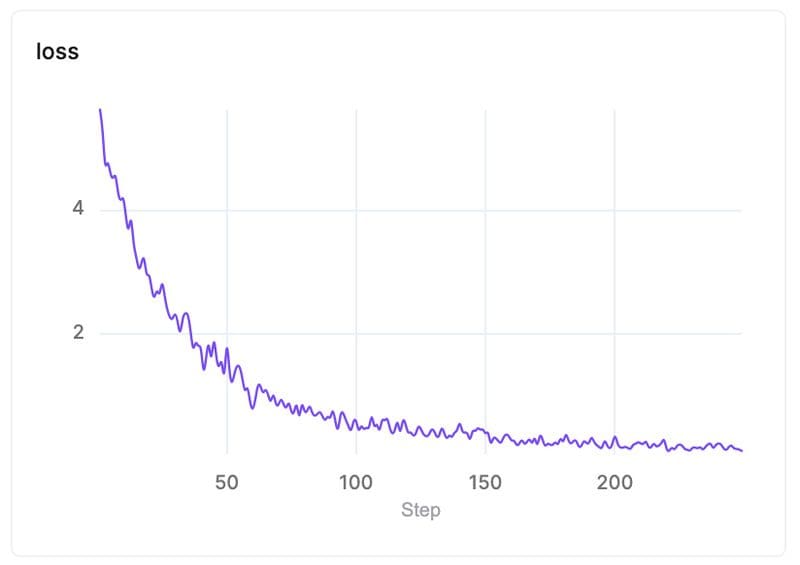

Once the training is complete, we can find our models and the metrics in the Layer UI. Here is our loss curve:

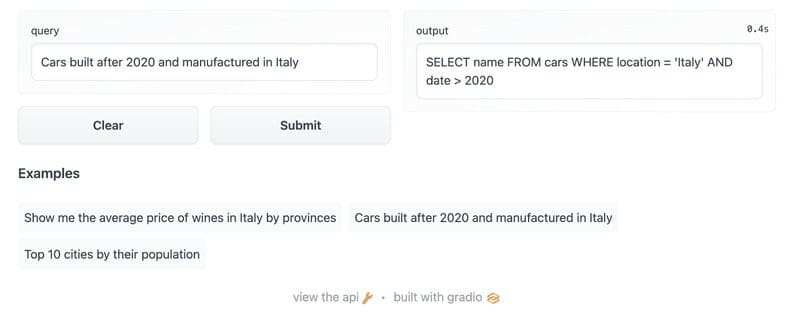

Build the Gradio Demo

Gradio is a fast way to demo your machine learning model with a friendly web interface so that anyone can use it, anywhere. We will build an interactive demo with Gradio to provide a UI for people who want to try our model.

Let’s get to coding. Create a Python file called app.py with the following code:

In the above code:

- We fetch our fine-tuned model and the related tokenizer from Layer.

- We create a simple UI with Gradio: an input text field for the query and an output text field to display the predicted SQL query.
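The two steps above can be sketched roughly as follows. The prediction function assumes a Hugging Face style tokenizer and a T5 model that have already been loaded (fetching them from Layer is not shown), and the task prefix spelling and the `translate_to_sql` name are assumptions made for illustration.

```python
# Assumed spelling of the task prefix used during fine-tuning.
TASK_PREFIX = "translate English to SQL: "


def translate_to_sql(question, tokenizer, model, max_length=64):
    """Turn an English question into a SQL string with a fine-tuned T5."""
    # Encode the prefixed question as a batch containing one example.
    encoded = tokenizer(TASK_PREFIX + question, return_tensors="pt")
    output_ids = model.generate(
        input_ids=encoded["input_ids"],
        attention_mask=encoded["attention_mask"],
        max_length=max_length,
    )
    # Decode the first (and only) generated sequence back to text.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


# Possible Gradio wiring (assumes `tokenizer` and `model` are already loaded):
#   import gradio as gr
#   demo = gr.Interface(
#       fn=lambda question: translate_to_sql(question, tokenizer, model),
#       inputs="text",
#       outputs="text",
#   )
#   demo.launch()
```

Keeping the prediction logic in a plain function that takes the tokenizer and model as arguments makes it easy to try from a Python shell before it is wired into the UI.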

We will need some extra libraries for this small Python application, so create a requirements.txt file with the following content:

layer-sdk==0.9.350435

torch==1.11.0

sentencepiece==0.1.96

We are ready to publish our Gradio app now:

- Go to Hugging Face and create a Space.

- Don’t forget to select Gradio as the Space SDK.

Now clone your repo into your local directory with:

$ git clone [YOUR_HUGGINGFACE_SPACE_URL]

Put the requirements.txt and app.py files into the cloned directory and run the following commands in your terminal:

$ git add app.py

$ git add requirements.txt

$ git commit -m "Add application files"

$ git push

Now head over to your Hugging Face Space. You will see your demo once the app is deployed.

Final Thoughts

We learned how to fine tune a large language model to teach it a new skill. You can now design your own task and fine tune T5 for your own use.

Check out the full Fine Tuning T5 project and modify it for your own task.

Resources

- https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html

- https://app.layer.ai/layer/t5-fine-tuning-with-layer

- https://huggingface.co/spaces

Mehmet Ecevit is Co-founder & CEO at Layer: Collaborative Machine Learning.