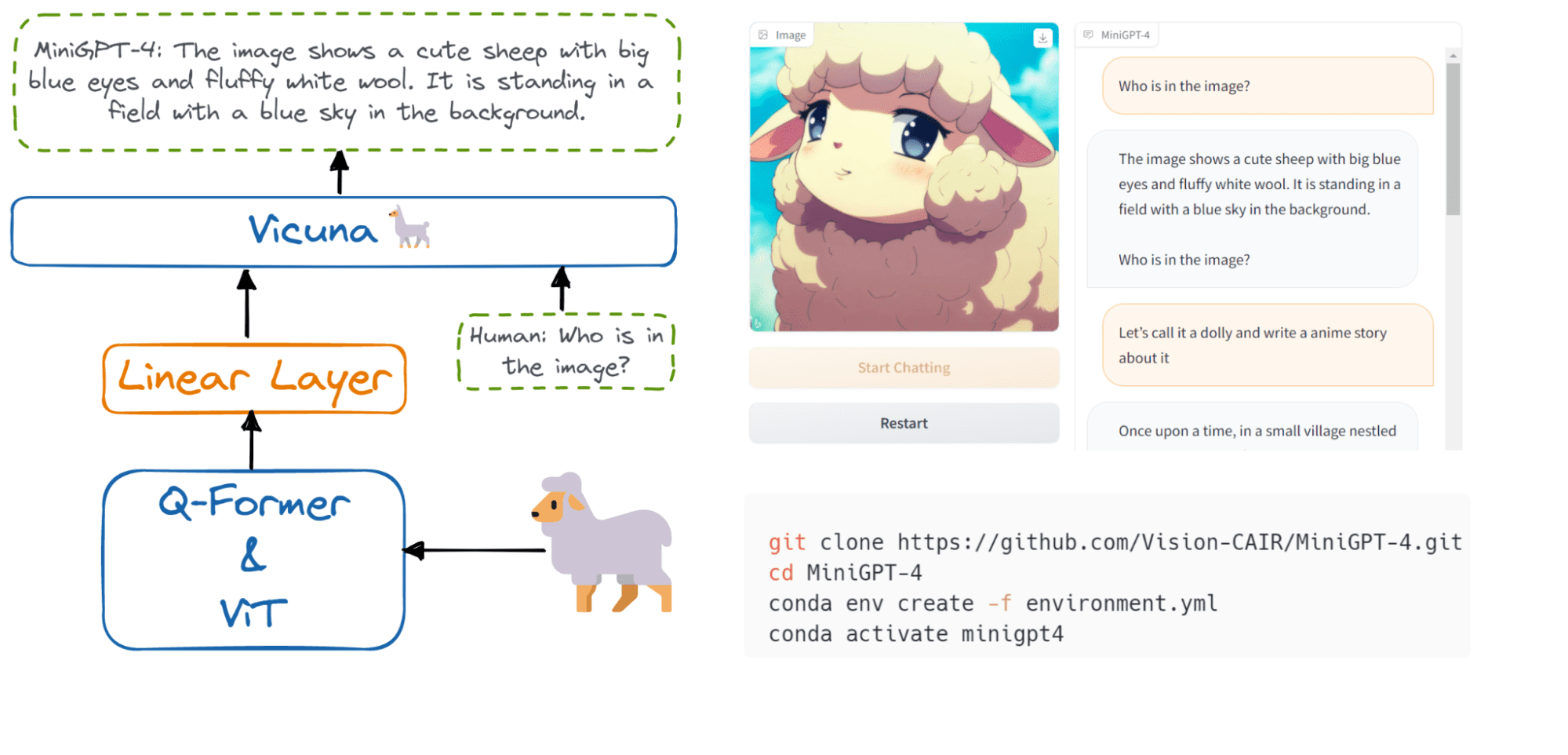

MiniGPT-4: A Lightweight Alternative to GPT-4 for Enhanced Vision-language Understanding

MiniGPT-4 possesses many of GPT-4's capabilities, such as generating image descriptions, creating a website from a hand-drawn draft, and writing a poem based on an image.

Image by Author

We are seeing rapid development of open-source alternatives to ChatGPT, but few projects are working on an alternative to GPT-4, which offers multimodality. GPT-4 is an advanced and powerful multimodal model that accepts images and text as input and outputs text responses. It can solve complex problems with greater accuracy than earlier GPT models.

In this post, we will learn about MiniGPT-4, an open-source alternative to OpenAI’s GPT-4 that can understand both visual and textual context while being lightweight.

What is MiniGPT-4?

Similar to GPT-4, MiniGPT-4 can generate detailed image descriptions, write stories based on images, and create a website from a hand-drawn user interface. The authors attribute these abilities to its use of a more advanced large language model (LLM).

You can experience it yourself by trying out the demo: MiniGPT-4 - a Hugging Face Space by Vision-CAIR.

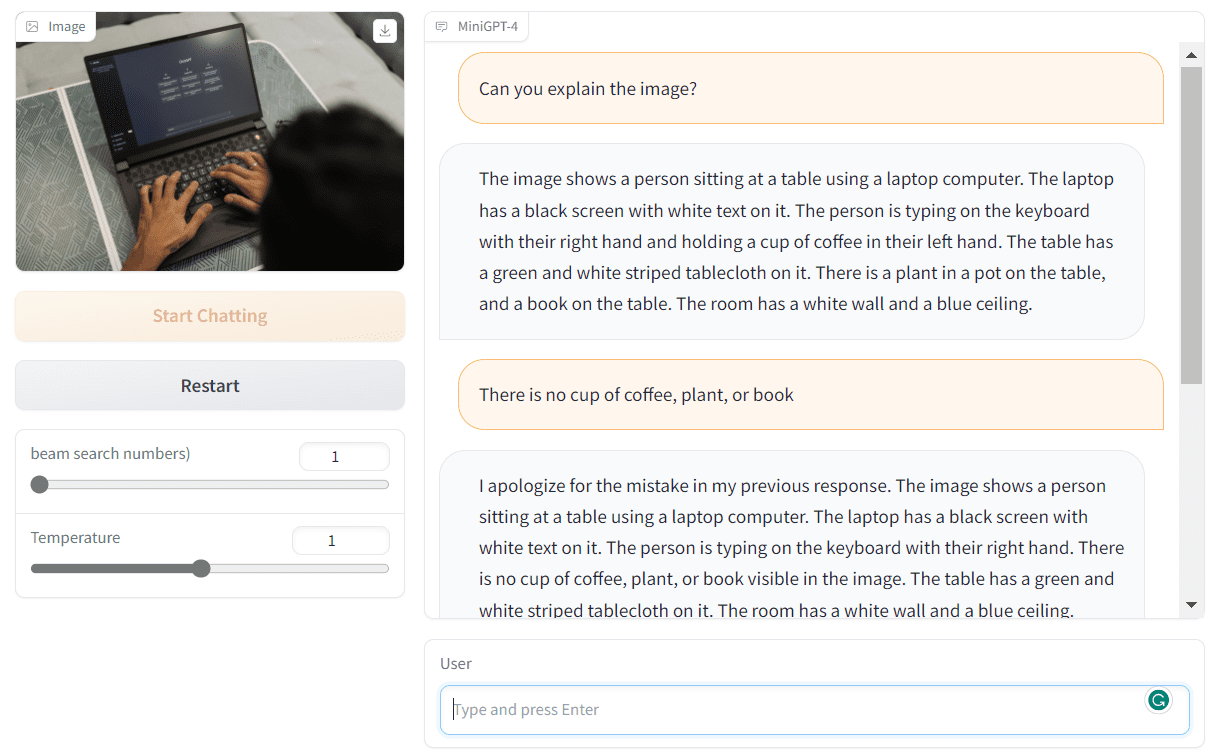

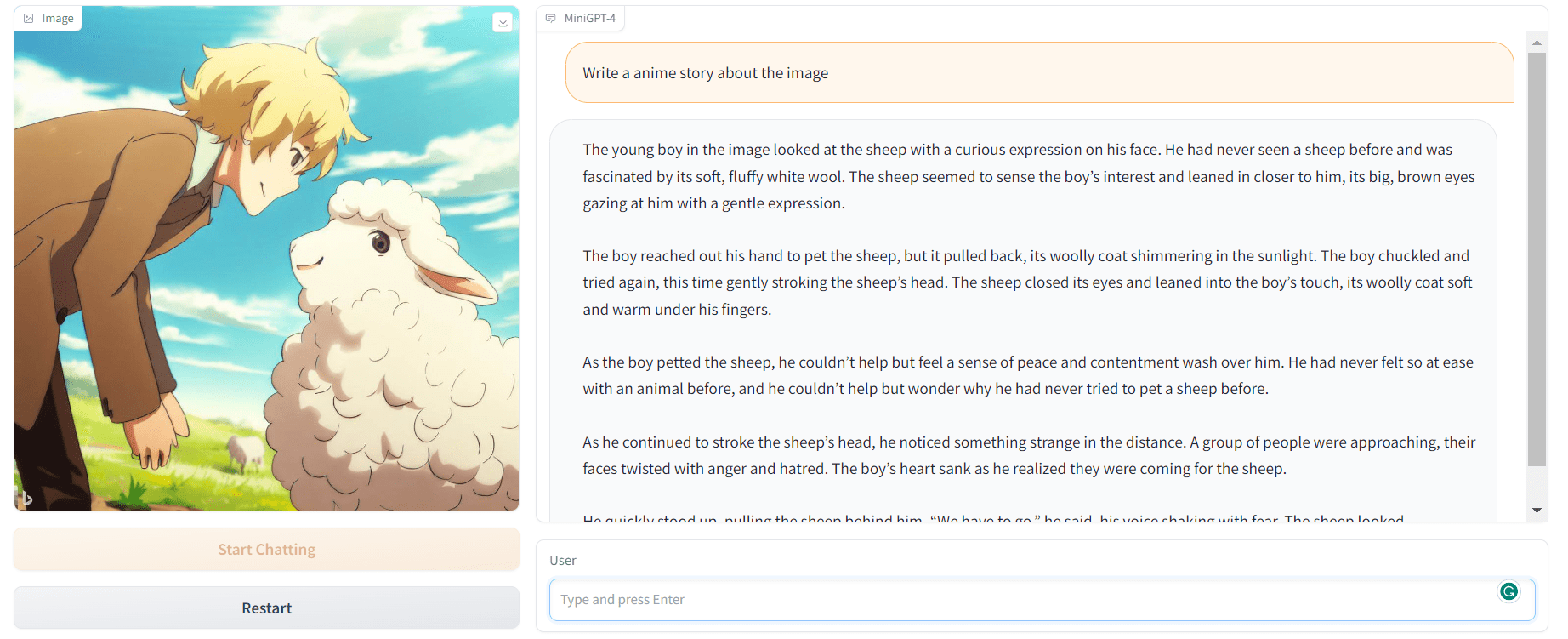

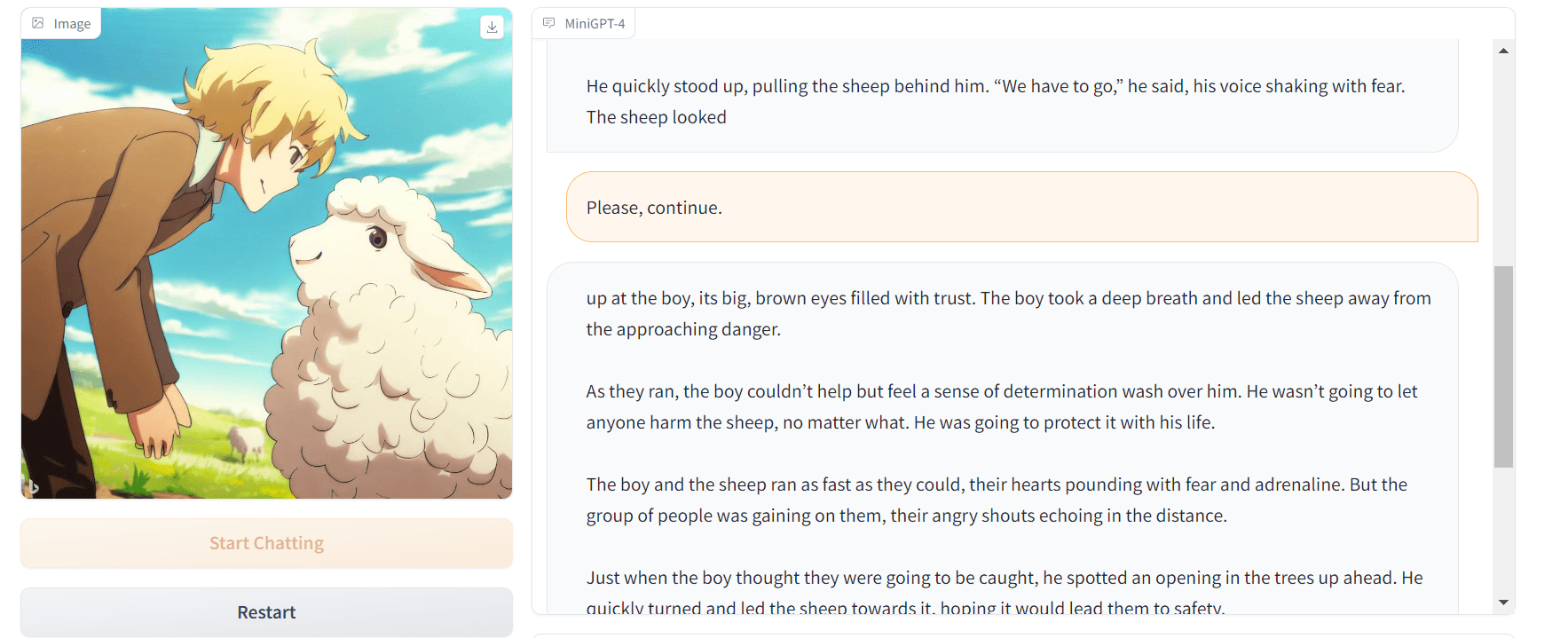

Image by Author | MiniGPT-4 Demo

The authors of MiniGPT-4: Enhancing Vision-language Understanding with Advanced Large Language Models found that pre-training on raw image-text pairs alone could produce poor results that lack coherence, including repetition and fragmented sentences. To counter this issue, they curated a high-quality, well-aligned dataset and fine-tuned the model on it using a conversational template.
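To make the idea of a conversational template more concrete, here is a minimal Python sketch. The `###Human:`/`###Assistant:` markers and the `<Img>...</Img>` wrapper follow our reading of the paper's template, while the `<ImageHere>` placeholder and the `build_prompt`/`split_around_image` helpers are hypothetical names for illustration, not the project's actual API. The idea is that the text on either side of the placeholder is tokenized as usual, and the projected image embeddings are spliced in between.

```python
# Hypothetical sketch of a MiniGPT-4-style conversational prompt.
# The "###Human:"/"###Assistant:" markers and <Img>...</Img> wrapper follow
# our reading of the paper; IMAGE_PLACEHOLDER is an assumed marker string.
IMAGE_PLACEHOLDER = "<ImageHere>"


def build_prompt(instruction: str) -> str:
    """Wrap a user instruction in a single-turn template with an image slot."""
    return f"###Human: <Img>{IMAGE_PLACEHOLDER}</Img> {instruction} ###Assistant:"


def split_around_image(prompt: str) -> tuple[str, str]:
    """Return the text before and after the image slot.

    In a real model, both pieces would be tokenized and embedded, and the
    projected image embeddings would be inserted between them.
    """
    before, after = prompt.split(IMAGE_PLACEHOLDER, 1)
    return before, after


if __name__ == "__main__":
    prompt = build_prompt("Describe this image in detail.")
    print(prompt)
    print(split_around_image(prompt))
```

During fine-tuning, the model would learn to continue such prompts after `###Assistant:` with a fluent description, which is how a template like this can steer it away from fragmented, repetitive outputs.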

The MiniGPT-4 model is highly computationally efficient because the authors trained only a projection layer, using approximately 5 million aligned image-text pairs.
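A quick back-of-the-envelope count shows why training a single projection layer is cheap. The sketch below assumes dimensions that this article does not state: a Q-Former output width of 768 (as in BLIP-2) and a Vicuna-13B hidden size of 5120, with 13 billion used as a rounded size for the frozen LLM.

```python
# Assumed dimensions (not stated in this article): BLIP-2's Q-Former emits
# 768-dim query embeddings, and Vicuna-13B uses a 5120-dim hidden size.
QFORMER_DIM = 768
VICUNA_13B_DIM = 5120
VICUNA_13B_PARAMS = 13_000_000_000  # nominal model size, rounded


def linear_params(d_in: int, d_out: int, bias: bool = True) -> int:
    """Number of trainable parameters in a dense layer y = xW + b."""
    return d_in * d_out + (d_out if bias else 0)


if __name__ == "__main__":
    trainable = linear_params(QFORMER_DIM, VICUNA_13B_DIM)
    print(f"trainable parameters: {trainable:,}")
    print(f"fraction of a 13B LLM: {trainable / VICUNA_13B_PARAMS:.4%}")
```

Under these assumptions the projection has about 3.9 million trainable parameters, roughly 0.03% of the frozen 13-billion-parameter language model.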

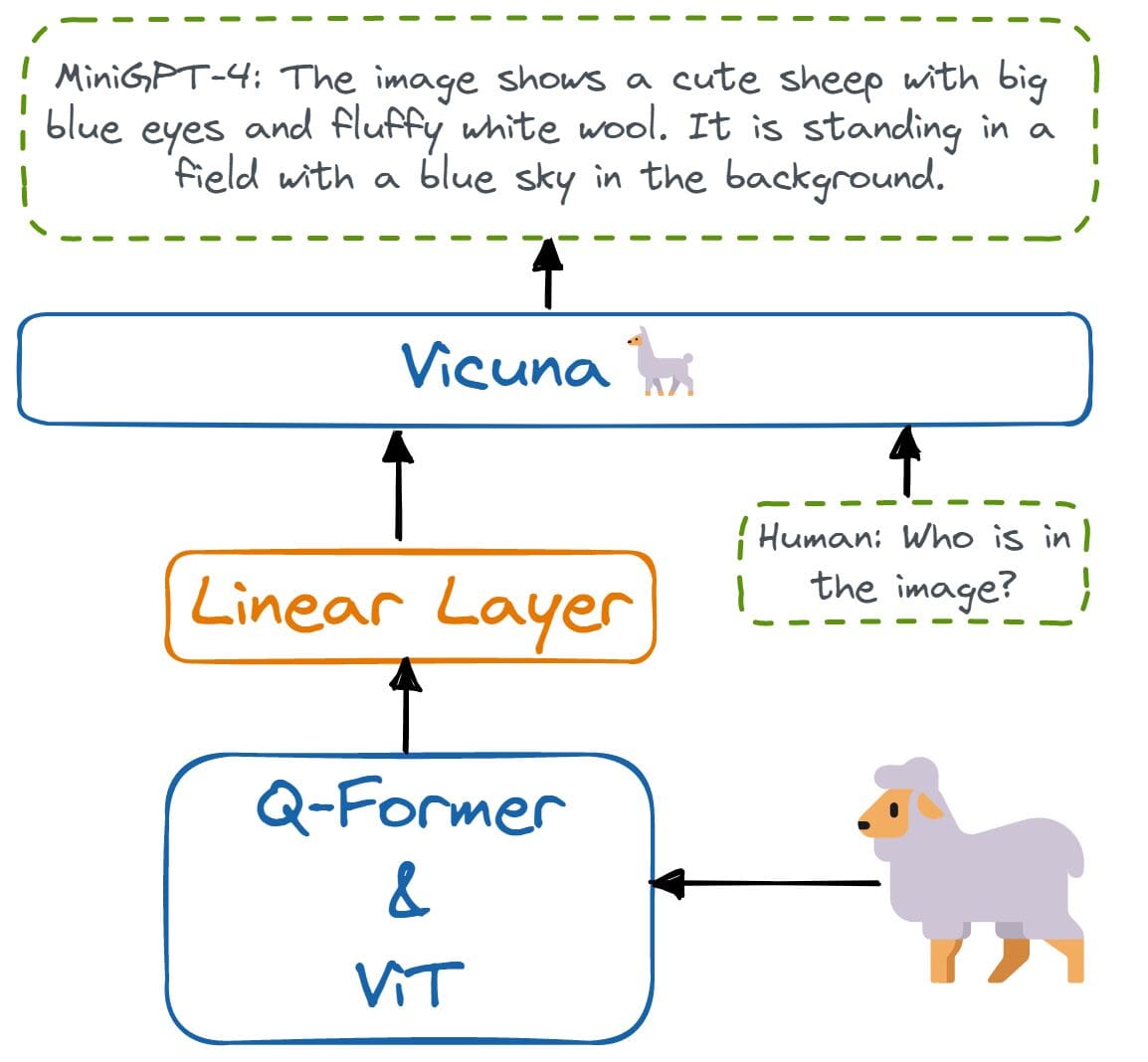

How does MiniGPT-4 work?

MiniGPT-4 aligns a frozen visual encoder with a frozen LLM called Vicuna using just one projection layer. The visual encoder consists of a pretrained ViT and a Q-Former, and its output is connected to the Vicuna large language model via a single linear projection layer.

Image by Author | The architecture of MiniGPT-4.

MiniGPT-4 only requires training this linear layer to align the visual features with Vicuna. As a result, it is lightweight, requires fewer computational resources, and reproduces many of the vision-language abilities demonstrated by GPT-4.
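The pure-Python sketch below traces this data flow with toy sizes. `frozen_visual_encoder` is a hypothetical stand-in for the pretrained ViT and Q-Former, and `NUM_QUERIES`, `VIS_DIM`, and `LLM_DIM` are made-up values; a real implementation would use a deep learning framework and much larger dimensions. Only the `Linear` projection is marked trainable, mirroring the frozen encoder and frozen LLM described above.

```python
import random

# Hypothetical toy sizes chosen for readability; real models are far larger.
NUM_QUERIES = 4  # visual query tokens emitted by the (stand-in) Q-Former
VIS_DIM = 3      # width of each visual token
LLM_DIM = 5      # hidden size of the (stand-in) language model

# Which parts receive gradient updates in this frozen/trainable/frozen setup.
TRAINABLE = {"ViT + Q-Former": False, "linear projection": True, "Vicuna": False}


class Linear:
    """Dense layer y = xW + b; the only trainable component in this sketch."""

    def __init__(self, d_in: int, d_out: int, seed: int = 0):
        rng = random.Random(seed)
        self.weight = [[rng.uniform(-0.1, 0.1) for _ in range(d_out)]
                       for _ in range(d_in)]
        self.bias = [0.0] * d_out
        self.trainable = TRAINABLE["linear projection"]

    def __call__(self, x):
        # Output j is the dot product of x with column j of W, plus b[j].
        return [sum(xi * self.weight[i][j] for i, xi in enumerate(x)) + self.bias[j]
                for j in range(len(self.bias))]


def frozen_visual_encoder(image):
    """Stand-in for the pretrained ViT + Q-Former (never updated).

    Ignores its input and returns NUM_QUERIES dummy vectors of width VIS_DIM.
    """
    return [[float(q + d) for d in range(VIS_DIM)] for q in range(NUM_QUERIES)]


def embed_image(image, projection: Linear):
    """Map each visual token into the LLM's embedding space."""
    return [projection(token) for token in frozen_visual_encoder(image)]


if __name__ == "__main__":
    projection = Linear(VIS_DIM, LLM_DIM)
    soft_tokens = embed_image(image=None, projection=projection)
    print(len(soft_tokens), len(soft_tokens[0]))  # 4 5
```

Each projected vector plays the role of a soft token in Vicuna's input sequence; during training, gradients would update only the projection's weight and bias.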

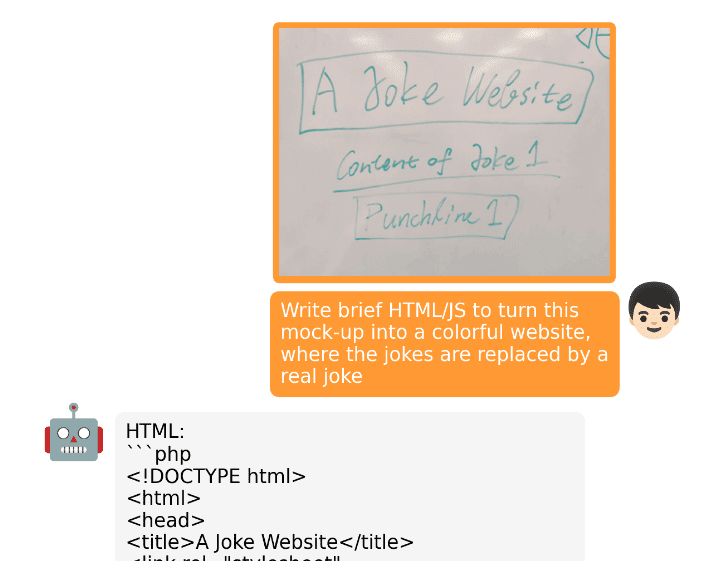

Results

If you look at the official results at minigpt-4.github.io, you will see that the authors created a website by uploading a hand-drawn UI and asking the model to write an HTML/JS website. MiniGPT-4 understood the context and generated HTML, CSS, and JS code. It is amazing.

Image from minigpt-4.github.io

They have also shown how you can use the model to generate recipes from food images, write product advertisements, describe complex images, explain paintings, and more.

Let’s try this on our own by heading to the MiniGPT-4 demo. I provided an image generated by Bing AI and asked MiniGPT-4 to write a story based on it. The result is amazing.

The story is coherent.

Image by Author | MiniGPT-4 Demo

I wanted to know more, so I asked it to continue writing, and just like an AI chatbot, it kept writing the plot.

Image by Author | MiniGPT-4 Demo

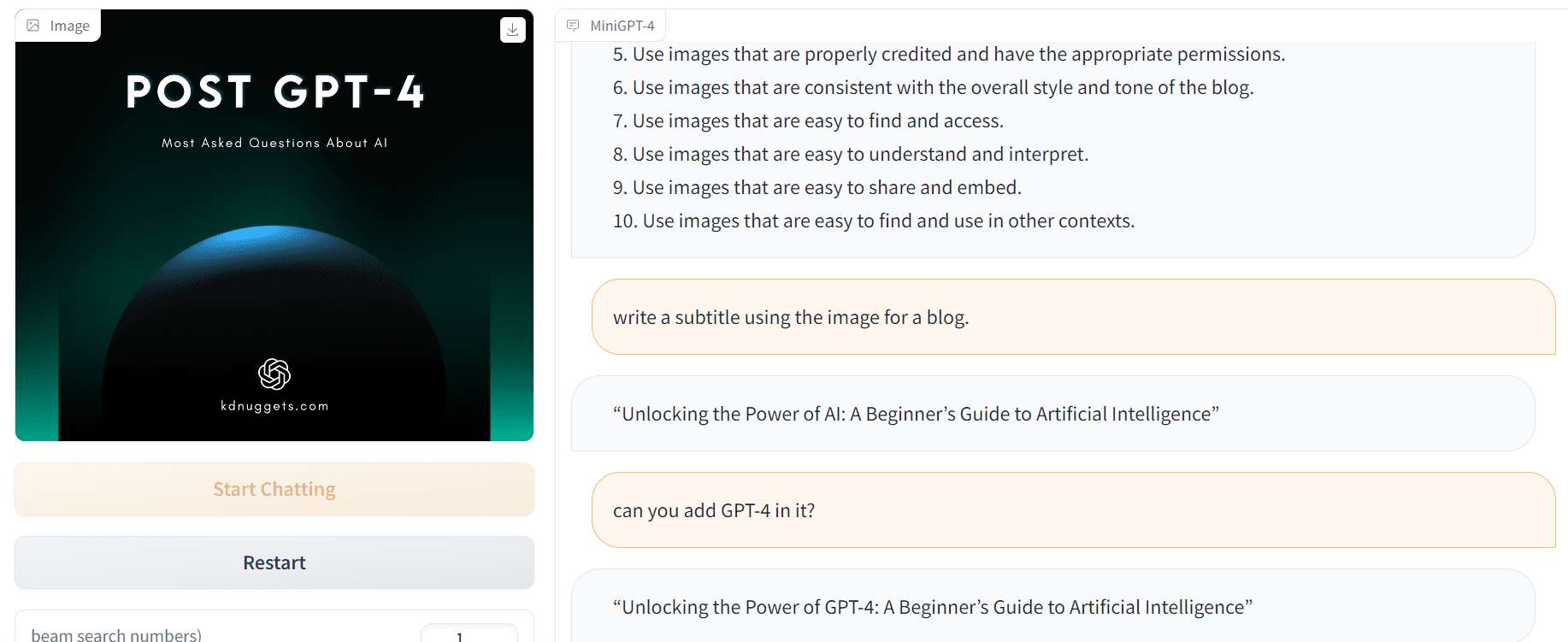

In the second example, I asked it to help me improve the design of the image and then to generate subtitles for a blog post based on the image.

Image by Author | MiniGPT-4 Demo

MiniGPT-4 is amazing. It refines its answers in response to follow-up prompts and produces high-quality responses.

Limitations

MiniGPT-4 has many advanced vision-language capabilities, but it still faces several limitations.

- Currently, model inference is slow, even on high-end GPUs.

- The model is built upon LLMs, so it inherits their limitations like unreliable reasoning ability and hallucinating non-existent knowledge.

- The model has limited visual perception and may struggle to recognize detailed textual information in images.

Getting Started

The project comes with source code for training, fine-tuning, and inference. It also includes publicly available model weights, the dataset, a research paper, a demo video, and a link to the Hugging Face demo.

You can start hacking on the code, fine-tune the model on your own dataset, or simply try the model through one of the official demo instances linked from the official page.

- Official Page: minigpt-4.github.io

- Research Paper: MiniGPT-4/MiniGPT_4.pdf

- GitHub: Vision-CAIR/MiniGPT-4

- Gradio Demo: Demo of MiniGPT-4

- Demo Video: MiniGPT-4: Enhancing Vision-Language Understanding with Advanced Large Language Models

- Model Weights: Vision-CAIR/MiniGPT-4

- Dataset: Vision-CAIR/cc_sbu_align

This is the first version of the model. An improved version is expected in the coming days, so stay tuned.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master's degree in Technology Management and a bachelor's degree in Telecommunication Engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.