Greening AI: 7 Strategies to Make Applications More Sustainable

This article describes a methodology for accurately estimating the carbon footprint of AI applications, explains why AI's environmental impact is a crucial consideration today, and outlines seven strategies for reducing it.

Image by Editor

AI applications offer unparalleled computational capabilities that can drive progress at an unprecedented pace. However, they depend on energy-intensive data centers that are often run with little attention to energy efficiency, which adds significantly to their carbon footprint. By some estimates, the cloud computing infrastructure these applications run on already accounts for 2.5 to 3.7 percent of global greenhouse gas emissions, more than the aviation industry.

And unfortunately, this carbon footprint is increasing at a fast pace.

The first step is to measure the carbon footprint of machine learning applications. As Peter Drucker's maxim puts it, "You can't manage what you can't measure." Today, however, quantifying the environmental impact of AI remains difficult, and precise figures are scarce.

In addition to measuring the carbon footprint, the AI industry's leaders must actively focus on optimizing it. This dual approach is vital to addressing the environmental concerns surrounding AI applications and ensuring a more sustainable path forward.

What factors contribute to the carbon footprint of AI applications

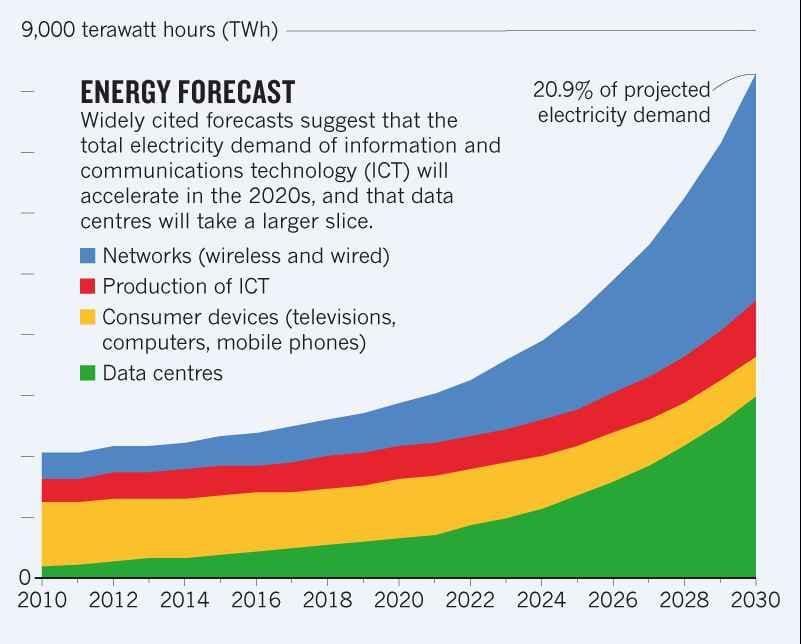

The growing use of machine learning requires more data centers, many of which are power-hungry and therefore have a significant carbon footprint. In 2021, data centers accounted for 0.9 to 1.3 percent of global electricity use.

A 2021 study estimated that this share could rise to 1.86 percent by 2030, reflecting the growing energy demand of data centers.

© Energy consumption trend and share of use for data centers

Higher energy consumption means a larger carbon footprint. Servers also generate heat as they run and can malfunction or shut down if they overheat, so data centers need cooling, which consumes additional energy. Around 40 percent of the electricity consumed by data centers is used for air conditioning.

Computing Carbon Intensity for AI Applications

Given the growing footprint of AI, the carbon intensity of these tools needs to be accounted for. Current research on the subject is limited to analyses of a few models and does not reflect the diversity of models in use.

Below are a refined methodology and a few effective tools for computing the carbon intensity of AI systems.

Methodology for estimating carbon intensity of AI

The Software Carbon Intensity (SCI) standard is an effective approach for estimating the carbon intensity of AI systems. Unlike conventional methodologies, which use attributional carbon accounting, it uses a consequential carbon accounting approach.

A consequential approach attempts to calculate the marginal change in emissions caused by an intervention or decision, such as the decision to generate an extra unit of electricity. An attributional approach, by contrast, relies on average intensity data or static inventories of emissions.
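To make the distinction concrete, here is a minimal Python sketch that applies both approaches to the same energy figure. The intensity values and the job's energy use are hypothetical placeholders, not measurements; in practice the marginal figure would come from a grid-data provider, and it can be higher or lower than the average depending on which generators respond to the extra demand.

```python
# Hypothetical grid data in gCO2eq per kWh; real values vary by region and hour.
AVERAGE_INTENSITY = 400.0   # attributional: average over all generation on the grid
MARGINAL_INTENSITY = 650.0  # consequential: generation that ramps up to meet extra load


def emissions_g(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Emissions in grams of CO2-equivalent for a given amount of energy."""
    return energy_kwh * intensity_g_per_kwh


job_energy_kwh = 12.0  # hypothetical energy drawn by one training run

attributional = emissions_g(job_energy_kwh, AVERAGE_INTENSITY)
consequential = emissions_g(job_energy_kwh, MARGINAL_INTENSITY)

print(f"attributional: {attributional:.0f} g CO2eq")
print(f"consequential: {consequential:.0f} g CO2eq")
```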

A paper titled “Measuring the Carbon Intensity of AI in Cloud Instances” by Jesse Dodge et al. applies this methodology. Since a significant amount of AI model training is conducted on cloud computing instances, it offers a valid framework for computing the carbon footprint of AI models. The paper refines the SCI formula for such estimations as:

$$\mathrm{SCI} = \frac{C}{R}$$

which is refined from the general SCI formula:

$$\mathrm{SCI} = \frac{(E \cdot I) + M}{R}$$

where:

E: Energy consumed by a software system, primarily by graphics processing units (GPUs), the specialized hardware used for ML.

I: Location-based marginal carbon emissions per unit of energy for the grid powering the data center.

M: Embedded or embodied carbon, which is the carbon emitted during the creation and disposal of hardware.

R: Functional unit, which in this case is one machine learning training task.

$C = O + M$, where $O = E \cdot I$ is the operational carbon and $C$ is the total carbon.
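Putting the definitions together, SCI is the operational carbon (E × I) plus the embodied carbon M, divided by the functional unit R. The sketch below translates that directly into Python. It assumes E in kWh, I in grams of CO2-equivalent per kWh, and M already apportioned to the workload; the class name, function name, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SciInputs:
    energy_kwh: float          # E: energy consumed by the software system
    marginal_g_per_kwh: float  # I: location-based marginal grid intensity
    embodied_g: float          # M: embodied carbon apportioned to this workload
    functional_units: float    # R: e.g. number of training runs


def sci(inputs: SciInputs) -> float:
    """Software Carbon Intensity in g CO2eq per functional unit."""
    if inputs.functional_units <= 0:
        raise ValueError("R must be positive")
    operational = inputs.energy_kwh * inputs.marginal_g_per_kwh  # O = E * I
    total = operational + inputs.embodied_g                      # C = O + M
    return total / inputs.functional_units                       # SCI = C / R


# Hypothetical numbers for one fine-tuning run on a single GPU server.
run = SciInputs(energy_kwh=3.5, marginal_g_per_kwh=500.0, embodied_g=250.0, functional_units=1)
print(f"SCI: {sci(run):.1f} g CO2eq per training run")
```

Because R is explicit, the same inputs can be reported per training run, per inference, or per user simply by changing the functional unit.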

The paper uses the formula to estimate the electricity usage, and hence emissions, of a single cloud instance. In ML systems based on deep learning, most of the electricity is consumed by the GPU, which this formula includes. To test the formula, the authors trained a BERT-base model on a single NVIDIA TITAN X GPU (12 GB) in a commodity server with two Intel Xeon E5-2630 v3 CPUs (2.4GHz) and 256GB RAM (16x16GB DIMMs). The following figure shows the results of this experiment:

© Energy consumption and split between components of a server

The GPU accounts for 74 percent of the server's energy consumption. The paper's authors note that their estimate is still an underestimate, but including the GPU is a step in the right direction. Conventional estimation techniques do not focus on the GPU, which means that a major contributor to the carbon footprint is being overlooked. SCI therefore offers a more complete and reliable computation of carbon intensity.
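Because the GPU drew 74 percent of the server's energy in this experiment, one rough way to approximate whole-server energy from a GPU-only reading is to divide by that share. This is a back-of-the-envelope sketch, not the paper's method: it assumes the 74 percent split carries over to other workloads and hardware, which it may not, and the function name and reading are hypothetical.

```python
GPU_SHARE = 0.74  # GPU share of server energy in the BERT-base experiment


def estimate_server_energy_kwh(gpu_energy_kwh: float, gpu_share: float = GPU_SHARE) -> float:
    """Scale a GPU-only energy reading up to an approximate whole-server figure.

    Assumes the GPU's share of total energy matches the reference experiment;
    CPUs, RAM, and other components make up the remainder.
    """
    if not 0 < gpu_share <= 1:
        raise ValueError("gpu_share must be in (0, 1]")
    return gpu_energy_kwh / gpu_share


gpu_reading = 7.4  # hypothetical GPU-only measurement in kWh
total = estimate_server_energy_kwh(gpu_reading)
print(f"approx. server energy: {total:.1f} kWh")
```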

Approaches to measure real-time carbon footprint of cloud computing

AI model training is often conducted on cloud compute instances, because the cloud makes training flexible, accessible, and cost-efficient. Cloud computing provides the infrastructure and resources to deploy and train AI models at scale, which is why a growing share of model training takes place in the cloud.

It’s important to measure the real-time carbon intensity of cloud compute instances to identify where mitigation efforts will help most. Accounting for time- and location-specific marginal emissions per unit of energy makes it possible to calculate operational carbon emissions, as a 2022 paper did.
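Accounting for time-varying marginal emissions means multiplying the energy used in each interval by the intensity for that same interval and summing the results, rather than multiplying total energy by a single average. A minimal sketch, assuming hourly readings; the function name and data are hypothetical:

```python
from typing import Sequence


def operational_emissions_g(
    energy_kwh_per_hour: Sequence[float],
    marginal_g_per_kwh_per_hour: Sequence[float],
) -> float:
    """Sum of E_t * I_t over aligned hourly intervals, in grams of CO2eq."""
    if len(energy_kwh_per_hour) != len(marginal_g_per_kwh_per_hour):
        raise ValueError("energy and intensity series must be the same length")
    return sum(e * i for e, i in zip(energy_kwh_per_hour, marginal_g_per_kwh_per_hour))


# Hypothetical four-hour training job in one region.
energy = [0.30, 0.32, 0.31, 0.29]         # kWh drawn in each hour
intensity = [520.0, 480.0, 300.0, 250.0]  # marginal gCO2eq/kWh in each hour

time_resolved = operational_emissions_g(energy, intensity)
flat_average = sum(energy) * (sum(intensity) / len(intensity))
print(f"time-resolved: {time_resolved:.1f} g, flat average: {flat_average:.1f} g")
```

The gap between the two numbers grows when a job's energy use is concentrated in hours whose intensity differs sharply from the average.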

Cloud Carbon Footprint (CCF), an open-source tool, is also available for estimating the carbon impact of cloud usage.
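CCF's methodology documentation describes estimating compute energy from vCPU hours and average CPU utilization, interpolating between a minimum and maximum wattage per vCPU, then multiplying by the data center's PUE and the grid emissions factor. The sketch below mirrors that structure in a few lines. It is not CCF's code, it covers only operational compute emissions (not storage, networking, or embodied carbon), and the wattage, PUE, and emissions-factor values are placeholders rather than the coefficients CCF ships.

```python
def ccf_style_compute_co2e_kg(
    vcpu_hours: float,
    avg_cpu_utilization: float,  # 0.0 to 1.0
    min_watts: float,            # per-vCPU draw at idle (placeholder)
    max_watts: float,            # per-vCPU draw at full load (placeholder)
    pue: float,                  # data center power usage effectiveness
    grid_kg_per_kwh: float,      # grid emissions factor
) -> float:
    """Operational CO2e in kg for compute usage, following a CCF-like structure."""
    avg_watts = min_watts + avg_cpu_utilization * (max_watts - min_watts)
    kwh = avg_watts * vcpu_hours / 1000.0  # watt-hours -> kWh
    return kwh * pue * grid_kg_per_kwh


# Hypothetical month: 8 vCPUs running 720 hours at 50% average utilization.
co2e = ccf_style_compute_co2e_kg(
    vcpu_hours=8 * 720,
    avg_cpu_utilization=0.5,
    min_watts=0.7,
    max_watts=3.5,
    pue=1.2,
    grid_kg_per_kwh=0.4,
)
print(f"estimated operational CO2e: {co2e:.2f} kg")
```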

Improving the carbon efficiency of AI applications

Here are 7 ways to optimize the carbon intensity of AI systems.

1. Write better, more efficient code

Optimized code can reduce energy consumption by 30 percent by using less memory and processor time. Writing carbon-efficient code involves optimizing algorithms for faster execution, eliminating unnecessary computations, and selecting energy-efficient hardware so that tasks run on less power.
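One simple way to eliminate unnecessary computation is to avoid recomputing results you have already produced. A minimal sketch using Python's built-in `functools.lru_cache`; `expensive_feature` is a hypothetical stand-in for any deterministic, costly computation, and this only applies to pure functions whose output depends solely on their arguments.

```python
from functools import lru_cache

call_count = 0  # counts how often the expensive body actually runs


@lru_cache(maxsize=1024)
def expensive_feature(user_id: int) -> int:
    """Hypothetical costly, deterministic computation (e.g. a feature calculation)."""
    global call_count
    call_count += 1
    return sum(i * i for i in range(user_id * 1000))


# Repeated requests for the same IDs are served from the cache.
requests = [1, 2, 1, 1, 2, 3]
results = [expensive_feature(uid) for uid in requests]
print(f"{len(requests)} requests, {call_count} computations")
```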

Developers can use profiling tools to identify performance bottlenecks and areas for optimization in their code. This process can lead to more energy-efficient software. Also, consider implementing energy-aware programming techniques, where code is designed to adapt to the available resources and prioritize energy-efficient execution paths.
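As an illustration of profiling, the sketch below uses Python's standard `cProfile` and `pstats` modules to time two versions of the same membership check. The function names and data are hypothetical; the point is that the profiler report makes the slower code path stand out, and the fix (a set instead of repeated list scans) does the same work with far fewer operations.

```python
import cProfile
import io
import pstats


def slow_membership(items, queries):
    # Scans the whole list for every query: a common hidden hotspot.
    return [q in items for q in queries]


def fast_membership(items, queries):
    # Builds a set once; each lookup is then O(1) on average.
    item_set = set(items)
    return [q in item_set for q in queries]


items = list(range(5_000))
queries = list(range(0, 10_000, 7))

profiler = cProfile.Profile()
profiler.enable()
slow_result = slow_membership(items, queries)
fast_result = fast_membership(items, queries)
profiler.disable()

# Report the five entries with the highest cumulative time.
buffer = io.StringIO()
pstats.Stats(profiler, stream=buffer).sort_stats("cumulative").print_stats(5)
report = buffer.getvalue()
print(report)
```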

2. Select a more efficient model

Choosing the right algorithms and data structures is crucial. Developers should opt for algorithms that minimize computational complexity and, consequently, energy consumption. If a more complex model yields only a 3-5% improvement but takes 2-3x longer to train, pick the simpler, faster model.
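The rule of thumb above can be expressed as a simple check. In this minimal sketch, the function name is hypothetical, the default thresholds are taken from the 3-5% and 2-3x figures in the text, and the gain is treated as a relative improvement in a validation score; the right cutoffs depend on the application.

```python
def prefer_simpler_model(
    simple_score: float,
    complex_score: float,
    simple_train_hours: float,
    complex_train_hours: float,
    max_relative_gain: float = 0.05,  # "only 3-5% improvement"
    min_cost_ratio: float = 2.0,      # "2-3x more time to train"
) -> bool:
    """Return True if the complex model's gain does not justify its extra training cost."""
    if simple_score <= 0 or simple_train_hours <= 0:
        raise ValueError("scores and training times must be positive")
    relative_gain = (complex_score - simple_score) / simple_score
    cost_ratio = complex_train_hours / simple_train_hours
    return relative_gain <= max_relative_gain and cost_ratio >= min_cost_ratio


# Hypothetical validation accuracies and training times.
print(prefer_simpler_model(0.86, 0.89, simple_train_hours=4, complex_train_hours=10))
```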

Model distillation is another technique for condensing large models into smaller, more efficient versions that retain the essential knowledge. It works by training a small model to mimic the large one. A related technique, pruning, removes unnecessary connections from a neural network.
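The "mimic the large model" step of knowledge distillation is usually driven by a soft-target loss: both models' logits are softened with a temperature, and the student is penalized by the KL divergence from the teacher's distribution. The dependency-free sketch below computes only that term for a single example; in practice it is typically combined with ordinary cross-entropy on the true labels, and the logits and temperature here are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened outputs.

    Scaled by T^2 to keep its magnitude comparable across temperatures.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student) if p > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]  # hypothetical logits from the large model
student = [2.5, 1.2, 0.8]  # hypothetical logits from the small model
print(f"soft-target loss: {distillation_loss(student, teacher):.4f}")
```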

3. Tune model parameters

Tune the model's hyperparameters using dual-objective optimization that balances model performance (e.g., accuracy) against energy consumption. This approach ensures that you do not sacrifice one for the other, making your models more efficient.
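One way to apply dual-objective tuning is to keep only the Pareto-optimal trials: configurations for which no other trial is at least as accurate and at least as frugal with energy, while being strictly better on one of the two. A minimal sketch over hypothetical trial results; in practice the accuracy and energy figures would come from your own tuning runs.

```python
from typing import NamedTuple


class Trial(NamedTuple):
    name: str
    accuracy: float    # higher is better
    energy_kwh: float  # lower is better


def dominates(a: Trial, b: Trial) -> bool:
    """a dominates b if it is no worse on both objectives and strictly better on one."""
    no_worse = a.accuracy >= b.accuracy and a.energy_kwh <= b.energy_kwh
    better = a.accuracy > b.accuracy or a.energy_kwh < b.energy_kwh
    return no_worse and better


def pareto_front(trials: list[Trial]) -> list[Trial]:
    return [t for t in trials if not any(dominates(o, t) for o in trials)]


trials = [
    Trial("lr=1e-3,layers=4", 0.912, 2.1),
    Trial("lr=1e-3,layers=8", 0.921, 4.8),
    Trial("lr=3e-4,layers=8", 0.918, 5.5),  # dominated by lr=1e-3,layers=8
    Trial("lr=3e-4,layers=2", 0.880, 1.0),
    Trial("lr=1e-2,layers=4", 0.905, 2.6),  # dominated by lr=1e-3,layers=4
]

for t in pareto_front(trials):
    print(t)
```

From the remaining front, you can pick the cheapest configuration that still meets your accuracy requirement.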

Leverage techniques like Parameter-Efficient Fine-Tuning (PEFT), which aims to match the performance of traditional fine-tuning while training far fewer parameters. PEFT fine-tunes a small subset of model parameters while keeping most of the pre-trained Large Language Model (LLM) frozen, significantly reducing computational resources and energy consumption.
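For a sense of scale, LoRA, one widely used PEFT method, freezes a weight matrix of shape d × k and instead trains two low-rank factors of shapes d × r and r × k. The arithmetic below compares trainable parameters for a single matrix; the 4096 × 4096 shape and rank 8 are illustrative choices, not figures from this article.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when updating the whole d x k weight matrix."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for LoRA factors B (d x r) and A (r x k)."""
    return r * (d + k)


d, k, r = 4096, 4096, 8
full = full_finetune_params(d, k)
lora = lora_params(d, k, r)
print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.4%}")
```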

4. Compress data and use low-energy storage

Implement data compression techniques to reduce the amount of data transmitted. Compressed data takes less energy to transfer and occupies less disk space. During the model serving phase, a cache can reduce the number of calls made to the online storage layer, thereby reducing energy consumption.
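Both ideas fit in a few lines of standard-library Python. In this sketch, `zlib` compresses a repetitive payload before transfer, and `functools.lru_cache` sits in front of a storage read so that repeated keys do not trigger new calls. The payload and `read_from_storage` are hypothetical stand-ins; real compression ratios depend heavily on the data, and a production cache also needs an invalidation strategy for data that changes.

```python
import zlib
from functools import lru_cache

# Hypothetical, highly repetitive payload (e.g. JSON feature records).
payload = b'{"user": 42, "features": [0, 0, 0, 1, 0, 0]}\n' * 1000

compressed = zlib.compress(payload, level=6)
assert zlib.decompress(compressed) == payload  # lossless round trip
print(f"raw: {len(payload)} bytes, compressed: {len(compressed)} bytes")

storage_reads = 0


def read_from_storage(key: str) -> bytes:
    """Hypothetical stand-in for a network call to an online storage layer."""
    global storage_reads
    storage_reads += 1
    return compressed


@lru_cache(maxsize=256)
def get_features(key: str) -> bytes:
    # Repeated keys are served from memory instead of calling storage again.
    return zlib.decompress(read_from_storage(key))


for key in ["user:42", "user:42", "user:7", "user:42"]:
    get_features(key)
print(f"storage reads: {storage_reads}")
```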

Additionally, picking the right storage technology can yield significant gains. For example, AWS Glacier is an efficient data-archiving solution and can be more sustainable than standard S3 storage for data that does not need to be accessed frequently.
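Rather than moving objects by hand, an S3 lifecycle rule can transition infrequently accessed data to the Glacier storage class automatically. The sketch below only builds the rule as a Python dictionary in the shape that boto3's `put_bucket_lifecycle_configuration` accepts for its `LifecycleConfiguration` argument. The rule ID, prefix, and 90-day threshold are hypothetical, and it is worth checking the current S3 documentation for the storage classes and retrieval costs that fit your access pattern.

```python
def archive_rule(prefix: str, days: int, rule_id: str) -> dict:
    """Lifecycle rule that moves objects under `prefix` to Glacier after `days` days."""
    return {
        "ID": rule_id,
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [{"Days": days, "StorageClass": "GLACIER"}],
    }


lifecycle_configuration = {
    "Rules": [
        archive_rule(prefix="training-data/raw/", days=90, rule_id="archive-raw-training-data")
    ]
}
print(lifecycle_configuration)
```

With AWS credentials configured, the dictionary could then be applied with `boto3.client("s3").put_bucket_lifecycle_configuration(Bucket="my-bucket", LifecycleConfiguration=lifecycle_configuration)`, where `my-bucket` is a placeholder name.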

5. Train models on cleaner energy

If you train models on a cloud service, you can choose the region where the computations run. Choosing a region powered by renewable energy sources can reduce emissions by up to 30 times. An AWS blog post outlines how to balance business and sustainability goals when making this choice.
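The region decision can be sketched as picking the lowest-intensity region among those your business constraints allow, such as data residency or latency. The region names and intensity values below are hypothetical placeholders; current figures would come from your cloud provider or a grid-data service.

```python
# Hypothetical average carbon intensities (gCO2eq/kWh) for candidate regions.
REGION_INTENSITY = {
    "region-a": 30.0,
    "region-b": 420.0,
    "region-c": 690.0,
}


def greenest_region(intensity_by_region: dict[str, float], allowed: set[str]) -> str:
    """Pick the allowed region with the lowest grid carbon intensity."""
    candidates = {r: g for r, g in intensity_by_region.items() if r in allowed}
    if not candidates:
        raise ValueError("no allowed region has intensity data")
    return min(candidates, key=candidates.get)


# Business constraints (e.g. data residency) may rule some regions out.
choice = greenest_region(REGION_INTENSITY, allowed={"region-b", "region-c"})
print(choice)
```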

Another option is to run the model at an opportune time. At certain times of day the grid's energy is cleaner, and this data can be obtained through a paid service such as Electricity Maps, which provides real-time data and forecasts of the carbon intensity of electricity in different regions.
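Given an hourly intensity forecast, choosing when to run a job becomes a sliding-window search for the start hour with the lowest total intensity over the job's duration. This minimal sketch assumes the job draws roughly constant power and must run in one contiguous block; the forecast values are hypothetical, and fetching real forecasts from a provider is left out.

```python
def best_start_hour(forecast_g_per_kwh: list[float], job_hours: int) -> int:
    """Index of the start hour minimizing summed intensity over a contiguous job window.

    Assumes constant power draw, so the lowest summed intensity means the
    lowest emissions for the job.
    """
    if not 0 < job_hours <= len(forecast_g_per_kwh):
        raise ValueError("job must fit inside the forecast horizon")
    window = sum(forecast_g_per_kwh[:job_hours])
    best_start, best_total = 0, window
    for start in range(1, len(forecast_g_per_kwh) - job_hours + 1):
        # Slide the window one hour: add the new hour, drop the oldest one.
        window += forecast_g_per_kwh[start + job_hours - 1] - forecast_g_per_kwh[start - 1]
        if window < best_total:
            best_start, best_total = start, window
    return best_start


# Hypothetical 12-hour intensity forecast in gCO2eq/kWh.
forecast = [450, 430, 410, 380, 300, 220, 180, 170, 210, 320, 400, 460]
print(best_start_hour(forecast, job_hours=3))
```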

6. Use specialized data centers and hardware for model training

Choosing more efficient data centers and hardware can make a huge difference to carbon intensity. ML-specific data centers can be 1.4-2 times more energy efficient than general-purpose ones, and ML-specific hardware 2-5 times more.

7. Use serverless deployments like AWS Lambda, Azure Functions

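Traditional deployments keep servers running around the clock, which means 24x7 energy consumption even when there is no traffic. Serverless deployments such as AWS Lambda and Azure Functions run code only in response to requests, so they can serve intermittent workloads with minimal carbon intensity.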
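As an illustration, a Python AWS Lambda function is just a handler with the `(event, context)` signature. The sketch below loads a model once at module scope, so it is initialized when an execution environment starts and reused across subsequent warm invocations rather than reloaded on every request. The "model" is a hypothetical placeholder, and the event shape assumes an API Gateway-style request with a JSON string body.

```python
import json


def _load_model():
    """Hypothetical model loader; replace with real weight loading."""
    weights = {"w": 0.5, "bias": 1.0}

    def predict(x: float) -> float:
        return weights["w"] * x + weights["bias"]

    return predict


# Runs once per execution environment, not once per request.
MODEL = _load_model()


def lambda_handler(event, context):
    """Entry point using the (event, context) signature Lambda expects for Python."""
    body = json.loads(event.get("body") or "{}")
    x = float(body.get("x", 0.0))
    return {
        "statusCode": 200,
        "body": json.dumps({"prediction": MODEL(x)}),
    }


# Local smoke test; the context object is unused here.
if __name__ == "__main__":
    print(lambda_handler({"body": json.dumps({"x": 2.0})}, None))
```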

Final Notes

The AI sector is experiencing exponential growth, permeating every facet of business and daily life. However, this expansion comes at a cost: a growing carbon footprint that threatens to take us further from the goal of limiting global temperature increases to 1.5°C.

This carbon footprint is not just a present concern; its repercussions may extend across generations, affecting those who bear no responsibility for its creation. Therefore, it becomes imperative to take decisive actions to mitigate AI-related carbon emissions and explore sustainable avenues for harnessing its potential. It is crucial to ensure that AI's benefits do not come at the expense of the environment and the well-being of future generations.

Ankur Gupta is an engineering leader with a decade of experience spanning the sustainability, transportation, telecommunication, and infrastructure domains. He is currently an Engineering Manager at Uber, where he plays a pivotal role in advancing Uber's Vehicles Platform, leading the charge toward a zero-emissions future through the integration of cutting-edge electric and connected vehicles.