Small Data requires Specialized Deep Learning and Yann LeCun response

For industries that have relatively small data sets (less than a petabyte), a Specialized Deep Learning approach based on unsupervised learning and domain knowledge is needed.

By Shalini Ananda, @ShaliniAnanda1.

Dear Yann,

In your recent IEEE Spectrum interview, you state:

"We now have unsupervised techniques that actually work. The problem is that you can beat them by just collecting more data, and then using supervised learning. This is why in industry, the applications of Deep Learning are currently all supervised."

I agree with you that for the search and advertising industry, supervised learning is used because of the vast amounts of data being generated and gathered.

However, for industries that have Small Data sets (less than a petabyte), a Specialized Deep Learning approach based on unsupervised learning is necessary.

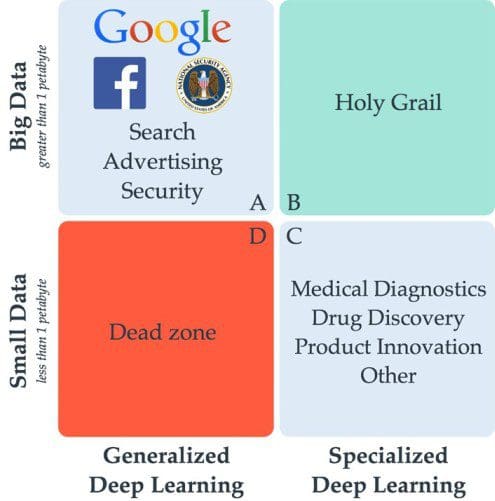

Here’s how we see industries falling, based on the amount of data they can collect and the type of Deep Learning method that is appropriate for each. Ideally, all of our efforts should gravitate to what we call the Holy Grail (Quadrant B), where Specialized Deep Learning converges with Big Data to give us amazing insights about our fields. Unfortunately, that will take time, and we’ll have to work within our means until the data catches up.

Data Scientists in fields without Big Data (Quadrant C) simply cannot wait to gather sufficient data to implement Deep Learning in the way that works in your industry (Quadrant A).

Specialized Deep Learning is already being used outside of Facebook and Google

Deep Learning as a technique began in cognitive science and is increasingly gaining momentum in the fields of medicine (e.g., radiology), physics and materials research. The reason is that Deep Learning can generate insights faster and lead to product innovation or diagnosis rather rapidly. Many of these researchers turn to Deep Learning to mitigate the limitations of other machine learning techniques.

Since the datasets available in these fields are small, data scientists cannot apply pre-packaged Deep Learning algorithms, but have to artfully determine the features to train on and engineer their networks with convolution/dense layers to learn these rather complex features. The data scientists who perform these tasks have to be machine learning engineers who have walked in the shoes of a radiologist, an internist or a chemical engineer. This understanding across domains enables them to delve into the appropriate pre-processing and feature engineering before architecting and training their networks.
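To make the idea of engineering a network with convolution/dense layers a little more concrete, here is a minimal sketch in plain NumPy. Everything in it is an assumption for illustration: the 8x8 random "scan patch", the two hand-chosen edge kernels (standing in for domain knowledge), and the function names `conv2d_valid` and `forward` are hypothetical, and the dense weights are random rather than trained.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide one kernel over a 2-D image (no padding, stride 1).

    Like most deep learning libraries, this computes cross-correlation,
    i.e. the kernel is not flipped.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def forward(image, kernels, dense_w, dense_b):
    """Convolution -> ReLU -> flatten -> dense layer -> softmax."""
    maps = [np.maximum(conv2d_valid(image, k), 0.0) for k in kernels]
    features = np.concatenate([m.ravel() for m in maps])
    logits = dense_w @ features + dense_b
    exp = np.exp(logits - logits.max())  # subtract max for numerical stability
    return exp / exp.sum()

rng = np.random.default_rng(0)
image = rng.random((8, 8))  # hypothetical stand-in for a pre-processed scan patch

# Hand-chosen vertical and horizontal edge detectors: an assumed example of
# injecting domain knowledge instead of learning every filter from data.
vertical_edge = np.array([[1.0, 0.0, -1.0]] * 3)
kernels = [vertical_edge, vertical_edge.T]

n_features = len(kernels) * 6 * 6  # each 3x3 kernel turns 8x8 into 6x6
dense_w = rng.normal(scale=0.1, size=(2, n_features))  # untrained weights
dense_b = np.zeros(2)

probs = forward(image, kernels, dense_w, dense_b)
print(probs.shape, probs.sum())
```

The point of the sketch is the shape of the pipeline, not the numbers it prints: the specialist decides which filters and pre-processing steps encode what matters in the domain, and the dense layer only has to learn from a much smaller feature space.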

Let me explain with an example.

In the broad field of image recognition, a data scientist in search and a data scientist in MRI research will need to approach Deep Learning differently.

A data scientist working in search can identify an image of a cookie as a cookie when presented with several types of cookies because the features and classifiers are obvious. But to train an algorithm to diagnose a disease from an MRI image, the data scientist has to determine inconspicuous features to extract and handcraft their network to learn these complex features. This data scientist does not have billions of images at their disposal to determine appropriate classifiers, and yet is looking to train and coordinate a set of very complex features.
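One way to picture working without billions of labeled images is to learn features from unlabeled data first and then fit a very simple classifier on the handful of labeled cases. The sketch below is only an illustration under stated assumptions: PCA stands in for the unsupervised feature learner (it is not a deep network), the data is synthetic, and the names `fit_pca`, `encode`, `fit_centroids` and `predict` are hypothetical.

```python
import numpy as np

def fit_pca(unlabeled, n_components):
    """Learn a low-dimensional basis from unlabeled samples (unsupervised)."""
    mean = unlabeled.mean(axis=0)
    _, _, vt = np.linalg.svd(unlabeled - mean, full_matrices=False)
    return mean, vt[:n_components]

def encode(x, mean, components):
    """Project samples onto the learned basis."""
    return (x - mean) @ components.T

def fit_centroids(features, labels):
    """Nearest-centroid classifier: one mean feature vector per class."""
    classes = np.unique(labels)
    centroids = np.stack([features[labels == c].mean(axis=0) for c in classes])
    return classes, centroids

def predict(features, classes, centroids):
    """Assign each sample to the class with the closest centroid."""
    dists = np.linalg.norm(features[:, None, :] - centroids[None, :, :], axis=2)
    return classes[dists.argmin(axis=1)]

rng = np.random.default_rng(0)

# Synthetic stand-ins: many unlabeled samples, very few labeled ones.
unlabeled = rng.normal(size=(500, 64))
labeled_x = rng.normal(size=(10, 64))
labeled_y = np.array([0, 1] * 5)
labeled_x[labeled_y == 1] += 1.0  # shift class 1 so the classes differ

mean, components = fit_pca(unlabeled, n_components=8)
codes = encode(labeled_x, mean, components)
classes, centroids = fit_centroids(codes, labeled_y)
preds = predict(codes, classes, centroids)
print(codes.shape, preds)
```

In a real setting the unsupervised step would more likely be an autoencoder or similar network shaped by domain knowledge, and the predictions would be checked on held-out cases rather than on the training samples used here.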

Specialized Deep Learning will be the future standard

On a grander note, fields that require Specialized Deep Learning will soon gather more data. When Specialized Deep Learning is applied across Big Data (greater than 1 petabyte), I imagine we’d be able to glean insights that were previously unthinkable, such as inferring psychological traits from a person’s genetic profile very precisely.
