Machine Learning Wars: Amazon vs Google vs BigML vs PredicSis

Comparing four Machine Learning APIs (Amazon Machine Learning, BigML, Google Prediction API and PredicSis) on real data from Kaggle, we find the most accurate, the fastest, the best trade-off, and a surprise last place.

By Louis Dorard

UPDATE - NEW BIGML RESULTS: As pointed out by Francisco Martin, if you just change the objective field (SeriousDlqin2yrs) to be numeric instead of categorical, BigML's accuracy for a single model goes to 0.853 (it was initially reported as 0.790). The accuracy in the table below and the Kaggle rank have been updated to reflect that.

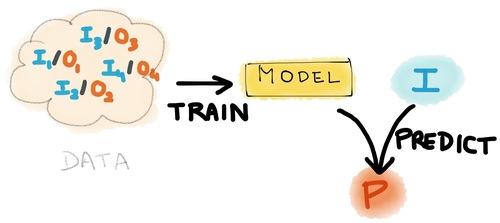

Amazon ML (Machine Learning) made a lot of noise when it came out last month. Shortly afterwards, someone posted a link to Google Prediction API on Hacker News and it quickly became one of the most popular posts. Google’s product is quite similar to Amazon’s, but it’s actually much older: it was introduced in 2011. This gave me the idea of comparing the performance of Amazon’s new ML API with that of Google’s. For that, I used the Kaggle “Give Me Some Credit” challenge. But I didn’t stop there: I also included two startups that provide competing APIs in this comparison, namely PredicSis and BigML. In this wave of new ML services, the giant tech companies are getting all the headlines, but bigger companies do not necessarily have better products.

Here is a tweet-size summary:

- Amazon Machine Learning: most accurate

- BigML: fastest

- PredicSis: best trade-off

- Google (Prediction API): last

Methodology

The ML problem in the Kaggle credit challenge is a binary classification one: you’re given a dataset of input-output pairs where each input corresponds to an individual who has applied for credit and the output says whether they later defaulted or not. The idea is to use ML to predict whether a new individual applying for credit will default.

ML has two phases: train and predict. The “train” phase consists in using a set of input-output examples to create a model that maps inputs to outputs. The “predict” phase consists in using the model on new inputs to get predictions of the associated outputs. Amazon ML, Google Prediction API, PredicSis and BigML all have similar API methods for each phase:

- One method that takes in a dataset (in csv format for instance), and that returns the id of a model trained on this dataset

- One method that takes a model id and an input, and that returns a prediction.
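To make the shape of these two methods concrete, here is a minimal sketch in Python. It is not the API of any of the four services: the class `BaseRateService`, its method names and the tiny CSV are all hypothetical, and the "model" is deliberately trivial (it always predicts the default rate seen in training). Only the pattern matters: one call turns a dataset into a model id, another turns a model id and an input into a prediction.

```python
import csv
import io
from typing import Dict


class BaseRateService:
    """Hypothetical in-memory stand-in for a prediction API (illustration only)."""

    def __init__(self) -> None:
        self._models: Dict[str, float] = {}

    def create_model(self, csv_text: str, target: str) -> str:
        """'Train' on a CSV dataset and return the id of the resulting model."""
        rows = list(csv.DictReader(io.StringIO(csv_text)))
        # Toy model: remember the fraction of positive outputs in the training data.
        rate = sum(int(row[target]) for row in rows) / len(rows)
        model_id = f"model-{len(self._models) + 1}"
        self._models[model_id] = rate
        return model_id

    def predict(self, model_id: str, row: Dict[str, str]) -> float:
        """Return a score for one input; this toy model ignores the input."""
        return self._models[model_id]


service = BaseRateService()
# Made-up rows; only the target column name comes from the Kaggle dataset.
csv_text = "age,SeriousDlqin2yrs\n45,1\n30,0\n52,0\n61,0\n"
model_id = service.create_model(csv_text, target="SeriousDlqin2yrs")
score = service.predict(model_id, {"age": "38"})
```

A real service would of course do the training remotely and return the model id over HTTP, but the calling code would look much the same.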

All four services offer free accounts, which I used for this comparison (note: PredicSis is still in private beta but you can request an account here). In this post, I will only compare the performance of these two methods and I won't consider other aspects such as pricing, features, DX, UX, etc.

In order to evaluate the models produced by the APIs, we need to split the dataset downloaded from Kaggle into two: a training set, which we use to create a model, and an evaluation set. We apply the model to the inputs of the evaluation set and we get a prediction for each input. We can evaluate the accuracy of the model by comparing the predicted output with the true output (which was held out).

The dataset we start with contains 150,000 instances and weighs 7.2 MB. I randomly selected 90% of the dataset for training, and from the remaining 10% I randomly selected 5,000 inputs for evaluation.
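For readers who want to reproduce this kind of split, here is a minimal sketch using only the Python standard library. The function name `split_dataset`, its parameters and the fixed seed are my own choices for illustration, not the exact script used for this post; the rows are stand-in integers rather than the real Kaggle records.

```python
import random


def split_dataset(rows, train_fraction=0.9, n_eval=5000, seed=0):
    """Shuffle rows, keep train_fraction for training, sample n_eval of the rest."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    shuffled = list(rows)
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    train, held_out = shuffled[:cut], shuffled[cut:]
    # The evaluation inputs come only from rows never used for training.
    evaluation = rng.sample(held_out, n_eval)
    return train, evaluation


rows = list(range(150_000))  # stand-in for the 150,000 instances
train, evaluation = split_dataset(rows)
```

With 150,000 rows this gives 135,000 training instances and 5,000 evaluation instances, with no overlap between the two.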

Results

For each API, there are three things to measure: the time taken by each of the two methods (training and prediction) and the accuracy of the predictions made by the model. For accuracy, I used the same performance measure as the Kaggle challenge: AUC, the area under the ROC curve. I won’t explain it in detail here, but what you have to know about AUC is that a) values are between 0 and 1, b) a random classifier would have an AUC of around 0.5, and c) a perfect classifier would have an AUC of 1. As a consequence, the higher the AUC, the better the model.
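If you want some intuition for these three properties, one standard way to compute AUC is as the probability that a randomly chosen positive example gets a higher score than a randomly chosen negative one, with ties counting for half. The sketch below implements that definition directly; the function name `auc` and the toy labels and scores are mine. It compares every positive with every negative, so it is fine for small examples but slow on large evaluation sets.

```python
def auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # a tie counts as half a win
    return wins / (len(positives) * len(negatives))


labels = [0, 0, 1, 1]
perfect = auc(labels, [0.1, 0.2, 0.8, 0.9])         # every positive above every negative
uninformative = auc(labels, [0.5, 0.5, 0.5, 0.5])   # same score for everyone
mixed = auc(labels, [0.1, 0.4, 0.35, 0.8])          # one pair out of four is misordered
```

Here `perfect` is 1.0, `uninformative` is 0.5 and `mixed` is 0.75, which matches points b) and c) above.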

| | Amazon | Google | PredicSis | BigML |
| --- | --- | --- | --- | --- |
| Accuracy (AUC) | 0.862 | 0.743 | 0.858 | 0.853 |
| Time for training (s) | 135 | 76 | 17 | 5 |
| Time for predictions (s) | 188 | 369 | 5 | 1 |
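The prediction times are totals for 5,000 predictions, so it can help to convert them into a per-prediction figure. The snippet below does that arithmetic. It assumes the four value columns are, in order, Amazon, Google, PredicSis and BigML, which is my reading of the table based on the summary and the update note.

```python
N_PREDICTIONS = 5000

# Total prediction time in seconds, read from the last row of the table
# (column order is an assumption: Amazon, Google, PredicSis, BigML).
prediction_seconds = {"Amazon": 188, "Google": 369, "PredicSis": 5, "BigML": 1}

# Average time per prediction, in milliseconds.
ms_per_prediction = {
    name: 1000 * seconds / N_PREDICTIONS
    for name, seconds in prediction_seconds.items()
}
```

Under that assumption, the averages come out at 37.6 ms for Amazon, 73.8 ms for Google, 1 ms for PredicSis and 0.2 ms for BigML.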

Times for predictions correspond to 5,000 predictions. FYI, the top entry on the leaderboard had an AUC of 0.870. If you’d used these APIs in the Kaggle competition, here’s the approximate rank you could have had: