Semantic Interoperability: Are you training your AI by mixing data sources that look the same but aren’t?

Semantic interoperability is a challenge in AI systems, especially since data has become increasingly more complex. The other issue is that semantic interoperability may be compromised when people use the same system differently.

By Luke Potgieter, John Snow Labs

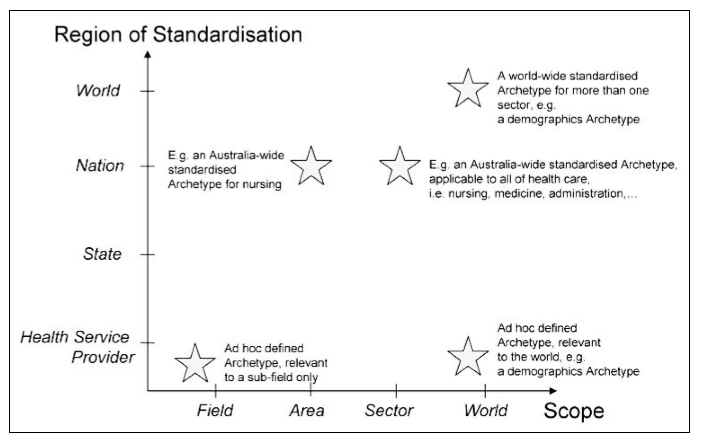

Using archetypes to standardize semantic interoperability (from Garde et al., 2007)

Introduction

Interoperability refers to the ability of computers to relate to each other. Semantic interoperability, in particular, is the ability of different computer information systems to use and share data in a meaningful way. The semantics refers to the meaning of the data. This means that the data has to have not only the same format and packaging, but should have the same meaning. The data must have a common standard and protocol in order to be compatible and to not lose meaning. A simple example of this can be seen with web programming such as XML, where a common syntax and data format is used. This ensures that all information coded into XML is interpreted in the same manner.

The issue

Semantic interoperability is a challenge in AI systems, especially since data has become increasingly more complex. The other issue is that semantic interoperability may be compromised when people use the same system differently. The data format may also not be compatible between the two different AI systems. This means that data has to often first be extracted, collected and then the data has to be converted or transformed in such a way that it can be used in the new system.

In healthcare, if the electronic health record (EHR) between different health systems is different it means that the semantics or meaning of the data may be different. Massachusetts General Hospital system had this experience when they decided to change to a new EHR system known as EPIC (Read more…). They realized that the data that was extracted from the old EHR system had first to be converted before it could be added into the new EPIC system. This movement of data from legacy systems to new systems is important today and is of concern to many systems, including for instance the World Health Organization (WHO). The WHO collects statistics from several different nations that can be used for monitoring purposes. Studies have shown that data is not always complete or consistent when entered into an EHR system. This was evident in a study that was done on pancreatic cancer patients (Read more...).

In general, the data in AI systems needs to be accessible and able to be used to make predictions and recommendations. Implementing newer systems can improve semantic interoperability in the future, which is desirable. In AI systems we cannot make automated predictions and recommendations if the meaning of data is not understood. Data that is entered into an AI system should be of a high quality and as complete and accurate as possible so as to ensure it is of maximum use.

How to achieve semantic interoperability

The first best practice to achieve semantic interoperability is to use archetypes. An archetype is a specification of the format of data that should encompass the most useful and complete information as possible (Read more...). An archetype provides the shared meaning of data. The distribution of data fields that come from different sources need to be checked to ensure interoperability. Semantic interoperability in AI systems requires that archetypes be of a high quality. Archetypes also need to be evidence-based, standardized and designed by experts with domain knowledge (Read more...).

Data from different systems may not have the same meaning (i.e. does not have semantic interoperability). Transferring from legacy systems to the newer systems that have better semantic interoperability will also be a good strategy to ensure better and more effective AI systems in the future. Using standardized archetypes and normalization of data and using newer systems can thus help achieve increased interoperability between systems. Once we have decided on archetypes (shared meaning of data), data should then be normalized.

It may be advisable to have a national standards framework that is established by the government. This could then ensure standardization of data types and identifiers which can help with semantic interoperability (Read more…). For instance, a standard terminology such as the Systematized Nomenclature for Human and Veterinary Medicine (SNOMED) Clinical Terms could be used to increase semantic interoperability.

Benefits of semantic interoperability

Semantic interoperability needs to be kept in mind when designing AI systems, as well as when data is being exchanged. The semantic interoperability is needed for correct and valid machine interpretation and logic. It saves time and money to have AI systems that are interoperable in this way. There are many useful and important benefits to having a system that has semantic interoperability in different areas where AI is used.

For instance, semantic interoperability would be very beneficial with health information systems since it would enable improved access to records and pertinent health information (Read more…).

Conclusion

In summary, semantic interoperability is necessary in AI systems. You cannot learn the patterns, predictions, or anomalies of data or make recommendations when the data is a mash-up of sources that do not mean the same thing. John Snow Labs is concerned with semantic interoperability, and provides a healthcare data normalization engine, as well as curated and versioned data sets for all the commonly used code sets and terminologies in this space.

Such solutions are a requirement to applying any data analysis, let alone AI techniques, on data that has been collected from diverse sources. It is critical that your data science team understands that having data in the same format doesn’t imply that it also has the same meaning - and regularly tests and corrects for this fact.

Bio: Luke Potgieter is a technical editor and advisor at John Snow Labs with a formal education background in the sciences. He is the author of several introductory computing courses, health guides, pre-med materials, and has published content on numerous award-winning blogs. As a Tech Advisor for John Snow Labs, Luke has found a passion for data science.

Related:

- How To Create Natural Language Semantic Search For Arbitrary Objects With Deep Learning

- 10 Big Data Trends You Should Know

- Society of Machines: The Complex Interaction of Agents