Reproducibility, Replicability, and Data Science

As cornerstones of scientific processes, reproducibility and replicability ensure results can be verified and trusted. These two concepts are also crucial in data science, and as a data scientist, you must follow the same rigor and standards in your projects.

By Sydney Firmin, Alteryx.

Reproducibility and replicability are cornerstones of scientific inquiry. Although there is some debate on terminology and definitions, if something is reproducible, it means that the same result can be recreated by following a specific set of steps with a consistent dataset. If something is replicable, it means that the same conclusions or outcomes can be found using slightly different data or processes. Without reproducibility, process and findings can’t be verified. Without replicability, it is difficult to trust the findings of a single study.

The Scientific Method was designed and implemented to encourage reproducibility and replicability by standardizing the process of scientific inquiry. By following a shared process of how to ask and explore questions – we can ensure consistency and rigor in how we come to conclusions. It also makes it easier for other researchers to converge on our results. The data science lifecycle is no different.

Despite this and other processes in place to encourage robust scientific research, over the past few decades, the entire field of scientific research has been facing a replication crisis. Research papers published in many high-profile journals, such as Nature and Science, have been failing to replicate in follow-up studies. Although the narrative crisis has been seen as a little alarmist and counterproductive by some researchers, you might label it a problem within the research that people are publishing false positives and findings that can’t be verified.

The growing awareness of irreproducible research can be, in part, attributed to technology – we are more connected, and scientific findings are more circulated than ever before. Technology also allows us to identify and leverage strategies to make scientific research more reproducible than ever before.

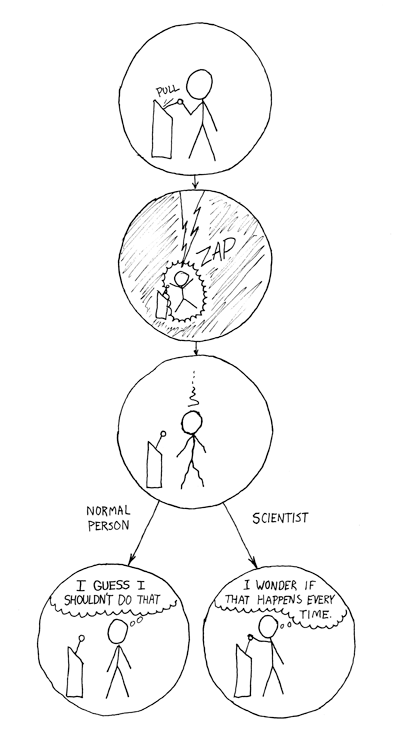

The Difference: https://xkcd.com/242/

Data science, at the crossroads of statistics and computer science, is positioned to encourage reproducibility and replicability, both in academic research and in industry.

Why Reproducibility Matters

As a researcher or data scientist, there are a lot of things that you do not have control over. You might not be able to collect your data in the most ideal way or ensure you are even capturing what you’re trying to measure with your data. You can’t really guarantee that your research or project will replicate. The only thing you can guarantee is that your work is reproducible.

Additionally, encouraging and standardizing a paradigm of reproducibility in your work promotes efficiency and accuracy. Often in scientific research and data science projects, we want to build upon preexisting work – work either done by ourselves or by other researchers. Including reproducible methods – or even better, reproducible code – prevents the duplication of efforts, allowing more focus on new, challenging problems. It also makes it easier for other researchers (including yourself in the future) to check your work, making sure your process is correct and bug-free.

Making Your Projects Reproducible

Reproducibility is a best practice in data science as well as in scientific research, and in a lot of ways, comes down to having a software engineering mentality. It is about setting up all your processes in a way that is repeatable (preferably by a computer) and well documented. Here are some (hopefully helpful) hints on how to make your work reproducible.

The first, and probably the easiest thing you can do is use a repeatable method for everything – no more editing your data in excel ad-hoc and maybe making a note in a notepad file about what you did. Leverage code or software that can be saved, annotated and shared so another person can run your workflow and accomplish the same thing. Even better, if you find you are using the same process repeatedly (more than a few times) or for different projects, convert your code or workflows into functions or macros to be shared and easily re-used.

Documentation of your processes is also critical. Write comments in your code (or your workflows) so that other people (or you six months down the road) can quickly understand what you were trying to do. Code and workflows are usually the best or most elegant when they are simple and can be easily interpreted, but there is never a guarantee that the person looking at your work thinks the same way you do; don’t take the risk here, just spend the extra time to write about what you’re doing.

Another best practice is to keep every version of everything; workflows and data alike, so you can track changes. Being able to back-version your data and your processes allows you to have awareness into any changes in your process, and track down where a potential error may have been introduced. You can use a version control system like Git or DVC to do this. In addition to being a great way to control versions of code, version control systems like Git can work with many different software files and data formats. Version-controlling your data is a good idea for data science projects because an analysis or model is directly influenced by the data set with which it is trained.

In combination with keeping all of your materials in a shared, central location, version control is essential for collaborative work or helping get your teammates up to speed on a project you've worked. The added benefit of having a version-control repository that’s in a shared location and not on your computer can’t be overstated – fun fact, this is my second attempt at writing this post after my computer was bricked last week. I am now compulsively saving all of my work in the cloud.

Challenges (and Possible Resolutions) to Reproducibility

Despite the great promise of leveraging code or other repeatable methods to make scientific research and data science projects more reproducible, there are still obstacles that can make reproducibility challenging.

One of these obstacles is computer environments. When you share a script, you can’t necessarily guarantee that the person receiving the script has all the same environmental components that you do – the same version of Python or R, for example. This can result in the outcomes of your documented and scripted process turning out differently on a different machine.

This use case is exactly what Docker containers, Cloud Services like AWS, and Python virtual environments were created for. By sharing a mini-environment that supports your process, you’re taking an extras step in ensuring your process is reproducible. This type of extra step is particularly important when you’re working with collaborators (which, arguably, is important for replicability).

Why Replicability Matters

Replicability is often the goal of scientific research. We turn to science for shared, empirical facts, and truth. When our findings can be supported or confirmed by other labs, with different data or slightly different processes, we know we’ve found something potentially meaningful or real.

Replicability is much, much harder to guarantee than reproducibility, but there are also practices researchers engage in, like p-hacking, which make expecting your results to replicate unreasonable.

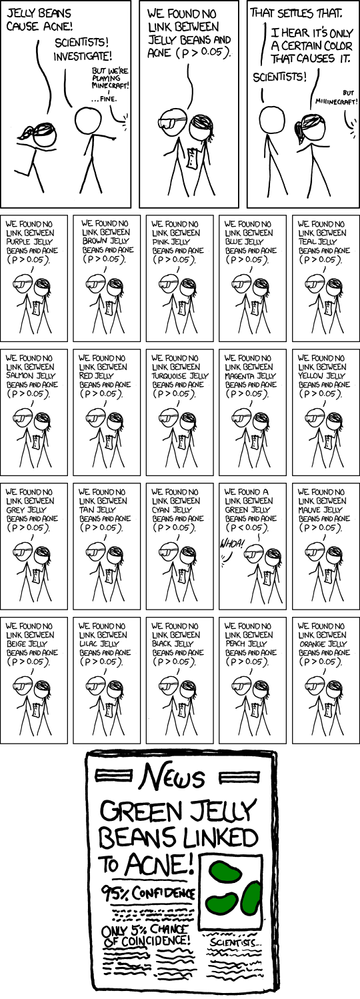

Significant: https://xkcd.com/882/

P-Hacking

P-hacking (also known as data dredging or data fishing) is the process in which a scientist or corrupt statistician will run numerous statistical tests on a data set until a “statistically significant” relationship (usually defined as p < 0.05) is found.

You can read more about p-hacking (and also play with a neat interactive app demonstrating how it works) in the article Science Isn’t Broken published by FiveThirtyEight. This video from CrashCourseStatistics on YouTube is also great.

Often, p-hacking isn’t done out of malice. There are a variety of incentives, particularly in academic research, that drive researchers to manipulate their data until they find an interesting outcome. It’s also natural to try to find data that supports your hypothesis. As a scientist or analyst, you have to make a large number of decisions on how to handle different aspects of your analysis – ranging from removing (or keeping) outliers, to which predictor variables to include, transform, or remove. P-hacking is often a result of specific researcher bias - you believe something works a certain way, so you torture your data until it confesses what you “know” to be the truth. It is not uncommon for researchers to fall in love with their hypothesis and (consciously or unconsciously) manipulate their data until they are proven right.

Setting up Your Project to Replicate

Although replicability is much more difficult to ensure than reproducibility, there are best practices you can employ as a data scientist to set your findings up for success in the world at large.

One relatively easy and concrete thing you can do in data science projects is to make sure you don't overfit your model; verify this by using a holdout data set for evaluation or leveraging cross-validation. Overfitting is when your model picks up on random variation in the training dataset instead of finding a "real" relationship between variables. This random variation will not exist outside of the sampled training data, so evaluating your model with a different data set can help you catch this.

Another thing that can help with replication is ensuring you are working with a sufficiently large data set. There are no hard and fast rules on when a data set is "big enough" - it will entirely depend on your use case and the type of modeling algorithm you are working with.

In addition to a strong understanding of statistical analysis and getting a sufficiently large sample size, I think the single most important thing you can do to increase the chances that your research or project will replicate is getting more people involved in developing or reviewing your project.

Getting a diverse team involved in a study helps mitigate the risk of bias because you are incorporating different viewpoints into setting up your question and evaluating your data. In this same sense, getting different types of researchers, for example, including a statistician in the problem formulation stage of a life sciences study, can help ensure different issues and perspectives are accounted for, and that the resulting research is more rigorous.

In the same sense, accepting that research is an iterative process, and being open to failure as an outcome is critical.

Above all, it is important to acknowledge uncertainty, and that a successful outcome can be finding that the data you have can't answer the question you're asking, or that the thing you suspected isn't being supported by the data. Most scientific experiments end in "failure," and in many ways, this failure can be considered a successful outcome if you did a robust analysis. Even when you do find "significant" relationships or results, it can be difficult to make guarantees about how the model will perform in the future or on data that is sampled from different populations. It is important to acknowledge the limitations or possible shortcomings of your analysis. Acknowledging the inherent uncertainty in the scientific method and data science and statistics will help you communicate your findings realistically and correctly.

Reproducibility and Replicability in Data Science

A principle of science is that it is self-correcting. If a study gets published or accepted that turns out to be disproven, it will be corrected by subsequent research, and as time moves forward, science can converge on “the truth.” Whether or not this currently happens in practice may be a little questionable, but the good news is that the internet seems to be helping. We are more connected to knowledge and one another than ever before - and because of this, there is an opportunity for science to self-correct and rigorously test, self-correct, and circulate findings.

Data science can be seen as a field of scientific inquiry in its own right. The work we do as data scientists should be held to the same levels of rigor as any other field of inquiry and research. It is our responsibility as data scientists to hold ourselves to these standards. Data science has also, in a lot of ways, been set up for success in these areas. Our work is computer-driven (and therefore reproducible) by nature, as well as interdisciplinary – meaning we should be working in teams with people that have different skills and backgrounds than ourselves.

Original. Reposted with permission.

Bio: A geographer by training and a data geek at heart, Sydney Firmin strongly believes that data and knowledge are most valuable when they can be clearly communicated and understood. In her current role as a Data Scientist on the Data Science Innovation team at Alteryx, she develops data science tools for a wide audience of users.

Related: