More Data Science Cheatsheets

It's time again to look at some data science cheatsheets. Here you will find a short selection of such resources, catering to different levels of existing knowledge and different breadths of topical interest.

We recently realized that we hadn't brought you any data science cheatsheets in a while. And it's not for lack of availability; data science cheatsheets are everywhere, ranging from the introductory to the advanced, and covering everything from algorithms to statistics to interview tips, and beyond.

But what makes a good cheatsheet? What makes a cheatsheet worthy of being singled out as a particularly good one? It's difficult to put your finger on precisely what makes a good cheatsheet, but one that conveys essential information concisely, whether that information is specific or general in nature, is definitely a good start. That is what makes today's candidates noteworthy. So read on for four curated, complementary cheatsheets to assist you in your data science learning or review.

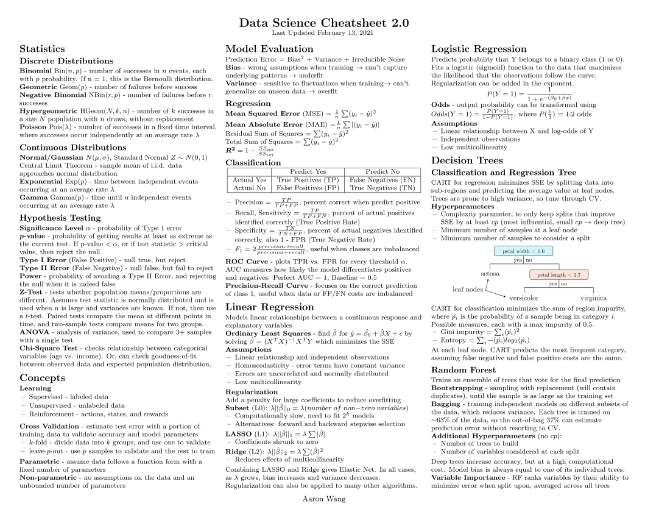

First up is Aaron Wang's Data Science Cheatsheet 2.0, a four-page compilation of statistical abstractions, fundamental machine learning algorithms, and deep learning topics and concepts. It is not meant to be exhaustive; instead, it is intended as a quick reference for interview preparation, exam review, and anything else requiring a similar depth of review. The author notes that while those with a basic understanding of statistics and linear algebra will benefit most from this resource, beginners should be able to glean useful information from it as well.

Screenshot from Aaron Wang's Data Science Cheatsheet 2.0

Our next cheatsheet is the one on which Aaron Wang's resource is based: Maverick Lin's Data Science Cheatsheet (Wang's labeling of his own as 2.0 is a direct nod to Lin's "original"). Lin's cheatsheet is more in-depth than Wang's (Wang's choice to go less deep appears intentional, and makes his a useful alternative), covering more fundamental data science concepts such as data cleaning, the idea of modeling, doing "big data" with Hadoop, SQL, and even the basics of Python.

This will clearly appeal to those more firmly in the "beginner" camp, and it does a good job of whetting appetites and making readers aware of the breadth of the field of data science and the many concepts it encompasses. This is definitely another solid resource, especially if the reader is a newcomer to data science.

Screenshot from Maverick Lin's Data Science Cheatsheet

Moving further back in time, in search of the inspiration for Lin's cheatsheet, we come across William Chen's Probability Cheatsheet 2.0. Chen's cheatsheet has garnered much attention and praise over the years, so you may already have come across it. As its name suggests, it has a different focus: it is a crash course in, or a deep-dive review of, probability concepts, including a variety of distributions, covariance and transformations, conditional expectation, Markov chains, various important formulas, and much more.

At 10 pages, the cheatsheet covers a considerable breadth of probability topics. But don't let that deter you; Chen's ability to boil concepts down to their essential bullet points and explain them in plain English without sacrificing the essentials is noteworthy. It is also rich in explanatory visualizations, something quite useful when space is limited and the desire to be concise is strong.

Chen's compilation is a quality resource and well worth your time. If you are a beginner, or someone interested in a full review, I would suggest working through these resources in the reverse order of how they were presented here: from Chen's cheatsheet, to Lin's, and finally to Wang's, building on concepts as you go.

Screenshot from William Chen's Probability Cheatsheet 2.0

One final resource I'm including here, though not technically a cheatsheet, is Rishabh Anand's Machine Learning Bites. The site bills itself as "[a]n interview guide on common Machine Learning concepts, best practices, definitions, and theory," and Anand has compiled a wide-ranging collection of knowledge "bites" whose usefulness definitely extends beyond the interview preparation it was originally intended for. Topics covered include:

- Model Scoring Metrics

- Parameter Sharing

- k-Fold Cross Validation

- Python Data Types

- Improving Model Performance

- Computer Vision Models

- Attention and its Variants

- Handling Class Imbalance

- Computer Vision Glossary

- Vanilla Backpropagation

- Regularization

- References

Screenshot from Machine Learning Bites

While machine learning "concepts, best practices, definitions, and theory" are touched on, as the resource's self-description promises, these "bites" are geared toward the practical, which makes the site complementary to much of the material covered in the three previously mentioned cheatsheets. If I were looking to cover all of the material in all four resources in this post, I would certainly look at this one after the other three.

So there you have four cheatsheets (or three cheatsheets and one cheatsheet-adjacent resource) to use for your learning or review. Hopefully something here is useful for you, and I invite anyone to share the cheatsheets they have found useful in the comments below.