Why Use k-fold Cross Validation?

Generalizing things is easy for us humans, however, it can be challenging for Machine Learning models. This is where Cross-Validation comes into the picture.

Image by Editor

Generalization is a frequent term that is used in conversations about Machine Learning. It refers to how able the model can adapt to new, unseen data and how it works effectively using various inputs. It is understandable to say that if new unseen data is inputted into a model, if this unseen data has similar characteristics to the training data, it will perform well.

Generalizing things is easy to us humans, however it can be challenging to Machine Learning models. This is where Cross-Validation comes into the picture.

Cross-Validation

You may see cross-validation also being referred to as rotation estimation and/or out-of-sample testing. The overall aim of Cross-Validation is to use it as a tool to evaluate machine learning models, by training a number of models on different subsets of the input data.

Cross-validation can be used to detect overfitting in a model which infers that the model is not effectively generalizing patterns and similarities in the new inputted data.

A typical Cross-Validation workflow

In order to perform cross-validation, the following steps are typically taken:

- Split the dataset into training data and test data

- The parameters will undergo a Cross-Validation test to see which are the best parameters to select.

- These parameters will then be implemented into the model for retraining

- Final evaluation will occur and this will depend if the cycle has to go again, depending on the accuracy and the level of generalization that the model performs.

There are different types of Cross-Validation techniques

- Hold-out

- K-folds

- Leave-one-out

- Leave-p-out

However, we will be particularly focusing on K-folds.

K-fold Cross-Validation

K-fold Cross-Validation is when the dataset is split into a K number of folds and is used to evaluate the model's ability when given new data. K refers to the number of groups the data sample is split into. For example, if you see that the k-value is 5, we can call this a 5-fold cross-validation. Each fold is used as a testing set at one point in the process.

K-fold Cross-Validation Process:

- Choose your k-value

- Split the dataset into the number of k folds.

- Start off with using your k-1 fold as the test dataset and the remaining folds as the training dataset

- Train the model on the training dataset and validate it on the test dataset

- Save the validation score

- Repeat steps 3 – 5, but changing the value of your k test dataset. So we chose k-1 as our test dataset for the first round, we then move onto k-2 as the test dataset for the next round.

- By the end of it you would have validated the model on every fold that you have.

- Average the results that were produced in step 5 to summarize the skill of the model.

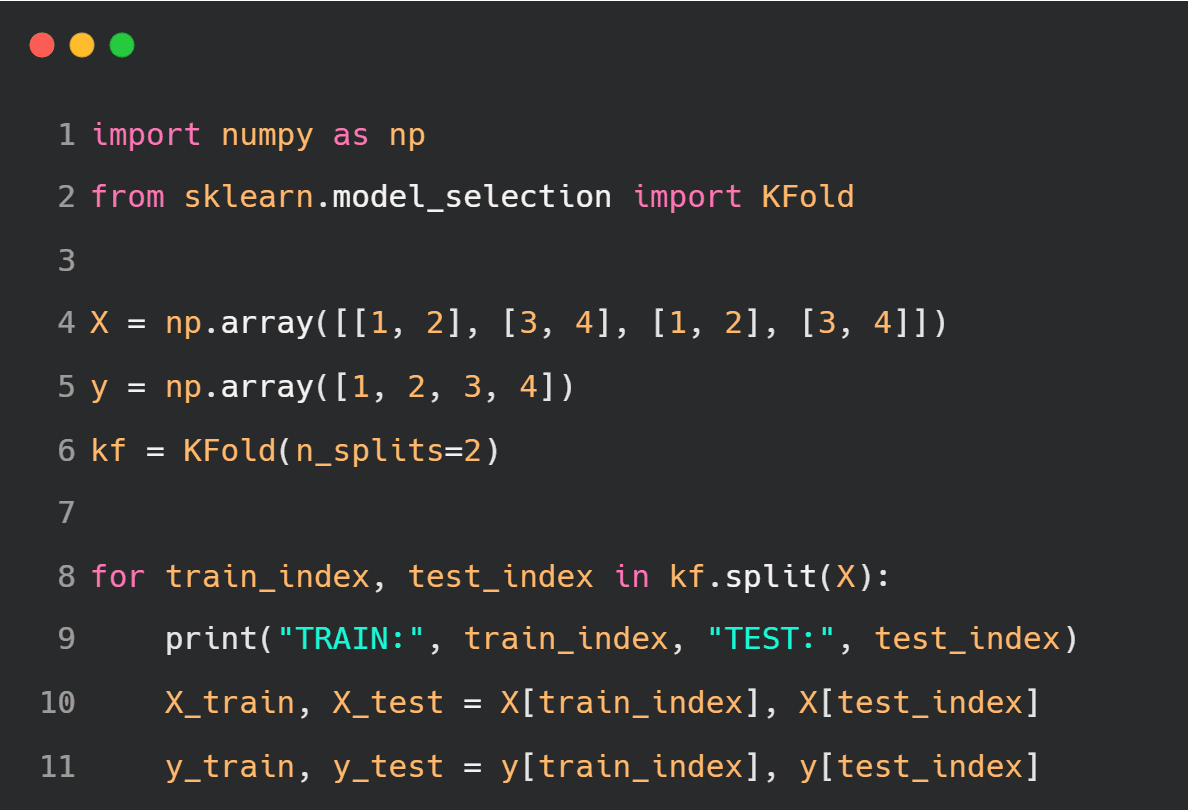

You can easily implement this using sklearn.model_selection.KFold

import numpy as np

from sklearn.model_selection import KFold

X = np.array([[1, 2], [3, 4], [1, 2], [3, 4]])

y = np.array([1, 2, 3, 4])

kf = KFold(n_splits=2)

for train_index, test_index in kf.split(X):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

Why Should I Use K-fold Cross-Validation?

I am going to break down the reasons below as to why you should use K-fold Cross Validation

Make use of your data

We have a lot of readily available data that can explain a lot of things and help us identify hidden patterns. However, if we only have a small dataset, splitting it into a training and test dataset at a 80:20 ratio respectively doesn’t seem to do much for us.

However, when using K-fold cross validation, all parts of the data will be able to be used as part of the testing data. This way, all of our data from our small dataset can be used for both training and testing, allowing us to better evaluate the performance of our model.

More available metrics to help evaluate

Let’s refer back to the example of splitting your dataset into training and testing as a 80:20 ratio or a train-test split - this provides us with only one result to refer to in our evaluation of the model. We are not sure about the accuracy of this result, if it’s due to chance, the level of bias, or if it actually performed well.

However, when using k-fold cross validation, we have more models that will be producing more results. For example, if we chose our k value at 10, we would have 10 results to use in our evaluation of the model's performance.

If we were using accuracy as our measurement; having 10 different accuracy results where all of the data was used in the test phase is always going to be better and more reliable than using one accuracy result that was produced by a train-test split - where all the data wasn’t used in the test phase.

You would have more trust and confidence in your model's performance if the accuracy outputs were 94.0, 92.8, 93.0, 97.0, and 94.5 in a 5-fold cross validation than a 93.0 accuracy in a train-test split. This proves to us that our algorithm is generalizing and actively learning and is providing consistent reliable outputs.

Better performance using dependent data

In a random train-test split, we assume that the data inputs are independent. Let’s further expand on this. Let’s say we are using a random train-test split on a speech recognition dataset for British English speakers who reside from London. There are 5 speakers, with 250 recording each. Once the model performs a random train-test split, both the training and testing dataset will learn from the same speaker, which will be saying the same dialogue.

Yes this does improve the accuracy and boost the performance of the algorithms, however, what about when a new speaker is introduced to the model? How does the model perform then?

Using K-fold cross validation will allow you to train 5 different models, where in each model you are using one of the speakers for the testing dataset and the remaining for the training dataset. This way, not only can we evaluate the performance of our model, but our model will be able to perform better on new speakers and can be deployed in production to produce similar performance for other tasks.

Why is 10 Such a Desirable k value?

Choosing the right k-value is important as it can affect your level of accuracy, variance, bias and cause you to misinterpreted the overall performance of your model.

The simplest way to choose your k value is by equating it to your ‘n’ value, also known as leave-one-out cross-validation. n represents the size of the dataset which allows for each test sample the opportunity to be used in the test data or hold out data.

However, through various experimentations from Data Scientists, Machine Learning Engineers, and Researchers they have found that choosing a k-value of 10 has proven to provide a low bias and a modest variance.

Kuhn & Johnson spoke about their choice of k value in their book Applied Predictive Modeling.

“The choice of k is usually 5 or 10, but there is no formal rule. As k gets larger, the difference in size between the training set and the resampling subsets gets smaller. As this difference decreases, the bias of the technique becomes smaller (i.e., the bias is smaller for k=10 than k= 5). In this context, the bias is the difference between the estimated and true values of performance”

Let’s take this context and put it in an example:

Let's say we have a data set where N = 100

- If we chose our k value = 2, our subset size = 50 and the difference = 50

- If we chose our k value k = 4, our subset size = 75 and the difference = 25

- If we chose our k value = 10, our subset size = 90 and the difference = 10

So therefore, as the value of k increases, the difference between the original data set and the cross-validation subsets becomes smaller.

It is also stated that choosing k= 10 is more computationally efficient, as the larger the values of k gets it becomes more computationally impractical. Small values will also be deemed as computationally efficient, however they pose the possibility of high bias.

Conclusion

Cross-Validation is an important tool that every Data Scientist should be using or very proficient in at least. It allows you to make better use of all your data as well as providing Data Scientists, Machine Learning Engineers and Researchers with a better understanding of the performance of the algorithm.

Having confidence in your model is important to future deployment and trust that the model will be effective and perform well.

Nisha Arya is a Data Scientist and Freelance Technical Writer. She is particularly interested in providing Data Science career advice or tutorials and theory based knowledge around Data Science. She also wishes to explore the different ways Artificial Intelligence is/can benefit the longevity of human life. A keen learner, seeking to broaden her tech knowledge and writing skills, whilst helping guide others.