How to Efficiently Scale Data Science Projects with Cloud Computing

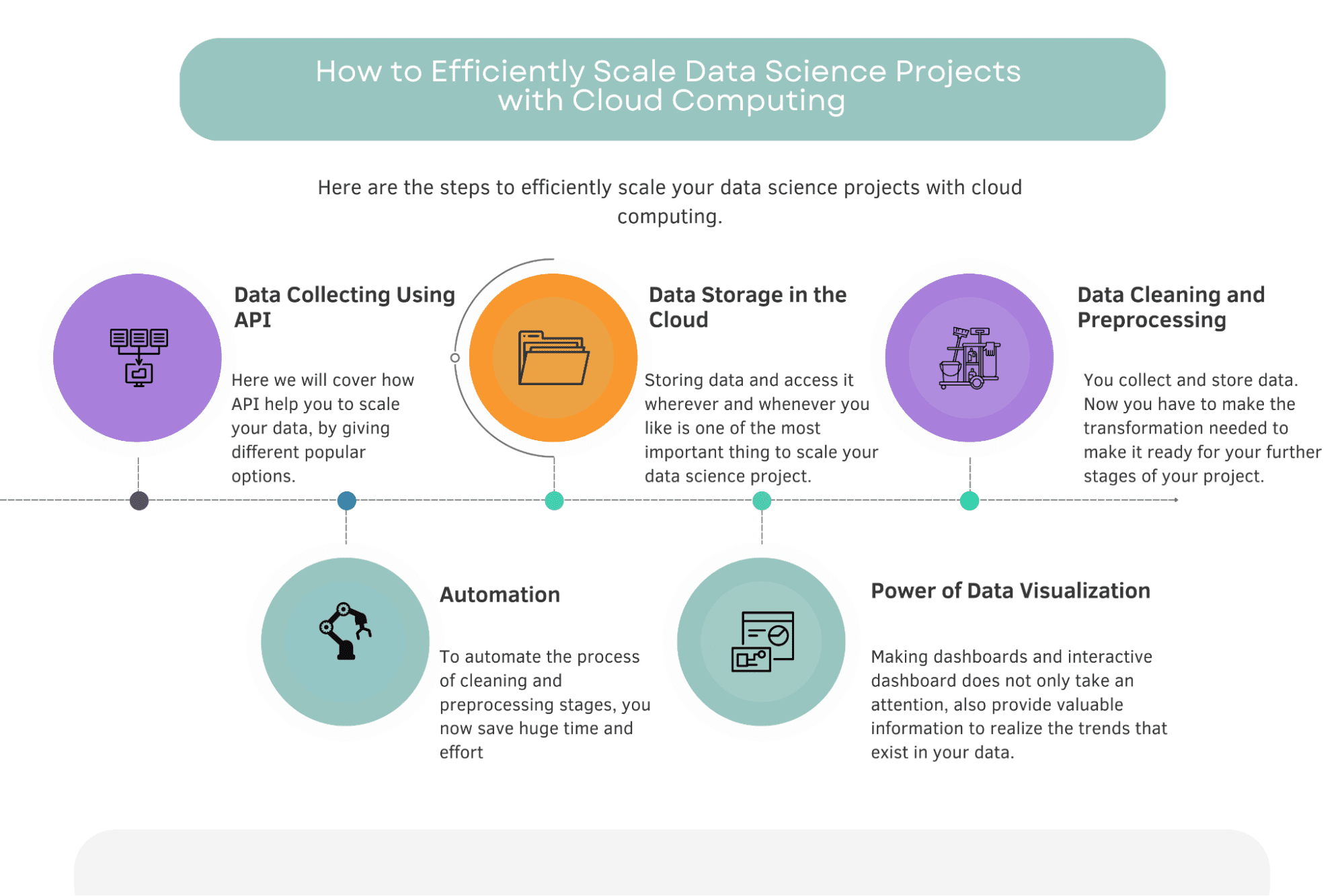

This article discusses the key components that contribute to the successful scaling of data science projects. It covers how to collect data using APIs, how to store data in the cloud, how to clean and process data, how to visualize data, and how to harness the power of data visualization through interactive dashboards.

Image by Author

It cannot be emphasized enough how crucial data is in making informed decisions.In today’s world, businesses rely on data to drive their strategies, optimize their operations, and gain a competitive edge.

However, as the volume of data grows exponentially, organizations or even developers in personal projects might face the challenge of efficiently scaling their data science projects to handle this deluge of information.

To address this issue, we will discuss five key components that contribute to the successful scaling of data science projects:

- Data Collection using APIs

- Data Storage in the Cloud

- Data Cleaning and Preprocessing

- Automation with Airflow

- Power of Data Visualization

These components are crucial in ensuring that businesses collect more data, and store it securely in the cloud for easy access, clean and process data using pre-written scripts, automate processes, and harness the power of data visualization through interactive dashboards connected to cloud-based storage.

Simply, these are the methods that we will cover in this article to scale your data science projects.

But to understand its importance, let’s take a look at, how you might scale your projects before cloud computing.

Before Cloud Computing

Image by Author

Before cloud computing, businesses had to rely on local servers to store and manage their data.

Data scientists had to move data from central servers to their systems for analysis, which was a time-consuming and complex process. Setting up and maintaining on-premise servers, can be highly costly and requires ongoing maintenance and backups.

Cloud computing has revolutionized the way businesses handle data by eliminating the need for physical servers and providing scalable resources on demand.

Now, let’s get started with Data Collection, to scale your data science projects.

Image by Author

Data Collection using API

Image by Author

In every data project the first stage will be data collection.

Feeding your project and model with constant, up-to-date data is crucial for increasing your model's performance and ensuring its relevance.

One of the most efficient ways to collect data is through API, which allows you to programmatically access and retrieve data from various sources.

APIs have become a popular method for data collection due to their ability to provide data from numerous sources including social media platforms or financial institutions and other web services.

Let’s cover different use cases to see how this can be done.

Youtube API

In this video, coding was done on Google Colab and testing was conducted using the Requests Library.

The YouTube API was used to retrieve data, and the response from making an API call was obtained.

The data was found to be stored in the 'items' key.

The data was parsed through, and a loop was created to go through the items.

A second API call was made, and the data was saved to a Pandas DataFrame.

This is a great example of using API in your data science project.

Quandl's API

Another example is the Quandl API, which can be used to access financial data.

In Data Vigo's video, here, he explains how to install Quandl using Python, find the desired data on Quandl's official website, and access the financial data using the API.

This approach allows you to easily feed your financial data project with the necessary information.

Rapid API

As you can see, there are many different options available to scale up your data by using different APIs. To discover the right API for your needs, you can explore platforms like RapidAPI, which offers a wide range of APIs covering various domains and industries. By leveraging these APIs, you can ensure that your data science project is always supplied with the latest data, enabling you to make well-informed, data-driven decisions.

Data Storage in the Cloud

Image by Author

Now, you collect your data, but where to store it?

The need for secure and accessible data storage is paramount in a data science project.

Ensuring that your data is both safe from unauthorized access and easily available to authorized users allows for smooth operations and efficient collaboration among team members.

Cloud-based databases have emerged as a popular solution for addressing these requirements.

Some popular cloud-based databases include Amazon RDS, Google Cloud SQL, and Azure SQL Database.

These solutions can handle large volumes of data.

Notable applications that utilize these cloud-based databases include ChatGPT, which runs on Microsoft Azure, demonstrating the power and effectiveness of cloud storage.

Let’s look at this use case.

Google Cloud SQL

To set up a Google Cloud SQL instance, follow these steps.

- Go to the Cloud SQL Instances page.

- Click "Create instance."

- Click "Choose SQL Server."

- Enter an ID for your instance.

- Enter a password.

- Select the database version you want to use.

- Select the region where your instance will be hosted.

- Update the settings according to your preferences.

For more detailed instructions, refer to the official Google Cloud SQL documentation. Additionally, you can read this article that explains Google Cloud SQL for practitioners, providing a comprehensive guide to help you get started.

By utilizing cloud-based databases, you can ensure that your data is securely stored and easily accessible, enabling your data science project to run smoothly and efficiently.

Data Cleaning and Preprocessing

Image by Author

You collect your data and store it in the cloud. Now, it is time to transform your data for further stages.

Because raw data often contains errors, inconsistencies, and missing values that can negatively impact the performance and accuracy of your models.

Proper data cleaning and preprocessing are essential steps to ensure that your data is ready for analysis and modeling.

Pandas and NumPy

Creating a script for cleaning and preprocessing involves the use of programming languages like Python and leveraging popular libraries such as Pandas and NumPy.

Pandas is a widely used library that offers data manipulation and analysis tools, while NumPy is a fundamental l?brary for numerical computing in Python. Both libraries provide essential functions for cleaning and preprocessing data, including handling missing values, filtering data, reshaping datasets, and more.

Pandas and NumPy are crucial in data cleaning and preprocessing because they offer a robust and efficient way to manipulate and transform data into a structured format, that can be easily consumed by machine learning algorithms and data visualization tools.

Once you have created a data cleaning and preprocessing script, you can deploy it on the cloud for automation. This ensures that your data is consistently and automatically cleaned and preprocessed, streamlining your data science project.

Data Cleaning on AWS Lambda

To deploy a data cleaning script on AWS Lambda, you can follow the steps outlined in this beginner example on processing a CSV file using AWS Lambda. This example demonstrates how to set up a Lambda function, configure the necessary resources, and execute the script in the cloud.

By leveraging the power of cloud-based automation and the capabilities of libraries like Pandas and NumPy, you can ensure that your data is clean, well-structured, and ready for analysis, ultimately leading to more accurate and reliable insights from your data science project.

Automation

Image by Author

Now, how can we automate this process?

Apache Airflow

Apache Airflow is well-suited for this particular task as it enables the programmable creation, scheduling, and monitoring of workflows.

It allows you to define complex, multi-stage pipelines using Python code, making it an ideal tool for automating data collection, cleaning, and preprocessing tasks in data analytics projects.

Automating a COVID Data Analysis using Apache Airflow

Let’s see its usage in the example project.

Example project: Automating a COVID data analysis using Apache Airflow.

In this example project, here, the author demonstrated how to automate a COVID data analysis pipeline using Apache Airflow.

- Create a DAG (Directed Acyclic Graph) file

- Load data from the data source.

- Clean and preprocess the data.

- Load the processed data into BigQueryç

- Send an email notification:

- Upload the DAG to Apache Airflow

By following these steps, you can create an automated pipeline for COVID data analysis using Apache Airflow.

This pipeline will handle data collection, cleaning, preprocessing, and storage, while also sending notifications upon successful completion.

Automation with Airflow streamlines your data science project, ensuring that your data is consistently processed and updated, enabling you to make well-informed decisions based on the latest information.

Power of Data Visualization

Image by Author

Data visualization plays a crucial role in data science projects by transforming complex data into easily understandable visuals, enabling stakeholders to quickly grasp insights, identify trends and make more informed decisions based on the presented information.

Simply put, it will offer you information in interactive ways.

There are several tools available for creating interactive dashboards including Tableau, Power BI, and Google Data Studio.

Each of these tools offers unique features and capabilities to help users create visually appealing and informative dashboards.

Connecting Dashboard to your cloud-based database

To integrate cloud data into a dashboard, start by choosing a cloud-based data integration tool that aligns with your needs. Connect the tool to your preferred cloud data source and map the data fields you want to display on your dashboard.

Next, select the appropriate visualization tools to represent your data in a clean and concise manner. Enhance data exploration by incorporating filters, grouping options, and drill-down capabilities.

Ensure that your dashboard automatically refreshes the data or configure manual updates as needed.

Lastly, test the dashboard thoroughly for accuracy and usability, making any necessary adjustments to improve the user experience.

Connecting Tableau to your cloud-based database - use case

Tableau offers seamless integration with cloud-based databases, making it simple to connect your cloud data to your dashboard.

First, identify the type of database you are using, as Tableau supports various database technologies such as Amazon Web Services(AWS), Google Cloud, and Microsoft Azure.

Then, establish a connection between your cloud database and Tableau, typically using API keys for secure access.

Tableau also provides a variety of cloud-based data connectors that can be easily configured to access data from multiple cloud sources.

For a step-by-step guide on deploying a single Tableau server on AWS, refer to this detailed documentation.

Alternatively, you can explore a use case that demonstrates the connection between Amazon Athena and Tableau, complete with screenshots and explanations.

Conclusion

The benefits of scaling data science projects with cloud computing include improved resource management, cost savings, flexibility, and the ability to focus on data analysis rather than infrastructure management.

By embracing cloud computing technologies and integrating them into your data science projects, you can enhance the scalability, efficiency, and overall success of your data-driven initiatives.

Improved decision-making and insights from data are achievable too by adopting cloud computing technologies in your data science projects. As you continue to explore and adopt cloud-based solutions, you'll be better equipped to handle the ever-growing volume and complexity of data.

This will ultimately empower your organization to make smarter, data-driven decisions based on the valuable insights derived from well-structured and efficiently managed data pipelines.

In this article, we discussed the importance of data collection using APIs and explored various tools and techniques to streamline data storage, cleaning, and preprocessing in the cloud. We also covered the powerful impact of data visualization in decision-making and highlighted the benefits of automating data pipelines using Apache Airflow.

Embracing the benefits of cloud computing for scaling your data science projects will enable you to fully harness the potential of your data and drive your organization towards success in the increasingly competitive landscape of data-driven industries.

Nate Rosidi is a data scientist and in product strategy. He's also an adjunct professor teaching analytics, and is the founder of StrataScratch, a platform helping data scientists prepare for their interviews with real interview questions from top companies. Connect with him on Twitter: StrataScratch or LinkedIn.