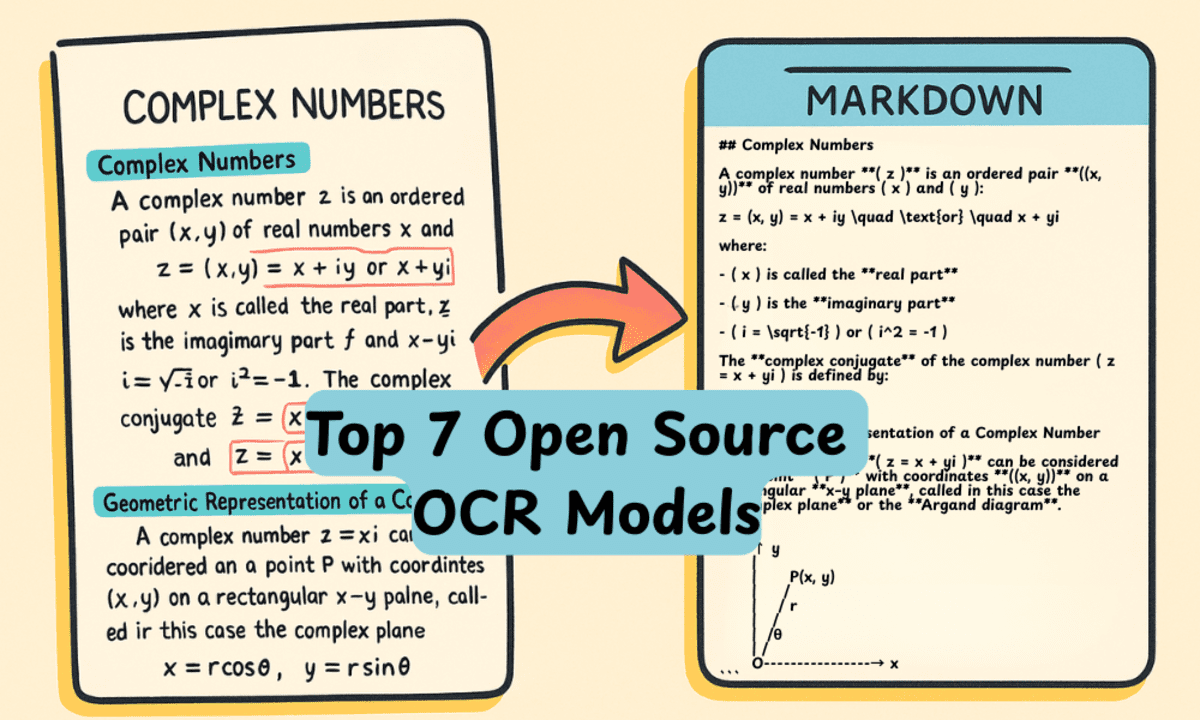

Top 7 Open Source OCR Models

Best OCR and vision language models you can run locally that transform documents, tables, and diagrams into flawless markdown copies with benchmark-crushing accuracy.

Image by Author

# Introduction

OCR (Optical Character Recognition) models are gaining new recognition every day. I am seeing new open-source models pop up on Hugging Face that have crushed previous benchmarks, offering better, smarter, and smaller solutions.

Gone are the days when uploading a PDF meant getting plain text with lots of issues. We now have complete transformations, new AI models that understand documents, tables, diagrams, sections, and different languages, converting them into highly accurate markdown format text. This creates a true 1-to-1 digital copy of your text.

In this article, we will review the top 7 OCR models that you can run locally without any issues to parse your images, PDFs, and even photos into perfect digital copies.

# 1. olmOCR 2 7B 1025

olmOCR-2-7B-1025 is a vision-language model optimized for optical character recognition on documents.

Released by the Allen Institute for Artificial Intelligence, the olmOCR-2-7B-1025 model is fine-tuned from Qwen2.5-VL-7B-Instruct using the olmOCR-mix-1025 dataset and further enhanced with GRPO reinforcement learning training.

The model achieves an overall score of 82.4 on the olmOCR-bench evaluation, demonstrating strong performance on challenging OCR tasks including mathematical equations, tables, and complex document layouts.

Designed for efficient large-scale processing, it works best with the olmOCR toolkit which provides automated rendering, rotation, and retry capabilities for handling millions of documents.

Here are the top five key features:

- Adaptive Content-Aware Processing: Automatically classifies document content types including tables, diagrams, and mathematical equations to apply specialized OCR strategies for enhanced accuracy

- Reinforcement Learning Optimization: GRPO RL training specifically enhances accuracy on mathematical equations, tables, and other difficult OCR cases

- Excellent Benchmark Performance: Scores 82.4 overall on olmOCR-bench with strong results across arXiv documents, old scans, headers, footers, and multi-column layouts

- Specialized Document Processing: Optimized for document images with longest dimension of 1288 pixels and requires specific metadata prompts for best results

- Scalable Toolkit Support: Designed to work with the olmOCR toolkit for efficient VLLM-based inference capable of processing millions of documents

# 2. PP OCR v5 Server Det

PaddleOCR VL is an ultra-compact vision-language model specifically designed for efficient multilingual document parsing.

Its core component, PaddleOCR-VL-0.9B, integrates a NaViT-style dynamic resolution visual encoder with the lightweight ERNIE-4.5-0.3B language model to achieve state-of-the-art performance while maintaining minimal resource consumption.

Supporting 109 languages including Chinese, English, Japanese, Arabic, Hindi, and Thai, the model excels at recognizing complex document elements such as text, tables, formulas, and charts.

Through comprehensive evaluations on OmniDocBench and in-house benchmarks, PaddleOCR-VL demonstrates superior accuracy and fast inference speeds, making it highly practical for real-world deployment scenarios.

Here are the top five key features:

- Ultra-Compact 0.9B Architecture: Combines a NaViT-style dynamic resolution visual encoder with ERNIE-4.5-0.3B language model for resource-efficient inference while maintaining high accuracy

- State-of-the-Art Document Parsing: Achieves leading performance on OmniDocBench v1.5 and v1.0 for overall document parsing, text recognition, formula extraction, table understanding, and reading order detection

- Extensive Multilingual Support: Recognizes 109 languages covering major global languages and diverse scripts including Cyrillic, Arabic, Devanagari, and Thai for truly global document processing

- Comprehensive Element Recognition: Excels at identifying and extracting text, tables, mathematical formulas, and charts including complex layouts and challenging content like handwritten text and historical documents

- Flexible Deployment Options: Supports multiple inference backends including native PaddleOCR toolkit, transformers library, and vLLM server for optimized performance across different deployment scenarios

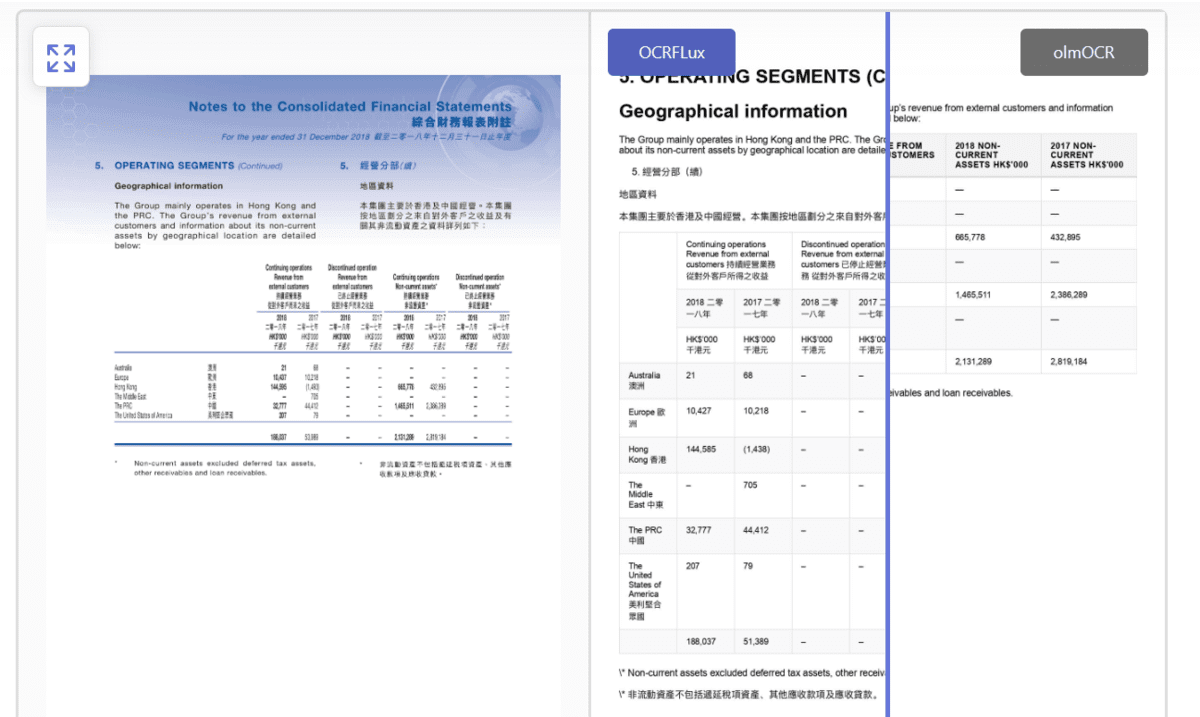

# 3. OCRFlux 3B

OCRFlux-3B is a preview release of a multimodal large language model fine-tuned from Qwen2.5-VL-3B-Instruct for converting PDFs and images into clean, readable Markdown text.

The model leverages private document datasets and the olmOCR-mix-0225 dataset to achieve superior parsing quality.

With its compact 3 billion parameter architecture, OCRFlux-3B can run efficiently on consumer hardware like the GTX 3090 while supporting advanced features like native cross-page table and paragraph merging.

The model achieves state-of-the-art performance on comprehensive benchmarks and is designed for scalable deployment via the OCRFlux toolkit with vLLM inference support.

Here are the top five key features:

- Exceptional Single-Page Parsing Accuracy: Achieves an Edit Distance Similarity of 0.967 on OCRFlux-bench-single, significantly outperforming olmOCR-7B-0225-preview, Nanonets-OCR-s, and MonkeyOCR

- Native Cross-Page Structure Merging: First open-source project to natively support detecting and merging tables and paragraphs that span multiple pages, achieving 0.986 F1 score on cross-page detection

- Efficient 3B Parameter Architecture: Compact model design enables deployment on GTX 3090 GPUs while maintaining high performance through vLLM-optimized inference for processing millions of documents

- Comprehensive Benchmarking Suite: Provides extensive evaluation frameworks including OCRFlux-bench-single and cross-page benchmarks with manually labeled ground truth for reliable performance measurement

- Scalable Production-Ready Toolkit: Includes Docker support, Python API, and a complete pipeline for batch processing with configurable workers, retries, and error handling for enterprise deployment

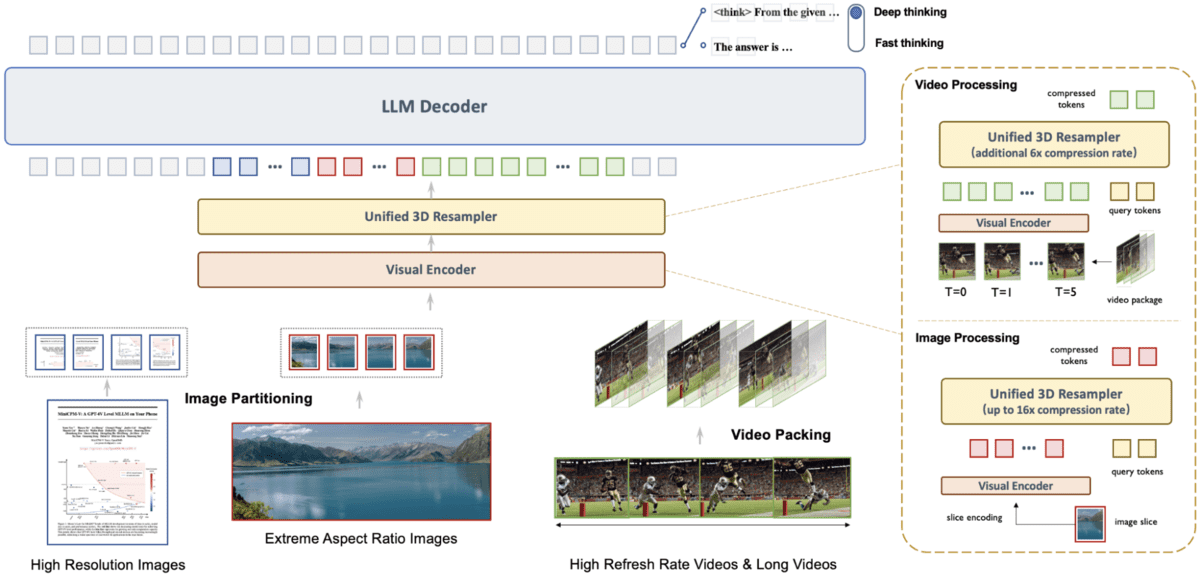

# 4. MiniCPM-V 4.5

MiniCPM-V 4.5 is the latest model in the MiniCPM-V series, offering advanced optical character recognition and multimodal understanding capabilities.

Built on Qwen3-8B and SigLIP2-400M with 8 billion parameters, this model delivers exceptional performance for processing text within images, documents, videos, and multiple images directly on mobile devices.

It achieves state of the art results across comprehensive benchmarks while maintaining practical efficiency for everyday applications.

Here are the top five key features:

- Exceptional Benchmark Performance: State of the art vision language performance with a 77.0 average score on OpenCompass, surpassing larger models like GPT-4o-latest and Gemini-2.0 Pro

- Revolutionary Video Processing: Efficient video understanding using a unified 3D-Resampler that compresses video tokens 96 times, enabling high-FPS processing up to 10 frames per second

- Flexible Reasoning Modes: Controllable hybrid fast and deep thinking modes for switching between quick responses and complex reasoning

- Advanced Text Recognition: Strong OCR and document parsing that processes high resolution images up to 1.8 million pixels, achieving leading scores on OCRBench and OmniDocBench

- Versatile Platform Support: Easy deployment across platforms with llama.cpp and ollama support, 16 quantized model sizes, SGLang and vLLM integration, fine tuning options, WebUI demo, iOS app, and online web demo

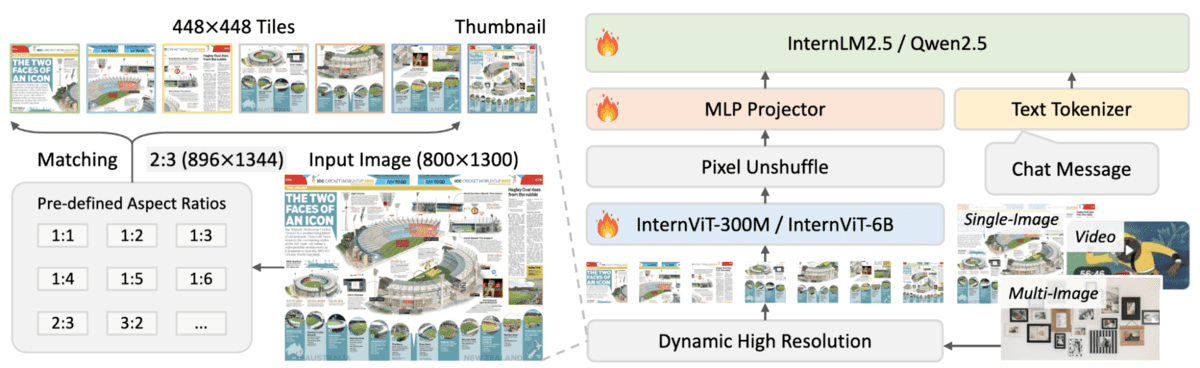

# 5. InternVL 2.5 4B

InternVL2.5-4B is a compact multimodal large language model from the InternVL 2.5 series, combining a 300 million parameter InternViT vision encoder with a 3 billion parameter Qwen2.5 language model.

With 4 billion total parameters, this model is specifically designed for efficient optical character recognition and comprehensive multimodal understanding across images, documents, and videos.

It employs a dynamic resolution strategy that processes visual content in 448 by 448 pixel tiles while maintaining strong performance on text recognition and reasoning tasks, making it suitable for resource constrained environments.

Here are the top five key features:

- Dynamic High Resolution Processing: Handles single images, multiple images, and video frames by dividing them into adaptive 448 by 448 pixel tiles with intelligent token reduction through pixel unshuffle operations

- Efficient Three Stage Training: Features a carefully designed pipeline with MLP warmup, optional vision encoder incremental learning for specialized domains, and full model instruction tuning with strict data quality controls

- Progressive Scaling Strategy: Trains the vision encoder with smaller language models first before transferring to larger ones, using less than one tenth of the tokens required by comparable models

- Advanced Data Quality Filtering: Employs a comprehensive pipeline with LLM based quality scoring, repetition detection, and heuristic rule based filtering to remove low quality samples and prevent model degradation

- Strong Multimodal Performance: Delivers competitive results on OCR, document parsing, chart understanding, multi image comprehension, and video analysis while preserving pure language capabilities through improved data curation

# 6. Granite Vision 3.3 2b

Granite Vision 3.3 2b is a compact and efficient vision-language model released on June 11th, 2025, designed specifically for visual document understanding tasks.

Built upon the Granite 3.1-2b-instruct language model and SigLIP2 vision encoder, this open-source model enables automated content extraction from tables, charts, infographics, plots, and diagrams.

It introduces experimental features including image segmentation, doctags generation, and multi-page document support while offering enhanced safety compared to earlier versions.

Here are the top five key features:

- Superior Document Understanding Performance: Achieves improved scores across key benchmarks including ChartQA, DocVQA, TextVQA, and OCRBench, outperforming previous granite-vision versions

- Enhanced Safety Alignment: Features improved safety scores on RTVLM and VLGuard datasets, with better handling of political, racial, jailbreak, and misleading content

- Experimental Multipage Support: Trained to handle question answering tasks using up to 8 consecutive pages from a document, enabling long context processing

- Advanced Document Processing Features: Introduces novel capabilities including image segmentation and doctags generation for parsing documents into structured text formats

- Efficient Enterprise-Focused Design: Compact 2 billion parameter architecture optimized for visual document understanding tasks while maintaining 128 thousand token context length

# 7. Trocr Large Printed

The TrOCR large-sized model fine-tuned on SROIE is a specialized transformer-based optical character recognition system designed for extracting text from single-line images.

Based on the architecture introduced in the paper "TrOCR: Transformer-based Optical Character Recognition with Pre-trained Models," this encoder-decoder model combines a BEiT-initialized image Transformer encoder with a RoBERTa-initialized text Transformer decoder.

The model processes images as sequences of 16 by 16 pixel patches and autoregressively generates text tokens, making it particularly effective for printed text recognition tasks.

Here are the top five key features:

- Transformer Based Architecture: Encoder-decoder design with image Transformer encoder and text Transformer decoder for end-to-end optical character recognition

- Pretrained Component Initialization: Leverages BEiT weights for image encoder and RoBERTa weights for text decoder for better performance

- Patch Based Image Processing: Processes images as fixed-size 16 by 16 patches with linear embedding and position embeddings

- Autoregressive Text Generation: Decoder generates text tokens sequentially for accurate character recognition

- SROIE Dataset Specialization: Fine-tuned on the SROIE dataset for enhanced performance on printed text recognition tasks

# Summary

Here is a comparison table that quickly summarizes leading open-source OCR and vision-language models, highlighting their strengths, capabilities, and optimal use cases.

| Model | Params | Main Strength | Special Capabilities | Best Use Case |

|---|---|---|---|---|

| olmOCR-2-7B-1025 | 7B | High-accuracy document OCR | GRPO RL training, equation and table OCR, optimized for ~1288px document inputs | Large-scale document pipelines, scientific and technical PDFs |

| PaddleOCR v5 / PaddleOCR-VL | 1B | Multilingual parsing (109 languages) | Text, tables, formulas, charts; NaViT-based dynamic visual encoder | Global multilingual OCR with lightweight, efficient inference |

| OCRFlux-3B | 3B | Markdown-accurate parsing | Cross-page table and paragraph merging; optimized for vLLM | PDF-to-Markdown pipelines; runs well on consumer GPUs |

| MiniCPM-V 4.5 | 8B | State-of-the-art multimodal OCR | Video OCR, support for 1.8MP images, fast and deep-thinking modes | Mobile and edge OCR, video understanding, multimodal tasks |

| InternVL 2.5-4B | 4B | Efficient OCR with multimodal reasoning | Dynamic 448×448 tiling strategy; strong text extraction | Resource-limited environments; multi-image and video OCR |

| Granite Vision 3.3 (2B) | 2B | Visual document understanding | Charts, tables, diagrams, segmentation, doctags, multi-page QA | Enterprise document extraction across tables, charts, and diagrams |

| TrOCR Large (Printed) | 0.6B | Clean printed-text OCR | 16×16 patch encoder; BEiT encoder with RoBERTa decoder | Simple, high-quality printed text extraction |

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master's degree in technology management and a bachelor's degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.