Level 50 Data Scientist: Python Libraries to Know

This article will help you understand the different tools of Data Science used by experts for Data Visualization, Model Building, and Data Manipulation.

Image by Author

Data Science remains one of the hottest job titles in the 21st century. So, it's no wonder there's a lot of curiosity about it. But first, what is Data Science?

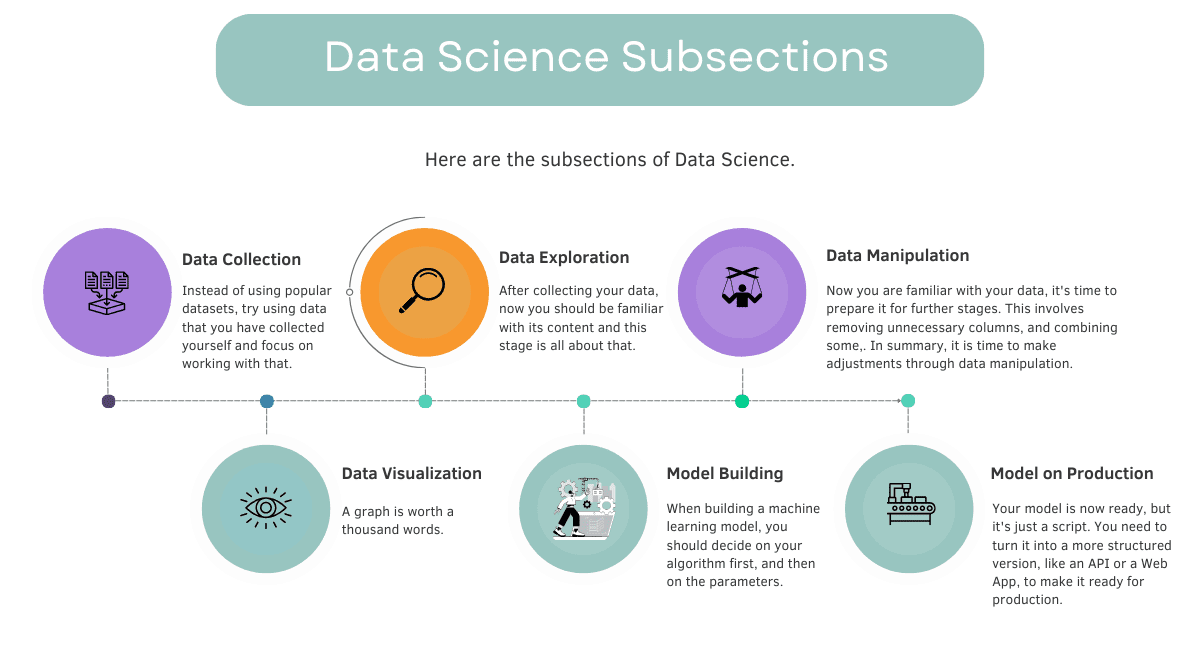

Data Science is a multidisciplinary field that includes different elements from various domains, such as Data Visualization, Model Building, and Data Manipulation.

In this article, we will take a closer look at these elements and explore the Python libraries that let you apply them. Whether you're a pro or consider yourself a beginner, this article will surely expand your knowledge. Let’s get started!

Image by Author

Step 1: Data Collection

Data Collection is the process of gathering information from sources such as the web.

You might see data projects that use synthetic datasets or datasets from Kaggle.

That is fine for beginners, but if you want to land a competitive job, you should go further and collect your own data.

Python offers many options for this; let’s take a closer look at three of them.

Scrapy

Scrapy is a web crawling framework for Python, ideal for large-scale data extraction.

It's more sophisticated than BeautifulSoup, allowing for more complex data collection.

A unique feature of Scrapy is its ability to handle asynchronous requests efficiently, making it faster for large-scale scraping tasks. If you are new, the next one is a better fit for you.

BeautifulSoup

BeautifulSoup is used for parsing HTML and XML documents. It's simpler and more user-friendly than Scrapy, making it ideal for beginners or for simpler scraping tasks.

A distinctive aspect of BeautifulSoup is its flexibility in parsing even poorly formatted HTML.
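The sketch below shows that forgiving parsing in action on HTML whose `<li>` and `<p>` tags are never closed. The HTML snippet and the product fields are made up for illustration; it assumes the `beautifulsoup4` package is installed and uses the built-in `html.parser` backend, so no extra parser is required.

```python
from bs4 import BeautifulSoup

# Messy, hypothetical HTML: the <li> and <p> tags are never closed.
messy_html = """
<ul>
  <li class="item"><span class="name">Laptop</span> <span class="price">$999</span>
  <li class="item"><span class="name">Mouse</span> <span class="price">$25</span>
</ul>
<p>Last updated today
"""

# "html.parser" ships with Python, so lxml or html5lib are not needed here.
soup = BeautifulSoup(messy_html, "html.parser")

products = []
names = soup.find_all("span", class_="name")
prices = soup.find_all("span", class_="price")
for name_tag, price_tag in zip(names, prices):
    products.append({
        "name": name_tag.get_text(strip=True),
        # Strip the currency symbol and convert the price to a number.
        "price": float(price_tag.get_text(strip=True).lstrip("$")),
    })

print(products)
```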

Selenium

Selenium is used primarily for automating web browsers. It is perfect for scraping data from websites that require interaction, like filling out forms, or that load their content with JavaScript.

Its novel feature is the ability to automate and interact with web pages as if a human were browsing, which allows data collection from dynamic web pages.

Step 2: Data Exploration

Now that you have data, you should explore it to understand its features.

SciPy

SciPy is used for scientific and technical computing.

It focuses on more advanced computations than NumPy, offering additional functionality such as optimization, integration, and interpolation.

A unique feature of SciPy is its extensive collection of submodules for different scientific computing tasks.
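As a rough illustration of those submodules, the sketch below uses `scipy.optimize`, `scipy.integrate`, and `scipy.interpolate` on toy functions chosen so the answers are easy to check by hand. The functions themselves are arbitrary examples.

```python
from scipy import integrate, optimize
from scipy.interpolate import CubicSpline

# Optimization: find the x that minimizes f(x) = (x - 2)^2 + 1 (true minimum at x = 2).
result = optimize.minimize_scalar(lambda x: (x - 2) ** 2 + 1)
print(f"minimum at x = {result.x:.4f}, f(x) = {result.fun:.4f}")

# Integration: integrate x^2 from 0 to 3 (exact answer: 9).
area, abs_error = integrate.quad(lambda x: x ** 2, 0, 3)
print(f"integral = {area:.6f} (estimated error {abs_error:.1e})")

# Interpolation: fit a cubic spline through samples of x^3 and evaluate between them.
x_points = [0, 1, 2, 3]
y_points = [0, 1, 8, 27]
spline = CubicSpline(x_points, y_points)
print(f"spline(1.5) = {float(spline(1.5)):.4f}")
```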

NumPy

NumPy is one of the most important Python libraries for Data Science.

Most of its fame comes from its n-dimensional array object, ndarray. SciPy builds on NumPy, but NumPy also works on its own.

Its distinguishing feature is fast computation on whole arrays, which is the main reason it matters so much in Data Science. However, the next library is just as important.
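A small sketch of what efficient array computation means in practice: the same total revenue computed with a Python loop and with one vectorized NumPy call. The data is randomly generated and the function names are hypothetical.

```python
import time

import numpy as np

# Synthetic data: 100,000 random prices and quantities (seeded for reproducibility).
rng = np.random.default_rng(seed=0)
prices = rng.uniform(10, 100, size=100_000)
quantities = rng.integers(1, 10, size=100_000)


def revenue_loop(p, q):
    # Pure-Python loop: each multiply and add is interpreted one at a time.
    total = 0.0
    for price, qty in zip(p, q):
        total += price * qty
    return total


def revenue_vectorized(p, q):
    # One NumPy call: the element-wise multiply-and-sum runs in compiled code.
    return float(np.dot(p, q))


start = time.perf_counter()
loop_total = revenue_loop(prices, quantities)
loop_seconds = time.perf_counter() - start

start = time.perf_counter()
vector_total = revenue_vectorized(prices, quantities)
vector_seconds = time.perf_counter() - start

print(f"loop:       {loop_total:,.2f} in {loop_seconds:.4f}s")
print(f"vectorized: {vector_total:,.2f} in {vector_seconds:.4f}s")

# Broadcasting: a scalar is applied to every element without an explicit loop.
discounted = prices * 0.9
```

Both functions return the same total (up to floating-point rounding). The vectorized version is typically much faster, though the exact speedup depends on your hardware.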

Pandas

Pandas offers easy-to-use data structures such as the DataFrame, along with data analysis tools built around them.

What distinguishes Pandas from other data manipulation tools is the DataFrame, which provides extensive capabilities for data manipulation and analysis.
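A typical first look at a dataset with Pandas might resemble the sketch below. The DataFrame is a small made-up example; in a real project it would usually come from `pd.read_csv` or from the scraper you built in Step 1.

```python
import pandas as pd

# Small hypothetical dataset with one missing price.
df = pd.DataFrame({
    "city": ["Berlin", "Paris", "Berlin", "Madrid", "Paris", "Berlin"],
    "price": [120.0, 150.0, None, 90.0, 160.0, 110.0],
    "rooms": [2, 3, 2, 1, 3, 2],
})

print(df.head())                  # first rows
df.info()                         # column types and non-null counts
print(df.describe())              # summary statistics for numeric columns
print(df["city"].value_counts())  # frequency of each category
print(df.isna().sum())            # missing values per column
```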

Step 3: Data Manipulation

Image by Author

Data Manipulation is the process of shaping your data to get it ready for the next stages.

Pandas

Pandas offers data structures like the DataFrame, which make data much easier to work with. Pandas also defines a large number of built-in functions, so a task that might take 100 lines of hand-written code can often be done with a couple of function calls.

It also has data visualization and data exploration functions, which makes it more general-purpose than many other Python libraries.
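To make the "fewer lines of code" point concrete, here is a hedged sketch that fills missing values, adds a derived column, joins two tables, and aggregates by group, with each step being a single Pandas call. The tables and column names are hypothetical.

```python
import pandas as pd

# Hypothetical sales records (one unit count is missing) and a store lookup table.
sales = pd.DataFrame({
    "store": ["A", "B", "A", "C", "B", "A"],
    "units": [10, None, 7, 3, 5, 8],
    "unit_price": [2.5, 3.0, 2.5, 4.0, 3.0, 2.5],
})
stores = pd.DataFrame({"store": ["A", "B", "C"], "region": ["North", "South", "North"]})

# Clean: treat the missing unit count as 0, then add a derived revenue column.
sales["units"] = sales["units"].fillna(0)
sales["revenue"] = sales["units"] * sales["unit_price"]

# Combine and summarize: a left join followed by a grouped sum, sorted by revenue.
summary = (
    sales.merge(stores, on="store", how="left")
    .groupby("region", as_index=False)["revenue"]
    .sum()
    .sort_values("revenue", ascending=False)
)
print(summary)
```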

Step 4: Data Visualization

Data Visualization enables you to tell the whole story on one page. In this section, we will cover three libraries for doing that.

Matplotlib

If you have visualized data with Python, you probably know Matplotlib. It is a Python library for creating a wide range of graphics: static, interactive, or even animated.

It is more customizable than most other data visualization libraries. You can control pretty much any element of a plot with it.
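The sketch below illustrates that level of control: line styles, labels, limits, grid, legend, and even individual axis borders are set explicitly. The data is synthetic, and the non-interactive `Agg` backend is assumed so the figure renders without a display.

```python
import io

import matplotlib

matplotlib.use("Agg")  # non-interactive backend: renders without opening a window
import matplotlib.pyplot as plt
import numpy as np

x = np.linspace(0, 10, 200)

fig, ax = plt.subplots(figsize=(6, 4))
ax.plot(x, np.sin(x), color="tab:blue", linestyle="--", linewidth=2, label="sin(x)")
ax.plot(x, np.cos(x), color="tab:orange", label="cos(x)")

# Nearly every element of the plot can be set explicitly.
ax.set_title("Customizing a Matplotlib plot")
ax.set_xlabel("x")
ax.set_ylabel("value")
ax.set_xlim(0, 10)
ax.grid(True, alpha=0.3)
ax.legend(loc="upper right")
ax.spines["top"].set_visible(False)    # hide the top border
ax.spines["right"].set_visible(False)  # hide the right border
fig.tight_layout()

# Render to an in-memory PNG; fig.savefig("trig.png") would write a file instead.
buffer = io.BytesIO()
fig.savefig(buffer, format="png", dpi=150)
```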

Seaborn

Seaborn is built on top of Matplotlib and offers a different style for the same kinds of graphs, such as bar plots.

It can be simpler than Matplotlib for creating complex visualizations, and it integrates closely with Pandas DataFrames.
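For comparison, a grouped bar plot in Seaborn can take a single call, with columns referenced by name straight from a DataFrame. The sales data is invented for illustration, and the sketch assumes Seaborn (and therefore Matplotlib) is installed.

```python
import matplotlib

matplotlib.use("Agg")  # render without a display
import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sns

# Hypothetical daily sales for two shops.
df = pd.DataFrame({
    "day": ["Mon", "Mon", "Tue", "Tue", "Wed", "Wed"],
    "shop": ["A", "B", "A", "B", "A", "B"],
    "sales": [12, 15, 9, 14, 18, 11],
})

# Columns are referenced by name; hue splits each day's bar by shop.
ax = sns.barplot(data=df, x="day", y="sales", hue="shop")
ax.set_title("Sales per day by shop")
plt.tight_layout()
```

Seaborn fills in the axis labels from the column names automatically, which is part of what makes it quicker than raw Matplotlib for this kind of chart.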

Plotly

Plotly is more interactive than the others. You can even create dashboards with it, and you can connect your code to Plotly and view your graphs on the Plotly website.

If you want to know more, here are more Python data visualization libraries.

Step 5: Model Building

Model Building is the step where you finally see the results of your work by making predictions. Once again, there are many libraries to choose from.

Scikit-learn

Scikit-learn is the most famous Python library for machine learning. It offers simple yet efficient functions that let you build a model in a couple of seconds. Of course, you could write many of these functions yourself, but do you want to write 100 lines of code instead of 1?

Its standout feature is its comprehensive collection of algorithms in a single package, all sharing a consistent API.
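The sketch below trains and evaluates two quite different classifiers on scikit-learn's bundled Iris dataset. It is meant to show the shared `fit`/`predict` interface across algorithms, not a tuned model; the choice of models and split settings is arbitrary.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Iris ships with scikit-learn, so no download is needed.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# Two different algorithms, one shared interface: fit() then predict().
models = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "random_forest": RandomForestClassifier(n_estimators=100, random_state=42),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = accuracy_score(y_test, model.predict(X_test))
    print(f"{name}: test accuracy = {scores[name]:.2f}")
```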

TensorFlow

TensorFlow, created by Google, is better suited than Scikit-learn for complex models such as deep neural networks, and it offers high-level functions for building large-scale neural networks. Google also provides many free online resources that make learning TensorFlow easier.

Keras

Keras offers a high-level neural network API and can run on top of TensorFlow. Compared with using TensorFlow directly, it focuses more on enabling fast experimentation with deep neural networks.

Step 6: Model in Production

Now you have your model, but it is just a script. To make it useful to others, you should turn it into a web application or API that is ready for production.

Django

Django, one of the most famous Python web frameworks, lets you build the application around your model in a structured way. It is more complicated than Flask and FastAPI, but that is because it comes with many built-in features, such as an admin panel.

In Flask, for example, you have to build many of these things from scratch, but if you don’t know much about web frameworks, Flask is a good place to start.

Flask

Flask is a micro web framework for Python that makes it easier to build your own web app or API. It is more flexible than Django and better suited for smaller applications.
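A minimal sketch of serving a model with Flask follows. The `predict_price` function is a hypothetical stand-in for a real trained model, and the `/predict` route and the `area_m2` field are illustrative names.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)


def predict_price(area_m2: float) -> float:
    # Hypothetical stand-in for a trained model (e.g. a loaded scikit-learn estimator).
    return 50_000 + 2_000 * area_m2


@app.route("/predict", methods=["POST"])
def predict():
    # Parse the JSON body; fall back to an empty dict if it is missing or invalid.
    payload = request.get_json(silent=True) or {}
    if "area_m2" not in payload:
        return jsonify({"error": "missing 'area_m2'"}), 400
    return jsonify({"prediction": predict_price(float(payload["area_m2"]))})
```

Assuming the code is saved as `app.py`, `flask --app app run` (Flask 2.2 or newer) starts the development server, and a client can then POST JSON such as `{"area_m2": 10}` to `/predict`. For real traffic, you would run it behind a production WSGI server such as Gunicorn.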

FastAPI

FastAPI is fast and easy to use, which has made it increasingly popular.

A unique feature of FastAPI is its automatic generation of documentation and its built-in validation using Python type hints.

If you want to know more, here are the top 18 Python libraries.

Bonus Step: Cloud Systems

At this stage, you have everything, but only in your own environment. To share your model with the world and test it further, your web application or API needs to run on a server.

Heroku

Heroku is a cloud platform as a service (PaaS) that supports several programming languages.

It's more beginner-friendly than AWS and offers a simpler deployment process for web applications. If you are a total beginner, it might be a better fit for you, as is PythonAnywhere.

PythonAnywhere

PythonAnywhere, as its name suggests, is an online development environment and web hosting service built around the Python programming language.

It's more focused on Python-specific projects than the other tools. If you chose Flask in Step 6, you can upload your app to PythonAnywhere, which also offers a free plan.

AWS (Amazon Web Services)

AWS offers many different options for nearly every feature on the platform. Even if you just need a database, there are several services to choose from.

It is more complex and comprehensive than the other tools, and well suited for large-scale operations.

For example, if you chose Django in the previous step and took the time to build a large-scale web application, AWS would be a natural next choice.

Final Thoughts

In this article, we explored major Python libraries used in Data Science. When working on your Data Science projects, remember that there isn't just one ultimate method. I hope this article has introduced you to different tools.

Nate Rosidi is a data scientist and works in product strategy. He's also an adjunct professor teaching analytics, and is the founder of StrataScratch, a platform helping data scientists prepare for their interviews with real interview questions from top companies. Nate writes on the latest trends in the career market, gives interview advice, shares data science projects, and covers everything SQL.