Basics of GPU Computing for Data Scientists

With the rise of neural networks in data science, the demand for computationally powerful machines has led to GPUs. Learn how to get started with GPUs and which algorithms can leverage them.

By Taposh Dutta-Roy.

GPUs have become the new core of image analytics, and more and more data scientists are looking into using them for image processing. In this article I review the basics of GPUs that a data scientist needs and describe a framework from the literature for judging whether an algorithm is suitable for a GPU.

Let's start with the question: what is a GPU?

Graphics processing units (GPUs) were originally created for rendering graphics. However, due to their high performance and low cost, they have become the new standard for image processing. Their application areas include image restoration, segmentation (labeling), de-noising, filtering, interpolation and reconstruction. A web search for "what is a GPU" returns: “A graphics processing unit (GPU) is a computer chip that performs rapid mathematical calculations, primarily for the purpose of rendering images.”

What is GPU Computing?

Nvidia’s blog defines GPU computing as the use of a graphics processing unit (GPU) together with a CPU to accelerate scientific, analytics, engineering, consumer, and enterprise applications. It also says that if the CPU is the brain, then the GPU is the soul of the computer.

GPUs used for general-purpose computation have a highly data-parallel architecture. They are composed of a number of cores, and each core has a number of functional units, such as arithmetic and logic units (ALUs). One or more of these functional units are used to process each thread of execution. A group of functional units that serves one thread is called a “thread processor”. All thread processors in a GPU core execute the same instructions, because they share the same control unit. This means that a GPU can apply the same instruction to each pixel of an image in parallel. GPU architectures are complex and differ from manufacturer to manufacturer. The two main players in the GPU market are Nvidia and AMD. Nvidia calls thread processors CUDA (Compute Unified Device Architecture) cores; AMD calls them stream processors (SPs).
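To make the "same instruction on every pixel" idea concrete, here is a minimal sketch in plain Python. It is not GPU code: `threshold_kernel` and `launch` are hypothetical names I made up for illustration, and the loop only stands in for what the hardware would do in parallel.

```python
# Illustrative only: plain Python standing in for a GPU kernel launch.
# `threshold_kernel` and `launch` are hypothetical names, not a real GPU API.

def threshold_kernel(thread_id, src, dst, cutoff):
    """Work done by ONE thread: binarize the single pixel it owns."""
    dst[thread_id] = 255 if src[thread_id] >= cutoff else 0


def launch(kernel, num_threads, *args):
    """Run the same kernel once per thread id.

    A real GPU would run many of these at the same time; this loop runs
    them one after another, which gives the same result because no thread
    reads or writes another thread's pixel.
    """
    for thread_id in range(num_threads):
        kernel(thread_id, *args)


# A 2x4 grayscale image stored row by row as a flat list.
image = [12, 200, 130, 90,
         255, 0, 128, 64]
result = [0] * len(image)

launch(threshold_kernel, len(image), image, result, 128)
print(result)  # [0, 255, 255, 0, 255, 0, 255, 0]
```

The point of the sketch is the shape of the program: every thread runs identical code and differs only in the index of the pixel it works on.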

The main difference between a thread processor and a CPU core is that CPU cores can execute different instructions on different data in parallel, because each CPU core has its own control unit. Researchers have defined a core as a processing unit with an independent flow of control. Based on this definition, a group of thread processors that share the same control unit is referred to as a core. GPUs are built to fit many thread processors on a chip, while CPUs devote more of the chip to advanced control units and large caches.
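One way to see why the shared control unit matters is a toy cost model for a branch. The sketch below is not a hardware simulator; the function names and step counts are assumptions chosen only to show the effect. It compares a group that shares one control unit with workers that each have their own.

```python
# Toy cost model, not a hardware simulator. All names and step counts
# below are assumptions made for illustration.

COST_IF = 4    # assumed steps needed by the "if" side of a branch
COST_ELSE = 6  # assumed steps needed by the "else" side


def shared_control_steps(takes_if):
    """Steps for a group of thread processors sharing one control unit.

    The group issues one instruction stream, so if its threads disagree
    on the branch, both sides are issued one after the other while the
    threads on the other side sit idle.
    """
    steps = 0
    if any(takes_if):
        steps += COST_IF
    if not all(takes_if):
        steps += COST_ELSE
    return steps


def independent_control_steps(takes_if):
    """Steps when every worker has its own control unit (CPU-core style).

    Each worker follows only its own side, so the group finishes when the
    slowest worker does.
    """
    return max(COST_IF if t else COST_ELSE for t in takes_if)


uniform = [True, True, True, True]    # every thread takes the same side
mixed = [True, False, True, False]    # threads disagree on the branch

print(shared_control_steps(uniform), independent_control_steps(uniform))  # 4 4
print(shared_control_steps(mixed), independent_control_steps(mixed))      # 10 6
```

When all threads agree, the shared control unit costs nothing extra. When they disagree, the group pays for both sides of the branch. The model ignores that a CPU has far fewer cores than a GPU has thread processors, so treat it as intuition rather than a performance estimate.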

There are two main frameworks for GPU programming: OpenCL and CUDA. CUDA can be used only with Nvidia GPUs, whereas OpenCL is an open standard for parallel computing on different devices, including GPUs, CPUs and FPGAs. Several image processing libraries provide GPU implementations: OpenCV, ArrayFire, Nvidia Performance Primitives (NPP), CUVILIB, Intel Integrated Performance Primitives, OpenCL Integrated Performance Primitives and the Insight Toolkit (ITK). Of these, the larger ones are OpenCV (supports CUDA and OpenCL) and ITK (supports only OpenCL).

Algorithm suitability for GPU usage

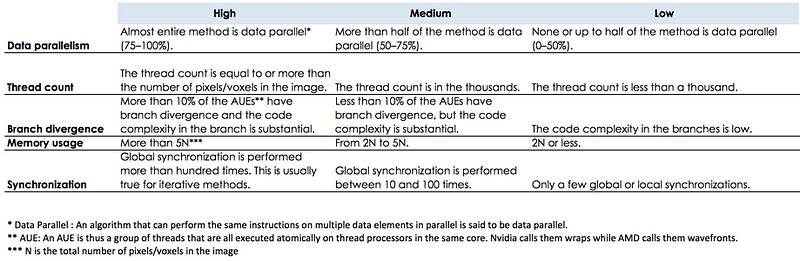

Erik Smistad et al. discuss five factors that determine how suitable an algorithm is for a GPU implementation: data parallelism, thread count, branch divergence, memory usage and synchronization. I have created this mapping table for easy reference using their framework.
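As a rough illustration of the first factor, data parallelism, the sketch below contrasts two small functions. The function names are hypothetical and the example is mine, not taken from the paper.

```python
# Illustrative sketch; function names are hypothetical.

def brighten(pixels, amount):
    """Data parallel: each output depends only on the input at the same index."""
    return [min(255, p + amount) for p in pixels]


def running_total(pixels):
    """Sequential as written: step i needs the result of step i - 1."""
    totals = []
    acc = 0
    for p in pixels:
        acc += p
        totals.append(acc)
    return totals


row = [10, 250, 100, 30]
print(brighten(row, 20))    # [30, 255, 120, 50]
print(running_total(row))   # [10, 260, 360, 390]
```

In `brighten`, every pixel could be handed to its own thread with no coordination. In `running_total`, each step waits on the previous one, so this loop cannot simply be split across threads. Parallel formulations of running sums do exist, but they need synchronization between threads, which is another of the five factors.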

As a data scientist, knowing these factors will help you judge whether a GPU suits your algorithm. Nvidia's CUDA is the more popular option, in part because many Apple laptops have shipped with Nvidia GPUs, but the alternatives are gaining steam as well. Let me know if you have questions or comments.
