Improving Nudity Detection and NSFW Image Recognition

This post discusses improvements made to a tricky machine learning classification problem: is an image nude and/or NSFW, or not?

By James Sutton, Algorithmia.

Last June we introduced isitnude.com, a demo for detecting nudity in images based on our Nudity Detection algorithm. However, we wouldn’t be engineers if we didn’t think we could improve the NSFW image recognition accuracy.

The challenge, of course, is how do you do that without a labeled dataset of tens of thousands of nude images? To source and manually label thousands of nude images would have taken months, and likely years of post-traumatic therapy sessions. Without this kind of labeled dataset, we had no way of utilizing computer vision techniques such as CNNs (Convolutional Neural Networks) to construct an algorithm that could detect nudity in images.

The Starting Point for NSFW Image Recognition

The original algorithm used nude.py at its core to identify and locate skin in an image. It looked for clues in an image, like the size and percentage of skin in the image, to classify it as either nude or non-nude.

We built upon this by combining nude.py with OpenCV’s nose detection and face detection algorithms. By doing this, we could draw bounding polygons for the face and nose in an image, and then get the skin tone range for each polygon. We could then compare the skin tone inside each polygon to the skin found outside it.

As a result, if we found a large area of skin in the image that matched the skin tone of the person’s face/nose, we could return that there was nudity in the image with high confidence.

Additionally, this method allowed us to limit false positives, and check for nudity when there were multiple people in an image. The downside to this method was that we could only detect nudity when a human face was present.
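The comparison described above can be sketched in a few lines. This is a simplified illustration, not the original implementation: it assumes the face bounding box has already been found (the original used OpenCV detectors for that), represents the image as plain rows of RGB tuples, and uses a hypothetical helper `matching_skin_fraction` with an arbitrary `tolerance` parameter.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def tone_range(pixels: List[Pixel]) -> Tuple[Pixel, Pixel]:
    """Return the per-channel (min, max) colour of a list of pixels."""
    lows = tuple(min(p[c] for p in pixels) for c in range(3))
    highs = tuple(max(p[c] for p in pixels) for c in range(3))
    return lows, highs


def matching_skin_fraction(image, face_box, tolerance=10):
    """Fraction of pixels outside face_box that fall within the face's tone range.

    image: list of rows, each row a list of (R, G, B) tuples.
    face_box: (left, top, right, bottom), with right and bottom exclusive.
    tolerance: assumed slack added to the tone range on every channel.
    """
    left, top, right, bottom = face_box
    inside, outside = [], []
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            if left <= x < right and top <= y < bottom:
                inside.append(pixel)
            else:
                outside.append(pixel)
    if not inside or not outside:
        return 0.0
    lows, highs = tone_range(inside)
    matches = sum(
        all(lows[c] - tolerance <= p[c] <= highs[c] + tolerance for c in range(3))
        for p in outside
    )
    return matches / len(outside)


# Toy 4x2 image: the left half is the "face", the right half mixes
# face-toned pixels with unrelated blue ones.
SKIN = (200, 150, 120)
BLUE = (0, 0, 255)
toy_image = [
    [SKIN, SKIN, (205, 155, 125), BLUE],
    [SKIN, SKIN, (198, 148, 118), BLUE],
]
fraction = matching_skin_fraction(toy_image, face_box=(0, 0, 2, 2))
```

A large fraction would suggest that much of the image outside the face shares the face's skin tone. Where to set the cut-off is a tuning decision the post does not specify.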

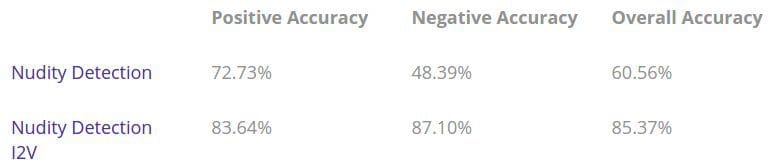

All told, our Nudity Detection algorithm was ~60% accurate using the above method.

Improving Nudity Detection In Images

The first step in improving our ability to detect nudity in images was to find a pre-trained model that we could work with. After some digging, one of our algorithm developers found the Illustration2Vec algorithm. It’s trained on anime to extract feature vectors from illustrations. The creators of Illustration2Vec scraped 1.2M+ anime images and their associated metadata, creating four categories of tags: general, copyright, character, and ratings.

This was a good starting point. We then created the Illustration Tagger microservice on Algorithmia, our implementation of the Illustration2Vec algorithm.

With the microservice running, we could simply call the API endpoint, and pass it an image. Below we pass an image of Justin Trudeau, Canada’s prime minister, to Illustration Tagger like so:

{
  "image": "https://upload.wikimedia.org/wikipedia/commons/9/9a/Trudeaujpg.jpg"
}

The microservice returns the following JSON:

{
  "rating": [
    {"safe": 0.9863441586494446},
    {"questionable": 0.011578541249036789},
    {"explicit": 0.0006071273819543421}
  ],
  "character": [],
  "copyright": [{"real life": 0.4488498270511627}],
  "general": [
    {"1boy": 0.9083328247070312},
    {"solo": 0.8707828521728516},
    {"male": 0.6287103891372681},
    {"photo": 0.45845481753349304},
    {"black hair": 0.2932664752006531}
  ]
}
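Each category in this response is a list of single-key objects, so a little reshaping makes it easier to work with. The following is a minimal sketch that operates on the JSON shown above. `flatten` and `top_rating` are hypothetical helper names for illustration, not part of the Illustration Tagger API.

```python
import json


def flatten(entries):
    """Merge a list of single-key dicts, e.g. [{"safe": 0.98}, ...], into one dict."""
    merged = {}
    for entry in entries:
        merged.update(entry)
    return merged


def top_rating(response):
    """Return the (label, score) pair with the highest score in the rating list."""
    ratings = flatten(response["rating"])
    label = max(ratings, key=ratings.get)
    return label, ratings[label]


# The Illustration Tagger response for the Trudeau image, as shown above.
sample = json.loads("""
{
  "rating": [
    {"safe": 0.9863441586494446},
    {"questionable": 0.011578541249036789},
    {"explicit": 0.0006071273819543421}
  ],
  "character": [],
  "copyright": [{"real life": 0.4488498270511627}],
  "general": [
    {"1boy": 0.9083328247070312},
    {"solo": 0.8707828521728516},
    {"male": 0.6287103891372681},
    {"photo": 0.45845481753349304},
    {"black hair": 0.2932664752006531}
  ]
}
""")

label, score = top_rating(sample)
print(label, score)
```

For this image the highest-scoring rating is "safe".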

Side-note: Illustration2Vec was developed to power a keyword-based search engine that helps novice illustrators find reference images to base new work on. The pre-trained Caffe model is available through Algorithmia under the MIT License.

In all, Illustration2Vec can classify 512 tags, across three different rating levels (“safe,” “questionable,” and “explicit”).

The important part here is that the ratings were used to … wait for it … label nudity and sexual implications in images. Why? Because anime tends to have lots of nudity and sexual themes.

Because of that, we were able to piggyback off their pre-trained model.

We used a subset of the 512 tags to create a new microservice called Nudity Detection I2V, which acts as a wrapper for Illustration Tagger, but only returns nude/not-nude with corresponding confidence values.

The same Trudeau image when passed to Nudity Detection I2V returns the following:

{
  "url": "https://upload.wikimedia.org/wikipedia/commons/9/9a/Trudeaujpg.jpg",
  "confidence": 0.9829585656606923,
  "nude": false
}

For comparison, here’s the output for the famous Lenna photo from Nudity Detection, Nudity Detection I2V, and Illustration Tagger.

Nudity Detection

{
  "nude": "true",
  "confidence": 1
}

Nudity Detection I2V

{
  "url": "http://www.log85.com/tmp/doc/img/perfectas/lenna/lenna1.jpg",
  "confidence": 1,
  "nude": true
}

Illustration Tagger

{
  "character": [],
  "copyright": [{"original": 0.24073825776577}],
  "general": [
    {"1girl": 0.7676181197166442},
    {"nude": 0.7233675718307494},
    {"photo": 0.5793498158454897},
    {"solo": 0.5685935020446777},
    {"breasts": 0.38033962249755865},
    {"long hair": 0.24592463672161105}
  ],
  "rating": [
    {"questionable": 0.7255685329437256},
    {"safe": 0.23983716964721682},
    {"explicit": 0.032572492957115166}
  ]
}

For every image analyzed using Nudity Detection I2V, we check to see if any of these ten tags are returned: “explicit”, “questionable”, “safe”, “nude”, “pussy”, “breasts”, “penis”, “nipples”, “puffy nipples”, or “no humans”.
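One way to picture this step is as turning each Illustration Tagger response into a fixed-length list of scores, one per tag of interest. The sketch below is an illustration under stated assumptions rather than the service's actual code: `extract_features` is a hypothetical helper, and scoring a tag that is absent from the response as 0.0 is an assumption.

```python
# Tags that Nudity Detection I2V looks for, as listed in the text.
NUDITY_TAGS = [
    "explicit", "questionable", "safe", "nude", "pussy",
    "breasts", "penis", "nipples", "puffy nipples", "no humans",
]


def extract_features(response):
    """Return one score per entry in NUDITY_TAGS, in the same order.

    Tags missing from the response are scored 0.0 (an assumption).
    """
    scores = {}
    for category in ("rating", "general"):
        for entry in response.get(category, []):
            scores.update(entry)
    return [scores.get(tag, 0.0) for tag in NUDITY_TAGS]


# The Illustration Tagger output for the Lenna photo, as shown earlier.
lenna = {
    "character": [],
    "copyright": [{"original": 0.24073825776577}],
    "general": [
        {"1girl": 0.7676181197166442},
        {"nude": 0.7233675718307494},
        {"photo": 0.5793498158454897},
        {"solo": 0.5685935020446777},
        {"breasts": 0.38033962249755865},
        {"long hair": 0.24592463672161105},
    ],
    "rating": [
        {"questionable": 0.7255685329437256},
        {"safe": 0.23983716964721682},
        {"explicit": 0.032572492957115166},
    ],
}

features = extract_features(lenna)
```

Unrelated tags such as "1girl" or "long hair" are simply ignored, so every image yields a vector of the same length regardless of which tags the tagger returns.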

To capture nudity-related information from the above tags, we labelled a small dataset of about 100 images and pushed our data to Excel. Once there, we ran Excel’s Solver plugin to fit a weight to each tag, maximizing our new detector’s accuracy across our sample set.
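For readers who want to try a similar fit outside Excel, here is a rough sketch in plain Python. It does not reproduce Solver: it swaps in logistic regression trained by gradient descent as a stand-in technique. The three-tag feature set, the six samples, and their labels are all invented for illustration and are not the data used for the real detector.

```python
import math

# Toy subset of tags; the real detector uses more.
FEATURES = ["explicit", "nude", "safe"]

# Invented tag scores and labels (1 = nude, 0 = not nude), for illustration only.
SAMPLES = [
    [0.90, 0.80, 0.05],
    [0.70, 0.90, 0.10],
    [0.60, 0.70, 0.20],
    [0.05, 0.10, 0.90],
    [0.10, 0.05, 0.95],
    [0.02, 0.20, 0.80],
]
LABELS = [1, 1, 1, 0, 0, 0]


def predict(weights, bias, features):
    """Weighted sum of tag scores squashed to a probability in (0, 1)."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def fit(samples, labels, lr=0.5, epochs=500):
    """Fit one weight per tag with batch gradient descent on the logistic loss."""
    n_features = len(samples[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(samples, labels):
            error = predict(weights, bias, x) - y
            for i in range(n_features):
                grad_w[i] += error * x[i]
            grad_b += error
        weights = [w - lr * g / len(samples) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(samples)
    return weights, bias


def accuracy(weights, bias, samples, labels):
    """Share of samples whose thresholded prediction matches the label."""
    correct = sum(
        (predict(weights, bias, x) >= 0.5) == bool(y)
        for x, y in zip(samples, labels)
    )
    return correct / len(samples)


weights, bias = fit(SAMPLES, LABELS)
train_accuracy = accuracy(weights, bias, SAMPLES, LABELS)
```

On a real labelled set, the fitted weights would indicate how strongly each tag pushes an image toward the nude class, and accuracy should be measured on images held out from the fit.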

One interesting observation from the linear fit is that some tags (like breasts) correlate less strongly with nudity than others (like nipples). Logically this makes sense: an image tagged with breasts doesn’t necessarily show nudity, but if nipples are visible, it almost certainly does.

By using this method, we were able to increase the accuracy rate from ~60% to ~85%.

Conclusion

Constructing a nudity detection algorithm from scratch is tough. Building a large unfiltered nudity dataset for CNN training is expensive and potentially damaging to one’s mental health. Our solution was to find a pre-trained model that “almost” fit our needs, and then fine-tune the output to become an excellent nudity detector.

As you can see from the above results, we’ve definitely succeeded in improving our accuracy. Don’t just take our word for it: try out Nudity Detection I2V.

In a future post, we’ll demonstrate how to use an ensemble to combine the two methods for even greater accuracy.

Bio: James Sutton is a software engineer at Algorithmia.

Original. Reposted with permission.
