Top Data Scientist Claudia Perlich on Biggest Issues in Data Science

Find out what top data scientist Claudia Perlich believes are (and are not) the biggest issues in data science today, and why data scientists spending 80% of their time on data preparation is not a problem.

By Claudia Perlich, Dstillery.

First off, let me state what I think is NOT the problem: the fact that data scientists spend 80% of their time on data preparation. That is their JOB! If you are not good at data preparation, you are NOT a good data scientist. It is not a "janitor problem," as Steve Lohr provocatively framed it. The validity of any analysis rests almost entirely on the preparation. The algorithm you end up using is close to irrelevant. Complaining about data preparation is like being a farmer who complains about having to do anything but harvest, and wants somebody else to deal with the pesky watering, fertilizing, weeding, etc.

That being said, data preparation can be made difficult by the process of raw data collection. Designing a system that collects data in a form that is useful and easily digestible for data science is a high art. Giving DS full transparency into exactly how the data flows into the system is another. This involves processes that consider sampling, data annotation, matching, etc. It does not include things like replacing missing values and excessive normalization. Creating an effective data environment for DS needs to involve DS and cannot be entirely owned by engineering. DS is often NOT able to specify such system requirements in sufficient detail to allow for a clean handover.

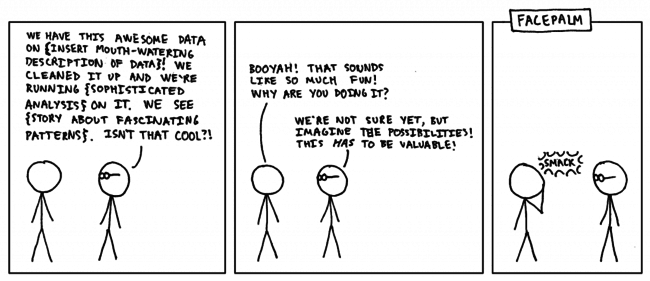

But in the bigger picture, there are more important things to consider. By far the biggest issue I see is data science solving irrelevant problems. This is a huge waste of time and energy. The reason is typically that whoever has the problem lacks the data science understanding to even express the issue, and data scientists end up solving whatever they understood the problem might be, ultimately creating a solution that is not really helpful (and often far too complicated). A typical category is "underdefined" tasks: "Find actionable insights in this dataset!" Well, most data scientists do not know which actions can be taken. They also do not know which insights are trivial and which are interesting. So there is really no point sending them on a wild goose chase.

The "solving the wrong problem" issue is pervasive in part because data science is not sufficiently involved in the decision process (thanks to Meta for asking me to clarify). Now, not EVERY data scientist can or should be expected to shape the problem as well as the solution (back to the unicorn problem), but at least one data scientist on the team should. The bigger issue, however, is not a lack of ability or willingness on the data science side (although there are indeed plenty who just like to solve a cute problem, no matter how relevant), but often a corporate culture where analytics, IT, etc. are considered "execution" functions. Management decides what is needed and everybody else goes and does it.

On an individual level, and for a given (worthwhile) problem, I would blame a lack of data understanding, data intuition, and finally skepticism as the most limiting factors to efficiency. What makes these factors contribute to inefficiency is NOT that it takes longer to get to an answer (in fact, lacking all three typically leads to results much more quickly) but rather how long it takes to get to an (almost) right answer.

Dstillery is a data analytics company that uses machine learning and predictive modeling to provide intelligent solutions for brand marketing and other business challenges. Drawing from a unique 360-degree view of digital, physical and offline activity, we generate insights and predictions about the behaviors of individuals and discrete populations.

Original. Reposted with permission.
