Big Data Science: Expectation vs. Reality

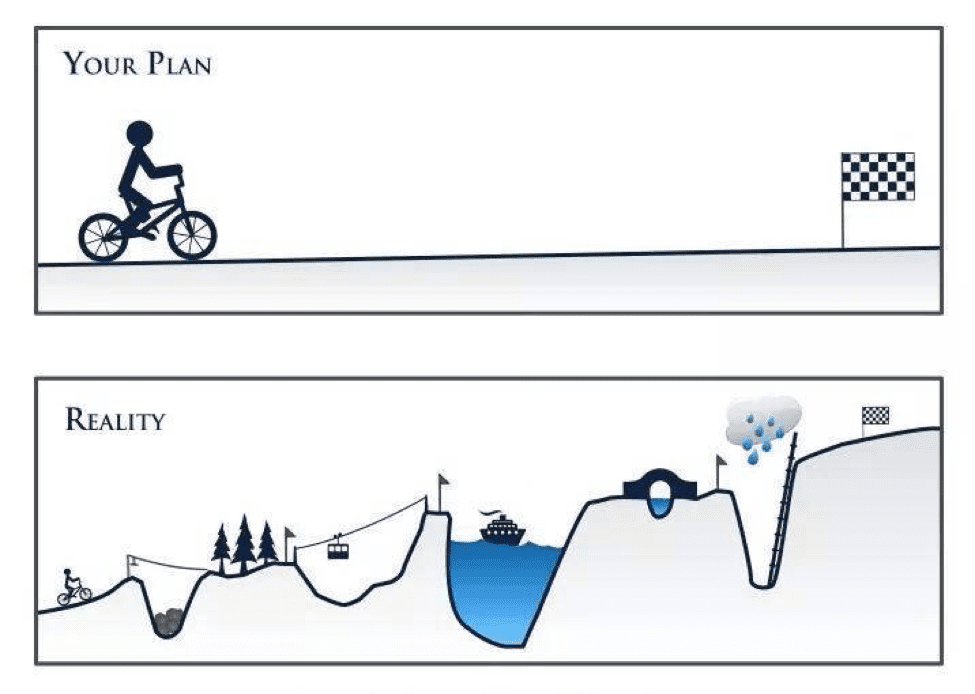

The path to success and happiness for a data science team working on a big data project is not always clear from the beginning. It depends on the maturity of the underlying platform, the team's cross-functional skills, and the DevOps process around its day-to-day operations.

By Stepan Pushkarev, CTO, Hydrosphere.io.

The past few years have been like a dream come true for those who work in analytics and big data. There is a new career path for platform engineers to learn Hadoop, Scala and Spark. Java and Python programmers have a chance to move to the Big Data world. There they find higher salaries and new challenges, and get to scale up to distributed systems. But recently I have started to hear complaints and dashed hopes from engineers who have spent time working there.

Their expectations are not always met. Big data engineers spend most of their time in ETL, preparing data sets for analysts, and coding models developed by data scientists into scripts. So, they have become cogs between data scientists and big data.

I see the same feedback from data scientists. Real big data projects differ from Kaggle competitions. Even minor tasks require Python and Scala skills as well as multiple interactions and design sessions with big data engineers and operation teams.

DevOps engineers rarely get beyond using the basic tools and running through tutorials. They grow weary of having nothing more to do than deploy the next Hadoop cluster through the Cloudera wizard and semi-automated Ansible scripts.

In this environment, the big data engineer will never get to work on scalability and storage optimization. Data scientists will never invent a new algorithm. And the DevOps engineer will never put the entire platform on autopilot. The risk for the team is that its ideas might never make it beyond proof of concept.

How do we change this situation? There are 3 key points:

- Tools evolution: the Apache Spark/Hadoop ecosystem is great, but it is not yet stable or user-friendly enough to just run and forget. Engineers and data scientists should contribute to existing open source projects and create new tools to fill the gaps in day-to-day operations.

- Education and cross skills: when data scientists write code, they need to think not just about abstractions but also about what is practically possible and reasonable. For example, they need to think about how long their query will run and whether the data they extract will fit into the storage mechanism they are using.

- Improve the process: DevOps might be a solution. Here DevOps does not just mean writing Ansible scripts and installing Jenkins. We need DevOps to reduce handoffs and to invent new tools that give everyone self-service, so that each person can be as productive as possible.
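The kind of practical check mentioned in the second point (how long a query will run, and whether the extract will fit) can be done on the back of an envelope before any code hits the cluster. The sketch below is only an illustration: the function name `estimate_extract` and every number in it are hypothetical, and real scan throughput depends heavily on the cluster, file format and query plan.

```python
def estimate_extract(rows, bytes_per_row, scan_bytes_per_sec, storage_free_bytes):
    """Rough size and scan-time estimate for a query extract.

    All inputs are assumptions the data scientist supplies; this is
    simple arithmetic, not a query planner.
    """
    size_bytes = rows * bytes_per_row               # total extract size
    scan_seconds = size_bytes / scan_bytes_per_sec  # time to read it once
    fits = size_bytes <= storage_free_bytes         # will it fit in the target store?
    return size_bytes, scan_seconds, fits


# Hypothetical numbers, chosen only for illustration.
size_bytes, scan_seconds, fits = estimate_extract(
    rows=2_000_000_000,               # 2 billion rows
    bytes_per_row=200,                # average row width
    scan_bytes_per_sec=500 * 10**6,   # assumed aggregate scan throughput, 500 MB/s
    storage_free_bytes=250 * 10**9,   # 250 GB free in the target store
)

print(f"extract size: {size_bytes / 10**9:.0f} GB")
print(f"scan time:    {scan_seconds / 60:.1f} min")
print(f"fits:         {fits}")
```

With these made-up inputs the extract is larger than the free space, which is exactly the kind of problem that is cheaper to discover before the job is submitted than after.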

The Continuous Analytics environment for data scientists, big data engineers and business users

With Continuous Analytics and self-service, the data scientist owns the data project from the original idea all the way to production. The more autonomous he or she is, the more time he or she can dedicate to producing actual insights.

The data scientist starts from the original business idea and works through data exploration and data preparation. Then he or she moves to model development. Next comes deployment and validation of the environment. Finally there is the push to production. Given the proper tools, he or she could run this complete iteration multiple times a day without relying on the Big Data engineer.

The Big Data engineer, in turn, works on scalability and storage optimization, developing and contributing to tools like Spark, enabling streaming architectures and so on. He or she provides an API and a DSL for the data scientist.

Product engineers should be given the analytics models developed by data scientists as neatly bundled services. Then they can build smart applications that let business users work with those models in self-service.
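As a rough illustration of what a "neatly bundled service" could look like from the product engineer's side, here is a minimal sketch using only the Python standard library. Everything in it is hypothetical: the `ChurnModel` class, its weights, the feature names and the `handle_request` function are stand-ins, and a real deployment would sit behind an HTTP server or a model-serving platform.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class ChurnModel:
    """Hypothetical model: a linear score with fixed weights."""
    weights: dict
    bias: float = 0.0

    def predict(self, features: dict) -> float:
        # Missing features are treated as 0.0 in this sketch.
        return self.bias + sum(
            weight * features.get(name, 0.0)
            for name, weight in self.weights.items()
        )


def handle_request(model: ChurnModel, request_body: str) -> str:
    """Service boundary: JSON in, JSON out.

    The caller never sees how the model works internally.
    """
    features = json.loads(request_body)
    return json.dumps({"score": model.predict(features)})


# The data scientist ships the model; the product engineer only calls the service.
model = ChurnModel(weights={"support_tickets": 0.5, "months_active": -0.25}, bias=1.0)
response = handle_request(model, '{"support_tickets": 4, "months_active": 2}')
print(response)
```

The point of the design is the boundary: the data scientist can retrain or replace the model behind `handle_request` without the application code changing, as long as the JSON contract stays the same.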

Working like this, no one is stuck waiting on the next person. Each person is working with their own abstractions.

This might sound like a utopia. But I believe it could motivate big data teams to improve their existing platforms and processes, with the added benefit of making everyone happier.

Bio: Stepan Pushkarev is CTO of
