Automated Machine Learning: An Interview with Randy Olson, TPOT Lead Developer

Read an insightful interview with Randy Olson, Senior Data Scientist at University of Pennsylvania Institute for Biomedical Informatics, and lead developer of TPOT, an open source Python tool that intelligently automates the entire machine learning process.

Automated machine learning has become a topic of considerable interest over the past several months. A recent KDnuggets blog competition focused on this topic, and generated a handful of interesting ideas and projects. Of note, our readers were introduced to Auto-sklearn, an automated machine learning pipeline generator, via the competition, and learned more about the project in a follow-up interview with its developers.

Automated machine learning has become a topic of considerable interest over the past several months. A recent KDnuggets blog competition focused on this topic, and generated a handful of interesting ideas and projects. Of note, our readers were introduced to Auto-sklearn, an automated machine learning pipeline generator, via the competition, and learned more about the project in a follow-up interview with its developers.

Prior to that competition, however, KDnuggets readers were introduced to TPOT, "your data science assistant," an open source Python tool that intelligently automates the entire machine learning process.

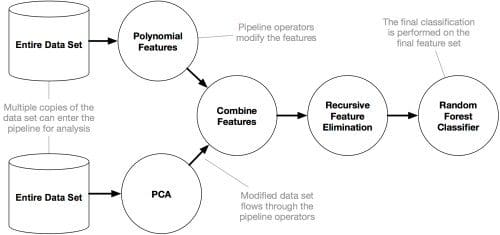

For scikit-learn-compatible datasets, TPOT can automatically optimize a series of feature preprocessors and machine learning models that maximize the dataset's cross-validation accuracy, and outputs the optimal model as Python code leveraging scikit-learn. The machine learning pipeline generation and optimization project is helmed by well-known and prolific machine learning and data science personality Randy Olson.

Randy is a Senior Data Scientist at the University of Pennsylvania Institute for Biomedical Informatics, where he works with Prof. Jason H. Moore (funded by NIH grant R01 AI117694). Randy is active on Twitter, and some of his other projects can be found on his GitHub. Of note, Randy has put together a really great Jupyter notebook collection of data analysis and machine learning projects, and also has a self-explanatory project called datacleaner which may be of interest to some.

Randy agreed to take some time out to discuss TPOT and automated machine learning with our readers.

Matthew Mayo: First off, thanks for taking some time out of your schedule to speak with us, Randy. You have previously shared an overview of your automated machine learning library, TPOT, with our readers, but what if we start by having you introduce yourself and provide a little information on your background.

Randy Olson: Sure! In short: I'm a Senior Data Scientist at the University of Pennsylvania's Institute for Biomedical Informatics, where I develop machine learning software for biomedical applications. As a hobby, I run a personal blog (randalolson.com/blog) where I apply data science to everyday problems to show people how data science can relate to almost any possible topic. I'm also an avid open science advocate, so all of my work can be found on GitHub (github.com/rhiever) if you ever want to learn and find out how my projects work.

TPOT is a collaboration project, correct? What about your co-conspirators; could you give us some info about them or point us in a direction to find out more?

Yep, that's right! Even though TPOT is practically my "software baby" at this point, there are plenty of people who have contributed their time and code to TPOT. To name a few:

Daniel Angell is a software engineering student at Drexel University who helped a ton with the TPOT refactor over Summer '16. Whenever TPOT exports directly to a scikit-learn Pipeline, you can thank Daniel.

Nathan Bartley is a computer science Master's student at the University of Chicago who was heavily involved in the early TPOT design phases. Nathan and I co-authored a research paper on TPOT that ended up winning the Best Paper award at GECCO 2016.

Weixuan Fu is a new programmer at the Institute for Biomedical Informatics. Even though he's new to the TPOT project, he's already made several major contributions, including placing time limits on TPOT pipeline evaluations. It turns out that placing a time limit on a function call can be pretty difficult when you need to support Mac, Linux, and Windows, but Weixuan figured it out.

Your TPOT post was informative and described the project quite well. It has been several months, however, and I know that you have been promoting and sharing the project, which now has over 1500 stars on Github and has been forked nearly 200 times. Is there anything additional of note you would like our readers to know about TPOT, or any developments that have occurred since your original post? Is there anything you would like share about future development plans?

TPOT has come a long way since the original post we shared about it on KDnuggets. Most of the changes were fine-tuning the optimization process "under the hood," but a few major changes are:

- TPOT supports regression problems with the TPOTRegressor class.

- TPOT now works directly with scikit-learn Pipeline objects, which means that it also exports to scikit-learn Pipelines. This makes TPOT's exported code much cleaner.

- TPOT explores more scikit-learn models and data preprocessors. We've been fine-tuning the models, preprocessors, and parameters with every release.

- TPOT allows you to set a time limit on the TPOT optimization process, both at the per-pipeline level (so TPOT doesn't spend hours evaluating a single pipeline) and at the TPOT optimization process level (so you know when the TPOT optimization process will end).

In the near future, we're primarily going to focus on making TPOT run faster, especially on large data sets. Currently, TPOT can take hours or even days to finish on large (50,000+ record) data sets, which can make it difficult to use for some users. We have a whole bag of tricks to speed up TPOT --- including parallelizing TPOT on multi-core computing systems --- so it's just a matter of time until we get those features rolled out.

Clearly TPOT could be used in a variety of domains and for numerous tasks, likely as many as we could imagine machine learning itself could be utilized for. I imagine that, given its development history, you do use it in your day job. Could you give us an example of how it has made your life easier?

Even though half of my job is machine learning software development, the other half of my job involves working with Penn physicians and drawing insight from the biomedical data sets they've collected. Every single time I approach a new data set --- and after I've finished the initial data cleaning and exploratory data analysis --- I run at least a dozen instances of TPOT on our computing cluster to see whether TPOT can discover a useful model of the data. While TPOT runs, I still explore a handful of simpler models by hand myself, but TPOT has saved me a ton of time when I need to delve into more complex models, which I rarely need to run by hand anymore.

One of the best examples I have is when we applied TPOT to a bladder cancer study that my boss, Prof. Jason H. Moore, had collaborated on years ago. We wanted to see whether we could replicate the findings in the study, so we used a custom version of TPOT to find the best model for us. After just a few hours of tinkering with the models, TPOT replicated the findings in the original study and found the same pipeline that took his collaborators weeks to figure out. As an added bonus, TPOT discovered a more complex pipeline that actually improved upon what his collaborators found by discovering a new interaction between two of the variables. If only they had TPOT back then, eh?

Where do you see automated machine learning going? Is the end game fully automated systems, with limited human interference, ushering in the decline of data scientists and machine learning experts? Or is it more likely that automation simply becomes another tool available to assist the machine learning scientist?

In the near future, I see automated machine learning (AutoML) taking over the machine learning model-building process: once a data set is in a (relatively) clean format, the AutoML system will be able to design and optimize a machine learning pipeline faster than 99% of the humans out there. Perhaps AutoML systems will be able to expand out to cover a larger portion of the data cleaning process, but many tasks --- such as being able to pose a problem as a machine learning problem in the first place --- will remain solely a human endeavor in the near future. However, technologists are infamously bad at predicting the future of technology, so perhaps I should decline to comment on the long-term of where AutoML can and will head.

One long-term trend in AutoML that I can confidently comment on, however, is that AutoML systems will become mainstream in the machine learning world, and it's highly likely that the AutoML systems of the future will be interactive. Instead of the user and AutoML system working independently, the user and AutoML system will work together: as the user tries out different pipelines by hand, the AutoML system will learn in real-time from the user's experience and adapt its optimization process. Instead of providing one recommendation at the end of the optimization process, the AutoML system will continually recommend the best pipelines it's discovered so far and allow the user to provide feedback on those pipelines --- feedback that is then incorporated into the AutoML optimization process. And so on. In essence, AutoML systems will become akin to "Data Science Assistants" that can combine the tremendous computing power of high-performance computing systems with the problem-solving ability of human designers.

As a follow-up to the previous question, do you see data scientists and others using machine learning becoming unemployed anytime soon? Or, if too drastic an idea, will the current hype surrounding data science be tempered by automation in the near future, and if so, to what degree?

I don't see the purpose of AutoML as replacing data scientists, just the same as intelligent code autocompletion tools aren't intended to replace computer programmers. Rather, to me the purpose of AutoML is to free data scientists from the burden of repetitive and time-consuming tasks (e.g., machine learning pipeline design and hyperparameter optimization) so they can better spend their time on tasks that are much more difficult to automate. For example, parsing a heterogeneous HTML file into a clean data set or translating a "human problem" into a "machine learning problem" are relatively simple tasks for experienced data scientists, yet are currently out of reach for AutoML systems. My motto: "Automate the boring stuff so we can focus on the interesting stuff."

Any final words on TPOT or automated machine learning?

AutoML is a very new field, and we're only just tapping into the potential of AutoML. Before we move too far along in this field, I believe that it's important to take a step back and ask: what do we (the users) want from AutoML systems? What would you expect from an AutoML system, dear reader?

On behalf of our readers I would like to thank you for your time, Randy.

Related: