An ode to the analytics grease monkeys

Analytics is not a one-time job. It needs to be automated, deployed, and improved to meet future business analytics requirements. Here an IBM expert discusses the development and deployment of analytical assets and the capabilities that managed deployment requires.

By Erick Brethenoux, IBM.

Analytics has value only when it is actionable.

Analytics provides a significant business (i.e., monetary) impact for organizations when analytical assets are deployed within their business processes, that is, when analytical assets are consumed by people or processes. One example is the deployment of analytics in business processes for smart buildings and elevators, as explained by IBM’s Harriet Green and KONE’s Larry Wash at InterConnect. Others include a call center operator making a recommendation in real time to a customer on the line (a recommendation promoted by an analytical propensity model), or a machine accepting or declining millions of credit-card transactions every minute (acceptance calculated in microseconds by an analytical fraud-detection model).

Embedding those models inside the appropriate business processes delivers that value; making sure that those models (which have supported concrete decisions) can be legally traced, monitored, and secured requires discipline and management capabilities. Deploying analytical assets within operational processes in a repeatable, manageable, secure, and traceable manner requires more than a set of APIs and a cloud service; a model that has been scored (executed) has not necessarily been managed.

Developing & deploying analytical assets: complementary cycles.

We already went through this phase of wild and creative programmable development in the mid-90s. Today, aside from a salutary democratization of analytics in general and predictive analytics in particular, and an unprecedented development of analytical talent, the analytical open-source movement is also producing a large number of analytical assets that will eventually have to be consumed. But current open-source real-time deployment techniques provide “dissemination means”, not “managed deployment means”.

To make an analogy: you do not win the Tour de France by building bikes, you win it by riding those bikes within organized teams.

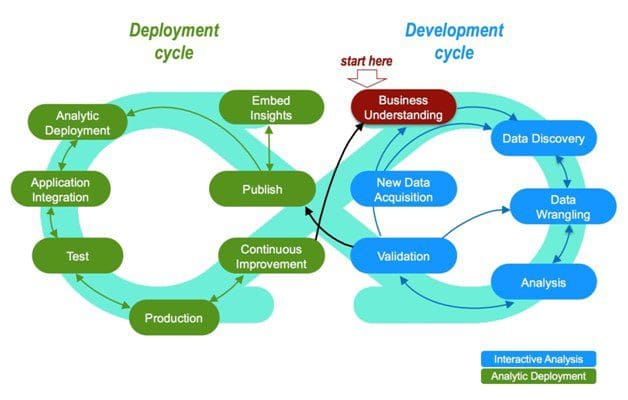

Over the last decade, as the “industrial” deployment of analytics has started to boost the competitiveness of early adopters, we have learned, often the hard way, that releasing a model to production is not enough; that “release moment” is followed by many steps that make the deployment cycle as important as the development cycle (see Figure 1).

Figure 1. Complementary and interlinked analytical development & deployment cycles

Capabilities for the deployment of analytical assets

The development cycle has been battle-tested for more than two decades. It originates from the open methodology CRISP-DM (Cross-Industry Standard Process for Data Mining), which was refined last year by IBM through an extended version called Analytics Solutions Unified Method for Data Mining/Predictive Analytics (also known as ASUM-DM). Many development efforts at IBM enable the development cycle through technologies including our Data Science Experience (DSX) and our upcoming, expansive ML-as-a-Service machine learning workflow capability.

The deployment cycle, on the other hand, has not been as formalized; but successful organizations have adopted various technologies to provide the foundation to manage analytical assets in a reliable, repeatable, scalable, and secure way. That foundation should provide:

- A “centralized” way to store and secure analytical assets, making it easier for analysts to collaborate and allowing them to reuse models or other assets as needed (it could be a secured community or a collaboration space)

- The possibility to house the repository in an organization’s existing database management system (regardless of its provisioning) and establish security credentials through integration with existing security providers, while accommodating models from any analytical tool

- Protocols to ensure adherence to all internal and external procedures and regulations, not just for compliance reasons but, as an increasing amount of data gets aggregated, to address potential privacy issues

- Automated versioning, fine-grained traceable model scoring, and change management capabilities (including champion/challenger features) to closely monitor and audit analytical asset lifecycles

- Bridges to eventually link both the development and deployment cycles to: external information life cycle management systems (to optimize a wider and contextual re-use of assets) and enhanced collaboration capabilities (to share experiences and information assets beyond the four walls of the organization).
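To make a few of these capabilities concrete, here is a minimal sketch of what automated versioning, traceable scoring, and champion/challenger promotion could look like. It is an illustration only, not a description of any IBM product: the `ModelRegistry` class, its methods, and the toy "fraud" scorers are all hypothetical names invented for this example, and the in-memory storage stands in for the secured database a real deployment would use.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Dict, List, Optional

Scorer = Callable[[dict], float]  # hypothetical: a model is just a scoring function


@dataclass
class ModelVersion:
    name: str
    version: int
    scorer: Scorer
    registered_at: str


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry; a real one would live in a secured DBMS."""
    versions: Dict[str, List[ModelVersion]] = field(default_factory=dict)
    champions: Dict[str, int] = field(default_factory=dict)
    audit_log: List[dict] = field(default_factory=list)

    @staticmethod
    def _now() -> str:
        return datetime.now(timezone.utc).isoformat()

    def _get(self, name: str, version: int) -> ModelVersion:
        for mv in self.versions.get(name, []):
            if mv.version == version:
                return mv
        raise KeyError(f"unknown model version: {name} v{version}")

    def register(self, name: str, scorer: Scorer) -> ModelVersion:
        # Automated versioning: each registration gets the next version number.
        history = self.versions.setdefault(name, [])
        mv = ModelVersion(name, len(history) + 1, scorer, self._now())
        history.append(mv)
        # The first registered version becomes the champion by default.
        self.champions.setdefault(name, mv.version)
        self.audit_log.append({"event": "register", "model": name,
                               "version": mv.version, "at": mv.registered_at})
        return mv

    def score(self, name: str, record: dict,
              version: Optional[int] = None) -> float:
        # Traceable scoring: every execution is logged with model, version,
        # input, and output, so a decision can be audited later.
        if version is None:
            version = self.champions[name]
        result = self._get(name, version).scorer(record)
        self.audit_log.append({"event": "score", "model": name,
                               "version": version, "input": dict(record),
                               "output": result, "at": self._now()})
        return result

    def promote(self, name: str, version: int) -> None:
        # Change management: promoting a challenger is itself an audited event.
        self._get(name, version)  # raises KeyError if the version is unknown
        previous = self.champions[name]
        self.champions[name] = version
        self.audit_log.append({"event": "promote", "model": name,
                               "from": previous, "to": version,
                               "at": self._now()})


registry = ModelRegistry()
registry.register("fraud", lambda r: 0.9 if r["amount"] > 1000 else 0.1)
registry.register("fraud", lambda r: min(r["amount"] / 5000, 1.0))

transaction = {"amount": 2000}
champion_score = registry.score("fraud", transaction)               # uses v1
challenger_score = registry.score("fraud", transaction, version=2)  # uses v2
registry.promote("fraud", 2)  # the challenger becomes the new champion
```

The point of the sketch is that the audit log, not the scoring function, is what makes the asset "managed": registrations, scores, and promotions all leave a record that can be reviewed after the fact.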

The development cycle has always been the glamorous part of the full analytical process, and developers, especially coders (now fully enabled through robust sets of development tools), have thrived on that first cycle. However, neglecting the deployment cycle has often prevented organizations from fully realizing the business value of their analytical efforts, while generating distrustful attitudes towards analytics within those organizations. In the deployment cycle, the “industrialization” of analytics is less glamorous, but it is where the rubber meets the road.

Deployment benefits

Formalizing the deployment cycle fosters collaboration, delivers more effective analytical results, controls how those results are deployed to people and systems to support decision-making across a broad range of operational areas, automates analytical processes for greater consistency, and makes it possible to audit the development, refinement, and usage of models and other assets throughout their lifecycle. Beyond all of that, it provides a bigger-picture advantage: more consistent organizational decisions.

Next analytic market leader

The current hype around the development cycle is overshadowing the upcoming need for organized analytics consumption, and that need, through the advent of external data and the tsunami of Internet of Things data and processes, will be overwhelming. IBM has the benefit of having foundational capabilities through its Collaboration & Deployment Services technology, but the next wave will require some significant updates around that foundation, including the extension (i.e., bridges) toward information life cycle management capabilities and wider collaboration capabilities (i.e., beyond the organization’s analytics community). We need a stronger focus and a formalized approach (with its accompanying technology) for the “deployment cycle”; let’s bring together the conversations on the analytical deployment theme. I predict that the next analytic market leader will dominate, and benefit strongly financially from, the managed deployment of analytical assets. Who will take that hill first?

Bio: Erick Brethenoux is Director of Analytics Strategy at IBM and Adjunct Professor at the Stuart School of Business, Illinois Institute of Technology.
