Deep Learning Made Easy with Deep Cognition

We usually do Deep Learning by programming and by learning new APIs, some harder than others and some really easy and expressive, like Keras. But how about a visual API to create and deploy Deep Learning solutions with the click of a button? This is the promise of Deep Cognition.

By Favio Vázquez, BBVA Data & Analytics

Deep Cognition, Inc.

This past month I had the luck to meet the founders of DeepCognition.ai. Through Deep Learning Studio, Deep Cognition lowers a significant barrier that keeps organizations from adopting Deep Learning and AI.

What is Deep Learning?

Before continuing to describe how Deep Cognition simplifies Deep Learning and AI, let's first define the main concepts of Deep Learning.

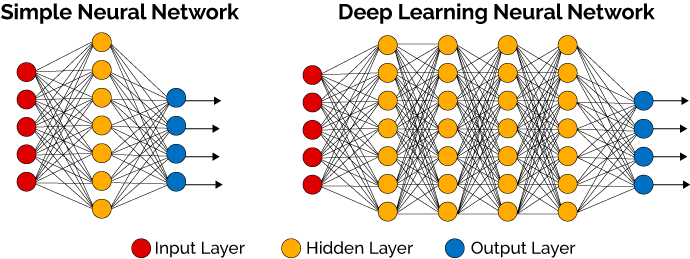

Deep learning is a specific subfield of machine learning, a new take on learning representations from data which puts an emphasis on learning successive “layers” of increasingly meaningful representations.

Deep learning allows computational models that are composed of multiple processing layers to learn representations of data with multiple levels of abstraction.

These layered representations are learned via models called “neural networks”, structured in literal layers stacked one after the other.

What we actually use in Deep Learning is an artificial neural network (ANN): a network inspired by biological neural networks that is used to estimate or approximate functions that can depend on a large number of inputs and are generally unknown.
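To make the idea of stacked layers concrete, here is a minimal sketch in plain Python. It is not how any real framework implements a network; the weights, the layer sizes, and the helper names (`dense`, `forward`, `LAYERS`) are all hypothetical and chosen by hand, whereas a real network would learn its weights from data.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(w . x + b) for each output unit."""
    outputs = []
    for row, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(activation(z))
    return outputs

# Hypothetical, hand-picked parameters (a trained network would learn these).
LAYERS = [
    # 2 inputs -> 3 hidden units
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1], relu),
    # 3 hidden units -> 1 output
    ([[0.7, -0.5, 0.2]], [0.05], sigmoid),
]

def forward(x):
    """Pass x through each layer in order, keeping every intermediate representation."""
    representations = [x]
    for weights, biases, activation in LAYERS:
        x = dense(x, weights, biases, activation)
        representations.append(x)
    return representations

reps = forward([1.0, 2.0])
for depth, rep in enumerate(reps):
    print(f"representation after layer {depth}: {rep}")
```

Each entry in `reps` is one "layer" of representation: the raw input, the hidden representation, and the final output. Deep networks simply stack many more of these steps.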

Although deep learning is a fairly old subfield of machine learning, it only rose to prominence in the early 2010s. In the few years since, it has achieved great things. François Chollet lists the following breakthroughs of Deep Learning:

- Near-human level image classification.

- Near-human level speech recognition.

- Near-human level handwriting transcription.

- Improved machine translation.

- Improved text-to-speech conversion.

- Digital assistants such as Google Now or Amazon Alexa.

- Near-human level autonomous driving.

- Improved ad targeting, as used by Google, Baidu, and Bing.

- Improved search results on the web.

- Answering natural language questions.

- Superhuman Go playing.

Why Deep Learning?

As François Chollet states in his book, until the late 2000s we were still missing a reliable way to train very deep neural networks. As a result, neural networks were still fairly shallow, leveraging only one or two layers of representations, and so they were not able to shine against more refined shallow methods such as SVMs or Random Forests.

But in this decade, thanks to several simple but important algorithmic improvements, advances in hardware (mostly GPUs), and the exponential generation and accumulation of data, it is now possible to run small deep learning models on your laptop (or in the cloud).

How do we do Deep Learning?

Let’s see how we normally do Deep Learning.

Even though this is not a new field, what is new are the ways we can interact with the computer to do Deep Learning. And one of the most important moments for this field was the creation of TensorFlow.

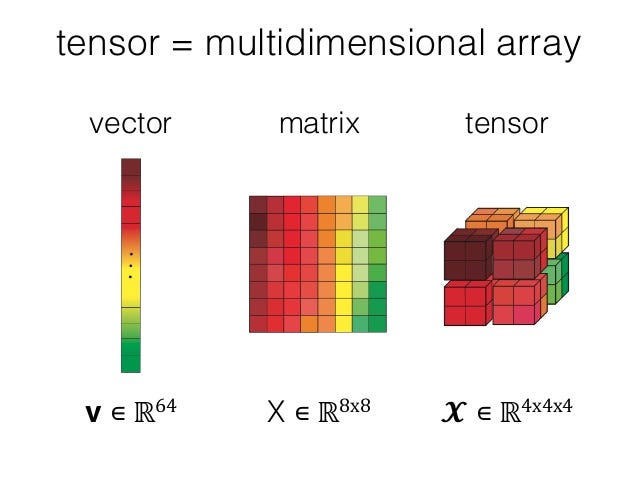

TensorFlow is an open source software library for numerical computation using data flow graphs. Nodes in the graph represent mathematical operations, while the graph edges represent the multidimensional data arrays (tensors) communicated between them.
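The data flow graph idea can be sketched in a few lines of plain Python. This is a toy illustration, not TensorFlow's implementation: the `Node` class and the `constant`, `matmul`, `add`, and `evaluate` helpers are hypothetical names invented for this example. Nodes hold operations, and the values flowing along the edges are small matrices stored as nested lists.

```python
class Node:
    """A graph node: an operation plus the nodes whose outputs feed into it."""
    def __init__(self, op, inputs=(), name=""):
        self.op = op
        self.inputs = tuple(inputs)
        self.name = name

def constant(value, name="const"):
    # A node with no inputs that always produces the same tensor.
    return Node(lambda: value, name=name)

def matmul(a, b):
    def op(x, y):
        return [[sum(p * q for p, q in zip(row, col)) for col in zip(*y)] for row in x]
    return Node(op, (a, b), "matmul")

def add(a, b):
    def op(x, y):
        return [[p + q for p, q in zip(r1, r2)] for r1, r2 in zip(x, y)]
    return Node(op, (a, b), "add")

def evaluate(node, cache=None):
    """Run the graph: compute each input first, then apply the node's operation."""
    cache = {} if cache is None else cache
    if node not in cache:
        args = [evaluate(parent, cache) for parent in node.inputs]
        cache[node] = node.op(*args)
    return cache[node]

# Building the graph computes nothing yet; it only records the structure.
a = constant([[1, 2], [3, 4]], name="a")
b = constant([[5, 6], [7, 8]], name="b")
total = add(matmul(a, b), a)

result = evaluate(total)
print(result)  # [[20, 24], [46, 54]]
```

Separating the description of the computation from its execution is what lets a library analyze and optimize the graph before running it.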

What are tensors?

Tensors, defined mathematically, are simply arrays of numbers, or functions, that transform according to certain rules under a change of coordinates.

But in this scope, a tensor is a generalization of vectors and matrices to potentially higher dimensions. Internally, TensorFlow represents tensors as n-dimensional arrays of base datatypes.

We need tensors because NumPy (the fundamental package for scientific computing with Python) provides n-dimensional arrays but not tensor objects that can live in a computation graph and run on hardware such as GPUs. We can convert tensors to NumPy arrays and vice versa. That is possible because both are defined as n-dimensional arrays.

TensorFlow combines computational algebra with compilation optimization techniques, making it easy to calculate many mathematical expressions that would otherwise be difficult to compute.
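Since tensors and NumPy arrays share the same n-dimensional array structure, the notions of rank, shape, and base datatype can be sketched with NumPy alone. The arrays below are stand-ins for tensors, and the image batch dimensions are hypothetical values picked for illustration.

```python
import numpy as np

scalar = np.array(3.0)                        # rank 0: a single number
vector = np.array([1.0, 2.0, 3.0])            # rank 1: a list of numbers
matrix = np.array([[1.0, 2.0], [3.0, 4.0]])   # rank 2: rows and columns
# Rank 4: a hypothetical batch of 10 RGB images of 28x28 pixels.
images = np.zeros((10, 28, 28, 3), dtype=np.float32)

for name, tensor in [("scalar", scalar), ("vector", vector),
                     ("matrix", matrix), ("images", images)]:
    print(f"{name}: rank={tensor.ndim}, shape={tensor.shape}, dtype={tensor.dtype}")
```

The rank is simply the number of axes, so going from vectors and matrices to "higher dimensions" only means adding more axes to the same kind of array.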

Keras

This is not a blog about TensorFlow; there are great ones out there. But it was necessary to mention it in order to introduce Keras.

Keras is a high-level neural networks API, written in Python and capable of running on top of TensorFlow, CNTK, or Theano. It was developed with a focus on enabling fast experimentation. Being able to go from idea to result with the least possible delay is key to doing good research.

Keras was created by François Chollet and was the first serious step toward making Deep Learning easy for the masses.

TensorFlow has a Python API which is not that hard, but Keras made it really easy for lots of people to get into Deep Learning. It should be noted that Keras is now officially a part of TensorFlow: www.tensorflow.org/api_docs/python/tf/contrib/keras
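To give a feel for how little code Keras asks for, here is a minimal sketch of defining, training, and using a small network. It assumes a TensorFlow 2.x installation where Keras is available as `tensorflow.keras` (an assumption, since the link above points to an older location), and the toy data, layer sizes, and training settings are hypothetical.

```python
import numpy as np
from tensorflow import keras

# Hypothetical toy data: 64 samples with 4 features and a binary label.
rng = np.random.default_rng(0)
features = rng.normal(size=(64, 4)).astype("float32")
labels = (features.sum(axis=1) > 0).astype("float32")

# Two stacked layers: 4 inputs -> 8 hidden units -> 1 output probability.
model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(8, activation="relu"),
    keras.layers.Dense(1, activation="sigmoid"),
])

model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
model.fit(features, labels, epochs=5, batch_size=16, verbose=0)

# One predicted probability per input row.
predictions = model.predict(features[:3], verbose=0)
print(predictions.shape)
```

Defining the model, compiling it, and fitting it each take a single call, which is the "idea to result with the least possible delay" that Keras aims for.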

Deep Learning Frameworks

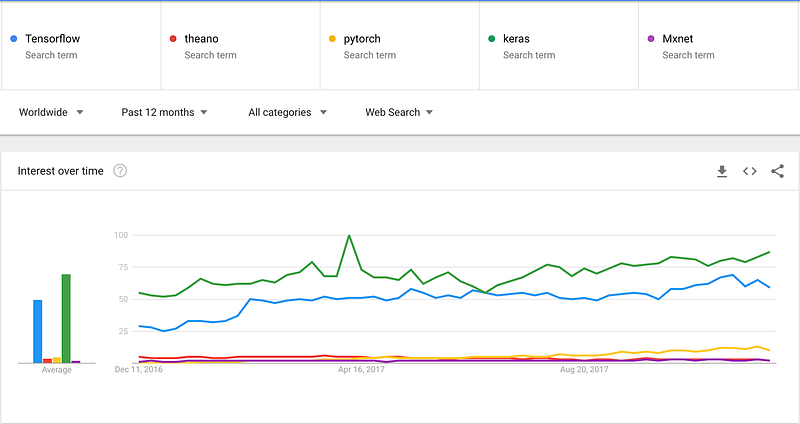

I made a comparison between Deep Learning frameworks.

Keras is the winner for now; it is interesting to see that people prefer an easy interface and usability.

If you want more information about Keras visit this post I made on LinkedIn: www.linkedin.com/feed/update/urn:li:activity:6344255087057211393