Supercharging Visualization with Apache Arrow

Interactive visualization of large datasets on the web has traditionally been impractical. Apache Arrow provides a new way to exchange and visualize data at unprecedented speed and scale.

By Leo Meyerovich (CEO and Co-Founder, Graphistry), Paul Taylor (Senior Engineer, Graphistry), and Jacques Nadeau (CTO and Co-Founder, Dremio)

Minority Report For Your Data

Imagine a future where Minority Report-style data visualizations run in every web browser. That would be a big step forward for critical workflows such as investigating security and fraud incidents, and for surfacing the insights that drive the next level of BI. Today’s options are dominated by rigid Windows desktop tools and slow web apps with clunky dashboards. The Apache Arrow ecosystem, including the first open source layers for improving JavaScript performance, is changing that.

Frustrated with legacy big data vendors for whom “interactive data visualization” does not mean “sub-second”, Dremio, Graphistry, and other leaders in the data world have been gutting the cruft from today’s web stacks. By replacing that cruft with straight-shot technologies like Arrow, we are successfully serving up beyond-native experiences to regular web clients.

To appreciate what is now possible, watch this recent live demo from GTC DC 2017 where Graphistry connects GPUs in the cloud to GPUs in the browser (jump to 19:00).

GPUs began as specialized hardware for accelerating games. With projects like Google’s TensorFlow and the increasing number of vendors in the GPU Open Analytics Initiative (GOAI), the same GPU hardware has been taking over general analytical applications. A modern GPU has thousands of cores, far more than a typical CPU, and many analytical workloads can be parallelized to take advantage of these resources. As a result, machine learning libraries, GPU-based databases, and visualization tools have emerged that dramatically improve the speed of these workloads.

A Different Architecture for Visualization on the Web

Nvidia and our fellow GOAI startups are collaborating to enable enterprises -- and the entire web -- to accelerate analytic workloads end-to-end without ever facing the slowdowns of touching the CPU. However, rich visualization faces a last-mile problem. Most enterprise-grade GPU visual analytics tools require a workstation -- likely shared -- which almost everyone dislikes. Historically, teams have tried to avoid these limitations by using GPU servers as remote desktops that stream pictures into the client browser, Netflix-style. However, these streamed images are painfully static: high-resolution analyst screens require higher bandwidth, and almost all interactions violate interactivity latency budgets by putting network round trips on the critical path. This architectural shortcut meant full end-to-end GPU visual computing was more of a showroom-floor concept: many analysts find such deployments inaccessible, unreliable, pixelated, and frustrating for investigating data.

An alternative approach is remote rendering: the server sends geometry commands to the client, and the client turns those into viewable pixels by leveraging the client’s standard web browser and its local access to a client-side GPU. This is now practical because even mobile phones have great GPUs. Remote rendering done well is akin to running a video game on the client, and the server telling the client what the pieces are and when to move them. Clever applications will transfer surprisingly little. Most interactions like pan and zoom will run entirely on the client at full framerate with few, if any, network calls on the critical path.
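The division of labor described above can be sketched in a few lines. This is only an illustration under stated assumptions: `fetchGeometry` is a hypothetical stand-in for a real network request, and `project` runs on the CPU here, whereas a real client would most likely do this step in a WebGL shader on the GPU.

```javascript
// Hypothetical stand-in for the server: counts how often the client asks for data.
let networkCalls = 0;
function fetchGeometry() {
  networkCalls += 1;
  // Interleaved x, y positions for three points, as compact binary data.
  return new Float32Array([0, 0, 10, 5, -4, 8]);
}

// Client side: fetch the geometry once, then keep it locally.
const positions = fetchGeometry();
const view = { zoom: 1, panX: 0, panY: 0 };

// Map data coordinates to screen coordinates using only local state.
function project(points, currentView) {
  const out = new Float32Array(points.length);
  for (let i = 0; i < points.length; i += 2) {
    out[i] = (points[i] + currentView.panX) * currentView.zoom;
    out[i + 1] = (points[i + 1] + currentView.panY) * currentView.zoom;
  }
  return out;
}

// Simulate a zoom and a pan: both only touch client-side state.
view.zoom = 2;
view.panX = 1;
const screenCoords = project(positions, view);

console.log(Array.from(screenCoords)); // [2, 0, 22, 10, -6, 16]
console.log(networkCalls); // 1
```

However many times the view changes, the network counter stays at one, which is the property that keeps pan and zoom off the network's critical path.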

Unlocking Remote Rendering Performance with Arrow

Implementing remote rendering experiences over typical web architectures built on JSON and a plethora of JavaScript libraries quickly hits two key bottlenecks: 1) networking clogged by large file sizes, and 2) CPU and memory-intensive data serialization.

| Row Order | Columnar |
|---|---|
| [{time: 0, user: 'a'}, {time: 2, user: 'b'}, {time: 4, user: 'c'}, {time: 6, user: 'd'}] | {time: [0, 2, 4, 6], user: ['a', 'b', 'c', 'd']} |

One quick yet big win for the file size is using a columnar format. See the above comparison to a more common row order: redundant boilerplate has been removed so files are a fraction of the original size. The columnar order also plays nicely with binary data representations, which further trims the file size and clears the path for many compute optimizations.

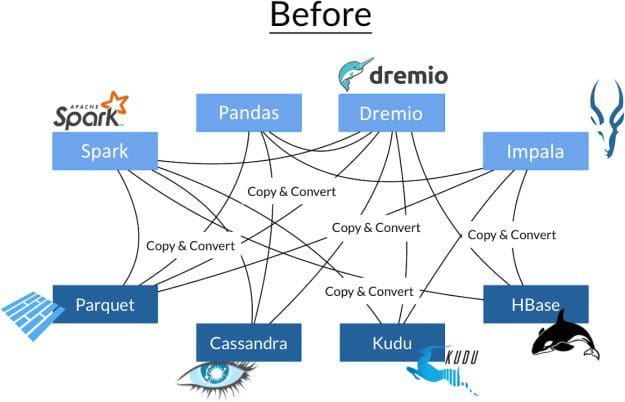
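To make the comparison concrete, here is a minimal sketch that pivots the row-order records from the table into columns and compares their sizes. The `toColumns` helper is hypothetical (it is not part of Arrow or any other library) and assumes every row has the same keys. JSON string length is used only as a rough proxy for file size.

```javascript
// Hypothetical helper: pivot an array of row objects into one array per column.
// Assumes every row has the same keys; otherwise the columns would misalign.
function toColumns(rows) {
  const columns = {};
  for (const row of rows) {
    for (const [key, value] of Object.entries(row)) {
      if (!(key in columns)) columns[key] = [];
      columns[key].push(value);
    }
  }
  return columns;
}

const rows = [
  { time: 0, user: 'a' },
  { time: 2, user: 'b' },
  { time: 4, user: 'c' },
  { time: 6, user: 'd' },
];

const columns = toColumns(rows);

// A numeric column maps directly onto a binary typed array:
// four 32-bit integers occupy 16 bytes with no per-value punctuation.
const timeColumn = Int32Array.from(columns.time);

const rowJsonLength = JSON.stringify(rows).length;
const columnJsonLength = JSON.stringify(columns).length;

console.log(columns);
console.log(`row order: ${rowJsonLength} chars, columnar: ${columnJsonLength} chars`);
console.log(`binary time column: ${timeColumn.byteLength} bytes`);
```

The savings come from writing each field name once per column instead of once per row, so the gap widens as the number of rows grows.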

There are several columnar formats for data on disk (e.g., Apache Parquet). However, before Apache Arrow there was no such standard for in-memory processing. Even with libraries like Google’s FlatBuffers sitting on top of native ArrayBuffers, each process uses a private model, and data must be serialized, deserialized, and likely transformed between these models. This processing can even dominate the overall workload, consuming 60%-70% of CPU time. In addition, each process creates a copy of the data, increasing use of scarce memory resources. This is especially important for GPUs, which have only a fraction of the RAM available to CPUs.

Apache Arrow was designed to eliminate the overhead of serialization by providing a standard way of representing columnar data for in-memory processing. When using Arrow, multiple processes can access the same buffer, with zero copy and zero serialization/deserialization of the data.
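The zero-copy idea can be illustrated with plain JavaScript typed arrays. This sketch does not use the Arrow library, and it stays within a single process; it only shows how multiple views can read and write one buffer without copying, in contrast to a serialize/parse round trip.

```javascript
// One shared block of memory holding four 32-bit integers.
const buffer = new ArrayBuffer(4 * Int32Array.BYTES_PER_ELEMENT);

// A "producer" and a "consumer" each create a view; neither copies the bytes.
const producerView = new Int32Array(buffer);
const consumerView = new Int32Array(buffer);

producerView.set([0, 2, 4, 6]);

// The consumer sees the values immediately, with no serialize/parse step.
console.log(Array.from(consumerView)); // [0, 2, 4, 6]

// A view over a sub-range is also zero copy: writes land in the same memory.
const tail = new Int32Array(buffer, 2 * Int32Array.BYTES_PER_ELEMENT, 2);
tail[0] = 40;
console.log(producerView[2]); // 40

// By contrast, a JSON round trip produces an independent copy of the data.
const copied = JSON.parse(JSON.stringify(Array.from(producerView)));
copied[0] = 99;
console.log(producerView[0]); // 0
```

Arrow applies the same principle with a standardized columnar layout, so that different libraries and processes can agree on what the shared bytes mean.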

This standard provides dramatic improvements in efficiency: IBM observed a 53x speed up in data processing with Spark and Python when using Arrow. In turn, the Graphistry platform has been adding Arrow ingest support, which means its Spark and Python users can get data to the screen even faster. Every time a framework adds Arrow support, the entire community reaps the rewards, which is an unusual yet powerful case of network effects.

npm install apache-arrow

Native support for Arrow is now coming to the JavaScript community as part of its acceptance into the overall Apache Arrow project. For example, the following NodeJS snippet queries the GPU database MapD and provides direct native access to the data in JavaScript:

    MapD.open(host, port)
      .connect(db, user, password)
      .flatMap((session) =>
        session.queryDF(`
          SELECT *
          FROM events
          WHERE alert ILIKE malware
        `).disconnect())
      .map(([schema, records]) =>
        Table.from([schema, records]))

When Graphistry started, almost every piece of the end-to-end GPU platform had to be built from scratch. Our datacenter neighbors were almost exclusively bitcoin miners! As deep learning helped developers see that the GPU waters were safe, many amazing projects emerged, and the focus is now on interoperability. The JavaScript Arrow library ships with support for embedding into most JavaScript module systems, and the CPU mode is being used for tasks like making native calls into the MapD database as well as Python data science libraries like Pandas. The latter is especially exciting because it demonstrates how the enormous world of JavaScript software is now poised to include data science and AI as yet another one of its competencies… and the V0 already has almost no performance cost.

The core JavaScript Arrow and GOAI contributors are now planning how to bring in further GPU and compute support. For example, Plasma provides simple, high-quality lifecycle management for sharing Arrow data in CPU RAM, and similar ideas should apply when composing GPU frameworks in JavaScript. The Arrow project is already changing what is practical with JavaScript, and we are just getting started!

Bios:

Leo Meyerovich, CEO and Co-Founder, Graphistry. Leo Meyerovich co-founded Graphistry to supercharge visual investigations. Over the last 15 years at UC Berkeley and various labs, Leo's research resulted in the first functional reactive web framework, the first GPU visual analytics language, the first parallel web browser, and the first security policy change-impact analyzer, and examined the social foundations of programming languages. This work led to core technologies behind widely adopted web frameworks and browsers from companies including Mozilla, Facebook, and Microsoft, and received awards (SIGPLAN best of year, 2 best papers) and support from the NSF, Qualcomm, and others.

Paul Taylor, Senior Engineer, Graphistry. Paul Taylor is responsible for frontend technologies at Graphistry. He is an active primary contributor to the popular web frameworks RxJS, Falcor, and now the JavaScript implementation of the Apache Arrow project. He was previously at Netflix where he helped rewrite RxJS and Falcor to run on almost all devices.

Jacques Nadeau, Co-Founder and CTO, Dremio. Jacques Nadeau is co-founder and CTO of Dremio. He is also the PMC Chair of the open source Apache Arrow project, spearheading the project’s technology and community. Previously he was MapR’s lead architect for distributed systems technologies. He is an industry veteran with more than 15 years of big data and analytics experience. In addition, he was co-founder and CTO of search engine startup YapMap. Before that, he was director of new product engineering with Quigo (contextual advertising, acquired by AOL in 2007). He also built the Avenue A | Razorfish analytics data warehousing system and associated services practice (acquired by Microsoft).
