Top 12 Essential Command Line Tools for Data Scientists

This post is a short overview of a dozen Unix-like operating system command line tools which can be useful for data science tasks. The list does not include general file management commands (pwd, ls, mkdir, rm, ...) or remote session management tools (rsh, ssh, ...). It is instead made up of utilities that are useful from a data science perspective, generally those related to data inspection and processing. Most of them ship with a typical Unix-like operating system, though wget may need to be installed separately on some systems.

It is admittedly elementary, but I encourage you to seek out additional command examples where appropriate. Tool names link to Wikipedia entries as opposed to man pages, as the former are generally more friendly to newcomers, in my view.

1. wget

wget is a file retrieval utility, used for downloading files from remote locations.

In its most basic form, wget is used as follows to download a remote file:

    ~$ wget https://raw.githubusercontent.com/uiuc-cse/data-fa14/gh-pages/data/iris.csv
    --2018-03-20 18:27:21--  https://raw.githubusercontent.com/uiuc-cse/data-fa14/gh-pages/data/iris.csv
    Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 151.101.20.133
    Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|151.101.20.133|:443... connected.
    HTTP request sent, awaiting response... 200 OK
    Length: 3716 (3.6K) [text/plain]
    Saving to: ‘iris.csv’
    iris.csv 100%[=======================================================================================================>] 3.63K --.-KB/s in 0s
    2018-03-20 18:27:21 (19.9 MB/s) - ‘iris.csv’ saved [3716/3716]
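Beyond the most basic form, a couple of wget options come up often enough to be worth a quick sketch. The helper name fetch_dataset and the output filename iris_copy.csv are made up for illustration; -q (quiet) and -O (output file) are standard wget options.

```shell
# Hypothetical helper: download a URL ($1) to a chosen filename ($2).
#   -q  suppresses the progress output shown above
#   -O  writes the download to the given file instead of the remote name
fetch_dataset() {
    wget -q -O "$2" "$1"
}

# Example call (needs network access, so it is left commented out here):
# fetch_dataset https://raw.githubusercontent.com/uiuc-cse/data-fa14/gh-pages/data/iris.csv iris_copy.csv
```

Wrapping the call in a function is only a convenience; the same flags work directly at the prompt.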

2. cat

cat is a tool for outputting file contents to the standard output. The name comes from concatenate.

More complex use cases include combining files together (actual concatenation), appending file(s) to another, numbering file lines, and more.

    ~$ cat iris.csv
    sepal_length,sepal_width,petal_length,petal_width,species
    5.1,3.5,1.4,0.2,setosa
    4.9,3,1.4,0.2,setosa
    4.7,3.2,1.3,0.2,setosa
    4.6,3.1,1.5,0.2,setosa
    5,3.6,1.4,0.2,setosa
    ...
    6.7,3,5.2,2.3,virginica
    6.3,2.5,5,1.9,virginica
    6.5,3,5.2,2,virginica
    6.2,3.4,5.4,2.3,virginica
    5.9,3,5.1,1.8,virginica
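The more complex use cases mentioned above might look something like the following. This is a minimal sketch: the file names part1.csv, part2.csv, and combined.csv are invented, the rows are a small made-up sample in the iris layout, and it assumes mktemp is available and that your cat supports -n (GNU and BSD versions do).

```shell
# Work in a throwaway directory (mktemp is assumed to be available).
workdir=$(mktemp -d)

# Two small made-up files sharing the iris column layout.
printf '5.1,3.5,1.4,0.2,setosa\n4.9,3,1.4,0.2,setosa\n' > "$workdir/part1.csv"
printf '6.3,3.3,6,2.5,virginica\n' > "$workdir/part2.csv"

# Concatenate both files into a new one.
cat "$workdir/part1.csv" "$workdir/part2.csv" > "$workdir/combined.csv"

# Append one file to the end of another with >>.
cat "$workdir/part2.csv" >> "$workdir/part1.csv"

# Number the lines of the output with -n.
cat -n "$workdir/combined.csv"
```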

3. wc

The wc command produces line, word, and byte counts for text files. Run without options, wc prints a single line per file consisting of, left to right, the line count, the word count, the byte count, and the filename. Note that the rows of iris.csv contain no whitespace, so each line counts as a single word, which is why the line and word counts below are identical.

    ~$ wc iris.csv
    151 151 3716 iris.csv
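Each count can also be requested on its own with -l (lines), -w (words), or -c (bytes). As a rough sketch, here is one way to get the number of data rows in a CSV with a header; sample.csv and its rows are a made-up stand-in for the real file, and mktemp is assumed to be available.

```shell
# Throwaway directory with a tiny made-up CSV in the iris layout.
workdir=$(mktemp -d)
cat > "$workdir/sample.csv" <<'EOF'
sepal_length,sepal_width,petal_length,petal_width,species
5.1,3.5,1.4,0.2,setosa
4.9,3,1.4,0.2,setosa
6.3,3.3,6,2.5,virginica
EOF

# -l prints only the line count; reading from stdin omits the filename.
line_count=$(wc -l < "$workdir/sample.csv")

# Subtract the header line to get the number of data rows.
row_count=$((line_count - 1))
echo "rows: $row_count"
```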

4. head

head outputs the first n lines of a file (10, by default) to standard output. The number of lines displayed can be set with the -n option.

    ~$ head -n 5 iris.csv
    sepal_length,sepal_width,petal_length,petal_width,species
    5.1,3.5,1.4,0.2,setosa
    4.9,3,1.4,0.2,setosa
    4.7,3.2,1.3,0.2,setosa
    4.6,3.1,1.5,0.2,setosa

5. tail

Any guesses as to what tail does?

    ~$ tail -n 5 iris.csv
    6.7,3,5.2,2.3,virginica
    6.3,2.5,5,1.9,virginica
    6.5,3,5.2,2,virginica
    6.2,3.4,5.4,2.3,virginica
    5.9,3,5.1,1.8,virginica
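Two small variations on head and tail are handy for CSV work: skipping the header, and pulling out a range of lines. The sketch below uses a made-up sample.csv so it can run anywhere, and assumes mktemp is available.

```shell
# Throwaway directory with a tiny made-up CSV in the iris layout.
workdir=$(mktemp -d)
cat > "$workdir/sample.csv" <<'EOF'
sepal_length,sepal_width,petal_length,petal_width,species
5.1,3.5,1.4,0.2,setosa
4.9,3,1.4,0.2,setosa
6.3,3.3,6,2.5,virginica
EOF

# tail -n +2 starts output at line 2, i.e. everything except the header.
data_rows=$(tail -n +2 "$workdir/sample.csv")
echo "$data_rows"

# head and tail can be chained to pull out a line range, here lines 2-3.
middle=$(head -n 3 "$workdir/sample.csv" | tail -n 2)
echo "$middle"
```

The +2 form means you do not need to know the number of rows in advance, unlike counting back from the end of the file.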

6. find

find is a utility for searching the file system for particular files.

The following searches the directory tree starting in the current directory (".") for regular files ("-type f") whose names start with "iris" followed by any number of characters ("-name 'iris*'"):

    ~$ find . -name 'iris*' -type f
    ./iris.csv
    ./notebooks/kmeans-sharding-init/sharding/tests/results/iris_time_results.csv
    ./notebooks/ml-workflows-python-scratch/iris_raw.csv
    ./notebooks/ml-workflows-python-scratch/iris_clean.csv
    ...
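find can also run a command on whatever it matches. The following is a sketch only: the directory layout and file names are invented for the example, and mktemp is assumed to be available.

```shell
# Build a small made-up directory tree to search.
workdir=$(mktemp -d)
mkdir -p "$workdir/notebooks"
printf 'a,b\n1,2\n' > "$workdir/iris.csv"
printf 'a,b\n1,2\n3,4\n' > "$workdir/notebooks/iris_clean.csv"
printf 'not a csv\n' > "$workdir/notebooks/readme.txt"

# Count the regular files ending in .csv anywhere under the tree.
csv_count=$(find "$workdir" -name '*.csv' -type f | wc -l)
echo "csv files: $csv_count"

# Run wc -l on every match; "{} +" hands the matches to wc in batches.
find "$workdir" -name 'iris*' -type f -exec wc -l {} +
```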

7. cut

cut is used for slicing out sections of a line of text from a file. While these slices can be made using a variety of criteria, cut can be useful for extracting columnar data from CSV files.

This outputs the fifth column ("-f 5") of the iris.csv file using the comma as field delimiter ("-d ','"):

    ~$ cut -d ',' -f 5 iris.csv
    species
    setosa
    setosa
    setosa
    ...
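As for the other slicing criteria, -f accepts lists and ranges of fields, and -c selects character positions instead. A quick sketch against a made-up sample.csv (mktemp assumed available):

```shell
# Throwaway directory with a tiny made-up CSV in the iris layout.
workdir=$(mktemp -d)
cat > "$workdir/sample.csv" <<'EOF'
sepal_length,sepal_width,petal_length,petal_width,species
5.1,3.5,1.4,0.2,setosa
6.3,3.3,6,2.5,virginica
EOF

# Fields 1 through 2 plus field 5; the output keeps the comma delimiter.
selected=$(cut -d ',' -f 1-2,5 "$workdir/sample.csv")
echo "$selected"

# -c works on character positions: the first three characters of each line.
prefixes=$(cut -c 1-3 "$workdir/sample.csv")
echo "$prefixes"
```

Keep in mind that cut splits on every delimiter it sees, so it is best suited to simple CSV files without quoted fields that contain commas.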

8. uniq

uniq filters its input by collapsing identical consecutive lines into a single copy and writing the result to standard output. On its own this may not seem terribly interesting, but it becomes useful when building pipelines at the command line (piping the output of one command into the input of another, and so on).

The following gives us the distinct iris dataset class names held in the fifth column, along with a count of each. It works here because the rows of iris.csv are already grouped by species:

    ~$ tail -n 150 iris.csv | cut -d "," -f 5 | uniq -c
         50 setosa
         50 versicolor
         50 virginica
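When the values are not already grouped, uniq on its own will not merge them, since it only compares neighbouring lines. A common idiom is to sort first. The labels.txt file below is made up for the sketch, and mktemp is assumed to be available.

```shell
# A made-up list of class labels in no particular order.
workdir=$(mktemp -d)
cat > "$workdir/labels.txt" <<'EOF'
setosa
virginica
setosa
versicolor
virginica
setosa
EOF

# No two neighbouring lines are equal, so nothing collapses: six lines out.
unsorted_counts=$(uniq -c "$workdir/labels.txt")
echo "$unsorted_counts"

# Sorting first groups identical lines, so the counts are per label.
sorted_counts=$(sort "$workdir/labels.txt" | uniq -c)
echo "$sorted_counts"
```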

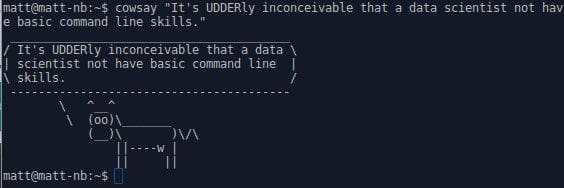

What the cow say.

9. awk

awk isn't actually a "command," but is instead a full programming language. It is meant for processing and extracting text, and can be invoked from the command line in single line command form.

Mastery of awk takes some time, but until then here is a sample of what it can accomplish. Our sample file, iris.csv, is rather limited (especially when it comes to diversity of text), so a simple example will do: this line invokes awk, searches each line of the given file ("iris.csv") for the string "setosa", and prints every matching line (held in the $0 variable) to standard output. Only the first few lines of output are shown:

    ~$ awk '/setosa/ { print $0 }' iris.csv
    5.1,3.5,1.4,0.2,setosa
    4.9,3,1.4,0.2,setosa
    4.7,3.2,1.3,0.2,setosa
    4.6,3.1,1.5,0.2,setosa
    5,3.6,1.4,0.2,setosa
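To hint at the "full programming language" side of awk, here is a sketch that splits each line into fields and computes the mean of the first column per species. The sample.csv rows are a small made-up sample, and mktemp is assumed to be available.

```shell
# Throwaway directory with a tiny made-up CSV in the iris layout.
workdir=$(mktemp -d)
cat > "$workdir/sample.csv" <<'EOF'
sepal_length,sepal_width,petal_length,petal_width,species
5.1,3.5,1.4,0.2,setosa
4.9,3,1.4,0.2,setosa
6.3,3.3,6,2.5,virginica
5.8,2.7,5.1,1.9,virginica
EOF

# -F ',' splits each line on commas; NR > 1 skips the header row.
# Sum column 1 (sepal_length) per species (column 5), then print the means.
# awk's "for (s in sum)" order is unspecified, so the result is piped to sort.
means=$(awk -F ',' 'NR > 1 { sum[$5] += $1; n[$5]++ }
    END { for (s in sum) printf "%s %.2f\n", s, sum[s] / n[s] }' \
    "$workdir/sample.csv" | sort)
echo "$means"
```

For this sample the result is a mean of 5.00 for setosa and 6.05 for virginica.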

10. grep

grep is another text processing tool, this one for string and regular expression matching.

    ~$ grep -i "vir" iris.csv
    6.3,3.3,6,2.5,virginica
    5.8,2.7,5.1,1.9,virginica
    7.1,3,5.9,2.1,virginica
    ...

If you spend much time doing text processing at the command line, grep is definitely a tool you will get to know well. See here for some more useful details.
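A few more grep options that tend to earn their keep, sketched against a made-up sample.csv (mktemp assumed available): -c counts matches, -v inverts the match, and -E switches to extended regular expressions.

```shell
# Throwaway directory with a tiny made-up CSV in the iris layout.
workdir=$(mktemp -d)
cat > "$workdir/sample.csv" <<'EOF'
sepal_length,sepal_width,petal_length,petal_width,species
5.1,3.5,1.4,0.2,setosa
4.9,3,1.4,0.2,setosa
6.3,3.3,6,2.5,virginica
5.8,2.7,5.1,1.9,virginica
EOF

# -c prints the number of matching lines instead of the lines themselves.
vir_count=$(grep -c 'virginica' "$workdir/sample.csv")
echo "virginica rows: $vir_count"

# -v inverts the match: every line that does NOT contain "setosa".
grep -v 'setosa' "$workdir/sample.csv"

# -E enables extended regular expressions; ^ anchors at the start of the line,
# so this keeps rows whose first value starts with "4." or "5.".
small_rows=$(grep -E '^[45]\.' "$workdir/sample.csv")
echo "$small_rows"
```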

11. sed

sed is a stream editor, yet another text processing and transformation tool, similar to awk. Let's use it to change the occurrences of "setosa" in our iris.csv file to "iris-setosa", writing the result to a new file, output.csv:

    ~$ sed 's/setosa/iris-setosa/g' iris.csv > output.csv
    ~$ head output.csv
    sepal_length,sepal_width,petal_length,petal_width,species
    5.1,3.5,1.4,0.2,iris-setosa
    4.9,3,1.4,0.2,iris-setosa
    4.7,3.2,1.3,0.2,iris-setosa
    ...
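sed can also select or drop lines by number, and substitutions can be anchored so they only touch one part of a line. A short sketch against a made-up sample.csv (mktemp assumed available):

```shell
# Throwaway directory with a tiny made-up CSV in the iris layout.
workdir=$(mktemp -d)
cat > "$workdir/sample.csv" <<'EOF'
sepal_length,sepal_width,petal_length,petal_width,species
5.1,3.5,1.4,0.2,setosa
4.9,3,1.4,0.2,setosa
6.3,3.3,6,2.5,virginica
EOF

# -n suppresses the default output; 2,3p prints only lines 2 through 3.
sed -n '2,3p' "$workdir/sample.csv"

# 1d deletes the first line (the header) from the output.
no_header=$(sed '1d' "$workdir/sample.csv")
echo "$no_header"

# Anchoring with $ limits the substitution to the end of the line,
# i.e. the species column, rather than anywhere "setosa" appears.
renamed=$(sed 's/,setosa$/,iris-setosa/' "$workdir/sample.csv")
echo "$renamed"
```

None of these commands change sample.csv itself; as in the example above, the result goes to standard output unless you redirect it.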

12. history

history is pretty straightforward, but also pretty useful, especially if you need to reproduce some data preparation you carried out at the command line.

    ~$ history
      547  tail iris.csv
      548  tail -n 150 iris.csv
      549  tail -n 150 iris.csv | cut -d "," -f 5 | uniq -c
      550  clear
      551  history

And there you have a simple introduction to 12 handy command line tools. This is only a taste of what is possible at the command line for data science (or any other goal, for that matter). Free yourself from the mouse and watch your productivity increase.
