Naive Bayes from Scratch using Python only – No Fancy Frameworks

We provide a complete, step-by-step Pythonic implementation of Naive Bayes. Because it keeps in mind the mathematical and probabilistic difficulties we usually face when trying to dive deep into the algorithmic insights of ML algorithms, this post should be ideal for beginners.

By Aisha Javed.

Unfolding Naive Bayes from Scratch! Take-2

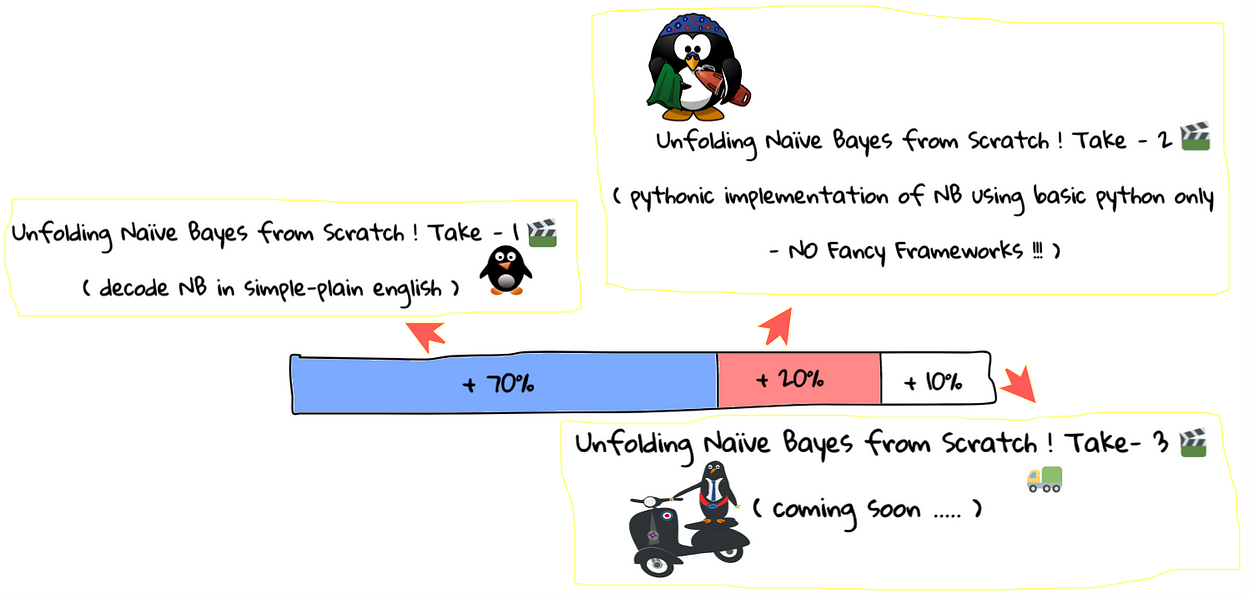

In my previous blog post, Unfolding Naive Bayes from Scratch! Take-1, I tried to decode the rocket science behind the working of the Naive Bayes (NB) ML algorithm, and after going through its algorithmic insights, you too must have realized that it's quite a painless algorithm. In this blog post, we will walk through its complete, step-by-step Pythonic implementation (using basic Python only), and it will become evident how easy it is to code NB from scratch, and that NB is not that naive at classifying!

Who’s the Target Audience? ML Beginners

I have always wanted to decipher ML for absolute beginners, and as the saying goes, if you can’t explain it, you probably didn’t understand it. So yes, this blog post too is especially intended for ML beginners looking for human-friendly ML resources that go in depth without the gibberish jargon of those creepy Greek mathematical formulas (honestly, that scary-looking math never made any sense to me either!)

Outcome of this Tutorial — A Hands-On Pythonic Implementation of NB

As mentioned above, this post offers a complete walk-through of a Pythonic NB implementation.

By the end of this blog post, you will have covered 90% of understanding and implementing NB; only the remaining 10%, mastering it from an application point of view, will be left!

Defining the Roadmap

Milestone # 1: Data Preprocessing Function

Milestone # 2: Implementation of NaiveBayes Class — Defining Functions for Training & Testing

Milestone # 3: Training NB Model on Training Dataset

Milestone # 4: Testing Using Trained NB Model

Milestone # 5: Proving that the Code for NaiveBayes Class is Absolutely Generic!

Before we begin writing code for Naive Bayes in Python, I assume you are familiar with:

- Python Lists

- NumPy & just a tad bit of vectorized code

- Dictionaries

- Regex

Let’s Begin with the Pythonic Implementation!

Defining Data Preprocessing Function

Let’s begin with a few imports that we will need while implementing Naive Bayes.
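As a minimal sketch, assuming the preprocessing step only has to normalize raw text before it is split on whitespace, a function like the hypothetical preprocess_string below needs nothing beyond Python's built-in re module. It keeps ASCII letters and whitespace, collapses repeated whitespace, and lower-cases the result; these cleaning rules are illustrative assumptions rather than the author's exact choices.

```python
import re


def preprocess_string(text):
    """Hypothetical helper: normalize raw text before whitespace tokenization."""
    # Replace every run of characters that are not ASCII letters or whitespace with a space
    cleaned = re.sub(r"[^a-z\s]+", " ", text, flags=re.IGNORECASE)
    # Collapse runs of whitespace (spaces, tabs, newlines) into a single space
    cleaned = re.sub(r"\s+", " ", cleaned)
    # Trim the ends and lower-case so "Free" and "free" count as the same word
    return cleaned.strip().lower()


print(preprocess_string("Hello, World!! 42 times"))  # → hello world times
```

Cleaning the text up front means the classifier can later tokenize with a plain split on spaces without punctuation or casing creating duplicate vocabulary entries.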

Milestone # 1 Achieved

Implementation of NaiveBayes Class — Defining Functions for Training & Testing

The Bonus Part: we will be writing fully generic code for the NB classifier! No matter how many classes the training dataset contains, and whatever text dataset is given, it will still be able to train a fully working model.

The code for NaiveBayes is a little extensive, but we only need to spend 10–15 minutes at most to grasp it! After that, you can technically call yourself an “NB Guru”.

What is this code doing?

There are in total four functions defined in the NaiveBayes Class:

1. def addToBow(self,example,dict_index)
2. def train(self,dataset,labels)
3. def getExampleProb(self,test_example)
4. def test(self,test_set)

The code revolves around two major functions, i.e., the train and test functions. Once you understand the statements inside these two functions, you will know what the code is actually doing and in what order the other two functions are called.

1. Training function that trains the NB model: def train(self,dataset,labels)
2. Testing function that is used to predict class labels for the given test examples: def test(self,test_set)

The other two functions are defined to supplement these two major functions:

1. BoW function that supplements the training function. It is called by the train function; it simply splits the given example, using a space as the delimiter, and adds every resulting word to its corresponding BoW: def addToBow(self,example,dict_index)
2. Probability function that supplements the test function. It is called by the test function; it estimates the probability of the given test example so that the example can be assigned a class label: def getExampleProb(self,test_example)
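The quantities these four functions work with can be written down compactly. The post does not state the formulas explicitly, so the following assumes the standard multinomial NB model with add-one (Laplace) smoothing, scored in log space. Here N_c is the number of training examples labelled c, N the total number of training examples, count(w, c) the number of times word w appears in class c's BoW, V the vocabulary across all classes, and the sum over w ∈ x runs over every word of the test example x, repeats included:

```latex
\hat{P}(c) = \frac{N_c}{N}, \qquad
\hat{P}(w \mid c) = \frac{\operatorname{count}(w, c) + 1}{\sum_{w' \in V} \operatorname{count}(w', c) + |V|}

\hat{c} = \arg\max_{c} \Big[ \log \hat{P}(c) + \sum_{w \in x} \log \hat{P}(w \mid c) \Big]
```

Under this reading, train (through addToBow) gathers the counts, getExampleProb evaluates the bracketed score for every class, and test picks the class with the largest score. Summing logarithms instead of multiplying raw probabilities avoids numerical underflow on long documents, and the +1 stops a single unseen word from zeroing out an entire class.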

You can view the above code in this Jupyter Notebook too
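For readers who want something runnable right here, the following is a minimal sketch of what a class with these four methods could look like, assuming a multinomial model with add-one (Laplace) smoothing and log-space scoring. Only the four method names and their signatures come from this post; the constructor argument unique_classes, the attributes bow_dicts, log_priors and denominators, and the toy spam/ham data are hypothetical illustrations, not the author's code.

```python
from collections import defaultdict

import numpy as np


class NaiveBayes:
    """Hypothetical sketch of a multinomial Naive Bayes text classifier.

    Only the four method names/signatures come from the article; the bodies,
    the constructor argument and the attribute names are assumptions.
    """

    def __init__(self, unique_classes):
        # Works for any number of classes, e.g. np.unique(train_labels)
        self.classes = np.asarray(unique_classes)

    def addToBow(self, example, dict_index):
        # Whitespace tokenization; add every token to this class's bag of words
        for token in example.split():
            self.bow_dicts[dict_index][token] += 1

    def train(self, dataset, labels):
        dataset = np.asarray(dataset, dtype=object)
        labels = np.asarray(labels)
        # One BoW (word -> count) per class
        self.bow_dicts = [defaultdict(int) for _ in self.classes]
        for idx, cat in enumerate(self.classes):
            for example in dataset[labels == cat]:
                self.addToBow(example, idx)
        # Log prior of each class: share of training examples carrying that label
        self.log_priors = np.log([np.mean(labels == cat) for cat in self.classes])
        # Shared vocabulary size, used in the add-one smoothing denominator
        vocab = set()
        for bow in self.bow_dicts:
            vocab.update(bow.keys())
        self.denominators = np.array(
            [sum(bow.values()) + len(vocab) for bow in self.bow_dicts], dtype=float
        )

    def getExampleProb(self, test_example):
        # Unnormalized log posterior of the example under each class
        scores = self.log_priors.copy()
        for idx, bow in enumerate(self.bow_dicts):
            for token in test_example.split():
                # .get avoids inserting unseen words; they still get 1 / denominator
                scores[idx] += np.log((bow.get(token, 0) + 1) / self.denominators[idx])
        return scores

    def test(self, test_set):
        # Predict the highest-scoring class for every test example
        return np.array([self.classes[np.argmax(self.getExampleProb(ex))] for ex in test_set])


# Hypothetical toy data, for illustration only
train_docs = ["free prize money now", "win free money",
              "meeting schedule for monday", "project meeting notes"]
train_labels = ["spam", "spam", "ham", "ham"]

nb = NaiveBayes(np.unique(train_labels))
nb.train(train_docs, train_labels)
print(nb.test(["free money", "monday meeting"]))  # → ['spam' 'ham']
```

Because the classes are taken from whatever labels are passed in, the same interface trains a two-class or a ten-class model without changes, which is the kind of genericity this section aims for. Reading counts with bow.get(token, 0) rather than bow[token] at test time keeps unseen test words from being silently inserted into the trained defaultdict counts.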

It is much easier to organize and reuse the code if we define an NB class rather than use the traditional structured programming approach. That’s the reason for defining an NB class with all its relevant functions inside it.

We don’t just want to write code; rather, we want to write beautiful, neat and clean, handy, and reusable code. Yes, that’s right: we want to possess all the traits that a good data scientist could possibly have!

And guess what? Whenever we deal with a text classification problem that we intend to solve using NB, we will simply instantiate an NB object and, through the same programming interface, train an NB classifier. Plus, since it is a general principle of object-oriented programming that only functions relevant to a class are defined inside that class, all functions that are not relevant to the NB class will be defined separately.

Milestone # 2 Achieved