A Crash Course in Game Theory for Machine Learning: Classic and New Ideas

Game theory is experiencing a renaissance driven by the evolution of AI. What are some classic and new ideas that data scientists should be aware of?

Game theory is one of the most fascinating areas of mathematics, and it has influenced diverse fields such as economics, the social sciences, biology and, obviously, computer science. There are many ways to think about game theory, but one that I find really helpful, although overly simplistic, is:

game theory is probabilities with incentives

Games are playing a key role in the evolution of artificial intelligence (AI). For starters, game environments are becoming a popular training mechanism in areas such as reinforcement learning and imitation learning. In theory, any multi-agent AI system can be subjected to gamified interactions between its participants. The branch of mathematics that formulates the principles of games is known as game theory. In the context of AI and deep learning systems, game theory is essential to enable some of the key capabilities required in multi-agent environments in which different AI programs need to interact or compete in order to accomplish a goal.

The history of game theory is tied to the history of computer science. Much of the current research in game theory dates back to the work of computer science pioneers like Alan Turing and John von Neumann. The famous Nash equilibrium, popularized by the movie “A Beautiful Mind”, is the cornerstone of many AI interactions in modern systems. However, modeling an AI universe using the principles of game theory often goes beyond the Nash equilibrium. A good place to start understanding the implications of architecting AI systems using principles of game theory is to understand the different types of games that we typically encounter in our social or economic interactions.

Every day, we participate in hundreds of interactions that are based on game dynamics. However, the architecture of those gamified environments is completely different from one to the next, and so are the incentives and goals of the participants. How can we apply some of these principles to the modeling of AI agents? That’s a challenge that is pushing entire segments of AI research such as multi-agent reinforcement learning.

While games are, obviously, the most visible materialization of game theory, they are far from the only space in which those concepts are applied. From that perspective, there are many other areas that can be influenced by the combination of game theory and AI. The fact is that most scenarios that involve multiple “participants” collaborating or competing to accomplish a task can be gamified and improved using AI techniques. Even though the previous statement is a generalization, I hope it conveys the point that combining game theory and AI is a way to think about and model software systems rather than a specific technique.

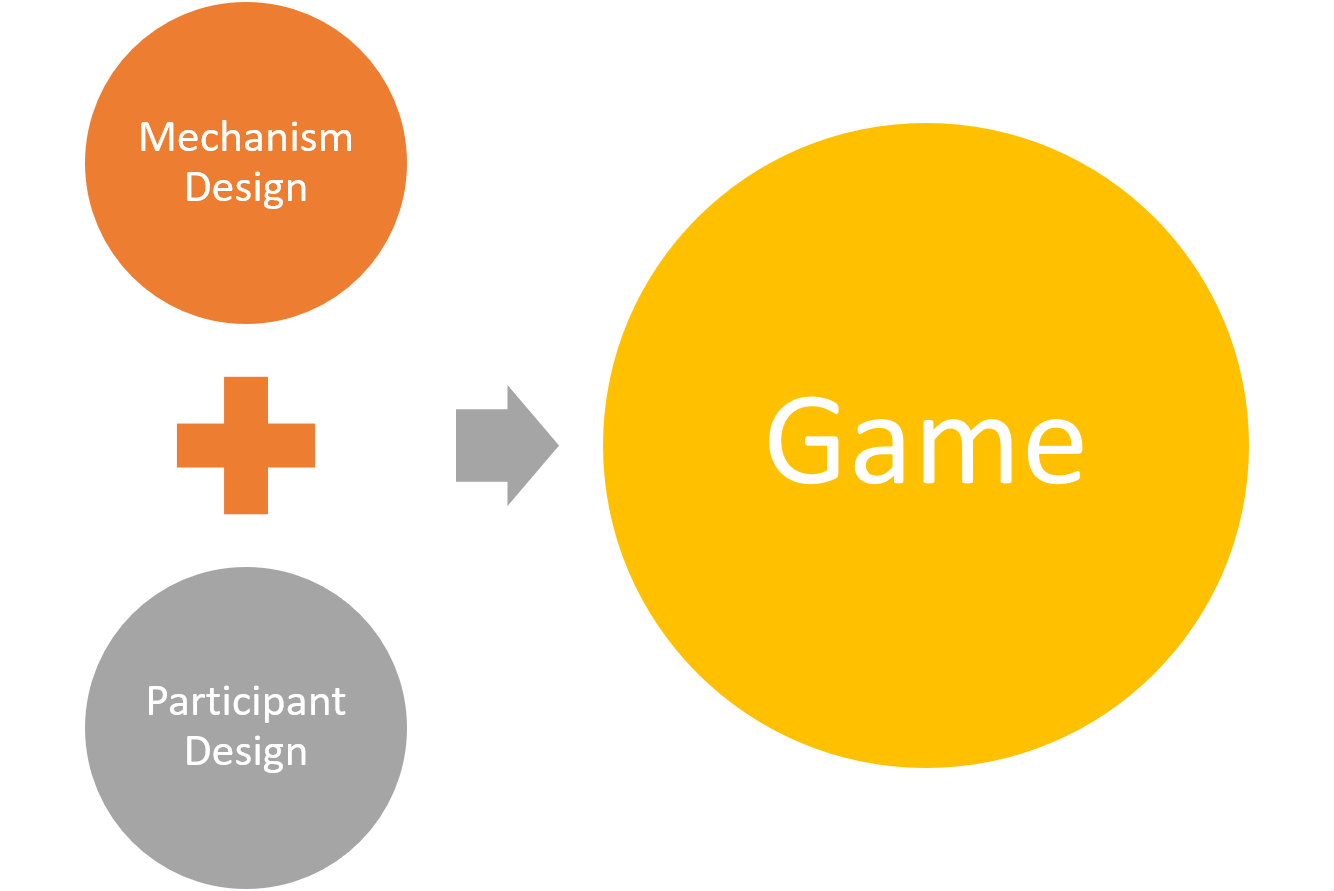

For an AI scenario to be a good candidate for using game theory, it should involve more than one participant. For instance, a sales forecast optimization AI system such as Salesforce Einstein is not an ideal candidate for applying game theory principles. However, in a multi-participant environment, game theory can be incredibly effective. Architecting game dynamics in an AI system can be summarized in two fundamental steps:

- Participant Design: Game theory can be used to optimize the decision of a participant in order to obtain the maximum utility.

- Mechanism Design: Inverse game theory focuses on designing a game for a group of intelligent participants. Auctions are a classic example of mechanism design.
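As a rough illustration of the participant design step, the sketch below picks the action with the highest expected utility given a belief about what the other participant will do. The function name `best_response`, the payoff numbers and the belief values are all hypothetical and chosen only for illustration.

```python
def best_response(payoffs, opponent_mix):
    """Return (index of the best action, expected utility of every action).

    payoffs[i][j] is this participant's utility when it plays action i and
    the opponent plays action j; opponent_mix[j] is the believed probability
    that the opponent plays action j.
    """
    expected = [
        sum(prob * utility for prob, utility in zip(opponent_mix, row))
        for row in payoffs
    ]
    best = max(range(len(expected)), key=expected.__getitem__)
    return best, expected


# Hypothetical payoffs: action 0 pays off only if the opponent also plays 0,
# action 1 is a safe option that always yields 1.
payoffs = [[3, 0], [1, 1]]

print(best_response(payoffs, [0.25, 0.75]))  # opponent rarely plays 0
print(best_response(payoffs, [0.5, 0.5]))    # opponent plays 0 half the time
```

The same participant switches from the safe action to the risky one as its belief about the opponent changes, which is the kind of decision that participant design tries to optimize.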

5 Types of Games Data Scientists Should Know About

Suppose that we are modeling an AI system that involves multiple agents that will collaborate and compete to accomplish a specific goal. That’s a classic game theory scenario. Since its inception in the 1940s, game theory has focused on modeling the most common interaction patterns that we are now seeing every day in multi-agent AI systems. Understanding the different types of game dynamics in an environment is key to designing efficient gamified AI systems. At a high level, there are five criteria that I like to use to understand the game dynamics in AI environments:

Symmetric vs. Asymmetric

One of the simplest classifications of games is based on their symmetry. A symmetric game describes an environment in which each player has the same goals and the results depend only on the strategies involved, not on who plays them. Chess is a classic example of a symmetric game. Many of the situations we encounter in the real world lack the mathematical elegance of symmetry, as participants often have different and even conflicting goals. A business negotiation is an example of an asymmetric game in which each party has different goals and evaluates the results from a different perspective (e.g., winning a contract vs. minimizing an investment).
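For two-player games written as payoff matrices, one common way to make symmetry concrete is to check that swapping the players' roles leaves the payoffs unchanged, which means the column player's matrix is the transpose of the row player's. The sketch below assumes payoffs are given as nested lists; the function name `is_symmetric_game` and the negotiation payoffs are hypothetical.

```python
def is_symmetric_game(row_payoffs, col_payoffs):
    """Return True if col_payoffs[i][j] == row_payoffs[j][i] for all i, j."""
    n = len(row_payoffs)
    # Both players need the same strategy set, so both matrices must be n x n.
    square = (
        len(col_payoffs) == n
        and all(len(r) == n for r in row_payoffs)
        and all(len(r) == n for r in col_payoffs)
    )
    if not square:
        return False
    return all(
        col_payoffs[i][j] == row_payoffs[j][i]
        for i in range(n)
        for j in range(n)
    )


# Prisoner's Dilemma (strategies: cooperate, defect), a symmetric game.
pd_row = [[-1, -3], [0, -2]]
pd_col = [[-1, 0], [-3, -2]]

# Hypothetical negotiation in which each side values outcomes differently.
neg_row = [[3, 0], [1, 1]]
neg_col = [[1, 0], [2, 1]]

print(is_symmetric_game(pd_row, pd_col))
print(is_symmetric_game(neg_row, neg_col))
```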

Perfect vs. Imperfect Information

Another important categorization of games is based on the type of information available. A perfect information game refers to an environment in which each player can see the other player’s moves. Chess, again, is an example of a perfect information game. Many modern interactions are based on environments in which the moves from each player are hidden from other players and game theory classifies those scenarios as imperfect information games. From card games like poker to self-driving car scenarios, imperfect information games are all around us.

Cooperative vs. Non-Cooperative

A cooperative game environment is one in which the different participants can establish alliances in order to maximize the end result. Contractual negotiations are often modeled as cooperative games. Non-cooperative scenarios describe environments in which players are forbidden from forming alliances. Wars are the ultimate example of non-cooperative games.

Simultaneous vs. Sequential

A sequential game takes place in an environment in which each player has information about the other players' earlier actions. Board games are mostly sequential in nature. Simultaneous games represent scenarios in which the players act concurrently, without knowing what the others are choosing. Securities trading is an example of a simultaneous game.

Zero-Sum vs. Non-Zero-Sum

A zero-sum game refers to a scenario in which the gains of one player always translate into losses for other players. Board games are examples of zero-sum games. Non-zero-sum games are often encountered in scenarios in which multiple players can benefit from the actions of one player. Economic interactions in which multiple participants collaborate to increase the size of the market are an example of a non-zero-sum game.
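In matrix form, the zero-sum property can be checked directly: in every outcome, the two players' payoffs must add up to zero. This is a minimal sketch for two-player games; the function name `is_zero_sum` and the market payoffs are hypothetical.

```python
def is_zero_sum(row_payoffs, col_payoffs, tol=1e-9):
    """Return True if the two players' payoffs cancel out in every outcome."""
    return all(
        abs(a + b) <= tol
        for row_a, row_b in zip(row_payoffs, col_payoffs)
        for a, b in zip(row_a, row_b)
    )


# Matching pennies: whatever one player wins, the other loses.
pennies_row = [[1, -1], [-1, 1]]
pennies_col = [[-1, 1], [1, -1]]

# Hypothetical market-growing game: both players gain when both invest.
growth_row = [[3, 1], [2, 0]]
growth_col = [[3, 2], [1, 0]]

print(is_zero_sum(pennies_row, pennies_col))
print(is_zero_sum(growth_row, growth_col))
```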

Nash Equilibrium

Symmetric games rule the AI world, and most of them are based on one of the most famous mathematical theories of the past century: the Nash equilibrium.

The Nash equilibrium was named after John Forbes Nash, the American mathematician immortalized by Russell Crowe in the movie “A Beautiful Mind”. Essentially, a Nash equilibrium describes a situation in which each player has chosen a strategy and no player can benefit by changing strategies while the other players keep theirs unchanged.
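The definition translates almost directly into a brute-force check for small two-player games: an outcome is a pure-strategy Nash equilibrium if neither player can do better by deviating alone. The sketch below covers only pure strategies (mixed equilibria need other methods), and the function name `pure_nash_equilibria` is illustrative.

```python
from itertools import product


def pure_nash_equilibria(row_payoffs, col_payoffs):
    """List all (row action, column action) pairs with no profitable unilateral deviation."""
    n_rows, n_cols = len(row_payoffs), len(row_payoffs[0])
    equilibria = []
    for i, j in product(range(n_rows), range(n_cols)):
        # Row player cannot improve by switching rows while the column stays at j.
        row_ok = all(row_payoffs[i][j] >= row_payoffs[k][j] for k in range(n_rows))
        # Column player cannot improve by switching columns while the row stays at i.
        col_ok = all(col_payoffs[i][j] >= col_payoffs[i][l] for l in range(n_cols))
        if row_ok and col_ok:
            equilibria.append((i, j))
    return equilibria


# Prisoner's Dilemma, actions indexed 0 = cooperate, 1 = defect.
pd_row = [[-1, -3], [0, -2]]
pd_col = [[-1, 0], [-3, -2]]
print(pure_nash_equilibria(pd_row, pd_col))  # mutual defection is the only equilibrium

# Matching pennies has no pure-strategy equilibrium.
print(pure_nash_equilibria([[1, -1], [-1, 1]], [[-1, 1], [1, -1]]))
```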

The Nash equilibrium is a beautiful and incredibly powerful mathematical model for tackling many game theory problems, but it also falls short in many asymmetric game environments. For starters, the Nash method assumes that players have infinite computing power, which is rarely the case in real-world environments. Also, many Nash equilibrium models fail to account for the notion of risk, which is omnipresent in most asymmetric games such as economic markets. As a result, there are many asymmetric game scenarios that are hard to implement using the Nash equilibrium. This is particularly important in multi-agent AI systems that need to find the right balance between the mathematical elegance of the solution and the practicality of its implementation.

Novel Ideas in Game Theory that are Influencing Machine Learning

Multi-agent AI systems are one of the most fascinating areas of research in the AI ecosystem. Recent advancements in areas such as multi-agent reinforcement learning are pushing the boundaries of game theory, relying on some of the most sophisticated ideas in the field. Here are some examples of game theory sub-disciplines that are very present in modern machine learning.

Mean Field Games

Mean Field Games (MFG) are a relatively new area in the game theory space. MFG theory was developed only in 2006, in a series of independent papers published by Minyi Huang, Roland Malhamé and Peter Caines in Montreal, and by Jean-Michel Lasry and Fields medalist Pierre-Louis Lions in Paris. Conceptually, MFG comprises methods and techniques to study differential games with a large population of rational players. These agents have preferences not only about their own state (e.g., wealth, capital) but also about the distribution of the remaining individuals in the population. MFG theory studies generalized Nash equilibria for these systems.

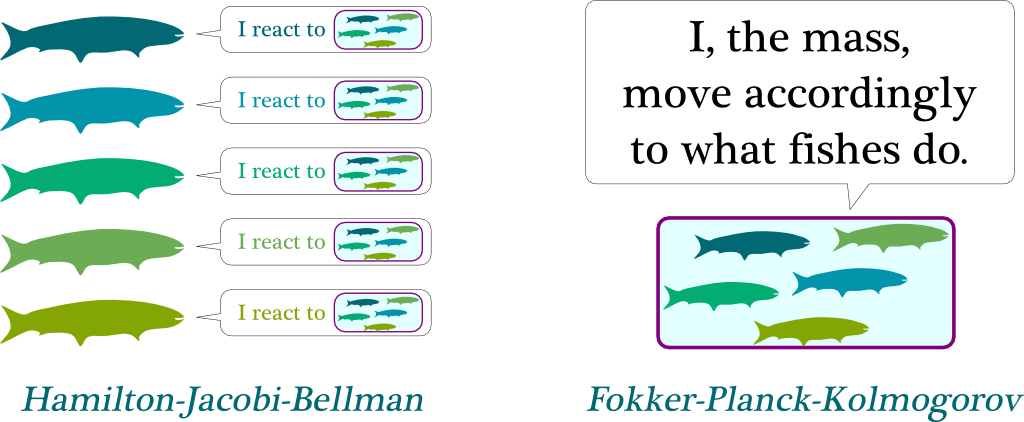

A classic example of MFG is how fish in a school swim in the same direction and in a coordinated manner. Theoretically, this phenomenon is really hard to explain, but it has its roots in the fact that a fish reacts to the behavior of the closest group. More specifically, each fish does not care about each of the other fish individually but, rather, it cares about how the fish nearby, as a mass, globally move. If we translate that into mathematical terms, the reaction of each fish to the mass is described by the Hamilton-Jacobi-Bellman equation. On the other hand, the aggregation of the actions of the fish, which determines the motion of the mass, corresponds to the Fokker-Planck-Kolmogorov equation. Mean-field game theory is the combination of these two equations.
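For readers who want to see the shape of that coupling, here is one commonly used form of the system, following the Lasry-Lions convention. The notation is an assumption, since the article does not fix any: u(t, x) is the value function of a representative agent, m(t, x) is the density of the population, H is the Hamiltonian, ν ≥ 0 is the noise intensity, f is the running cost that depends on the population, g is the terminal cost, and m_0 is the initial distribution.

```latex
\begin{aligned}
-\partial_t u - \nu \Delta u + H(x, \nabla u) &= f(x, m)
  && \text{(Hamilton-Jacobi-Bellman, solved backward in time)} \\
\partial_t m - \nu \Delta m - \operatorname{div}\!\big(m \, \nabla_p H(x, \nabla u)\big) &= 0
  && \text{(Fokker-Planck-Kolmogorov, solved forward in time)} \\
m(0, x) = m_0(x), \qquad u(T, x) &= g\big(x, m(T)\big)
\end{aligned}
```

The first equation gives each agent's optimal reaction to the population density m, and the second describes how the density moves when every agent follows that optimal reaction. A mean-field equilibrium is a pair (u, m) that satisfies both at once.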

Stochastic Games

Stochastic games date back to the 1950s and were introduced by the Nobel Prize-winning economist Lloyd Shapley. Conceptually, stochastic games are played by a finite number of players on a finite state space, and in each state, each player chooses one of finitely many actions; the resulting profile of actions determines a reward for each player and a probability distribution on successor states.
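The definition above maps naturally onto a lookup table: for every state and every profile of actions, store the rewards and a distribution over successor states. The sketch below is a toy two-player, two-state game in which every state name, action name and number is made up for illustration.

```python
import random

# Hypothetical game. Key: (state, action of player 1, action of player 2).
# Value: ((reward 1, reward 2), {successor state: probability}).
GAME = {
    ("calm", "share", "share"): ((2, 2), {"calm": 0.9, "tense": 0.1}),
    ("calm", "share", "grab"): ((0, 3), {"calm": 0.4, "tense": 0.6}),
    ("calm", "grab", "share"): ((3, 0), {"calm": 0.4, "tense": 0.6}),
    ("calm", "grab", "grab"): ((1, 1), {"calm": 0.1, "tense": 0.9}),
    ("tense", "share", "share"): ((1, 1), {"calm": 0.7, "tense": 0.3}),
    ("tense", "share", "grab"): ((-1, 2), {"calm": 0.2, "tense": 0.8}),
    ("tense", "grab", "share"): ((2, -1), {"calm": 0.2, "tense": 0.8}),
    ("tense", "grab", "grab"): ((-2, -2), {"calm": 0.0, "tense": 1.0}),
}


def step(state, actions, rng):
    """Play one stage: return each player's reward and the sampled next state."""
    rewards, transition = GAME[(state, *actions)]
    successors = list(transition)
    weights = [transition[s] for s in successors]
    next_state = rng.choices(successors, weights=weights)[0]
    return rewards, next_state


# Roll the game forward for a few stages with fixed (non-strategic) actions.
rng = random.Random(0)
state = "calm"
for _ in range(5):
    rewards, state = step(state, ("share", "grab"), rng)
    print(rewards, state)
```

A learning agent would replace the fixed action pair with a policy that maps the current state to an action, which is the setting studied in multi-agent reinforcement learning.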

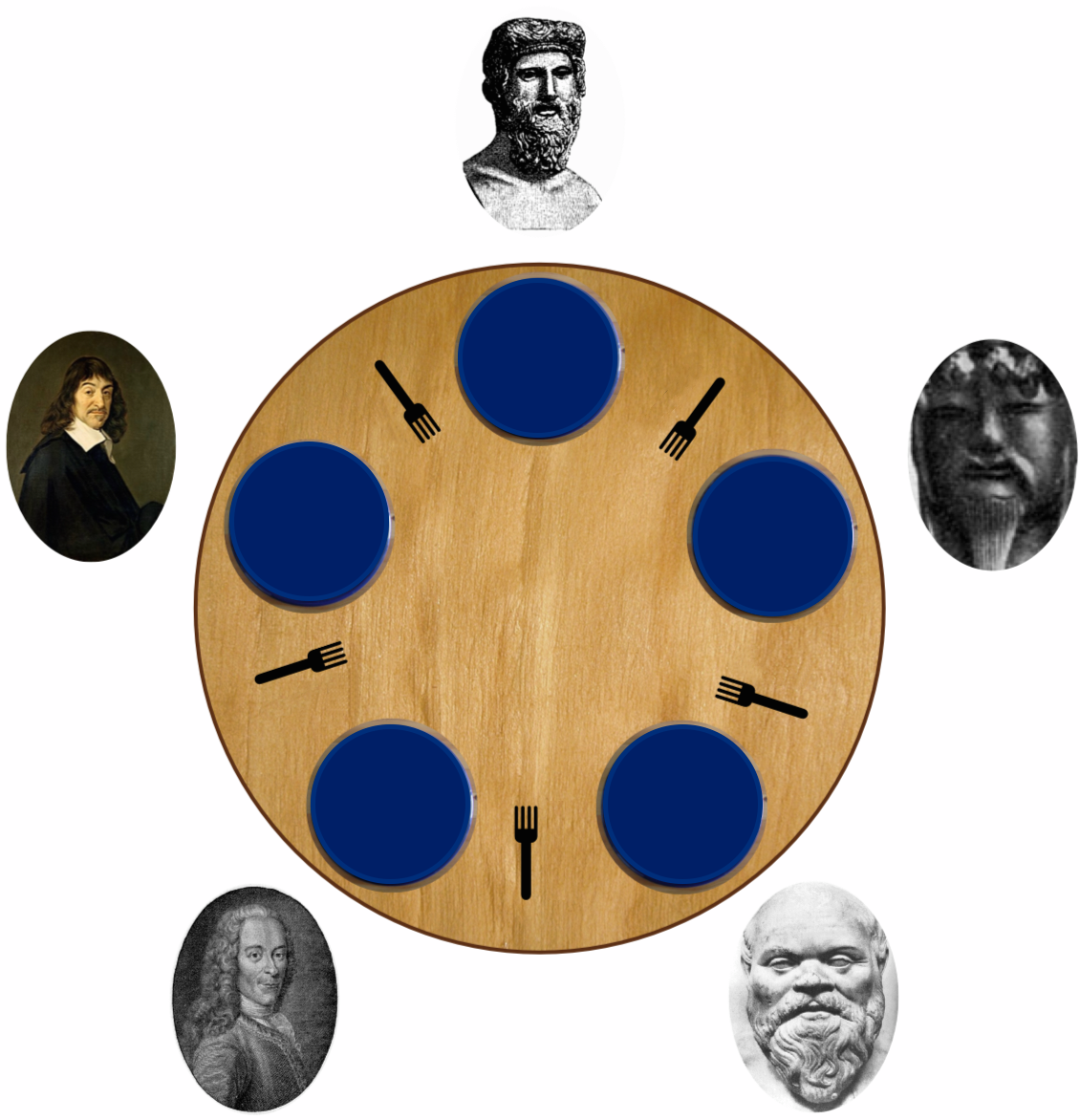

A classic form of stochastic games is the dining philosophers problem, in which there are n + 1 philosophers (n ≥ 1) sitting at a round table with a bowl of rice in the middle. Between any two philosophers who sit next to each other lies a chopstick, which can be accessed by both of them. Since the table is round, there are as many chopsticks as there are philosophers. To eat from the bowl, a philosopher needs to acquire both of the chopsticks he has access to. Hence, if one philosopher eats, then his two neighbors cannot eat at the same time. The life of a philosopher is rather simple and consists of thinking and eating; to survive, a philosopher needs to think and eat again and again. The task is to design a protocol that allows all of the philosophers to survive.
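One well-known protocol, offered here as an illustration and not as the solution the stochastic-games literature derives, is to number the chopsticks and have every philosopher always pick up the lower-numbered one first. That ordering rules out the circular wait that causes deadlock. The sketch uses Python threads and locks; the function name `dine` and its parameters are hypothetical.

```python
import threading


def dine(num_philosophers=5, meals_each=3):
    """Run the dinner and return how many meals each philosopher ate."""
    if num_philosophers < 2:
        raise ValueError("the problem needs at least two philosophers")
    chopsticks = [threading.Lock() for _ in range(num_philosophers)]
    meals = [0] * num_philosophers

    def philosopher(i):
        left, right = i, (i + 1) % num_philosophers
        # Always take the lower-numbered chopstick first to avoid deadlock.
        first, second = min(left, right), max(left, right)
        for _ in range(meals_each):
            with chopsticks[first]:
                with chopsticks[second]:
                    meals[i] += 1  # eat; only thread i writes to meals[i]
            # thinking happens here, with both chopsticks released

    threads = [
        threading.Thread(target=philosopher, args=(i,))
        for i in range(num_philosophers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return meals


print(dine())
```

This ordering guarantees that the dinner never deadlocks. It does not by itself guarantee fairness over an endless dinner, which is where the probabilistic and game-theoretic treatments of the problem come in.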

Evolutionary Games

Evolutionary Game Theory (EGT) draws inspiration from the Darwinian theory of evolution. The origins of EGT can be traced back to 1973 with John Maynard Smith and George R. Price’s formalization of contests, analyzed as strategies, and the mathematical criteria that can be used to predict the results of competing strategies. Conceptually, EGT is the application of game theory concepts to situations in which a population of agents with diverse strategies interact over time to create a stable solution, through an evolutionary process of selection and duplication. The main idea behind EGT is that many behaviors involve the interaction of multiple agents in a population, and the success of any one of these agents depends on how its strategy interacts with that of others. While classical game theory has focused on static strategies, that is, strategies that do not change over time, evolutionary game theory focuses on how strategies evolve over time and which kinds of dynamic strategies are most successful in this evolutionary process.

A classic example of EGT is the Hawk-Dove game, which models a contest between a hawk and a dove over a shareable resource. In the game, each contestant follows exactly one of the two strategies described below:

- Hawk: Initiate aggressive behavior, not stopping until injured or until one’s opponent backs down.

- Dove: Retreat immediately if one’s opponent initiates aggressive behavior.

If we assume that (1) whenever two individuals both initiate aggressive behavior, conflict eventually results and the two individuals are equally likely to be injured, (2) the cost of the conflict reduces individual fitness by some constant value C, (3) when a Hawk meets a Dove, the Dove immediately retreats and the Hawk obtains the resource, and (4) when two Doves meet, the resource is shared equally between them, then the fitness payoffs for the Hawk-Dove game can be summarized in the following matrix. Writing V for the value of the resource, each entry is the payoff to the row strategy in the standard formulation of the game:

| | Hawk | Dove |
|---|---|---|
| Hawk | (V - C)/2 | V |
| Dove | 0 | V/2 |
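To see what these assumptions imply, the sketch below encodes the standard Hawk-Dove payoffs and computes the share of Hawks at which both strategies do equally well. The symbol V for the value of the resource is an assumption (the text only names the cost C), and the function names are illustrative. In this formulation a Hawk meeting a Hawk expects (V - C)/2, a Hawk meeting a Dove gets V, a Dove meeting a Hawk gets 0, and two Doves get V/2 each.

```python
def hawk_dove_payoffs(V, C):
    """Payoff to the first strategy when it meets the second one."""
    return {
        ("hawk", "hawk"): (V - C) / 2,  # win V or pay C with equal probability
        ("hawk", "dove"): V,            # the dove retreats
        ("dove", "hawk"): 0.0,          # retreat, nothing gained or lost
        ("dove", "dove"): V / 2,        # share the resource
    }


def expected_fitness(strategy, hawk_share, V, C):
    """Average payoff of a strategy in a population with the given share of hawks."""
    p = hawk_dove_payoffs(V, C)
    return hawk_share * p[(strategy, "hawk")] + (1 - hawk_share) * p[(strategy, "dove")]


def stable_hawk_share(V, C):
    """Share of hawks at which neither strategy has an advantage (assumes C > 0).

    Setting the two expected fitness values equal gives hawk_share = V / C.
    If the resource is worth at least as much as the fight costs, hawks take over.
    """
    return min(1.0, V / C)


V, C = 2, 4
share = stable_hawk_share(V, C)
print(share)
print(expected_fitness("hawk", share, V, C), expected_fitness("dove", share, V, C))
```

When the cost of fighting exceeds the value of the resource, the population settles on a mix of the two strategies instead of a single winner, which is the kind of outcome evolutionary game theory is designed to predict.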

Inverse Game Theory

In many cases, the problem is not to optimize a participant’s strategy in a game but to design a game around the behavior of rational participants. This is the role of inverse game theory. Auctions are considered one of the main examples of inverse game theory.
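A sealed-bid second-price (Vickrey) auction is a standard illustration of this idea: the highest bidder wins but pays the second-highest bid, and that rule makes bidding one's true value a safe strategy. The sketch below is a minimal version with hypothetical bidders and values; ties are broken in favor of the bidder listed first.

```python
def second_price_auction(bids):
    """bids maps bidder name to bid. Return (winner, price paid)."""
    ranked = sorted(bids.items(), key=lambda item: item[1], reverse=True)
    winner = ranked[0][0]
    # The winner pays the second-highest bid (or 0 if there is no other bidder).
    price = ranked[1][1] if len(ranked) > 1 else 0
    return winner, price


def utility(bidder, true_value, bids):
    """Payoff of a bidder: value minus price if it wins, otherwise 0."""
    winner, price = second_price_auction(bids)
    return true_value - price if winner == bidder else 0


others = {"bob": 7, "carol": 4}
alice_value = 10

# Alice tries bidding below, at, and above her true value.
for alice_bid in (6, 10, 20):
    bids = {"alice": alice_bid, **others}
    print(alice_bid, second_price_auction(bids), utility("alice", alice_value, bids))
```

Underbidding can cost Alice the item, and overbidding never improves her payoff, so the designer of the game has shaped the incentives so that honest bidding is in each participant's interest.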

Game theory is experiencing a renaissance driven by the evolution of AI and multi-agent systems. The principles of game theory that were formulated by computer science legends like Alan Turing and John von Neumann are now at the center of some of the most intelligent systems on the planet, and recent advancements in AI are helping to push research on game theory forward as well. As AI continues evolving, we should see new and more novel ideas from the field of game theory find their way into mainstream deep learning systems.

Original. Reposted with permission.
