How to Process a DataFrame with Millions of Rows in Seconds

TL;DR: Process it with a new Python Data Processing Engine in the Cloud.

Photo by Jason Blackeye on Unsplash

Data Science is having its renaissance moment. It's hard to keep track of all the new Data Science tools that have the potential to change the way Data Science gets done.

I learned about this new Data Processing Engine only recently in a conversation with a colleague, also a Data Scientist. We had a discussion about Big Data processing, which is at the forefront of innovation in the field, and this new tool popped up.

While pandas is the de facto tool for data processing in Python, it doesn’t handle big data well. With bigger datasets, you’ll get an out-of-memory exception sooner or later.

Researchers ran into this issue a long time ago, which prompted the development of tools like Dask and Spark. These try to overcome the “single machine” constraint by distributing processing across multiple machines.

This active area of innovation also brought us tools like Vaex, which try to solve this issue by making processing on a single machine more memory efficient.

And it doesn’t end there. There is another tool for big data processing you should know about …

Meet Terality

Photo by frank mckenna on Unsplash

Terality is a Serverless Data Processing Engine that processes the data in the Cloud. There is no need to manage infrastructure as Terality takes care of scaling compute resources. Its target audiences are Engineers and Data Scientists.

I exchanged a few emails with the Terality team as I was interested in the tool they’ve developed. They answered swiftly. These were my questions to the team:

My n-th email to the Terality team (screenshot by author)

What are the main steps of data processing with Terality?

- Terality comes with a Python client that you import into a Jupyter Notebook.

- Then you write the code in “a pandas way” and Terality securely uploads your data and takes care of distributed processing (and scaling) to run your analysis.

- After processing is completed, you can convert the data back to a regular pandas DataFrame and continue with analysis locally.

What’s happening behind the scenes?

The Terality team developed a proprietary data processing engine; it’s not a fork of Spark or Dask.

According to the team, the goal was to avoid the shortcomings of Dask: it doesn’t have the same syntax as pandas, it’s asynchronous, it doesn’t cover all pandas functions, and it doesn’t support auto-scaling.

Terality’s Data Processing Engine solves these issues.

Is Terality FREE to use?

Terality has a free plan with which you can process up to 500 GB of data per month. It also offers a paid plan for companies and individuals with greater requirements.

In this article, we’ll focus on the free plan as it’s applicable to many Data Scientists.

How does Terality calculate data usage? (From Terality’s documentation)

Consider a dataset with a total size of 15GB in memory, as would be returned by the operation df.memory_usage(deep=True).sum().

Running one (1) operation on this dataset, such as a .sum or a .sort_values, would consume 15GB of processed data in Terality.

The billable usage is only recorded when task runs enter a Success state.
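As a rough illustration of that accounting, the sketch below measures a DataFrame's in-memory size with the call the documentation mentions and estimates how many single operations on a 15 GB dataset would fit into the 500 GB free plan. The helper names are hypothetical, the 1 GB = 10^9 bytes convention is an assumption, and the model (every successful operation bills the full dataset size) is a simplification of the excerpt above, not Terality's actual billing logic.

```python
import pandas as pd

FREE_PLAN_GB = 500  # monthly free allowance quoted in this article


def in_memory_gb(df: pd.DataFrame) -> float:
    """In-memory size of a DataFrame in GB (assuming 1 GB = 1e9 bytes)."""
    return df.memory_usage(deep=True).sum() / 1e9


def operations_within_allowance(dataset_gb: float,
                                allowance_gb: float = FREE_PLAN_GB) -> int:
    """Number of whole operations that fit in the allowance,
    assuming each operation bills the full dataset size."""
    if dataset_gb <= 0:
        raise ValueError("dataset_gb must be positive")
    return int(allowance_gb // dataset_gb)


# Tiny stand-in DataFrame; a real dataset would be far larger.
df = pd.DataFrame({"col1": [1, 2, 2], "col2": [4, 5, 6]})
print(f"in-memory size: {in_memory_gb(df):.9f} GB")

# The 15 GB example from the documentation excerpt.
print(operations_within_allowance(15))  # 500 // 15 -> 33
```

Under these assumptions, a month of the free plan covers about 33 operations on a 15 GB dataset, so it pays to aggregate early and continue on the smaller result.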

What about Data Privacy?

When a user performs a read operation, the Terality client copies the dataset to Terality’s secured cloud storage on Amazon S3.

Terality has a strict policy around data privacy and protection. They guarantee that they will not use your data and that they process it securely.

Terality is not a storage solution. Your data is deleted at most 3 days after the Terality client session is closed.

Terality processing currently occurs on AWS in the Frankfurt region.

See the security section for more information.

Does the data need to be public?

No!

The user needs to have access to the dataset on their local machine, and Terality will handle the uploading process behind the scenes.

The upload operation is also parallelized, so it is faster.

Can Terality process Big Data?

At the moment, in November 2021, Terality is still in beta. It’s optimized for datasets up to 100–200 GB.

I asked the team whether they plan to increase this limit, and they said they will soon start optimizing for terabytes.

Let’s take it for a test drive

Photo by Eugene Chystiakov on Unsplash

I was surprised that you can simply swap the pandas import statement for Terality’s package, as a drop-in replacement, and rerun your analysis.

Note that once you import Terality’s Python client, data processing is no longer performed on your local machine but by Terality’s Data Processing Engine in the Cloud.

Now, let’s install Terality and try it in practice…

Setup

You can install Terality by simply running:

pip install --upgrade terality

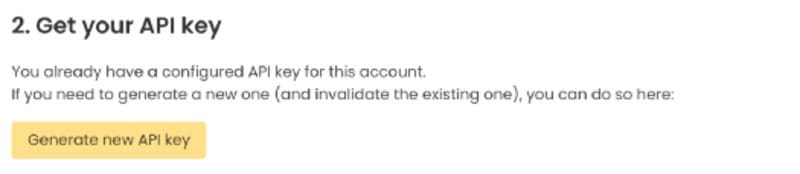

Then you create a free account on Terality and generate an API key:

Generate new API key on Terality (screenshot by author)

The last step is to enter your API key (also replace the email with your email):

terality account configure --email your@email.com

Let’s start small…

Now that we have Terality installed, we can run a small example to get familiar with it.

In practice, you get the best of both worlds by using Terality and pandas together: one to aggregate the data and the other to analyze the aggregate locally.

The code below creates a terality.DataFrame from a pandas.DataFrame:

import pandas as pd
import terality as te

df_pd = pd.DataFrame({"col1": [1, 2, 2], "col2": [4, 5, 6]})
df_te = te.DataFrame.from_pandas(df_pd)

Now that the data is in Terality’s Cloud, we can proceed with the analysis:

df_te.col1.value_counts()

Filtering and other familiar pandas operations work the same way:

df_te[(df_te["col1"] >= 2)]

Once we finish with the analysis, we can convert it back to a pandas DataFrame with:

df_pd_roundtrip = df_te.to_pandas()

We can validate that the DataFrames are equal:

pd.testing.assert_frame_equal(df_pd, df_pd_roundtrip)
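The aggregate-then-analyze pattern mentioned earlier can be sketched as follows. This is a minimal illustration written against plain pandas so that it runs anywhere; the column names and both helper functions are hypothetical. With a terality.DataFrame, the heavy aggregation would run in the cloud and `to_pandas()` would bring the small result back, assuming the groupby call is supported there as it is in pandas.

```python
import pandas as pd


def to_local(frame):
    """Return a local pandas object.

    Calls .to_pandas() when the frame provides it (as a Terality
    DataFrame does in the example above); plain pandas objects
    are returned unchanged.
    """
    return frame.to_pandas() if hasattr(frame, "to_pandas") else frame


def comments_per_author(df):
    """Heavy step: reduce many rows to one row per author."""
    return df.groupby("author")["score"].agg(["count", "mean"])


# Small stand-in for a large comments table (hypothetical columns).
df = pd.DataFrame({
    "author": ["a", "b", "a", "c", "b", "a"],
    "score": [1, 5, 3, 2, 7, 8],
})

# Step 1: aggregate (this is the part worth offloading for big data).
summary = to_local(comments_per_author(df))

# Step 2: explore the small aggregate locally with regular pandas.
top = summary.sort_values("mean", ascending=False).head(2)
print(top)
```

Only the aggregate crosses back to the local machine, so the local step stays cheap no matter how large the original table is.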

Let’s go Big…

I would suggest you check Terality’s Quick Start Jupyter Notebook, which takes you through an analysis of a 40 GB dataset of Reddit comments. They also have a tutorial with a smaller 5 GB dataset of Reddit comments.

I clicked through Terality’s Jupyter Notebook and processed the 40 GB dataset. It read the data in 45 seconds and needed 35 seconds to sort it. The merge with another table took 1 minute and 17 seconds. It felt like I was processing a much smaller dataset on my laptop.
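To collect timings like these for your own workload, a small timer around each step is enough. The sketch below uses plain pandas on toy data; the `timed` helper, the `timings` dictionary, and the column names are hypothetical and are not part of Terality's notebook.

```python
import time
from contextlib import contextmanager

import pandas as pd

timings = {}  # step label -> elapsed seconds


@contextmanager
def timed(label):
    """Record the wall-clock duration of the enclosed block."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[label] = time.perf_counter() - start


# Toy tables standing in for the large dataset and the table it is merged with.
left = pd.DataFrame({"key": [3, 1, 2], "value": [30, 10, 20]})
right = pd.DataFrame({"key": [1, 2, 3], "label": ["a", "b", "c"]})

with timed("sort"):
    sorted_left = left.sort_values("key")

with timed("merge"):
    merged = sorted_left.merge(right, on="key")

for step, seconds in timings.items():
    print(f"{step}: {seconds:.6f} s")
```

Measuring wall-clock time rather than CPU time matters here, because with a cloud engine the upload and network round trips are part of what you wait for.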

Then I tried loading the same 40GB dataset with pandas on my laptop with 16 GB of main memory — it returned an out-of-memory exception.
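Before attempting such a load, you can get a rough idea of whether a file will fit in memory by reading only its first rows and extrapolating. The sketch below is one way to do that with pandas, under several assumptions: the helper name is hypothetical, the total row count must be known or estimated separately, the sample is assumed to be representative, and 1 GB is taken as 10^9 bytes.

```python
import io

import pandas as pd

RAM_BYTES = 16 * 10**9  # the 16 GB laptop from the article (1 GB = 1e9 bytes)


def estimate_in_memory_bytes(csv_source, total_rows, sample_rows=1_000):
    """Extrapolate the in-memory size of a CSV from its first rows."""
    sample = pd.read_csv(csv_source, nrows=sample_rows)
    if sample.empty:
        return 0.0
    # Average bytes per row in the sample, ignoring the index.
    bytes_per_row = sample.memory_usage(deep=True, index=False).sum() / len(sample)
    return float(bytes_per_row * total_rows)


# Tiny in-memory CSV standing in for the first rows of a large file.
csv_text = "author,score\n" + "\n".join(f"user{i},{i}" for i in range(100))

estimate = estimate_in_memory_bytes(io.StringIO(csv_text), total_rows=1_000_000_000)
fits = estimate < RAM_BYTES
print(f"estimated size: {estimate / 1e9:.1f} GB, fits in RAM: {fits}")
```

The estimate is only a lower bound on what pandas needs, since many operations create temporary copies of the data.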


Conclusion

Photo by Sven Scheuermeier on Unsplash

I played with Terality quite a bit and ran into no major issues. This surprised me, as they are officially still in beta. Another great sign is that their support team is really responsive.

I see a great use case for Terality when you have a big dataset that you can’t process on your local machine, whether because of memory constraints or processing speed.

Using Dask (or Spark) would require spinning up a cluster, which would likely cost more than using Terality to complete your analysis.

Also, configuring such a cluster is a cumbersome process, while with Terality you only need to change the import statement.

Another thing that I like is that I can use it in my local JupyterLab, where I already have my extensions, configurations, dark mode, etc.

I’m looking forward to the progress that the team makes with Terality in the coming months.

Roman Orac is a Machine Learning engineer with notable successes in improving systems for document classification and item recommendation. Roman has experience with managing teams, mentoring beginners and explaining complex concepts to non-engineers.

Original. Reposted with permission.