Hyperparameter Tuning Using Grid Search and Random Search in Python

A comprehensive guide on optimizing model hyperparameters with Scikit-Learn.


Introduction

Most machine learning models have a set of hyperparameters: arguments that the practitioner sets before training, rather than values the model learns from the data.

For example, Scikit-Learn's logistic regression model offers several solvers, which are the optimization algorithms used to find the model's coefficients. Each solver uses a different algorithm, and none of them is strictly better than the others. It is difficult to tell which solver will perform best on your dataset unless you try them.
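To make the solver comparison concrete, here is a minimal sketch that scores several of Scikit-Learn's LogisticRegression solvers with 5-fold cross-validation. It assumes a synthetic dataset from make_classification rather than the wine data used later, so the scores it prints say nothing about which solver suits your own data.

```python
# A minimal sketch: compare LogisticRegression solvers with cross-validation.
# Assumption: a synthetic dataset from make_classification, not the wine data.
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

X_demo, y_demo = make_classification(n_samples=500, n_features=10, random_state=0)

solver_scores = {}
for solver in ["lbfgs", "liblinear", "newton-cg", "sag", "saga"]:
    # max_iter is raised so the iterative solvers have room to converge
    model = LogisticRegression(solver=solver, max_iter=5000)
    scores = cross_val_score(model, X_demo, y_demo, cv=5, scoring="accuracy")
    solver_scores[solver] = scores.mean()  # mean accuracy over the 5 folds

for solver, score in solver_scores.items():
    print(f"{solver}: {score:.3f}")
```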

There is no universally best set of hyperparameters; the best values differ from one dataset to another. Scikit-Learn provides default hyperparameter values for every model, and these often perform reasonably well, but they are not necessarily the best choice for every problem.

In practice, the best hyperparameters for your dataset are found by trial and error, which is the main concept behind hyperparameter optimization.

In simple words, hyperparameter optimization is a technique that searches through a range of candidate values to find the combination that achieves the best performance on a given dataset.

There are two popular techniques used to perform hyperparameter optimization: grid search and random search.

Grid Search

When performing hyperparameter optimization, we first need to define a parameter space or parameter grid, where we include a set of possible hyperparameter values that can be used to build the model.

Grid search then builds every possible combination of these values (the Cartesian product of the candidate lists), and a model is trained and evaluated on each combination.

The model with the best performance is then selected.
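The enumerate-then-select loop can be sketched in plain Python with itertools.product. The two-parameter grid and the toy_score function below are hypothetical: toy_score stands in for "train a model with these settings and measure its validation accuracy", which is what Scikit-Learn's GridSearchCV does for real.

```python
# A minimal sketch of exhaustive grid search in plain Python.
# The grid and toy_score are hypothetical, for illustration only.
from itertools import product

param_grid = {
    "max_depth": [3, 5, None],
    "n_estimators": [10, 100],
}

def toy_score(params):
    # Hypothetical stand-in for training a model and measuring its accuracy
    depth = params["max_depth"] if params["max_depth"] is not None else 20
    return params["n_estimators"] / 100 - abs(depth - 5) / 10

# Cartesian product of the candidate lists: 3 * 2 = 6 combinations
names = list(param_grid)
combinations = [dict(zip(names, values)) for values in product(*param_grid.values())]

# Evaluate every combination and keep the best-scoring one
best = max(combinations, key=toy_score)
print(len(combinations), best)
```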

Random Search

While grid search looks at every possible combination of hyperparameters to find the best model, random search only selects and tests a random subset of those combinations.

This technique randomly samples hyperparameter values from the parameter space (lists of values or statistical distributions) instead of conducting an exhaustive search.

We can specify the total number of combinations random search should try before returning the best model.
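Random search can be sketched the same way, again assuming a hypothetical toy_score in place of real training. Instead of visiting every combination, it draws n_iter combinations at random (here with replacement, so a combination can repeat) and keeps the best one:

```python
# A minimal sketch of random search in plain Python.
# The grid and toy_score are hypothetical, for illustration only.
import random

param_grid = {
    "max_depth": [3, 5, 10, None],
    "n_estimators": [10, 100, 200],
    "min_samples_leaf": [1, 2, 3],
}

def toy_score(params):
    # Hypothetical stand-in for training a model and measuring its accuracy
    depth = params["max_depth"] if params["max_depth"] is not None else 20
    return (params["n_estimators"] / 200
            - abs(depth - 5) / 10
            - params["min_samples_leaf"] / 100)

rng = random.Random(42)  # fixed seed so the sampled combinations are reproducible
n_iter = 8               # number of combinations to try (analogous to n_iter in Scikit-Learn)

# Sample one value per hyperparameter, n_iter times (with replacement)
sampled = [
    {name: rng.choice(values) for name, values in param_grid.items()}
    for _ in range(n_iter)
]
best = max(sampled, key=toy_score)
print(len(sampled), "of", 4 * 3 * 3, "combinations tried; best:", best)
```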

Now that you have a basic understanding of how random search and grid search work, I will show you how to implement these techniques using the Scikit-Learn library.

Optimizing a Random Forest Classifier Using Grid Search and Random Search

Step 1: Loading the Dataset

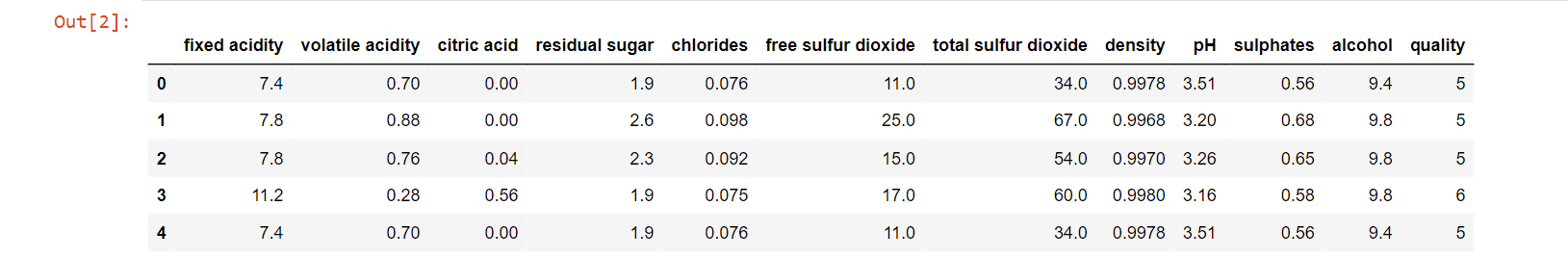

Download the Wine Quality dataset from Kaggle and run the following lines of code to read it using the Pandas library:

import pandas as pd

df = pd.read_csv('winequality-red.csv')

df.head()

Calling df.head() displays the first five rows of the dataframe.

Step 2: Data Preprocessing

The target variable “quality” contains integer scores. The scale runs from 0 to 10, although the red wine data only contains values from 3 to 8.

We will turn this into a binary classification task by assigning a value of 0 to all data points with a quality value of less than or equal to 5, and a value of 1 to the remaining observations:

import numpy as np

df['target'] = np.where(df['quality'] > 5, 1, 0)
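Because the comparison is strictly greater than 5, a score of exactly 5 maps to 0 and a score of 6 maps to 1. A quick check on a few hypothetical quality values (not rows from the real dataset):

```python
import numpy as np
import pandas as pd

# Hypothetical quality scores standing in for the wine data
df_demo = pd.DataFrame({"quality": [3, 5, 6, 8]})

# 1 if quality > 5, otherwise 0
df_demo["target"] = np.where(df_demo["quality"] > 5, 1, 0)
print(df_demo["target"].tolist())  # [0, 0, 1, 1]
```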

Let’s split the dependent and independent variables in this dataframe:

df2 = df.drop(['quality'], axis=1)
X = df2.drop(['target'], axis=1)
y = df2['target']  # a 1-D Series, the shape Scikit-Learn expects for y
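Scikit-Learn expects y as a 1-D array. Selecting a column with single brackets returns a Series, while double brackets return a one-column DataFrame, which makes RandomForestClassifier emit a DataConversionWarning when fitted. The toy dataframe below is hypothetical and only illustrates the two shapes:

```python
import pandas as pd

# Hypothetical two-row dataframe with one feature, the original label, and the target
df_demo = pd.DataFrame({"alcohol": [9.4, 9.8], "quality": [5, 6], "target": [0, 1]})

X_demo = df_demo.drop(columns=["quality", "target"])  # features only
y_frame = df_demo[["target"]]   # double brackets: a one-column DataFrame
y_series = df_demo["target"]    # single brackets: a 1-D Series

print(y_frame.shape, y_series.shape)  # (2, 1) (2,)
```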

Step 3: Building the Model

Now, let’s instantiate a random forest classifier. We will tune the hyperparameters of this model to find the best-performing configuration for our dataset:

from sklearn.ensemble import RandomForestClassifier

rf = RandomForestClassifier()

Step 4: Implementing Grid Search with Scikit-Learn

Defining the Hyperparameter Space

We will now try adjusting the following set of hyperparameters of this model:

- “max_depth”: The maximum depth of each tree in the random forest. A deeper tree captures more information about the training data, but is more likely to overfit and generalize poorly to test data. By default, this value is set to None in Scikit-Learn, which means that nodes are expanded until all leaves are pure or contain fewer than min_samples_split samples.

- “max_features”: The number of features the random forest considers when looking for the best split at each node. By default in Scikit-Learn's RandomForestClassifier, this is the square root of the total number of features in the dataset.

- “n_estimators”: The number of decision trees in the forest. The default in Scikit-Learn is 100 (it was 10 before version 0.22).

- “min_samples_leaf”: The minimum number of samples required to be at a leaf node of each tree. The default value is 1 in Scikit-Learn.

- “min_samples_split”: The minimum number of samples required to split an internal node of each tree. The default value is 2 in Scikit-Learn, and an integer value must be at least 2.
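Default values can change between Scikit-Learn releases, so it is worth checking them in your installed version. A minimal sketch using get_params(), which every Scikit-Learn estimator provides:

```python
from sklearn.ensemble import RandomForestClassifier

# Read the default hyperparameters of an untouched estimator
defaults = RandomForestClassifier().get_params()

for name in ["max_depth", "max_features", "n_estimators",
             "min_samples_leaf", "min_samples_split"]:
    print(name, "=", defaults[name])
```

The exact value printed for max_features depends on the version (recent releases show 'sqrt').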

We will now create a dictionary of multiple possible values for all the above hyperparameters. This is also called the hyperparameter space, and will be searched through to find the best combination of arguments:

grid_space = {'max_depth': [3, 5, 10, None],
              'n_estimators': [10, 100, 200],
              'max_features': [1, 3, 5, 7],
              'min_samples_leaf': [1, 2, 3],
              'min_samples_split': [2, 3, 4]  # integer values must be at least 2
              }
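Because grid search trains one model per combination and per fold, this grid adds up quickly. This sketch counts the candidates with Scikit-Learn's ParameterGrid, using the grid above with min_samples_split starting at 2 (the smallest integer value Scikit-Learn accepts); the count only depends on how many values each list holds:

```python
from sklearn.model_selection import ParameterGrid

grid_space = {'max_depth': [3, 5, 10, None],
              'n_estimators': [10, 100, 200],
              'max_features': [1, 3, 5, 7],
              'min_samples_leaf': [1, 2, 3],
              'min_samples_split': [2, 3, 4]}

# 4 * 3 * 4 * 3 * 3 combinations
n_candidates = len(ParameterGrid(grid_space))
n_folds = 3  # matches cv=3 in the GridSearchCV call
print(n_candidates, n_candidates * n_folds)  # 432 1296
```

With refit=True (the default), GridSearchCV also trains one final model on the full data using the best combination, so this search performs 1,297 fits in total.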

Running Grid Search

Now, we need to perform the search to find the best hyperparameter combination for the model:

from sklearn.model_selection import GridSearchCV

grid = GridSearchCV(rf, param_grid=grid_space, cv=3, scoring='accuracy')
model_grid = grid.fit(X, y)

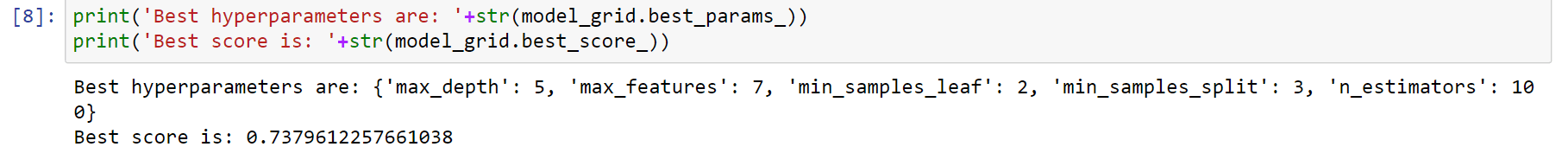

Evaluating Model Results

Finally, let’s print out the best mean cross-validated accuracy, along with the set of hyperparameters that yielded this score:

print('Best hyperparameters are: '+str(model_grid.best_params_))

print('Best score is: '+str(model_grid.best_score_))

The best model achieved a mean cross-validated accuracy of approximately 0.74, and its hyperparameters are listed in the printed best_params_ dictionary.
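Besides best_params_ and best_score_, the fitted search object stores per-candidate results in cv_results_ and the refitted best model in best_estimator_. The sketch below assumes a small synthetic dataset and a tiny grid so that it runs in seconds; it is not a reproduction of the wine results:

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Assumption: synthetic data instead of the wine dataset
X_demo, y_demo = make_classification(n_samples=300, n_features=8, random_state=0)

small_grid = {"max_depth": [3, None], "n_estimators": [50, 100]}
search = GridSearchCV(RandomForestClassifier(random_state=0), param_grid=small_grid,
                      cv=3, scoring="accuracy")
search.fit(X_demo, y_demo)

# One row per candidate, ranked by mean cross-validated accuracy
results = pd.DataFrame(search.cv_results_)
summary = results[["params", "mean_test_score", "std_test_score", "rank_test_score"]]
print(summary.sort_values("rank_test_score"))

# best_estimator_ was refit on all of X_demo because refit=True by default
best_rf = search.best_estimator_
print(best_rf.predict(X_demo[:5]))
```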

Now, let’s use random search on the same dataset to see if we get similar results.

Step 5: Implementing Random Search Using Scikit-Learn

Defining the Hyperparameter Space

Now, let’s define the hyperparameter space to implement random search. This parameter space can have a bigger range of values than the one we built for grid search, since random search does not try out every single combination of hyperparameters.

Because it samples hyperparameters at random, random search can explore a much wider range of values than grid search for the same computational budget.

from scipy.stats import randint

rs_space = {'max_depth': list(np.arange(10, 100, step=10)) + [None],
            'n_estimators': np.arange(10, 500, step=50),
            'max_features': randint(1, 7),
            'criterion': ['gini', 'entropy'],
            'min_samples_leaf': randint(1, 4),
            'min_samples_split': np.arange(2, 10, step=2)
            }
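Note that scipy.stats.randint(low, high) excludes high, so randint(1, 7) draws max_features values from 1 to 6 and randint(1, 4) draws min_samples_leaf from 1 to 3. Scikit-Learn's ParameterSampler, which RandomizedSearchCV uses to generate its candidates, lets you preview what a search would try. A sketch with the same space as above:

```python
import numpy as np
from scipy.stats import randint
from sklearn.model_selection import ParameterSampler

rs_space = {'max_depth': list(np.arange(10, 100, step=10)) + [None],
            'n_estimators': np.arange(10, 500, step=50),
            'max_features': randint(1, 7),
            'criterion': ['gini', 'entropy'],
            'min_samples_leaf': randint(1, 4),
            'min_samples_split': np.arange(2, 10, step=2)}

# randint's upper bound is exclusive: these draws fall between 1 and 6
draws = randint(1, 7).rvs(size=1000, random_state=0)
print(draws.min(), draws.max())

# Preview five candidate combinations the random search could evaluate
samples = list(ParameterSampler(rs_space, n_iter=5, random_state=0))
for params in samples:
    print(params)
```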

Running Random Search

Run the following lines of code to perform random search on the model. Note that we have specified n_iter=500, which means that random search will evaluate 500 randomly sampled hyperparameter combinations (each with 3-fold cross-validation) before choosing the best one. You can experiment with different numbers of iterations: more iterations increase the chance of finding a strong combination, but take longer to run.

from sklearn.model_selection import RandomizedSearchCV

rf = RandomForestClassifier()
rf_random = RandomizedSearchCV(rf, rs_space, n_iter=500, scoring='accuracy', n_jobs=-1, cv=3)
model_random = rf_random.fit(X, y)
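To put n_iter=500 in perspective, this sketch counts how many distinct combinations rs_space contains (treating each randint as its discrete range) and compares the number of fits with an exhaustive search at cv=3:

```python
import numpy as np

# Number of distinct values each entry of rs_space can take
space_sizes = {
    "max_depth": len(list(np.arange(10, 100, step=10)) + [None]),  # 9 depths + None
    "n_estimators": len(np.arange(10, 500, step=50)),              # 10, 60, ..., 460
    "max_features": len(range(1, 7)),       # randint(1, 7) -> 1..6
    "criterion": 2,                         # 'gini', 'entropy'
    "min_samples_leaf": len(range(1, 4)),   # randint(1, 4) -> 1..3
    "min_samples_split": len(np.arange(2, 10, step=2)),           # 2, 4, 6, 8
}

total = int(np.prod(list(space_sizes.values())))
cv = 3
n_iter = 500

# distinct combinations, fits for an exhaustive search, fits for random search
print(total, total * cv, n_iter * cv)  # 14400 43200 1500
```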

Evaluating Model Results

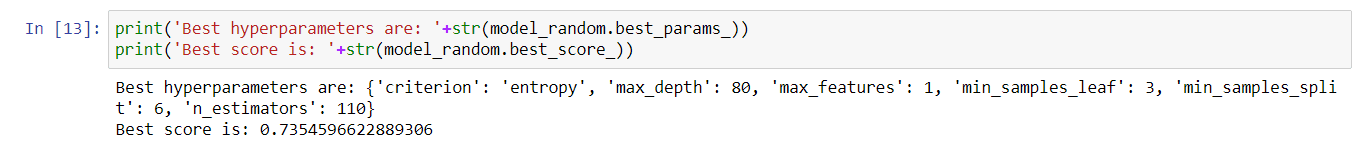

Now, run the following lines of code to print the best hyperparameters found by random search, along with the highest accuracy of the best model:

print('Best hyperparameters are: '+str(model_random.best_params_))

print('Best score is: '+str(model_random.best_score_))

The printed best_params_ dictionary shows the best hyperparameters found by random search.

The highest accuracy of all the models built is approximately 0.74 as well.

Observe that both grid search and random search performed reasonably well on this dataset. Keep in mind that if you run the same random search code yourself, your results may differ from what I’ve displayed above.

This is because random search draws a different random sample of combinations from a very large parameter space on each run, so results can vary noticeably each time you use the technique.
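If you need repeatable results, fix the random seeds. This sketch shows that ParameterSampler returns the same candidates when given the same random_state; the small space is hypothetical:

```python
from scipy.stats import randint
from sklearn.model_selection import ParameterSampler

# Hypothetical small search space
space = {"max_features": randint(1, 7), "criterion": ["gini", "entropy"]}

# Same seed -> same sequence of sampled combinations
run_a = list(ParameterSampler(space, n_iter=10, random_state=123))
run_b = list(ParameterSampler(space, n_iter=10, random_state=123))

print(run_a == run_b)
```

In the full search, the equivalent is passing random_state to RandomizedSearchCV (which controls the sampling), for example RandomizedSearchCV(rf, rs_space, n_iter=500, cv=3, random_state=42), and to RandomForestClassifier, since each forest is itself trained with randomness (bootstrap samples and random feature selection).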

Complete Code

Here is the complete code used in the tutorial:

# imports
import pandas as pd
import numpy as np
from scipy.stats import randint
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, RandomizedSearchCV

# reading the dataset
df = pd.read_csv('winequality-red.csv')

# preprocessing: binary target (1 if quality > 5, else 0)
df['target'] = np.where(df['quality'] > 5, 1, 0)
df2 = df.drop(['quality'], axis=1)
X = df2.drop(['target'], axis=1)
y = df2['target']  # 1-D Series

# initializing random forest
rf = RandomForestClassifier()

# grid search cv
grid_space = {'max_depth': [3, 5, 10, None],
              'n_estimators': [10, 100, 200],
              'max_features': [1, 3, 5, 7],
              'min_samples_leaf': [1, 2, 3],
              'min_samples_split': [2, 3, 4]  # integer values must be at least 2
              }

grid = GridSearchCV(rf, param_grid=grid_space, cv=3, scoring='accuracy')
model_grid = grid.fit(X, y)

# grid search results
print('Best grid search hyperparameters are: ' + str(model_grid.best_params_))
print('Best grid search score is: ' + str(model_grid.best_score_))

# random search cv
rs_space = {'max_depth': list(np.arange(10, 100, step=10)) + [None],
            'n_estimators': np.arange(10, 500, step=50),
            'max_features': randint(1, 7),
            'criterion': ['gini', 'entropy'],
            'min_samples_leaf': randint(1, 4),
            'min_samples_split': np.arange(2, 10, step=2)
            }

rf = RandomForestClassifier()
rf_random = RandomizedSearchCV(rf, rs_space, n_iter=500, scoring='accuracy', n_jobs=-1, cv=3)
model_random = rf_random.fit(X, y)

# random search results
print('Best random search hyperparameters are: ' + str(model_random.best_params_))
print('Best random search score is: ' + str(model_random.best_score_))

Grid Search vs Random Search - Which One To Use?

If you ever find yourself trying to choose between grid search and random search, here are some pointers to help you decide which one to use:

- Use grid search if you already have a ballpark range of known hyperparameter values that will perform well. Make sure to keep your parameter space small, because grid search can be extremely time-consuming.

- Use random search on a broad range of values if you don’t already have an idea of the parameters that will perform well on your model. Because its cost is set by the number of iterations rather than by the size of the parameter space, random search is usually the better choice when the parameter space is large.

- It is also a good idea to use both random search and grid search to get the best possible results.

You can use random search first with a large parameter space since it is faster. Then, use the best hyperparameters found by random search to narrow down the parameter grid, and feed a smaller range of values to grid search.
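The narrowing step could look like the sketch below. The best_random dictionary holds hypothetical values standing in for model_random.best_params_, and narrow_int_range is a hypothetical helper that keeps the best integer value plus one step on either side:

```python
from sklearn.model_selection import ParameterGrid

def narrow_int_range(best, step, low=1):
    """Hypothetical helper: the best value plus one step either side, clipped at low."""
    return sorted({max(low, best - step), best, best + step})

# Hypothetical random search output (not real results from this article)
best_random = {"n_estimators": 260, "min_samples_leaf": 1, "max_depth": 40}

# Smaller grid centred on the random search winner
fine_grid = {
    "n_estimators": narrow_int_range(best_random["n_estimators"], step=50, low=10),
    "min_samples_leaf": narrow_int_range(best_random["min_samples_leaf"], step=1),
    "max_depth": narrow_int_range(best_random["max_depth"], step=10),
}
print(fine_grid)
print(len(ParameterGrid(fine_grid)), "combinations for GridSearchCV")
```

The resulting fine_grid can be passed to GridSearchCV as param_grid. Hyperparameters that came back as None or as strings (such as max_depth=None or criterion) would need to be copied over by hand, since the helper only handles integers.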

Natassha Selvaraj is a self-taught data scientist with a passion for writing. You can connect with her on LinkedIn.