Creating a Web Application to Extract Topics from Audio with Python

A step-by-step tutorial to build and deploy a web application for topic modeling of a Spotify podcast.

Photo by israel palacio on Unsplash

This article continues the story How to build a Web App to Transcribe and Summarize audio with Python. In the previous post, I showed how to build an app that transcribes and summarizes the content of your favourite Spotify podcast. A summary helps listeners decide whether an episode is interesting before listening to it.

But other features can be extracted from audio, such as the topics. Topic modelling is one of the many natural language processing techniques; it enables the automatic extraction of topics from different types of sources, such as hotel reviews, job offers, and social media posts.

In this post, we are going to build an app that collects the topics from a podcast episode with Python and analyzes the importance of each topic extracted with nice data visualizations. In the end, we’ll deploy the web app to Heroku for free.

Requirements

- Create a GitHub repository, which will be needed to deploy the web application to production on Heroku.

- Clone the repository to your local PC with git clone <name-repository>.git. In my case, I will use VS Code, an IDE that works well with Python scripts, includes Git support, and integrates the terminal. Copy the following commands into the terminal:

git init

git commit -m "first commit"

git branch -M master

git remote add origin https://github.com//.git

git push -u origin master

- Create a virtual environment in Python.

Part 1: Create the Web Application to Extract Topics

This tutorial is split into two main parts. In the first part, we create our simple web application to extract the topics from the podcast. The remaining part focuses on the deployment of the app, which is an important step for sharing your app with the world anytime. Let’s get started!

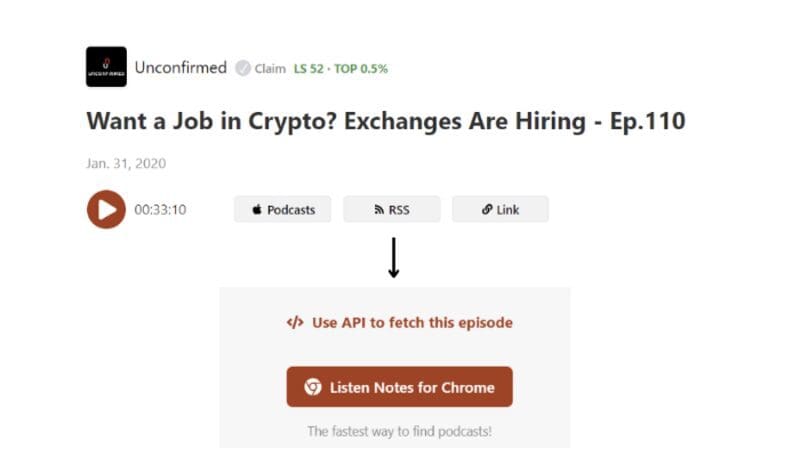

1. Extract Episode’s URL from Listen Notes

We are going to discover the topics from an episode of Unconfirmed, called Want a Job in Crypto? Exchanges are hiring — Ep. 110. You can find the link to the episode here. As you may know from television and newspapers, the blockchain industry is exploding, and there is a need to stay up to date on job openings in the field. These companies will surely need data engineers and data scientists to manage their data and extract value from these huge amounts of data.

Listen Notes is an online podcast search engine and database that gives us access to podcast audio through its API. We need to define a function that extracts the episode’s audio URL. First, you need to create an account and subscribe to the free plan to use the Listen Notes API.

Then, click the episode you are interested in and select the option “Use API to fetch this episode” on the right of the page. Once you have pressed it, you can change the default coding language to Python and click the requests option to use that Python package. Finally, copy the code and adapt it into a function.

import streamlit as st

import requests

import zipfile

import json

from time import sleep

import yaml

def retrieve_url_podcast(parameters, episode_id):
    url_episodes_endpoint = 'https://listen-api.listennotes.com/api/v2/episodes'
    headers = {
        'X-ListenAPI-Key': parameters["api_key_listennotes"],
    }
    url = f"{url_episodes_endpoint}/{episode_id}"
    response = requests.request('GET', url, headers=headers)
    print(response.json())
    data = response.json()
    audio_url = data['audio']
    return audio_url

It reads the credentials from a separate file, secrets.yaml, which holds key-value pairs much like a Python dictionary. Replace each placeholder, braces included, with your own key, and keep the space after each colon:

api_key: {your-api-key-assemblyai}

api_key_listennotes: {your-api-key-listennotes}
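Since both API calls depend on these two keys, it can help to check the loaded parameters before sending any request. The following is a minimal sketch, not part of the original app: the helper name check_parameters and the REQUIRED_KEYS tuple are hypothetical, and only the two key names come from the file above.

```python
# Hypothetical helper: the key names match secrets.yaml as described above,
# everything else is an illustrative assumption.
REQUIRED_KEYS = ("api_key", "api_key_listennotes")


def check_parameters(parameters):
    """Return the required keys that are missing or empty in `parameters`."""
    # A malformed YAML file may load as something other than a dictionary,
    # in which case every key counts as missing.
    if not isinstance(parameters, dict):
        return list(REQUIRED_KEYS)
    return [key for key in REQUIRED_KEYS if not parameters.get(key)]


# Example: the Listen Notes key has been forgotten.
missing = check_parameters({"api_key": "abc123"})
print(missing)
```

In the app, the returned list could be shown to the user (for example with st.error) before any API call is made.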

2. Retrieve Transcription and Topics from Audio

To extract the topics, we first need to send a POST request to AssemblyAI’s transcript endpoint, passing the audio URL retrieved in the previous step as input. Afterwards, we can obtain the transcription and the topics of our podcast by sending a GET request to AssemblyAI.

## send transcription request
def send_transc_request(headers, audio_url):
    transcript_endpoint = "https://api.assemblyai.com/v2/transcript"
    transcript_request = {
        "audio_url": audio_url,
        "iab_categories": True,
    }
    transcript_response = requests.post(
        transcript_endpoint, json=transcript_request, headers=headers
    )
    transcript_id = transcript_response.json()["id"]
    return transcript_id

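## retrieve transcription and topics
def obtain_polling_response(headers, transcript_id):
    polling_endpoint = (
        f"https://api.assemblyai.com/v2/transcript/{transcript_id}"
    )
    polling_response = requests.get(polling_endpoint, headers=headers)
    # poll every 5 seconds until the transcript is ready
    while polling_response.json()["status"] != "completed":
        # stop instead of looping forever if AssemblyAI reports a failure
        if polling_response.json()["status"] == "error":
            raise RuntimeError(polling_response.json().get("error"))
        sleep(5)
        polling_response = requests.get(
            polling_endpoint, headers=headers
        )
    return polling_response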
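A polling loop like this one can also be given an upper time limit, so that the app does not wait indefinitely on a long or stuck transcription. The sketch below is an illustration under assumptions, not the author's implementation: poll_until_done and its parameters are hypothetical names, and the status values "completed" and "error" are assumed to be the ones reported by the transcript endpoint. The fetch function is passed in, which makes the loop easy to try without calling the API.

```python
import time


def poll_until_done(fetch_status, interval=5.0, timeout=600.0, sleep=time.sleep):
    """Call fetch_status() until it reports completion, failure, or a timeout.

    fetch_status is any callable returning a dict with a "status" key.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = fetch_status()
        status = result.get("status")
        if status == "completed":
            return result
        if status == "error":
            raise RuntimeError(f"Transcription failed: {result.get('error')}")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Transcript not ready after {timeout} seconds")
        sleep(interval)


# Demo with canned responses instead of real HTTP requests.
fake_responses = iter(
    [{"status": "queued"}, {"status": "processing"}, {"status": "completed"}]
)
result = poll_until_done(lambda: next(fake_responses), sleep=lambda seconds: None)
print(result["status"])
```

With the real API, fetch_status could be something like lambda: requests.get(polling_endpoint, headers=headers).json().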

The results will be saved into two different files:

def save_files(polling_response):
    # the with statement closes each file automatically
    with open("transcript.txt", 'w') as f:
        f.write(polling_response.json()['text'])
    with open('only_topics.json', 'w') as f:
        topics = polling_response.json()['iab_categories_result']
        json.dump(topics, f, indent=4)

def save_zip():
    list_files = ['transcript.txt', 'only_topics.json', 'barplot.html']
    with zipfile.ZipFile('final.zip', 'w') as zipF:
        for file in list_files:
            zipF.write(file, compress_type=zipfile.ZIP_DEFLATED)

Below I show an example of transcription:

Hi everyone. Welcome to Unconfirmed, the podcast that reveals how the marketing names and crypto are reacting to the week's top headlines and gets the insights you on what they see on the horizon. I'm your host, Laura Shin. Crypto, aka Kelman Law, is a New York law firm run by some of the first lawyers to enter crypto in 2013 with expertise in litigation, dispute resolution and anti money laundering. Email them at info at kelman law. ....

Now, I show the output of the topics extracted from the podcast’s episode:

{

"status": "success",

"results": [

{

"text": "Hi everyone. Welcome to Unconfirmed, the podcast that reveals how the marketing names and crypto are reacting to the week's top headlines and gets the insights you on what they see on the horizon. I'm your host, Laura Shin. Crypto, aka Kelman Law, is a New York law firm run by some of the first lawyers to enter crypto in 2013 with expertise in litigation, dispute resolution and anti money laundering. Email them at info at kelman law.",

"labels": [

{

"relevance": 0.015229620970785618,

"label": "PersonalFinance>PersonalInvesting"

},

{

"relevance": 0.007826927118003368,

"label": "BusinessAndFinance>Industries>FinancialIndustry"

},

{

"relevance": 0.007203377783298492,

"label": "BusinessAndFinance>Business>BusinessBanking&Finance>AngelInvestment"

},

{

"relevance": 0.006419596262276173,

"label": "PersonalFinance>PersonalInvesting>HedgeFunds"

},

{

"relevance": 0.0057992455549538136,

"label": "Hobbies&Interests>ContentProduction"

},

{

"relevance": 0.005361487623304129,

"label": "BusinessAndFinance>Economy>Currencies"

},

{

"relevance": 0.004509655758738518,

"label": "BusinessAndFinance>Industries>LegalServicesIndustry"

},

{

"relevance": 0.004465851932764053,

"label": "Technology&Computing>Computing>Internet>InternetForBeginners"

},

{

"relevance": 0.0021628723479807377,

"label": "BusinessAndFinance>Economy>Commodities"

},

{

"relevance": 0.0017050291644409299,

"label": "PersonalFinance>PersonalInvesting>StocksAndBonds"

}

],

"timestamp": {

"start": 4090,

"end": 26670

}

},...],

"summary": {

"Careers>JobSearch": 1.0,

"BusinessAndFinance>Business>BusinessBanking&Finance>VentureCapital": 0.9733043313026428,

"BusinessAndFinance>Business>Startups": 0.9268804788589478,

"BusinessAndFinance>Economy>JobMarket": 0.7761372327804565,

"BusinessAndFinance>Business>BusinessBanking&Finance>AngelInvestment": 0.6847236156463623,

"PersonalFinance>PersonalInvesting>StocksAndBonds": 0.6514145135879517,

"BusinessAndFinance>Business>BusinessBanking&Finance>PrivateEquity": 0.3943130075931549,

"BusinessAndFinance>Industries>FinancialIndustry": 0.3717447817325592,

"PersonalFinance>PersonalInvesting": 0.3703657388687134,

"BusinessAndFinance>Industries": 0.29375147819519043,

"BusinessAndFinance>Economy>Currencies": 0.27661699056625366,

"BusinessAndFinance": 0.1965470314025879,

"Hobbies&Interests>ContentProduction": 0.1607944369316101,

"BusinessAndFinance>Economy>FinancialRegulation": 0.1570006012916565,

"Technology&Computing": 0.13974210619926453,

"Technology&Computing>Computing>ComputerSoftwareAndApplications>SharewareAndFreeware": 0.13566900789737701,

"BusinessAndFinance>Industries>TechnologyIndustry": 0.13414880633354187,

"BusinessAndFinance>Industries>InformationServicesIndustry": 0.12478621304035187,

"BusinessAndFinance>Economy>FinancialReform": 0.12252965569496155,

"BusinessAndFinance>Business>BusinessBanking&Finance>MergersAndAcquisitions": 0.11304120719432831

}

}

We have obtained a JSON file containing all the topics detected by AssemblyAI. Essentially, the podcast is transcribed into text, which is split into segments. For each segment, there is a list of topic labels, each with its relevance score. At the end of this big dictionary, a summary gives the overall relevance of the topics extracted across all the segments.
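To make the structure of this dictionary concrete, the sketch below walks through the "results" list and keeps the most relevant label of each entry, together with its timestamps. The function name top_label_per_segment is hypothetical, and the sample data is a shortened excerpt of the output shown above.

```python
def top_label_per_segment(topics):
    """Return (start, end, label, relevance) for each entry in topics["results"]."""
    rows = []
    for segment in topics.get("results", []):
        labels = segment.get("labels", [])
        if not labels:
            continue  # nothing was detected for this entry
        best = max(labels, key=lambda item: item["relevance"])
        stamp = segment.get("timestamp", {})
        rows.append(
            (stamp.get("start"), stamp.get("end"), best["label"], best["relevance"])
        )
    return rows


# Shortened excerpt of the JSON output shown above.
sample = {
    "results": [
        {
            "text": "Hi everyone. Welcome to Unconfirmed, ...",
            "labels": [
                {"relevance": 0.015229620970785618,
                 "label": "PersonalFinance>PersonalInvesting"},
                {"relevance": 0.007826927118003368,
                 "label": "BusinessAndFinance>Industries>FinancialIndustry"},
            ],
            "timestamp": {"start": 4090, "end": 26670},
        }
    ]
}

for row in top_label_per_segment(sample):
    print(row)
```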

It’s worth noticing that Careers>JobSearch is the most relevant topic. The top five labels also include Venture Capital, Startups, Job Market, and Angel Investment, all of which fall under Business and Finance.
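The app code in the next section calls a function named create_df_topics(), which is not shown in this article. A minimal sketch of what it could look like, assuming it reads the "summary" section of only_topics.json and returns a pandas DataFrame with the columns "Topics" and "Probability" used by the bar plot (the default path argument is an assumption):

```python
import json

import pandas as pd


def create_df_topics(path="only_topics.json"):
    """Load the saved topics and return the summary sorted by probability."""
    with open(path) as f:
        topics = json.load(f)
    summary = topics["summary"]
    df = pd.DataFrame(
        {"Topics": list(summary.keys()), "Probability": list(summary.values())}
    )
    # Highest probability first, so that df.iloc[:5] holds the top five topics.
    return df.sort_values(by="Probability", ascending=False).reset_index(drop=True)
```

Sorting inside the function means the caller does not have to rely on the order in which the summary keys are stored in the file.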

3. Build a Web Application with Streamlit

The link to the App deployed is here

Now, we put all the functions defined in the previous steps into the main block, in which we build our web application with Streamlit, a free, open-source framework for building applications with a few lines of Python:

- The main title of the app is displayed using st.markdown.

- A left sidebar panel is created using st.sidebar. We need it to enter the episode ID of our podcast.

- After pressing the “Submit” button, a bar plot appears, showing the 5 most relevant topics extracted.

- A Download button lets you download the transcription, the topics, and the data visualization.

st.markdown("# **Web App for Topic Modeling**")
bar = st.progress(0)

st.sidebar.header("Input parameter")

with st.sidebar.form(key="my_form"):
    episode_id = st.text_input("Insert Episode ID:")
    # 7b23aaaaf1344501bdbe97141d5250ff
    submit_button = st.form_submit_button(label="Submit")

if submit_button:
    # load the API keys; safe_load only builds plain Python objects
    with open("secrets.yaml", "rb") as f:
        parameters = yaml.safe_load(f)

    # step 1 - extract episode's url from Listen Notes
    audio_url = retrieve_url_podcast(parameters, episode_id)
    # bar.progress(30)

    api_key = parameters["api_key"]
    headers = {
        "authorization": api_key,
        "content-type": "application/json",
    }

    # step 2 - retrieve id of transcription response from AssemblyAI
    transcript_id = send_transc_request(headers, audio_url)
    # bar.progress(70)

    # step 3 - topics
    polling_response = obtain_polling_response(headers, transcript_id)
    save_files(polling_response)
    df = create_df_topics()

    import plotly.express as px

    st.subheader("Top 5 topics extracted from the podcast's episode")
    # take the first five rows, then sort ascending so the largest bar is on top
    fig = px.bar(
        df.iloc[:5, :].sort_values(by=["Probability"], ascending=True),
        x="Probability",
        y="Topics",
        text="Probability",
    )
    fig.update_traces(texttemplate="%{text:.2f}", textposition="outside")
    fig.write_html("barplot.html")
    st.plotly_chart(fig)

    # bundle the transcript, the topics and the plot for download
    save_zip()
    with open("final.zip", "rb") as zip_download:
        btn = st.download_button(
            label="Download",
            data=zip_download,
            file_name="final.zip",
            mime="application/zip",
        )

To run the web application, run the following command in the terminal:

streamlit run topic_app.py

Amazing! Two URLs should now appear. Click one of them and the web application is ready to be used!

Part 2: Deploy the Web Application to Heroku

Once you have completed the code of the web application and checked that it works well, the next step is to deploy it to the Internet with Heroku.

You are probably wondering what Heroku is. It’s a cloud platform that supports the development and deployment of web applications in different programming languages.

1. Create requirements.txt, Procfile, and setup.sh

Next, we create a requirements.txt file that lists all the Python packages required by the script. We can generate it automatically with the marvellous Python library pipreqs by running the following command:

pipreqs

It will magically generate a requirements.txt file:

pandas==1.4.3

plotly==5.10.0

PyYAML==6.0

requests==2.28.1

streamlit==1.12.2

Avoid using pip freeze > requirements.txt, as this article suggested. The problem is that it lists every package installed in the environment, including packages that this specific project does not need.

In addition to requirements.txt, we also need Procfile, which specifies the commands that are needed to run the web application.

web: sh setup.sh && streamlit run topic_app.py

The last requirement is to have a setup.sh file that contains the following code:

mkdir -p ~/.streamlit/

echo "\
[server]\n\
port = $PORT\n\
enableCORS = false\n\
headless = true\n\
\n\
" > ~/.streamlit/config.toml

2. Connect to Heroku

If you haven’t registered on Heroku’s website yet, you need to create a free account to use its services. You also need to install the Heroku CLI on your local PC. Once these two requirements are met, we can begin the fun part! Run the following command in the terminal:

heroku login

After you run the command, a Heroku window opens in your browser, where you enter the email and password of your account. If the login succeeds, you can return to VS Code and run the command to create your web application in the terminal:

heroku create topic-web-app-heroku

Output:

Creating ⬢ topic-web-app-heroku... done

https://topic-web-app-heroku.herokuapp.com/ | https://git.heroku.com/topic-web-app-heroku.git

To deploy the app to Heroku, we need this command line:

git push heroku master

It pushes the code from the local repository’s master branch to the heroku remote. Afterwards, push the changes to your GitHub repository with the following commands:

git add -A

git commit -m "App over!"

git push

We are finally done! Now you should see your app that is finally deployed!

Final Thoughts

I hope you appreciated this mini-project! It can be really fun to create and deploy apps. The first time can be a little intimidating, but once you finish, you won’t have any regrets! I also want to highlight that Heroku is best suited to small projects with low memory requirements. Alternatives include bigger cloud platforms, such as AWS Lambda and Google Cloud. The GitHub code is here. Thanks for reading. Have a nice day!

Eugenia Anello is currently a research fellow at the Department of Information Engineering of the University of Padova, Italy. Her research project is focused on Continual Learning combined with Anomaly Detection.

Original. Reposted with permission.