Free Full Stack LLM Bootcamp

Want to learn more about LLMs and build cool LLM-powered applications? This free Full Stack LLM Bootcamp is all you need!

Image by Author

Large Language Models (LLMs) and LLM applications are the talk of the town! Whether you're interested in exploring them for personal projects, using them at work to maximize productivity, or quickly summarizing search results and research papers, LLMs offer something for everyone.

This is great! But if you want to go beyond using these apps and start building your own, the free LLM Bootcamp from the Full Stack team is for you.

From prompt engineering for effectively using LLMs to building your own applications and designing optimal user interfaces for LLM apps, this bootcamp covers it all. Let's learn more about what the bootcamp offers.

What Is the Full Stack LLM Bootcamp?

The Full Stack LLM Bootcamp was originally taught as an in-person event in San Francisco in April 2023. Now the materials from the bootcamp (lectures, slides, and source code for projects) are all accessible for free.

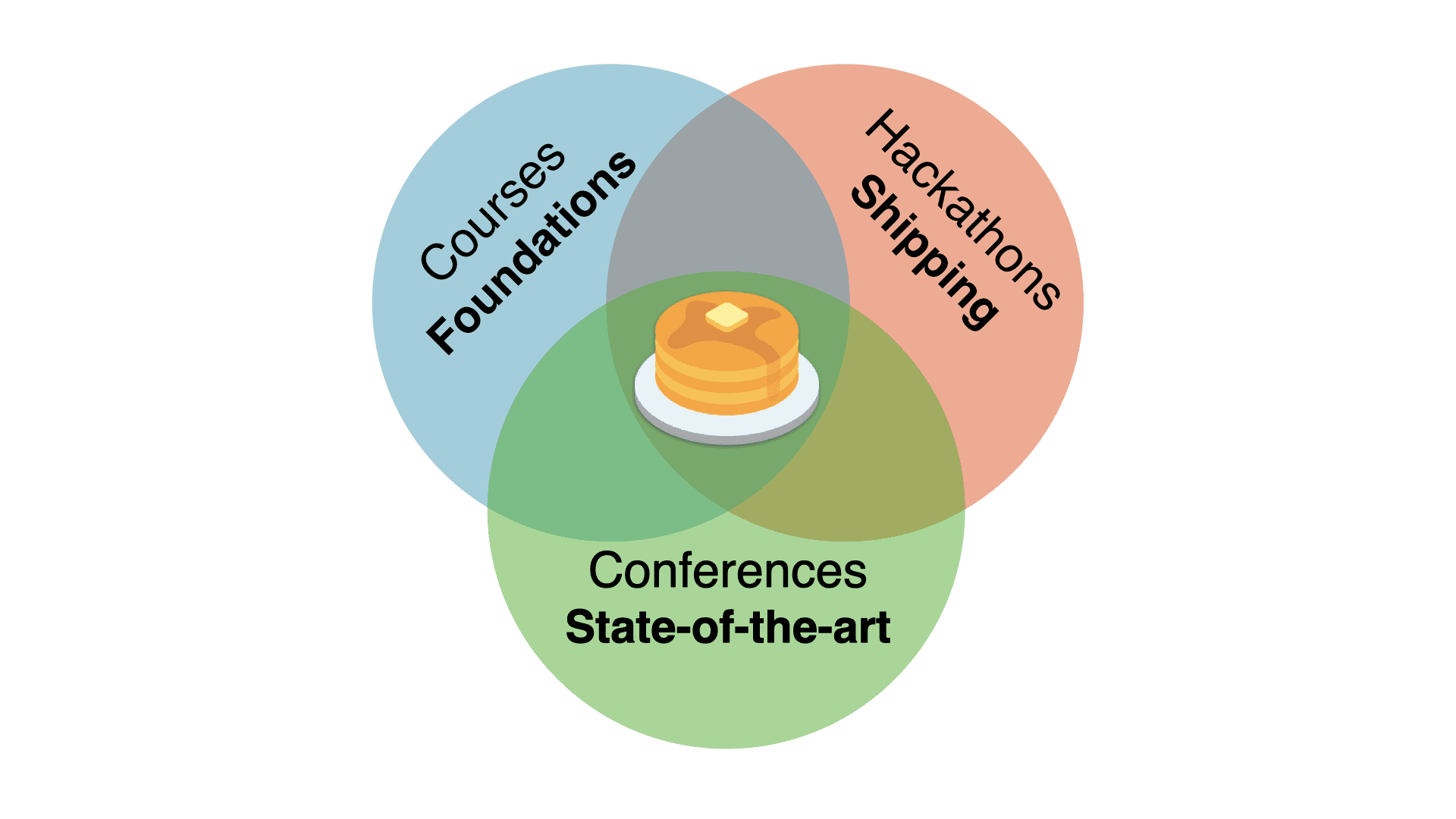

LLM Bootcamp | Image Source

The LLM bootcamp aims to provide a well-rounded approach. It covers everything from prompt engineering techniques and the fundamentals of LLMs to building and shipping LLM apps to production.

The bootcamp is taught by Charles Frye, Josh Tobin, and Sergey Karayev, all UC Berkeley alumni. Their goal is to get everyone up to speed on recent advances in LLMs:

“Our goal is to get you 100% caught up to state-of-the-art and ready to build and deploy LLM apps, no matter what your level of experience with machine learning is.” – The Full Stack Team

A Closer Look at the Full Stack LLM Bootcamp

Now that we know what the LLM bootcamp is about, let’s delve deeper into the course contents.

Prerequisites

Though there are no mandatory prerequisites other than a genuine interest in learning about LLMs, some relevant programming experience can make your journey simpler. Here are a couple of helpful ones:

- Experience with programming in Python

- Familiarity with machine learning, front-end, or back-end development will be helpful

Learning to Spell: Prompt Engineering

For language models to produce desirable results, it's important to get better at prompting.

The Learning to Spell: Prompt Engineering module covers the following:

- Thinking probabilistically about LLM outputs

- The basics of prompting in pretrained models like GPT-3 and LLaMA, instruction-tuned models like ChatGPT, and agents that mimic personas

- Prompt engineering techniques and best practices such as decomposition, ensembling outputs from different LLMs, using randomness, and more
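
To make the ideas of thinking probabilistically and ensembling outputs a little more concrete, here is a minimal sketch of majority voting over several sampled answers. It is an illustration only, not code from the bootcamp: `majority_vote` and `make_toy_sampler` are hypothetical names, and the toy sampler merely imitates a model that returns different answers on different calls. In practice you would pass in a function that calls a real LLM with a nonzero temperature.

```python
import random
from collections import Counter
from typing import Callable, Tuple


def majority_vote(sample: Callable[[str], str], prompt: str,
                  n_samples: int = 15) -> Tuple[str, float]:
    """Call the sampler n_samples times and return the most common answer
    plus the fraction of samples that agreed with it."""
    answers = [sample(prompt) for _ in range(n_samples)]
    answer, count = Counter(answers).most_common(1)[0]
    return answer, count / n_samples


def make_toy_sampler(seed: int = 0) -> Callable[[str], str]:
    """Hypothetical stand-in for a sampled LLM call (assumption, not a real API).
    It answers "42" most of the time and is occasionally wrong."""
    rng = random.Random(seed)

    def sample(prompt: str) -> str:
        return rng.choices(["42", "41", "24"], weights=[0.7, 0.2, 0.1])[0]

    return sample


if __name__ == "__main__":
    answer, agreement = majority_vote(make_toy_sampler(), "What is 6 * 7?")
    print(f"answer={answer} agreement={agreement:.2f}")
```

The agreement fraction doubles as a rough confidence signal: low agreement suggests the prompt is ambiguous or the task is hard for the model.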

LLMOps

Even for simple machine learning applications, let alone LLM apps, building the model is just the tip of the iceberg. The real challenge lies in deploying the model to production and then monitoring and maintaining its performance over time.

The LLMOps module of the bootcamp covers:

- Choosing the best LLM for your application by factoring in the speed, cost, customizability, and the availability of open-source and restricted licenses

- Managing prompts better by integrating prompt tracking into the workflow (using Git or other version control systems)

- Testing LLMs

- The challenges of implementing test-driven development (TDD) in LLM apps

- Evaluation metrics for LLMs

- Monitoring performance metrics, collecting user feedback, and making the requisite updates
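
As a rough illustration of what testing an LLM app can look like, the sketch below runs a small set of prompts through a model and reports the fraction whose responses contain an expected substring. Everything here is an assumption made for the example: `Case`, `fake_llm`, and `pass_rate` are hypothetical names, the canned responses are made up, and substring matching is only one of many possible checks.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Case:
    prompt: str
    must_contain: str  # substring the response is expected to include


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call, with made-up responses."""
    canned = {
        "Capital of France?": "The capital of France is Paris.",
        "2 + 2 = ?": "The answer is 5.",  # deliberately wrong
    }
    return canned.get(prompt, "I don't know.")


def pass_rate(model: Callable[[str], str], cases: List[Case]) -> float:
    """Fraction of cases whose response contains the expected substring
    (case-insensitive). Returns 0.0 for an empty test set."""
    if not cases:
        return 0.0
    passed = sum(
        case.must_contain.lower() in model(case.prompt).lower()
        for case in cases
    )
    return passed / len(cases)


CASES = [Case("Capital of France?", "Paris"), Case("2 + 2 = ?", "4")]

if __name__ == "__main__":
    print(f"pass rate: {pass_rate(fake_llm, CASES):.0%}")
```

Tracking this number across prompt or model changes gives a simple regression signal, even though exact-match style checks miss much of what makes an LLM response good or bad.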

UX for Language User Interfaces

Beyond infrastructure and model choice, the success of an application depends on the user experience.

The module on UX for Language User Interfaces covers:

- Principles for human-centric, empathetic design of product interfaces

- Accounting for convenience factors like autocomplete, low latency, and more

- Case studies to delve deeper into what works (and what doesn’t)

- Importance of UX research

Augmented Language Models

Augmented language models are at the core of all LLM-powered applications. Often, we need language models to have better reasoning capabilities, work with custom datasets, and use up-to-date information to answer queries.

The module on Augmented Language Models covers the following concepts:

- AI-powered information retrieval

- All about embeddings

- Chaining LLM calls to multiple language models

- Effective use of tools like LangChain
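
The retrieval and embedding ideas listed above can be sketched in a few lines. This is a toy under stated assumptions, not the bootcamp's implementation: `embed` uses plain word counts instead of a learned embedding model, and `embed`, `cosine`, `retrieve`, `build_prompt`, and `DOCS` are all hypothetical names introduced for illustration. The point is the shape of the pipeline: embed the query, rank documents by similarity, and place the best match into the prompt.

```python
import math
from collections import Counter
from typing import Dict, List


def embed(text: str) -> Dict[str, float]:
    """Toy embedding: lowercase word counts. A real system would call an
    embedding model and get back a dense vector instead."""
    return {word: float(n) for word, n in Counter(text.lower().split()).items()}


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(value * b.get(key, 0.0) for key, value in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, docs: List[str]) -> str:
    """Put the best-matching document into the prompt as context."""
    context = "\n".join(retrieve(query, docs, k=1))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


DOCS = [
    "embeddings map text to vectors of numbers",
    "prompt engineering is about writing better instructions",
    "monitoring tracks model quality after deployment",
]

if __name__ == "__main__":
    print(build_prompt("how do embeddings represent text", DOCS))
```

Swapping the word-count `embed` for a real embedding model and the sorted list for a vector index changes the quality and speed, but not the overall flow.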

Launch an LLM App in One Hour

This module teaches you how to quickly build LLM apps and covers:

- Working through prototyping, iteration, and deployment to build an MVP application

- Using different tech stacks to build a useful product: from OpenAI’s language models to leveraging serverless infrastructure

LLM Foundations

If you are interested in understanding the foundations of large language models along with the breakthroughs over the years, the LLM Foundations module will help you understand the following:

- Foundations of machine learning

- Transformers and attention

- Important LLMs, such as the GPT-3 family and LLaMA, and the key elements behind their breakthroughs
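
For readers who want a preview of the attention topic listed above, here is a minimal pure-Python sketch of scaled dot-product attention, which computes softmax(QK^T / sqrt(d_k)) V. It is an illustration and not material from the bootcamp: the function names and the tiny matrices are assumptions, and real implementations use tensor libraries, batching, and multiple heads.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention for a single head, no masking.
    q has shape (n_queries, d_k), k has (n_keys, d_k), v has (n_keys, d_v)."""
    d_k = len(k[0])
    output = []
    for q_row in q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k)
            for k_row in k
        ]
        weights = softmax(scores)
        # Weighted average of the value rows.
        output.append([
            sum(w * v_row[j] for w, v_row in zip(weights, v))
            for j in range(len(v[0]))
        ])
    return output


if __name__ == "__main__":
    keys = [[1.0, 0.0], [0.0, 1.0]]
    values = [[1.0, 0.0], [0.0, 1.0]]
    print(attention([[5.0, 0.0]], keys, values))
```

A query that lines up with the first key puts most of its weight on the first value row, which is the core intuition behind attention.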

Project Walkthrough: askFSDL

The bootcamp also has a dedicated section that walks you through the askFSDL project, an LLM-powered application built on the corpus of the Full Stack Deep Learning course.

The Full Stack Deep Learning course by the team is another excellent resource for learning the best practices for building and shipping deep learning models to production.

From data collection, cleaning, ETL, and data processing to building the front and back ends, deploying, and setting up model monitoring, this is a full stack project that you can try to replicate and learn a ton along the way.

Here’s a (non-exhaustive) overview of what the project uses:

- OpenAI’s LLMs

- MongoDB to store the cleaned document corpus

- FAISS index for faster search through the corpus

- LangChain for chaining LLM calls and prompt management

- Hosting the application’s backend on Modal

- Model monitoring with Gantry

Wrapping Up

Hope you’re excited to learn more about LLMs by working through the LLM bootcamp. Happy learning!

You can also interact with other learners and members of the community by joining this Discord server. There are invited talks from industry experts (from the likes of OpenAI and Repl.it) and creators of tools in the LLM space. These talks will also be uploaded shortly to the bootcamp’s website.

Interested in checking out other courses on LLMs? Here’s a list of top free courses on LLMs.

Bala Priya C is a developer and technical writer from India. She likes working at the intersection of math, programming, data science, and content creation. Her areas of interest and expertise include DevOps, data science, and natural language processing. She enjoys reading, writing, coding, and coffee! Currently, she's working on learning and sharing her knowledge with the developer community by authoring tutorials, how-to guides, opinion pieces, and more.