Meet Gorilla: UC Berkeley and Microsoft’s API-Augmented LLM Outperforms GPT-4, Chat-GPT, and Claude

The model is augmented with APIs from Torch Hub, TensorFlow Hub and HuggingFace.

Image from Adobe Firefly

I recently started an AI-focused educational newsletter, that already has over 160,000 subscribers. TheSequence is a no-BS (meaning no hype, no news, etc) ML-oriented newsletter that takes 5 minutes to read. The goal is to keep you up to date with machine learning projects, research papers, and concepts. Please give it a try by subscribing below:

Recent advancements in large language models (LLMs) have revolutionized the field, equipping them with new capabilities like natural dialogue, mathematical reasoning, and program synthesis. However, LLMs still face inherent limitations. Their ability to store information is constrained by fixed weights, and their computation capabilities are limited to a static graph and narrow context. Additionally, as the world evolves, LLMs need retraining to update their knowledge and reasoning abilities. To overcome these limitations, researchers have started empowering LLMs with tools. By granting access to extensive and dynamic knowledge bases and enabling complex computational tasks, LLMs can leverage search technologies, databases, and computational tools. Leading LLM providers have begun integrating plugins that allow LLMs to invoke external tools through APIs. This transition from a limited set of hand-coded tools to accessing a vast array of cloud APIs has the potential to transform LLMs into the primary interface for computing infrastructure and the web. Tasks such as booking vacations or hosting conferences could be as simple as conversing with an LLM that has access to flight, car rental, hotel, catering, and entertainment web APIs.

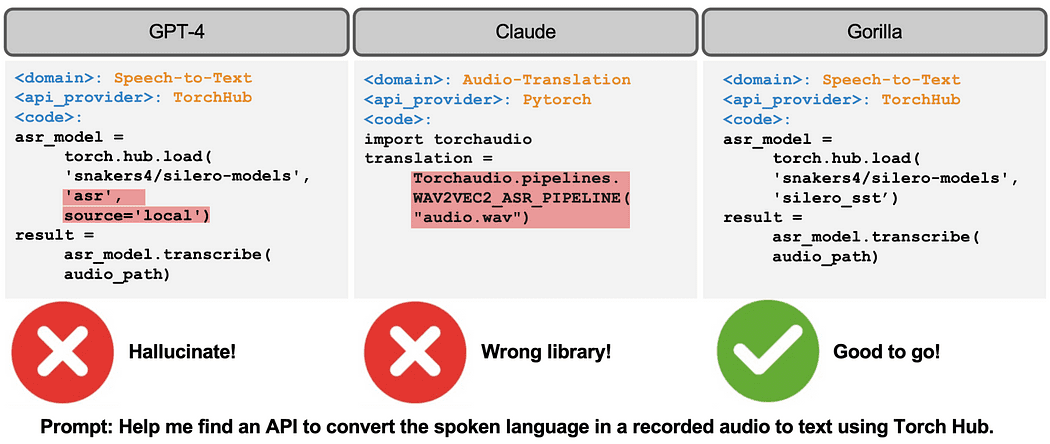

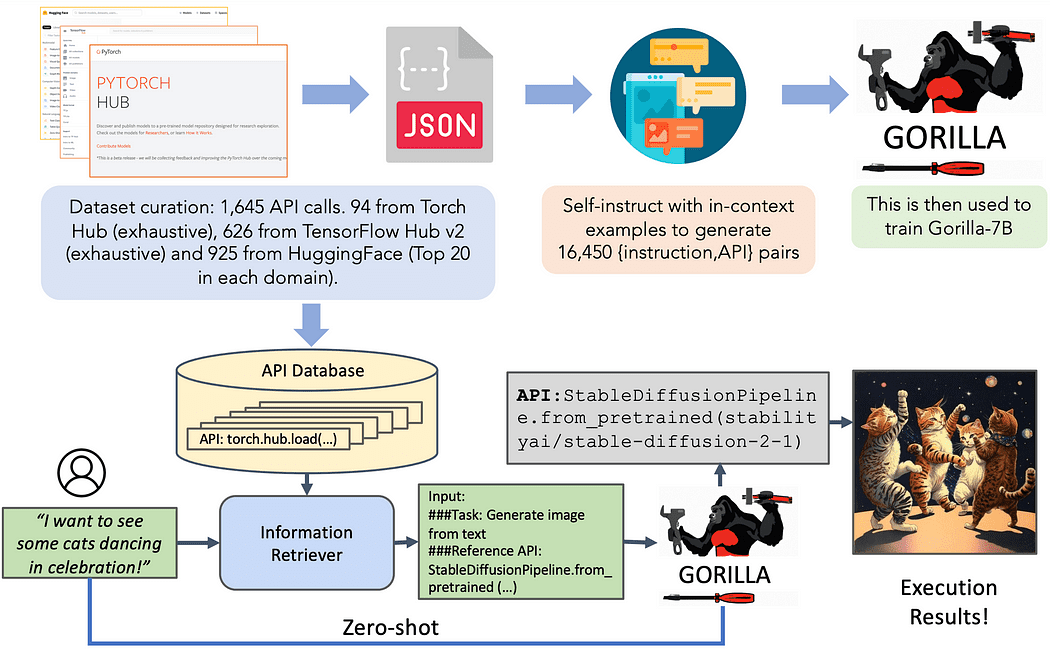

Recently, researchers from UC Berkeley and Microsoft unveiled Gorilla, a LLaMA-7B model designed specifically for API calls. Gorilla relies on self-instruct fine-tuning and retrieval techniques to enable LLMs to select accurately from a large and evolving set of tools expressed through their APIs and documentation. The authors construct a large corpus of APIs, called APIBench, by scraping machine learning APIs from major model hubs such as TorchHub, TensorHub, and HuggingFace. Using self-instruct, they generate pairs of instructions and corresponding APIs. The fine-tuning process involves converting the data to a user-agent chat-style conversation format and performing standard instruction finetuning on the base LLaMA-7B model.

Image Credit: UC Berkeley

API calls often come with constraints, adding complexity to the LLM’s comprehension and categorization of the calls. For example, a prompt may require invoking an image classification model with specific parameter size and accuracy constraints. These challenges highlight the need for LLMs to understand not only the functional description of an API call but also reason about the embedded constraints.

The Dataset

The tech-focused dataset at hand encompasses three distinct domains: Torch Hub, Tensor Hub, and HuggingFace. Each domain contributes a wealth of information, shedding light on the diverse nature of the dataset. Torch Hub, for instance, offers 95 APIs, providing a solid foundation. In comparison, Tensor Hub takes it a step further with an extensive collection of 696 APIs. Lastly, HuggingFace leads the pack with a whopping 925 APIs, making it the most comprehensive domain.

To amplify the value and usability of the dataset, an additional endeavor has been undertaken. Each API in the dataset is accompanied by a set of 10 meticulously crafted and uniquely tailored instructions. These instructions serve as indispensable guides for both training and evaluation purposes. This initiative ensures that every API goes beyond mere representation, enabling more robust utilization and analysis.

The Architecture

Gorilla introduces the notion of retriever-aware training, where the instruction-tuned dataset includes an additional field with retrieved API documentation for reference. This approach aims to teach the LLM to parse and answer questions based on the provided documentation. The authors demonstrate that this technique allows the LLM to adapt to changes in API documentation, improves performance, and reduces hallucination errors.

During inference, users provide prompts in natural language. Gorilla can operate in two modes: zero-shot and retrieval. In zero-shot mode, the prompt is directly fed to the Gorilla LLM model, which returns the recommended API call to accomplish the task or goal. In retrieval mode, the retriever (either BM25 or GPT-Index) retrieves the most up-to-date API documentation from the API Database. This documentation is concatenated with the user prompt, along with a message indicating the reference to the API documentation. The concatenated input is then passed to Gorilla, which outputs the API to be invoked. Prompt tuning is not performed beyond the concatenation step in this system.

Image Credit: UC Berkeley

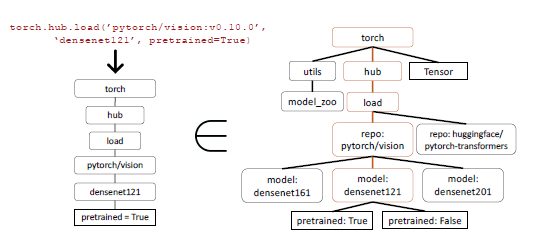

Inductive program synthesis has achieved success in various domains by synthesizing programs that meet specific test cases. However, when it comes to evaluating API calls, relying solely on test cases falls short as it becomes challenging to verify the semantic correctness of the code. Let’s consider the example of image classification, where there are more than 40 different models available for the task. Even if we narrow it down to a specific family, such as Densenet, there are four possible configurations. Consequently, multiple correct answers exist, making it difficult to determine if the API being used is functionally equivalent to the reference API through unit tests. To evaluate the performance of the model, a comparison of their functional equivalence is made using the collected dataset. To identify the API called by the LLM in the dataset, an AST (Abstract Syntax Tree) tree-matching strategy is employed. By checking if the AST of a candidate API call is a sub-tree of the reference API call, it becomes possible to trace which API is being utilized.

Identifying and defining hallucinations poses a significant challenge. The AST matching process is leveraged to identify hallucinations directly. In this context, a hallucination refers to an API call that is not a sub-tree of any API in the database, essentially invoking an entirely imagined tool. It’s important to note that this definition of hallucination differs from invoking an API incorrectly, which is defined as an error.

AST sub-tree matching plays a crucial role in identifying the specific API being called within the dataset. Since API calls can have multiple arguments, each of these arguments needs to be matched. Additionally, considering that Python allows for default arguments, it is essential to define which arguments to match for each API in the database.

Image Credit: UC Berkeley

Gorilla in Action

Together with the paper, the researchers open sourced a version of Gorilla. The release includes a notebook with many examples. Additionally, the following video clearly shows some of the magic of Gorillas.

Gorilla is one of the most interesting approaches in the tool-augmented LLM space. Hopefully, we will see the model distributed in some of the main ML hubs in the space.

Jesus Rodriguez is currently a CTO at Intotheblock. He is a technology expert, executive investor and startup advisor. Jesus founded Tellago, an award winning software development firm focused helping companies become great software organizations by leveraging new enterprise software trends.

Original. Reposted with permission.