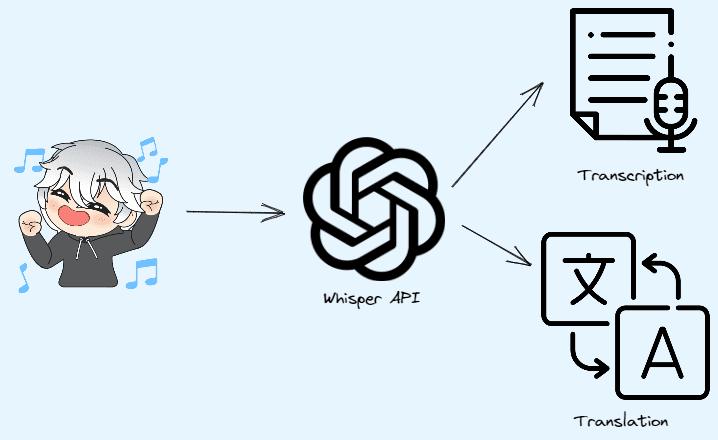

OpenAI’s Whisper API for Transcription and Translation

This article shows you how to use OpenAI's Whisper API to transcribe audio into text and translate it into English, and how to integrate it into your own data science projects.

Illustration by Author | Source: flaticon

Have you accumulated a lot of recordings but lack the energy to listen to and transcribe them? When I was a student, I remember struggling every day to listen to hours and hours of recorded lessons, and transcription took up most of my time. Furthermore, the lessons weren’t in my native language, so I had to copy every sentence into Google Translate to convert it into Italian.

Now, manual transcription and translation are only a memory. OpenAI, the research company behind ChatGPT, has launched the Whisper API for speech-to-text conversion! With a few lines of Python code, you can call this powerful speech recognition model, take this chore off your mind, and focus on other activities, like practicing on data science projects and improving your portfolio. Let’s get started!

What is Whisper?

Whisper is a neural network model developed by OpenAI for speech-to-text tasks. It is an encoder-decoder Transformer trained on 680,000 hours of multilingual audio collected from the web, and it has become very popular for its ability to transcribe audio into text with high accuracy.

It isn’t limited to English: it handles more than 50 languages. To check whether your language is included, see the list of supported languages in OpenAI’s speech-to-text guide. Furthermore, it can translate audio from any of these languages into English.

As with other OpenAI products, an API gives access to these speech recognition services, allowing developers and data scientists to integrate Whisper into their platforms and apps.

How to access the Whisper API?

GIF by Author

Before going further, you need to complete a few steps to access the Whisper API. First, log in to the OpenAI API website; if you don’t have an account yet, create one. Once you are logged in, click your username and select the option “View API keys”. Then click the button “Create new API key” and copy the newly created key into your Python code.
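Pasting the key directly into a script makes it easy to leak, for example when you share a notebook. A common alternative is to keep it in an environment variable; both the pre-1.0 and the 1.x versions of the `openai` Python library read `OPENAI_API_KEY` by default. The helper below is a minimal sketch of that approach; `load_api_key` is a hypothetical name, not part of any library.

```python
import os


def load_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Read an API key from an environment variable (hypothetical helper)."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        # Fail early with a clear message instead of sending an empty key
        raise RuntimeError(
            f"Environment variable {var_name} is not set; "
            "export it in your shell before running the script."
        )
    return key
```

With the variable exported in your shell, you could write `API_KEY = load_api_key()` instead of pasting the key into the code.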

Transcribe with Whisper API

First, let’s download a YouTube video by Kevin Stratvert, a very popular YouTuber who helps students from all over the world master technology and improve their skills with tools like Power BI, video editing software, and AI products. Suppose, for example, that we would like to transcribe his video “3 Mind-blowing AI Tools”.

We can download this video directly with the pytube library. To install it, run the following commands:

```shell
pip install pytube
pip install "openai<1.0"  # openai.Audio, used below, exists only in versions before 1.0
```

We also install the openai library, since we will use it later in the tutorial. Once both libraries are installed, we pass the URL of the video to the YouTube object, get the highest-resolution video stream, and download the video.

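```python
from pytube import YouTube

video_url = "https://www.youtube.com/watch?v=v6OB80Vt1Dk&t=1s&ab_channel=KevinStratvert"

# Create a YouTube object from the video URL
yt = YouTube(video_url)

# Select the progressive (video + audio) stream with the highest resolution
stream = yt.streams.get_highest_resolution()

# Save it to the current working directory; by default the file is named after the video title
stream.download()
```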
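The code above downloads the full video, but Whisper only needs the audio, and OpenAI’s speech-to-text guide limits uploads to 25 MB. The function below is a sketch of an alternative that asks pytube for an audio-only stream; `download_audio`, the default `audio` folder, and the choice of the mp4 audio stream with the highest bitrate are assumptions for illustration. Since pytube depends on YouTube’s page structure, it may need updating when the site changes.

```python
from typing import Optional


def download_audio(video_url: str, output_path: str = "audio",
                   filename: Optional[str] = None) -> str:
    """Download only the audio track of a YouTube video and return the saved path.

    Hypothetical helper; relies on pytube's stream filtering.
    """
    # Imported inside the function so this module can be loaded without pytube
    from pytube import YouTube

    yt = YouTube(video_url)
    # Audio-only mp4 streams, sorted by average bitrate; take the highest
    stream = (
        yt.streams.filter(only_audio=True, file_extension="mp4")
        .order_by("abr")
        .last()
    )
    if stream is None:
        raise ValueError(f"No audio-only mp4 stream found for {video_url}")
    # download() creates output_path if needed and returns the file path
    return stream.download(output_path=output_path, filename=filename)
```

For example, `download_audio(video_url, filename="5_tools_audio.mp4")` would save the audio as `audio/5_tools_audio.mp4`, a much smaller file than the full video.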

Once the file is downloaded, it’s time to start the fun part!

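```python
import openai

API_KEY = 'your_api_key'  # paste the key you created in your OpenAI account
model_id = 'whisper-1'    # model name used by the Whisper API
language = "en"           # ISO-639-1 code of the language spoken in the audio

# Path to the downloaded file (adjust it to where you saved yours)
audio_file_path = 'audio/5_tools_audio.mp4'
audio_file = open(audio_file_path, 'rb')  # the file must be opened in binary mode
```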
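Before uploading, it can help to check the file against the API’s limits: OpenAI’s speech-to-text guide states that uploads are limited to 25 MB and lists mp3, mp4, mpeg, mpga, m4a, wav, and webm as supported input types. The check below is a minimal sketch; `check_audio_file` is a hypothetical name, the byte threshold for “25 MB” is an assumption, and the format list should be compared with the current documentation.

```python
import os

# Limits as described in OpenAI's speech-to-text guide (verify against current docs)
MAX_UPLOAD_BYTES = 25 * 1024 * 1024  # "25 MB"; the exact byte threshold is an assumption
SUPPORTED_EXTENSIONS = {".mp3", ".mp4", ".mpeg", ".mpga", ".m4a", ".wav", ".webm"}


def check_audio_file(path: str) -> None:
    """Raise ValueError if the file is unlikely to be accepted by the Whisper API."""
    ext = os.path.splitext(path)[1].lower()
    if ext not in SUPPORTED_EXTENSIONS:
        raise ValueError(f"Unsupported file type '{ext}' for {path}")
    size = os.path.getsize(path)  # raises FileNotFoundError if the path is wrong
    if size > MAX_UPLOAD_BYTES:
        raise ValueError(
            f"{path} is {size / 1024 / 1024:.1f} MB; split or compress it below 25 MB"
        )
```

Calling `check_audio_file(audio_file_path)` before `open()` surfaces these problems locally instead of as an API error.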

After setting up the parameters and opening the audio file, we can transcribe the audio and save the result to a .txt file.

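```python
response = openai.Audio.transcribe(
    api_key=API_KEY,
    model=model_id,
    file=audio_file,
    language=language,  # reuse the parameter defined above
)
audio_file.close()

transcription_text = response.text
print(transcription_text)

# Save the transcription to a text file (the file name is arbitrary)
with open('transcription.txt', 'w', encoding='utf-8') as f:
    f.write(transcription_text)
```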
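The `openai.Audio.transcribe` call above belongs to the pre-1.0 `openai` Python package; in `openai` 1.x, `openai.Audio` was removed and requests go through a client object instead. Below is a minimal sketch of the same transcription with the newer interface, assuming `openai>=1.0` is installed. The function takes the client as an argument, so it can be replaced by a stub in tests; `transcribe_file` is a hypothetical name.

```python
from typing import Any, Optional


def transcribe_file(client: Any, path: str, model: str = "whisper-1",
                    language: Optional[str] = None) -> str:
    """Transcribe an audio file with an openai>=1.0 client and return the text."""
    kwargs: dict = {"model": model}
    if language is not None:
        kwargs["language"] = language  # ISO-639-1 code, e.g. "en"
    # The with-block closes the file even if the request fails
    with open(path, "rb") as audio_file:
        result = client.audio.transcriptions.create(file=audio_file, **kwargs)
    return result.text
```

In practice you would call `transcribe_file(OpenAI(), "audio/5_tools_audio.mp4", language="en")`, with `OpenAI` imported from `openai`; by default the client reads the key from `OPENAI_API_KEY`.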

Output:

Hi everyone, Kevin here. Today, we're going to look at five different tools that leverage artificial intelligence in some truly incredible ways. Here for instance, I can change my voice in real time. I can also highlight an area of a photo and I can make that just automatically disappear. Uh, where'd my son go? I can also give the computer instructions, like, I don't know, write a song for the Kevin cookie company....

As expected, the output is very accurate. Even the punctuation is precise; I am very impressed!

Translate with Whisper API

This time, we’ll translate audio from Italian into English. As before, we first download the audio file. In my example, I am using this YouTube video by Piero Savastano, a popular Italian YouTuber who teaches machine learning in a very simple and funny way. You just need to copy the previous download code and change the URL. Once the file is downloaded, we open it as before:

```python
audio_file_path = 'audio/ml_in_python.mp4'
audio_file = open(audio_file_path, 'rb')
```

Then, we generate the English translation of the Italian audio. Unlike transcription, translation always produces English text, so we don’t pass a language parameter.

```python
# Translate the Italian audio into English text
response = openai.Audio.translate(
    api_key=API_KEY,
    model=model_id,
    file=audio_file,
)
audio_file.close()

translation_text = response.text
print(translation_text)
```
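With `openai` 1.x, the translation request goes through `client.audio.translations.create` rather than `openai.Audio.translate`. As with the call above, the output is always English, so there is no `language` argument. A minimal sketch, assuming `openai>=1.0`; `translate_file` is a hypothetical helper name.

```python
from typing import Any


def translate_file(client: Any, path: str, model: str = "whisper-1") -> str:
    """Translate the speech in an audio file into English text (openai>=1.0 client)."""
    # Open in binary mode; the with-block closes the file after the request
    with open(path, "rb") as audio_file:
        result = client.audio.translations.create(model=model, file=audio_file)
    return result.text
```

For the Italian video, this would be `translate_file(OpenAI(), "audio/ml_in_python.mp4")`, with `OpenAI` imported from `openai`.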

Output:

We also see some graphs in a statistical style, so we should also understand how to read them. One is the box plot, which allows to see the distribution in terms of median, first quarter and third quarter. Now I'm going to tell you what it means. We always take the data from the data frame. X is the season. On Y we put the count of the bikes that are rented. And then I want to distinguish these box plots based on whether it is a holiday day or not. This graph comes out. How do you read this? Here on the X there is the season, coded in numerical terms. In blue we have the non-holiday days, in orange the holidays. And here is the count of the bikes. What are these rectangles? Take this box here. I'm turning it around with the mouse....

Final thoughts

That’s it! I hope this tutorial has helped you get started with the Whisper API. In this case study, I applied it to YouTube videos, but you can also try it on podcasts, Zoom calls, and conferences. I found the outputs of both the transcription and the translation very impressive! This AI tool is surely helping a lot of people right now. Its main limitation is that it can only translate into English, not from English into other languages, but I hope OpenAI will add this soon. Thanks for reading! Have a nice day!

Resources

- Speech-to-text guide of the Whisper API

- Getting Started with OpenAI Whisper API in Python | YouTube video

Eugenia Anello is currently a research fellow at the Department of Information Engineering of the University of Padova, Italy. Her research project is focused on Continual Learning combined with Anomaly Detection.