Exploring the Latest Trends in AI/DL: From Metaverse to Quantum Computing

The author discusses several emerging trends in Artificial Intelligence and Deep Learning such as Metaverse and Quantum Computing.


The field of artificial intelligence (AI) is constantly evolving, and several emerging trends are shaping the landscape, with the potential to greatly impact various industries and everyday life. One of the driving forces behind recent breakthroughs in AI is Deep Learning (DL), a family of methods built on multi-layer artificial neural networks (ANNs). DL has driven remarkable advances in areas such as natural language processing (NLP), computer vision, reinforcement learning, and generative modeling with generative adversarial networks (GANs).

What makes DL even more fascinating is its close connection to neuroscience. Researchers often draw insights from the complexity and functionality of the human brain to develop DL techniques and architectures. For example, artificial neurons and their activation functions are loosely modeled on the behavior of biological neurons, and convolutional neural networks (CNNs) were inspired by the organization of the visual cortex.

While AI/DL and neuroscience are already making significant waves, there is another area that holds even greater promise for transforming our lives: quantum computing. Quantum computing has the potential to revolutionize computing power and unlock unprecedented advancements in various fields, including AI. By exploiting superposition and entanglement, quantum algorithms may solve certain classes of problems far faster than classical machines can, which opens up new frontiers of possibilities.

Deep Learning

Modern artificial neural networks (ANNs) have earned the name "deep learning" because of the depth of their architecture. These networks are a type of machine learning model that takes inspiration from the structure and functionality of the human brain. Comprising multiple interconnected layers of neurons, ANNs process and transform data as it flows through the network. The term "deep" refers to the network's depth, determined by the number of hidden layers in its architecture. Traditional ANNs typically have only a few hidden layers, making them relatively shallow. In contrast, deep learning models can possess dozens or even hundreds of hidden layers. This increased depth lets deep learning models capture intricate patterns and hierarchical features within the data, leading to high performance in cutting-edge machine learning tasks.
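To make the idea of depth concrete, here is a minimal sketch in plain Python of a forward pass through a stack of fully connected layers. The function names (`build_network`, `forward`), the layer sizes, and the ReLU activation are illustrative assumptions, not something prescribed by any particular framework; the only point is that a "deep" network is the same computation repeated across more hidden layers.

```python
import math
import random

def relu(values):
    # Activation function: negative values are clipped to zero.
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    # One fully connected layer; `weights` holds one row per output neuron.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def init_layer(n_in, n_out, rng):
    # Small random weights scaled by the number of inputs (an assumed choice).
    scale = math.sqrt(2.0 / n_in)
    weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def build_network(layer_sizes, seed=0):
    # layer_sizes = [inputs, hidden_1, ..., hidden_n, outputs]
    rng = random.Random(seed)
    return [init_layer(n_in, n_out, rng)
            for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]

def forward(network, inputs):
    activations = inputs
    for index, (weights, biases) in enumerate(network):
        activations = dense(activations, weights, biases)
        if index < len(network) - 1:  # no activation on the output layer
            activations = relu(activations)
    return activations

shallow = build_network([4, 8, 2])             # 1 hidden layer
deep = build_network([4] + [8] * 10 + [2])     # 10 hidden layers
x = [0.5, -1.0, 0.25, 2.0]

print(len(shallow) - 1, len(forward(shallow, x)))  # 1 2
print(len(deep) - 1, len(forward(deep, x)))        # 10 2
```

Both networks map four inputs to two outputs; they differ only in how many hidden transformations the data passes through on the way.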

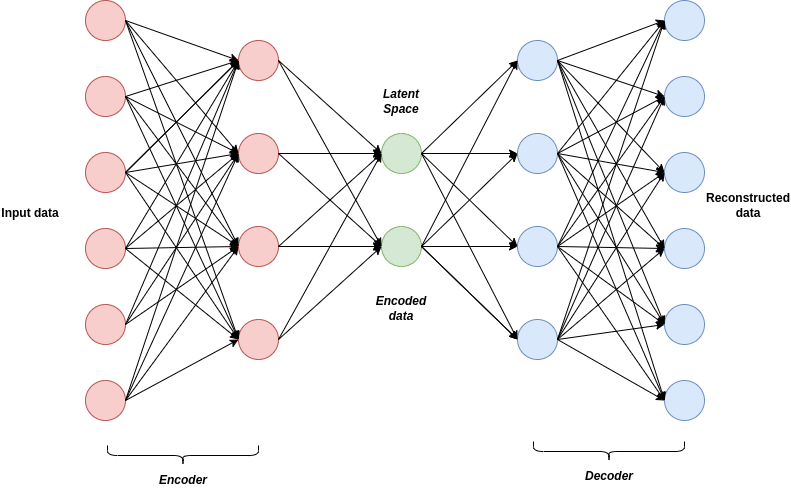

One notable application of deep learning is Image-to-Text and Text-to-Image Generation. These tasks rely on DL techniques like Generative Adversarial Networks (GANs) or Variational Autoencoders (VAEs) to learn the complex relationships between text and images from vast datasets. Such models find use in various fields, including computer graphics, art, advertising, fashion, entertainment, virtual reality, gaming experiences, data visualization, and storytelling.

The Architecture of the VAE Algorithm

Despite the significant progress, deep learning encounters its fair share of challenges and limitations. The primary obstacles lie in computational resources and energy efficiency. DL models often demand substantial computational resources, such as powerful GPUs (Graphics Processing Units) or specialized hardware, both to train and to serve predictions efficiently. This reliance on extensive computing infrastructure can restrict access to deep learning for researchers or organizations lacking sufficient resources. Moreover, training and running DL models is computationally intensive and consumes a considerable amount of energy. As models continue to grow in size each year, concerns about energy efficiency become increasingly relevant.

Large Models

Beyond the technical considerations surrounding large language and vision models, an unexpected challenge is coming from governments worldwide. Regulators are pushing for rules on AI models and asking model owners, including the companies behind platforms like ChatGPT, to explain the inner workings of their models. However, neither major companies like OpenAI, Microsoft, or Google nor the AI scientific community has concrete answers to these inquiries. They admit to having a general understanding but are unable to pinpoint why a model gives one response rather than another. Recent incidents, such as the temporary ban on ChatGPT in Italy and Elon Musk's accusations against Microsoft regarding the unauthorized use of Twitter data by ChatGPT, are just the beginning of a larger issue. It appears that a new battle is brewing among prominent IT companies over who can claim ownership of the "largest model" and which data can be used for such models.

In a recent blog post titled "The Age of AI has begun", Microsoft co-founder Bill Gates praised ChatGPT and related AI advancements as "revolutionary". Gates emphasized the necessity for "revolutionary" solutions to address the challenges at hand. This, in turn, prompts a reevaluation of concepts like "copyrights" and "college and university tests," and even philosophical inquiries into the very nature of "learning."

Neuroscience

In his recent book "A Thousand Brains: A New Theory of Intelligence," Jeff Hawkins presents a novel and evolving perspective on how the human brain processes information and generates intelligent behavior. The Thousand Brains Theory proposes that the brain functions as a network of thousands of individual mini-brains, each responsible for processing sensory input and producing motor output simultaneously. According to this theory, the neocortex, the outer layer of the brain associated with higher-level cognitive functions, consists of numerous functionally independent columns that can be likened to mini-brains.

The theory suggests that each column within the neocortex learns and models the sensory input it receives from the surrounding environment, making predictions about future sensory input. These predictions are then compared with the actual sensory input, and any disparities are used to update the internal models within the columns. This continuous process of prediction and comparison forms the foundation of how the neocortex processes information and generates intelligent behavior.
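The predict, compare, and update cycle described above can be sketched as a tiny learning loop. This is only an illustration under simplifying assumptions: the "column" is a lookup table from the current input to a predicted next input, the inputs are plain integers, and the update is a simple error-correction rule. The names `train_column` and `learning_rate` are hypothetical and are not taken from Hawkins' book or from Numenta's software.

```python
def train_column(sequence, learning_rate=0.5, epochs=20):
    # predictions[current_input] -> the column's guess for the next input
    predictions = {}
    errors_per_epoch = []
    for _ in range(epochs):
        total_error = 0.0
        for current, actual_next in zip(sequence, sequence[1:]):
            predicted = predictions.get(current, 0.0)   # 1. predict
            error = actual_next - predicted             # 2. compare with reality
            predictions[current] = predicted + learning_rate * error  # 3. update
            total_error += abs(error)
        errors_per_epoch.append(total_error)
    return predictions, errors_per_epoch

# A repeating sensory sequence: 1 is followed by 3, 3 by 2, 2 by 5, 5 by 1.
sequence = [1, 3, 2, 5, 1, 3, 2, 5, 1]
model, errors = train_column(sequence)

print(round(model[1], 3), round(model[5], 3))
print(errors[0] > errors[-1])
```

As the loop runs, the prediction error shrinks, because each mismatch between the predicted and the actual input nudges the internal model toward what was actually observed.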

According to the Thousand Brains Theory, sensory input from various modalities, such as vision, hearing, and touch, is processed independently in separate columns. The outputs of these columns are subsequently combined to create a unified perception of the world. This remarkable ability allows the brain to integrate information from different sensory modalities and form a coherent representation of the surrounding environment.
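One simple way to picture how independent column outputs might be combined is a majority vote. The sketch below is a deliberately simplified assumption, not the mechanism the theory specifies: each column reports a single label for the object it believes it is sensing, and the most common label becomes the unified percept. The column names and labels are made up for illustration.

```python
from collections import Counter

def combine_columns(column_guesses):
    # column_guesses maps a column name to the object that column believes it senses.
    votes = Counter(column_guesses.values())
    winner, count = votes.most_common(1)[0]
    agreement = count / len(column_guesses)
    return winner, agreement

guesses = {
    "vision_1": "cup",
    "vision_2": "cup",
    "touch_1": "bowl",
    "touch_2": "cup",
    "hearing_1": "can",
}
percept, agreement = combine_columns(guesses)
print(percept, agreement)  # cup 0.6
```

Even though two columns disagree, the combined result is stable, which is the intuition behind integrating many partial views into one coherent representation.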

A key concept in the Thousand Brains Theory is "Sparse Representation." This notion highlights the idea that only a subset of neurons in the human brain is active or firing at any given time, while the remaining neurons remain relatively inactive or silent. Sparse coding allows for efficient processing and encoding of information in the brain by reducing redundant or unnecessary neural activity. An important benefit of sparse representation is its ability to enable selective updates in the brain. In this process, only the active neurons or neural pathways are updated or modified in response to new information or experiences.

This selective updating mechanism allows the brain to adapt and learn efficiently by focusing its resources on the most relevant information or tasks, rather than updating all neurons simultaneously. Selective updating of neurons plays a vital role in neural plasticity, which refers to the brain's ability to change and adapt through learning and experience. It enables the brain to refine its representations and connections based on ongoing cognitive and behavioral demands while conserving energy and computational resources.
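Sparse activity and selective updating can be illustrated with a k-winners-take-all rule, a technique chosen here for illustration rather than one named in the text. In this sketch only the `k` most strongly activated neurons count as active, and only their weights are adjusted. The weight values, the learning rule, and the names `k_winners` and `sparse_update` are assumptions.

```python
def k_winners(activations, k):
    # Indices of the k most active neurons; all others stay silent.
    ranked = sorted(range(len(activations)),
                    key=lambda i: activations[i], reverse=True)
    return set(ranked[:k])

def sparse_update(weights, inputs, k, learning_rate=0.1):
    activations = [sum(w * x for w, x in zip(row, inputs)) for row in weights]
    active = k_winners(activations, k)
    for i in active:
        # Only active neurons move their weights toward the current input.
        weights[i] = [w + learning_rate * (x - w)
                      for w, x in zip(weights[i], inputs)]
    return active

weights = [
    [0.9, 0.1, 0.0],
    [0.1, 0.8, 0.1],
    [0.0, 0.2, 0.9],
    [0.5, 0.5, 0.0],
    [0.3, 0.3, 0.3],
    [0.0, 0.0, 0.1],
]
before = [row[:] for row in weights]
active = sparse_update(weights, inputs=[1.0, 0.0, 0.0], k=2)
changed = [i for i, (old, new) in enumerate(zip(before, weights)) if old != new]

print(sorted(active))  # [0, 3]
print(changed)         # [0, 3]
```

Four of the six neurons are left untouched by this input, which is where the savings in computation come from.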

The practical applications of the theory are already evident in the work of Numenta, the company Hawkins co-founded. For example, Numenta's recent collaboration with Intel has reportedly produced significant performance improvements in use cases such as natural language processing and computer vision, with customers said to achieve anywhere from 10 to more than 100 times improvement in performance.

Metaverse

While many focus their attention on Large Language Models, Meta takes a distinctive approach. In a blog post titled "Robots that learn from videos of human activities and simulated interactions", the Meta AI team highlights an intriguing concept known as "Moravec's paradox." According to this thesis, the most challenging problems in AI revolve around sensorimotor skills rather than abstract thought or reasoning. In support of this claim, the team has announced two significant advancements in the realm of general-purpose embodied AI agents.

- Firstly, they introduced the artificial visual cortex, known as VC-1. This groundbreaking perception model is the first to provide support for a wide range of sensorimotor skills, environments, and embodiments.

- Additionally, Meta's team developed an innovative approach called adaptive (sensorimotor) skill coordination (ASC). This approach achieves near-perfect performance, with a success rate of 98%, in the demanding task of robotic mobile manipulation. It involves navigating to an object, picking it up, moving to another location, placing the object, and repeating these actions—all within physical environments.

These advancements from Meta signify a departure from the predominant focus on large language models. By prioritizing sensorimotor skills and embodied AI, they contribute to the development of agents that can interact with the world in a more comprehensive and nuanced manner.

ChatGPT models have garnered significant hype and received disproportionate public attention, despite being primarily based on statistical approaches. In contrast, Meta's recent breakthroughs represent substantial scientific advancements. These achievements are laying the foundation for a revolutionary expansion in the realms of virtual reality (VR) and robotics. We highly recommend reading Meta's full blog post to gain insights and be well prepared for the upcoming wave of AI innovation, as it promises to shape the future of these fields in remarkable ways.

Robotics

Currently, two prominent robots in the field are Boston Dynamics' Atlas and Spot (a robot dog). Spot is sold commercially, whereas Atlas is a research platform that is not offered for general sale. These robots represent remarkable feats of engineering, yet their capabilities are still limited by the absence of advanced "brains." This is precisely where the Meta artificial visual cortex comes into play as a potential game-changer. By integrating robotics with AI, it has the power to revolutionize numerous industries and sectors, including manufacturing, healthcare, transportation, agriculture, and entertainment, among others. The Meta artificial visual cortex holds the promise of enhancing the capabilities of these robots and paving the way for unprecedented advancements in the field of robotics.

Boston Dynamics’ Atlas and Spot robots

New Interfaces for Humans: Brain-Computer/Brain-Brain Interfaces

While concerns about being surpassed by AI may arise, the human brain possesses a crucial advantage that modern-day AI lacks: neuroplasticity. Neuroplasticity, also known as brain plasticity, refers to the brain's remarkable capacity to change and adapt in both structure and function in response to experiences, learning, and environmental changes. However, despite this advantage, human brains still lack advanced methods of communication with other human brains or AI systems. To overcome these limitations, the development of new interfaces for the brain is imperative.

Traditional channels of communication such as vision, hearing, or typing cannot compete with modern AI models because of their limited communication speed. To address this, new interfaces based directly on the brain's electrical activity are being pursued. Enter the realm of modern Brain-Computer Interfaces (BCIs), cutting-edge technologies that enable direct communication and interaction between the brain and external devices or systems, bypassing the need for traditional peripheral nervous system pathways. BCIs find applications in areas such as neuroprosthetics, neurorehabilitation, communication and control for individuals with disabilities, cognitive enhancement, and neuroscientific research. Moreover, BCIs have recently ventured into the realm of VR entertainment, with devices like 'Galea' on the horizon, potentially becoming part of our everyday reality.

Another intriguing example is Kernel Flow, a device capable of capturing both EEG and full-head coverage fMRI-like data from the cortex. With such capabilities, it is conceivable that we may eventually create virtual worlds directly from our dreams.

In contrast to non-invasive BCIs like 'Galea' and 'Kernel Flow,' Neuralink, co-founded by Elon Musk, takes a different approach, promoting an invasive brain implant. Some have referred to it as a "socket to the outer world," offering communication channels far broader than those of any modern non-invasive BCI. An additional significant advantage of invasive BCIs is the potential for two-way communication. Imagine a future where information no longer requires our eyes or ears but can be delivered directly to our neocortex.

Bryan Johnson’s (left) Kernel Flow

Quantum Computing

If neuroscience and the human brain weren't intriguing enough, there's yet another mind-boggling topic to explore: quantum computers. These extraordinary machines have the potential to surpass classical computers in certain computational tasks. By leveraging quantum superposition and entanglement, phenomena at the forefront of modern-day physics, quantum computers can solve specific problems more efficiently than classical machines. Examples include factoring large numbers, tackling certain complex optimization problems, and simulating quantum systems. The same principles also underlie communication protocols such as quantum teleportation, which transfers a quantum state between parties rather than speeding up a computation. These advancements are poised to revolutionize domains such as cryptography, drug discovery, materials science, and financial modeling. For a firsthand experience of quantum programming, you can visit www.quantumplayground.net and write your first quantum script in just a few minutes.
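Superposition and entanglement can be demonstrated on a classical machine with a small state-vector simulation. The sketch below is written in plain Python and is independent of any quantum playground or SDK; the function names and the bit-ordering convention (qubit 0 is the least significant bit) are assumptions made for this example. It prepares a two-qubit Bell state by applying a Hadamard gate followed by a CNOT gate.

```python
import math

def apply_hadamard(state, qubit):
    # Puts the chosen qubit into an equal superposition of 0 and 1.
    h = 1 / math.sqrt(2)
    mask = 1 << qubit
    new_state = [0.0] * len(state)
    for index, amplitude in enumerate(state):
        if index & mask:   # qubit is 1: contributes +h to |0> and -h to |1>
            new_state[index ^ mask] += h * amplitude
            new_state[index] -= h * amplitude
        else:              # qubit is 0: contributes +h to both |0> and |1>
            new_state[index] += h * amplitude
            new_state[index | mask] += h * amplitude
    return new_state

def apply_cnot(state, control, target):
    # Flips the target qubit only in basis states where the control qubit is 1.
    new_state = [0.0] * len(state)
    for index, amplitude in enumerate(state):
        if index & (1 << control):
            new_state[index ^ (1 << target)] += amplitude
        else:
            new_state[index] += amplitude
    return new_state

def probabilities(state):
    # Probability of measuring each basis state.
    return [abs(a) ** 2 for a in state]

state = [1.0, 0.0, 0.0, 0.0]           # both qubits start in 0
state = apply_hadamard(state, 0)       # superposition on qubit 0
state = apply_cnot(state, 0, 1)        # entangle qubit 1 with qubit 0
probs = probabilities(state)

for label, p in zip(["00", "01", "10", "11"], probs):
    print(label, round(p, 3))
```

Only the outcomes 00 and 11 have non-zero probability, each roughly one half: measuring one qubit fixes the result of the other, which is the hallmark of entanglement. Note that simulating n qubits this way needs a list of 2^n amplitudes, which hints at why classical simulation becomes impractical as the qubit count grows.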

While the future is inherently uncertain, one thing remains clear: the trajectory of humanity's future will be shaped by the choices and actions of individuals, communities, institutions, and governments. It is crucial for us to collectively strive for positive change, address pressing global issues, promote inclusivity and sustainability, and work together towards creating a better future for all of humanity.

Ihar Rubanau is a Senior Data Scientist at Sigma Software Group.