Python Vector Databases and Vector Indexes: Architecting LLM Apps

Vector databases enable fast similarity search and scale across data points. For LLM apps, vector indexes can simplify architecture over full vector databases by attaching vectors to existing storage. Choosing indexes vs databases depends on specialized needs, existing infrastructure, and broader enterprise requirements.

Photo by Christina Morillo

Because of Generative AI applications created using their hardware, Nvidia has experienced significant growth. Another software innovation, the vector database, is also riding the Generative AI wave.

Developers are building AI-powered applications in Python on Vector Databases. By encoding data as vectors, they can leverage the mathematical properties of vector spaces to achieve fast similarity search across very large datasets.

Let's start with the basics!

Vector Database Basics

A vector database stores data as numeric vectors in a coordinate space. This allows similarities between vectors to be calculated via operations like cosine similarity.

The closest vectors represent the most similar data points. Unlike scalar databases, vector databases are optimized for similarity searches rather than complex queries or transactions.

Retrieving similar vectors takes milliseconds versus minutes, even across billions of data points.

Vector databases build indexes to efficiently query vectors by proximity. This is somewhat analogous to how text search engines index documents for fast full-text search.

Benefits of Vector Search Over Traditional Databases for Developers

For developers, vector databases provide:

- Fast similarity search - Find similar vectors in milliseconds

- Support for dynamic data - Continuously update vectors with new data

- Scalability - Scale vector search across multiple machines

- Flexible architectures - Vectors can be stored locally, in cloud object stores, or managed databases

- High dimensionality - Index thousands of dimensions per vector

- APIs - If you go for a managed vector database, it usually comes with clean query APIs and integrations with some existing data science toolkits or platforms.

The example of popular use cases supported by the vector searches (the key feature offering of a vector database) are:

- Visual search - Find similar product images

- Recommendations - Suggest content

- Chatbots - Match queries to intent

- Search - Surface relevant documents from text vectors

Use cases where vector searches are starting to gain traction are :

- Anomaly detection - Identify outlier vectors

- Drug discovery - Relate molecules by property vectors

What is a Python Vector Database?

A Vector database which includes Python libraries that supports a full lifecycle of a vector database is a Python vector database. The database itself does not need to be built in Python.

What Should be Supported by these Python Vector Database Libraries?

The calls to a vector database can be separated into two categories - Data related and Management related. The good news here is that they follow similar patterns as a traditional database.

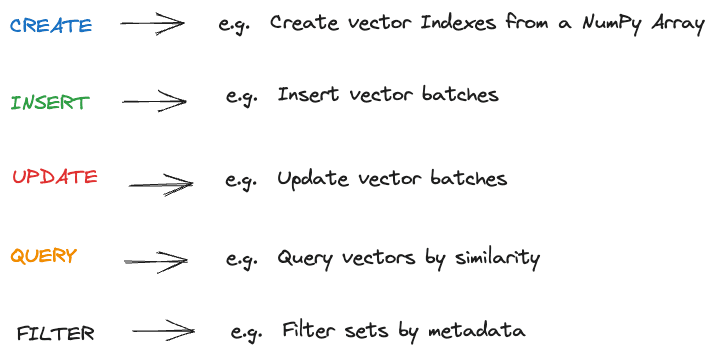

Data related functions which libraries should support

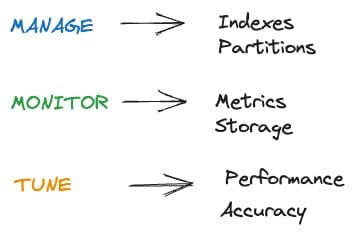

Standard management related functions which libraries should support

Let’s now move on to a little more advanced concept where we talk about building LLM Apps on top of these databases

Architecting LLM Apps

Let’s understand what is involved from a workflow perspective before we go deeper into the architecture of vector search powered LLM Apps.

A typical workflow involves:

- Enriching or cleaning the data. This is a lightweight data transformation step to help with data quality and consistent content formatting. It is also where data may need to be enriched.

- Encoding data as vectors via models. The models have some transformers included (e.g. sentence transformers)

- Inserting vectors into a vector database or vector index (something which we will explain shortly)

- Exposing search via a Python API

- Document orchestrating workflow

- Testing and visualizing results in apps and UIs (e.g. Chat UI)

Now let’s see how we enable different parts of this workflow using different architecture components.

For 1) you might need to start getting metadata from other source systems (including relational databases or content management systems.

Pretrained models are almost always preferred for step 2) above. OpenAI models are the most well-liked models offered through hosted offerings. You might host local models for privacy and security reasons.

For 3), you need a vector database or vector index if you need to perform large similarity searches, such as in datasets with more than one billion records. From an enterprise standpoint, you typically have a little more context before you conduct the "search".

For 4) above, the good news is that the exposed search typically follows a similar pattern. Something along the lines of the following code:

From Pinecone

index = pinecone.Index("example-index")

index.upsert([

("A", [0.1, 0.1, 0.1, 0.1], {"genre": "comedy", "year": 2020}),

)

index.query(

vector=[0.1, 0.1, 0.1, 0.1],

filter={

"genre": {"$eq": "documentary"},

"year": 2019

},

top_k=1,

)

An interesting line here is this:

filter={

"genre": {"$eq": "documentary"},

"year": 2019

},

It really filters the results to vectors near the ‘genre’ and ‘year’. You can also filter vectors by concepts or themes.

The challenge now, in an enterprise setting, is that it includes other business filters. It is important to address the lack of modeling for data coming from data sources (think table structure and metadata). It would be important to improve text fidelity with fewer incorrect expressions that contradict the structured data. . A "data pipelining" strategy is required in this situation, and enterprise "content matching" starts to matter.

For 5) Other than the usual challenges of scaling ingest, a changing corpus has its own challenges. New documents may require re-encoding and re-indexing of the entire corpus to keep vectors relevant.

For 6) This is a completely new area and a human in the loop approach is required on top of testing similarity levels to ensure there is quality across the spectrum of search.

Automated search scoring along with different types of context scoring is not an easily accomplished task.

Python Vector Index: a simpler vector search alternative for your existing database.

A vector database is a complex system that enables contextual search as in the above examples plus all the additional database functionalities (create, insert, update, delete, manage, …).

Examples of vector databases include Weaviate and Pinecone. Both of these expose Python API’s.

Sometimes, a simpler setup is enough. As a lighter alternative, you can use whatever storage you were already using, and add a vector index based on it. This vector index is used for retrieving only your search queries with context, for example, for your generative AI use.

In a vector index setup, you have:

- Your usual data storage (e.g. PostgreSQL or disk directory with files) provides the basic operations you need: create, insert, update, delete.

- Your vector index which enables fast context-based search on your data.

Standalone Python libraries which implement vector indices for you include FAISS, Pathway LLM, Annoy.

The good news is that the LLM application workflow for vector databases and Vector indexes is the same. The main difference is that in addition to the Python Vector Index library, you continue to also use your existing data library for “normal” data operations and for data management. For example, this could be Psycopg if you are using PostgreSQL, or the standard Python “fs” module if you are storing data in files.

Proponents of vector indexes focus on the following advantages:

- Data Privacy: Keeps original data secure and undisturbed, minimizing data exposure risk.

- Cost-Efficiency: Lessens costs associated with extra storage, compute power, and licensing.

- Scalability: Simplifies scaling by decreasing the number of components to manage.

When to use Vector Databases vs Vector Indexes?

Vector Databases are useful when one or more of the following is true

- You have a specialized need for working with vector data at scale

- You are creating a standalone purpose-built application for vectors

- You do not expect other types of use for your stored data in other types of applications.

Vector Indexes are useful when one or more of the following is true

- You do not want to trust new technology for your data storage

- Your existing storage is easy to access from Python.

- Your similarity search is just one capability among other larger enterprise BI and database needs

- You need the ability to attach vectors to existing scalar records

- You need one unified way of dealing with pipelines for your data engineering team

- You need index and graph structures on the data to help with your LLM apps or tasks

- You need augmented output or augmented context coming from other sources

- You want to create rules from your corpus which can apply to your transactional data

The Future of Enterprise Vector Search

Vector search unlocks game-changing capabilities for developers. As models and techniques improve, expect vector databases or vector indexes to become an integral part of the application stack.

I hope this overview provides a solid starting point for exploring vector databases and vector indexes in Python. If you are curious about a recently developed vector index please check this open source project.

Anup Surendran is a VP of Product and Product Marketing who specializes in bringing AI products to market. He has worked with startups that have had two successful exits (to SAP and Kroll) and enjoys teaching others about how AI products can improve productivity within an organization.