7 End-to-End MLOps Platforms You Must Try in 2024

A list of the top MLOps platforms that will help you with integration, training, tracking, deployment, monitoring, CI/CD, and infrastructure optimization.

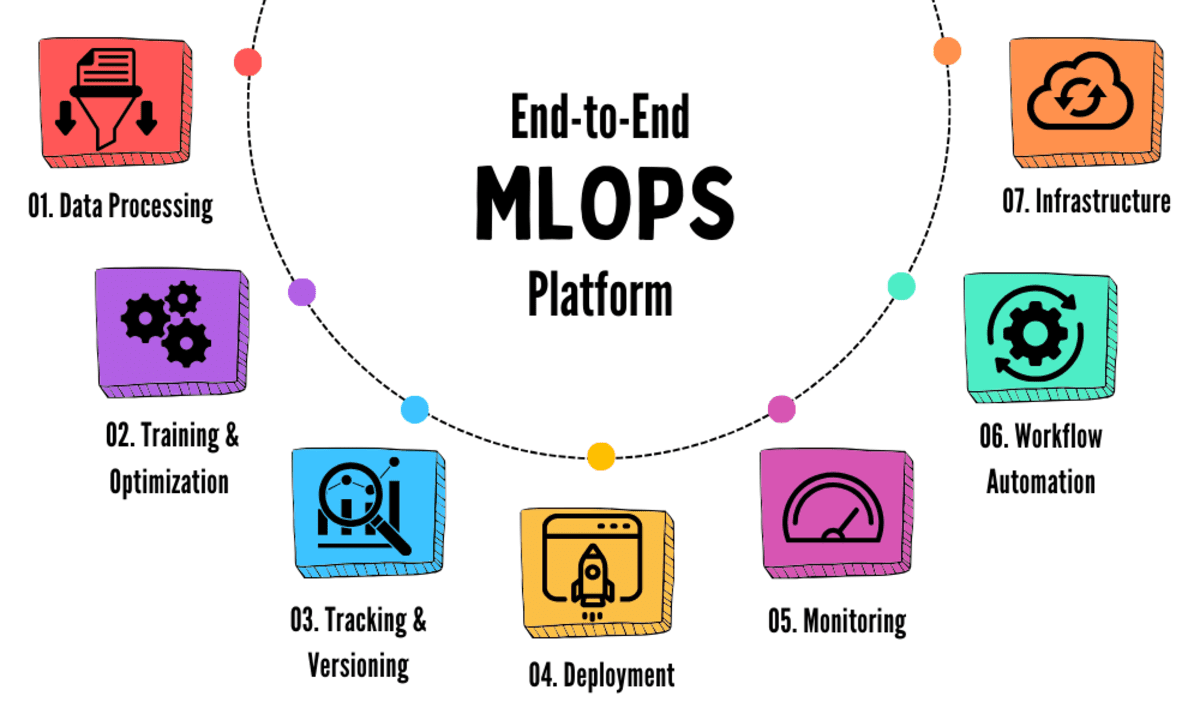

Image by Author

Do you ever feel like there are too many tools for MLOps? There are tools for experiment tracking, data and model versioning, workflow orchestration, feature stores, model testing, deployment and serving, monitoring, runtime engines, LLM frameworks, and more. Each category has multiple options, which is confusing for managers and engineers who want a simple solution: a unified tool that can handle almost all MLOps tasks. This is where end-to-end MLOps platforms come in.

In this blog post, we will review the best end-to-end MLOps platforms for personal and enterprise projects. These platforms will enable you to create an automated machine learning workflow that can train, track, deploy, and monitor models in production. Additionally, they offer integrations with various tools and services you may already be using, making it easier to transition to these platforms.

1. AWS SageMaker

Amazon SageMaker is a popular cloud solution for the end-to-end machine learning life cycle. You can track, train, evaluate, and then deploy models into production. Furthermore, you can monitor and retrain models to maintain quality, optimize compute resources to save costs, and use CI/CD pipelines to fully automate your MLOps workflow.

If you are already on AWS (Amazon Web Services), you will have no problem using it for your machine learning projects. You can also integrate your ML pipeline with the other services and tools that AWS provides.

As alternatives to AWS SageMaker, you can try Google Cloud's Vertex AI and Azure Machine Learning. They all provide similar functions and tools for building an end-to-end MLOps pipeline integrated with their respective cloud services.

2. Hugging Face

I am a big fan of the Hugging Face platform and its team, which builds open-source tools for machine learning and large language models. The platform has become end-to-end now that it offers enterprise solutions for multi-GPU model inference. I highly recommend it for people who are new to cloud computing.

Hugging Face comes with tools and services that help you build, train, fine-tune, evaluate, and deploy machine learning models in a unified system. It also lets you save and version models and datasets for free. You can keep them private or share them publicly and contribute to open-source development.

Hugging Face also provides solutions for building and deploying web applications and machine learning demos. This is the best way to show others how terrific your models are.

3. Iguazio MLOps Platform

Iguazio MLOps Platform is an all-in-one solution for your MLOps life cycle. You can build a fully automated machine learning pipeline for data collection, training, tracking, deployment, and monitoring. It is designed to be simple, so you can focus on building and training great models instead of worrying about deployment and operations.

Iguazio allows you to ingest data from all kinds of sources, comes with an integrated feature store, and has a dashboard for managing and monitoring models in real-time production. Furthermore, it supports automated tracking, data versioning, CI/CD, continuous model performance monitoring, and model drift mitigation.

4. DagsHub

DagsHub is my favorite platform. I use it to build and showcase my portfolio projects. It is similar to GitHub but for data scientists and machine learning engineers.

DagsHub provides tools for code and data versioning, experiment tracking, model registry, continuous integration and deployment (CI/CD) for model training and deployment, model serving, and more. It is an open platform, meaning anyone can build, contribute to, and learn from the projects.

The best features of DagsHub are:

- Automatic data annotation.

- Model serving.

- ML pipeline visualization.

- Diffing and commenting on Jupyter notebooks, code, datasets, and images.

The only thing it lacks is a dedicated compute instance for model inference.

5. Weights & Biases

Weights & Biases started as an experiment tracking platform but has evolved into an end-to-end machine learning platform. It now provides experiment visualization, hyperparameter optimization, a model registry, workflow automation, workflow management, monitoring, and no-code ML app development. It also offers LLMOps solutions, such as exploring and debugging LLM applications and evaluating GenAI applications.

Weights & Biases offers both cloud and private hosting. You can host your own server locally or use the managed service. It is free for personal use, but you have to pay for team and enterprise plans. You can also use the open-source core library to run it on your local machine and enjoy privacy and control.

6. Modelbit

Modelbit is a new but fully featured MLOps platform. It provides an easy way to train, deploy, monitor, and manage models. You can deploy a trained model with Python code or with the `git push` command.

Modelbit is made for both Jupyter Notebook lovers and software engineers. Apart from training and deployment, Modelbit lets you run models on autoscaling compute, using either your preferred cloud provider or Modelbit's dedicated infrastructure. It is a true MLOps platform that lets you log, monitor, and set alerts for models in production. Moreover, it comes with a model registry, automatic retraining, model testing, CI/CD, and workflow versioning.

7. TrueFoundry

TrueFoundry positions itself as a fast, cost-effective way to build and deploy machine learning applications. It can be installed on any cloud or used locally. TrueFoundry also comes with multi-cloud management, autoscaling, model monitoring, version control, and CI/CD.

You can train a model in a Jupyter Notebook environment, track your experiments, save the model and its metadata to the model registry, and deploy it with one click.

TrueFoundry also supports LLMs: you can easily fine-tune open-source LLMs and deploy them on optimized infrastructure. Moreover, it integrates with open-source model training tools, model serving and storage platforms, version control, Docker registries, and more.

Final Thoughts

All the platforms mentioned above are enterprise solutions. Some offer a limited free tier, and some have an open-source component. Eventually, however, you will have to move to a managed service to get the fully featured platform.

If this blog post becomes popular, I will introduce you to free, open-source MLOps tools that provide greater control over your data and resources.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master's degree in technology management and a bachelor's degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.