Leveraging GPT Models to Transform Natural Language to SQL Queries

By training GPT to query with few-shot prompting.

Image by Author. Base image from pch-vector.

Natural Language Processing —or NLP-has evolved enormously, and GPT models are at the forefront of this revolution.

Today LLM models can be used in a wide variety of applications.

To avoid unnecessary tasks and enhance my workflow, I began exploring the possibility of training GPT to formulate SQL queries for me.

And this is when a brilliant idea appeared:

Using the power of GPT models in interpreting natural language and transforming it into structured SQL queries.

Could this be possible?

Let’s discover it all together!

So let’s start from the beginning…

The concept of “Few Shot Prompting”

Some of you might be already familiar with the concept of few shot prompting, while others might have not heard of it never before.

So…What is it?

The basic idea here is to use some explicit examples-or shots-to guide the LLM to respond in a specific way.

This is why it is called Few Shot prompting.

To put it simply, by showcasing a few examples of the user input-sample prompts-along with the desired LLM output, we can teach the model to deliver some enhanced output that follows our preferences.

By doing so we are expanding the knowledge of the model on some specific domain to generate some output that aligns better with our desired task.

So let’s exemplify this!

Throughout this tutorial, I’ll be using a predefined function called chatgpt_call() to prompt the GPT model. If you want to further understand it, you go check the following article.

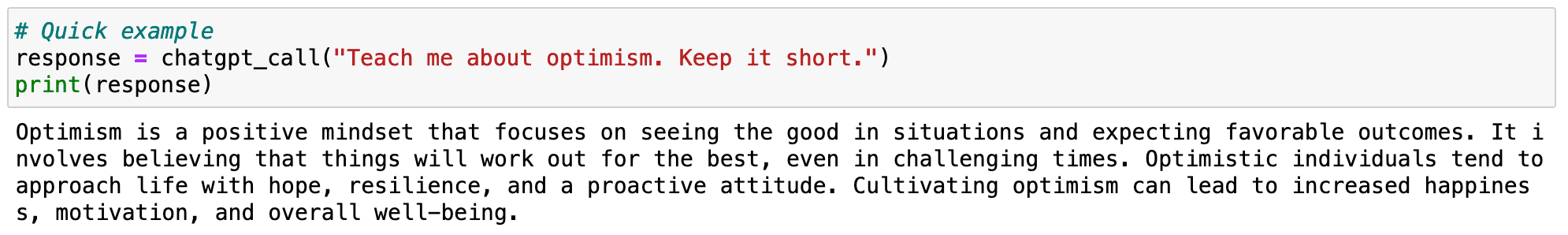

Imagine I want ChatGPT to describe the term optimism.

If I simply ask GPT to describe it, I will obtain a serious-and boring-description.

## Code Block

response = chatgpt_call("Teach me about optimism. Keep it short.")

print(response)

With the corresponding output:

Screenshot of my Jupyter Notebook. Prompting GPT.

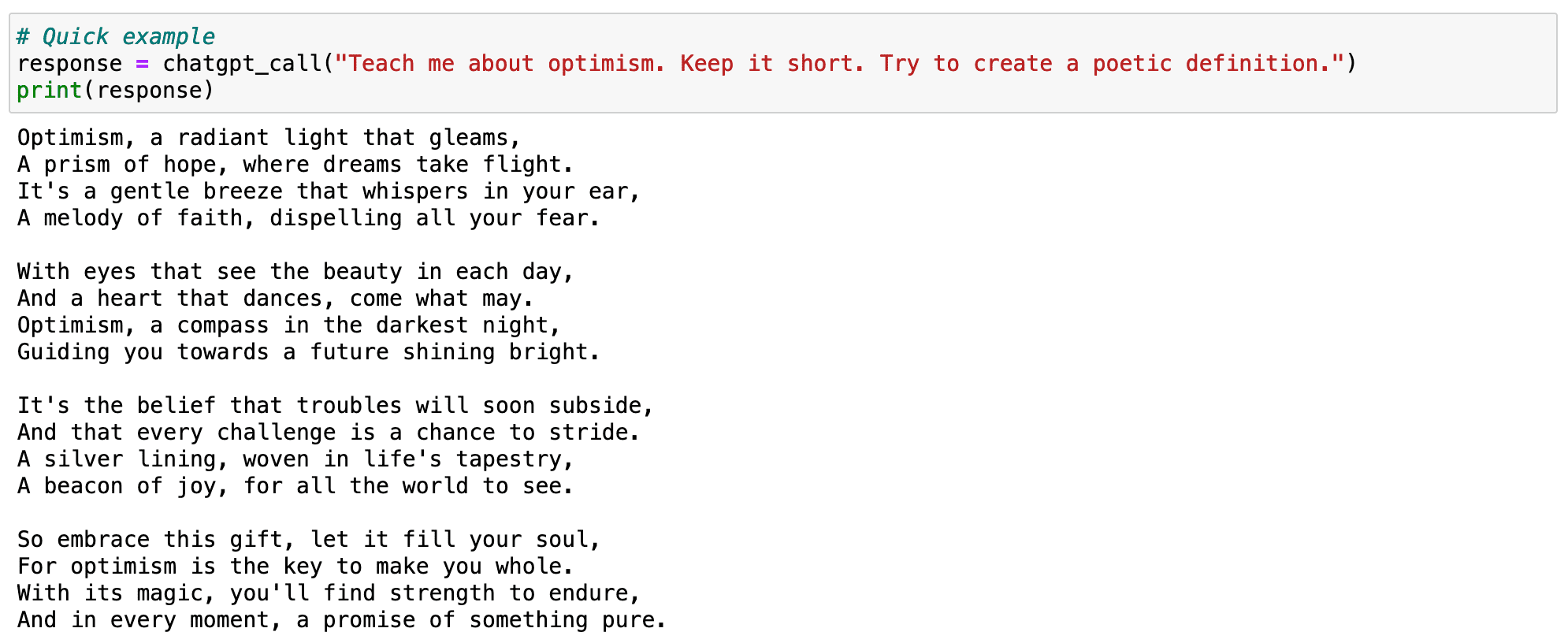

However, imagine I would rather like to get something more poetic. I can add to my prompt some more detail specifying that I want a poetic definition.

## Code Block

response = chatgpt_call("Teach me about optimism. Keep it short. Try to create a poetic definition.")

print(response)

But this second output looks just like a poem and has nothing to do with my desired output.

Screenshot of my Jupyter Notebook. Prompting GPT.

What can I do?

I could detail even more the prompt, and keep iterating until I receive some good output. However, this would take a lot of time.

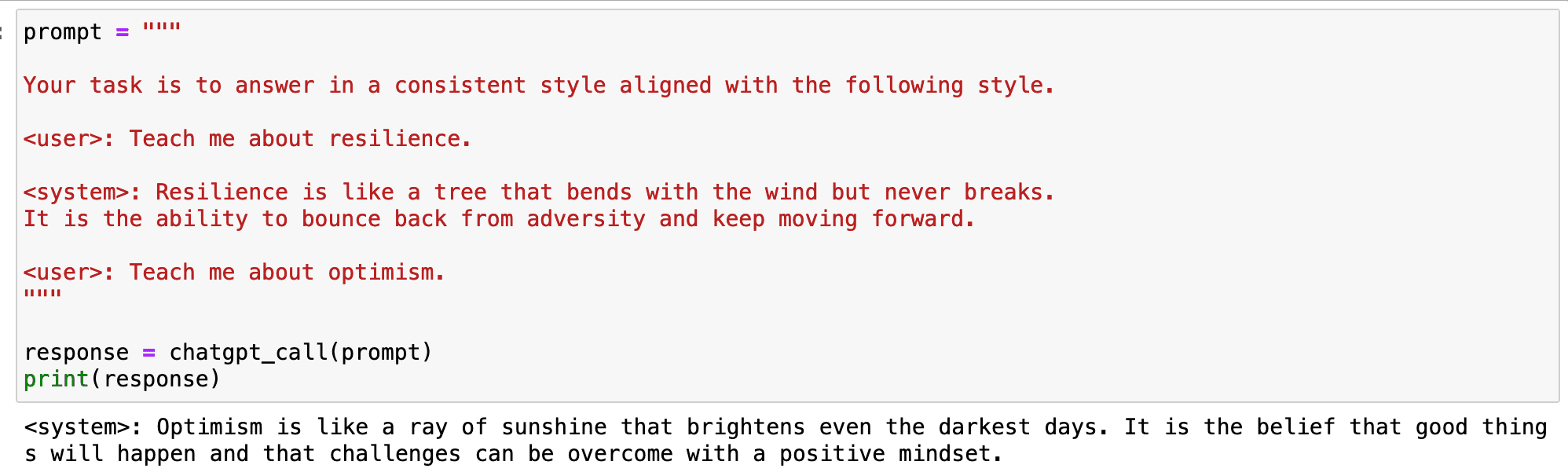

Instead, I can show the model what the kind of poetic description I prefer designing an example and showing it to the model.

## Code Block

prompt = """

Your task is to answer in a consistent style aligned with the following style.

: Teach me about resilience.

: Resilience is like a tree that bends with the wind but never breaks.

It is the ability to bounce back from adversity and keep moving forward.

: Teach me about optimism.

"""

response = chatgpt_call(prompt)

print(response)

And the output is exactly what I was looking for.

Screenshot of my Jupyter Notebook. Prompting GPT.

So… how can we translate this into our specific case of SQL queries?

Using NLP for SQL generation

ChatGPT is already capable of generating SQL queries out of Natural Language prompts. We do not even have to show the model any table, just formulate a hypothetical computation and it will do it for us.

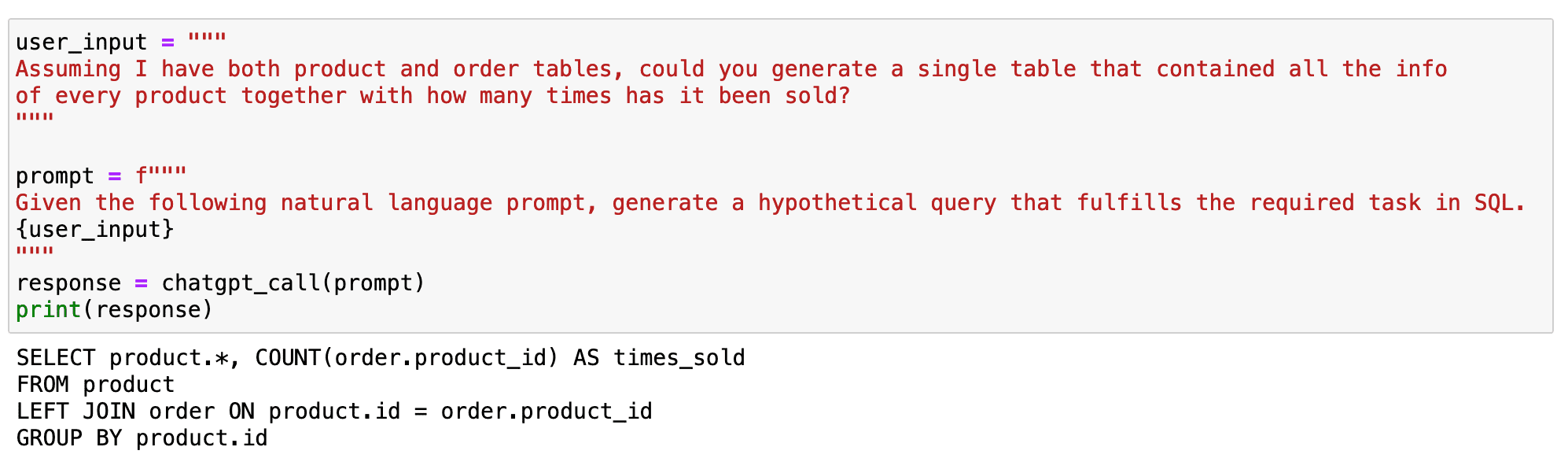

## Code Block

user_input = """

Assuming I have both product and order tables, could you generate a single table that contained all the info

of every product together with how many times has it been sold?

"""

prompt = f"""

Given the following natural language prompt, generate a hypothetical query that fulfills the required task in SQL.

{user_input}

"""

response = chatgpt_call(prompt)

print(response)

However, and as you already know, the more context we give to the model, the better outputs it will generate.

Screenshot of my Jupyter Notebook. Prompting GPT.

Throughout this tutorial I am splitting the input prompts into the specific demand of the user and the high-level behaviour expected from the model. This is a good practice to improve our interaction with the LLM and be more concise in our prompts. You can learn more in the following article.

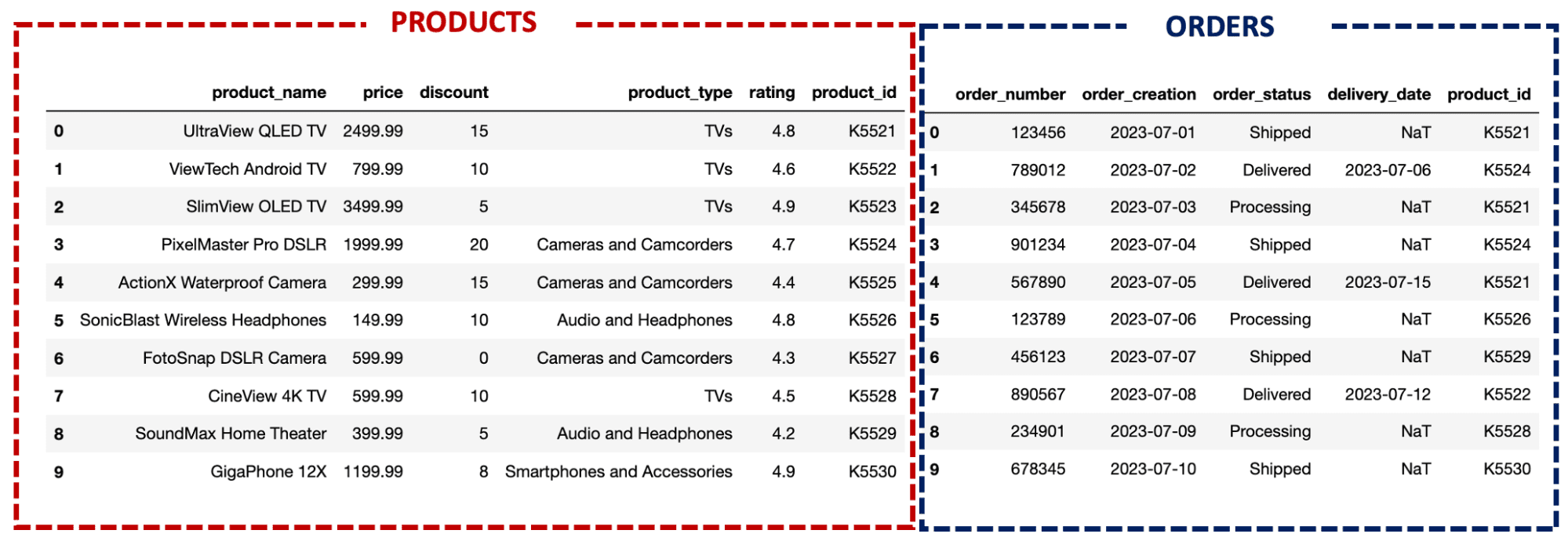

So let’s imagine I am working with two main tables: PRODUCTS and ORDERS

Image by Author. Tables to be used throughout the tutorial.

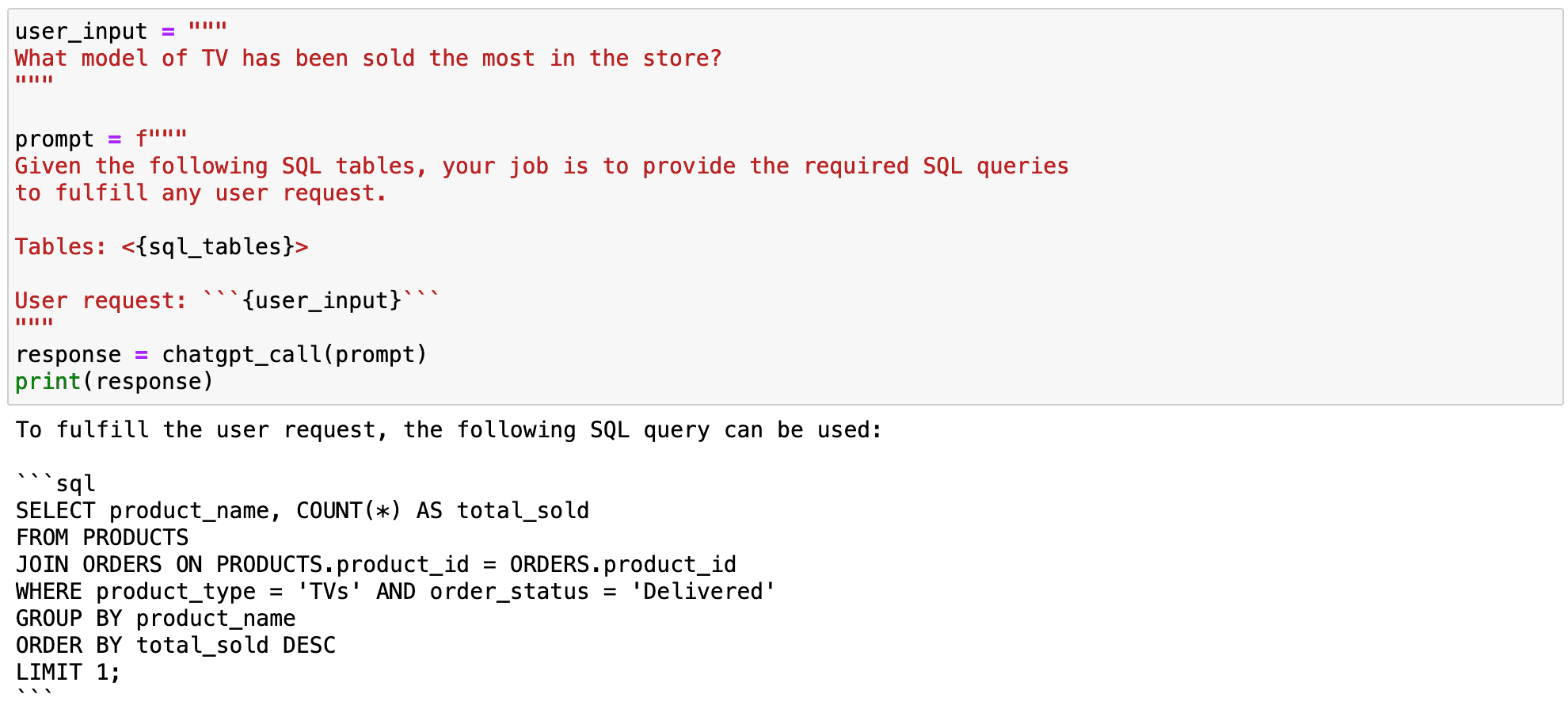

If I ask GPT for a simple query, the model will give a solution right away, just as it did in the beginning, but with specific tables for my case.

## Code Block

user_input = """

What model of TV has been sold the most in the store?

"""

prompt = f"""

Given the following SQL tables, your job is to provide the required SQL queries to fulfil any user request.

Tables: <{sql_tables}>

User request: ```{user_input}```

"""

response = chatgpt_call(prompt)

print(response)

You can find the sql_tables in the end of this article!

And the output looks like as follows!

Screenshot of my Jupyter Notebook. Prompting GPT.

However, we can observe some problems in the previous output.

- The computation is partially wrong, as it is only considering those TVs that have been already delivered. And any issued order-be it delivered or not-should be considered as a sale.

- The query is not formatted as I would like it to be.

So first let’s focus on showing the model how to compute the required query.

#1. Fixing some misunderstandings of the model

In this first case, the model considers only those products that have been delivered as sold, but this is not true. We can simply fix this misunderstanding by displaying two different examples where I compute similar queries.

## Few_shot examples

fewshot_examples = """

-------------- FIRST EXAMPLE

User: What model of TV has been sold the most in the store when considering all issued orders.

System: You first need to join both orders and products tables, filter only those orders that correspond to TVs

and count the number of orders that have been issued:

SELECT P.product_name AS model_of_tv, COUNT(*) AS total_sold

FROM products AS P

JOIN orders AS O ON P.product_id = O.product_id

WHERE P.product_type = 'TVs'

GROUP BY P.product_name

ORDER BY total_sold DESC

LIMIT 1;

-------------- SECOND EXAMPLE

User: What's the sold product that has been already delivered the most?

System: You first need to join both orders and products tables, count the number of orders that have

been already delivered and just keep the first one:

SELECT P.product_name AS model_of_tv, COUNT(*) AS total_sold

FROM products AS P

JOIN orders AS O ON P.product_id = O.product_id

WHERE P.order_status = 'Delivered'

GROUP BY P.product_name

ORDER BY total_sold DESC

LIMIT 1;

"""

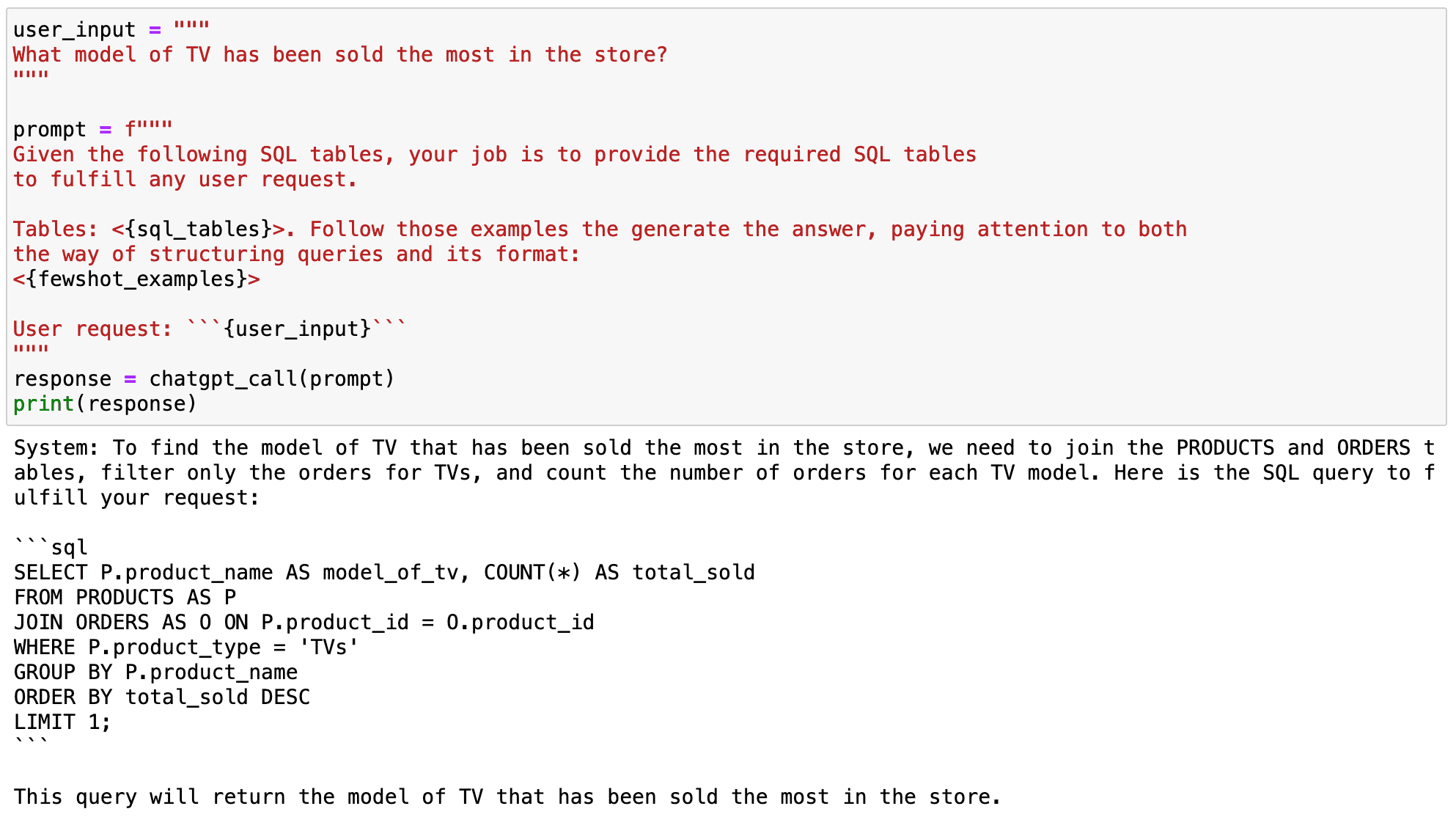

And now if we prompt again the model and include the previous examples on it, one can see that the corresponding query will not be only correct-the previous query was already working-but will also consider sales as we want it to!

## Code Block

user_input = """

What model of TV has been sold the most in the store?

"""

prompt = f"""

Given the following SQL tables, your job is to provide the required SQL tables

to fulfill any user request.

Tables: <{sql_tables}>. Follow those examples the generate the answer, paying attention to both

the way of structuring queries and its format:

<{fewshot_examples}>

User request: ```{user_input}```

"""

response = chatgpt_call(prompt)

print(response)

With the following output:

Screenshot of my Jupyter Notebook. Prompting GPT.

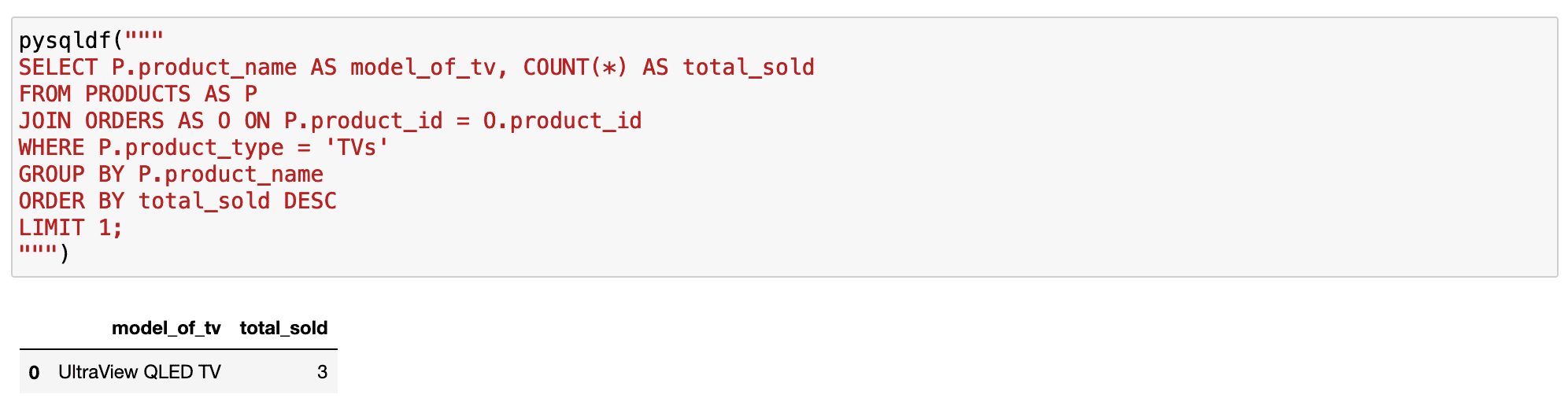

Now if we check the corresponding query…

## Code Block

pysqldf("""

SELECT P.product_name AS model_of_tv, COUNT(*) AS total_sold

FROM PRODUCTS AS P

JOIN ORDERS AS O ON P.product_id = O.product_id

WHERE P.product_type = 'TVs'

GROUP BY P.product_name

ORDER BY total_sold DESC

LIMIT 1;

""")

It works perfectly!

Screenshot of my Jupyter Notebook. Prompting GPT.

#2. Formatting SQL Queries

Few-short prompting can also be a way to customise the model for our own purpose or style.

If we go back to the examples before, the queries had no format at all. And we all know there are some good practices-together with some personal oddities-that allow us to better read SQL queries.

This is why we can use few-shot prompting to show the model the way we like to query - with our good practices or just our oddities-and train the model to give us our formatted desired SQL queries.

So, now I will prepare the same examples as before but following my format preferences.

## Code Block

fewshot_examples = """

---- EXAMPLE 1

User: What model of TV has been sold the most in the store when considering all issued orders.

System: You first need to join both orders and products tables, filter only those orders that correspond to TVs

and count the number of orders that have been issued:

SELECT

P.product_name AS model_of_tv,

COUNT(*) AS total_sold

FROM products AS P

JOIN orders AS O

ON P.product_id = O.product_id

WHERE P.product_type = 'TVs'

GROUP BY P.product_name

ORDER BY total_sold DESC

LIMIT 1;

---- EXAMPLE 2

User: What is the latest order that has been issued?

System: You first need to join both orders and products tables and filter by the latest order_creation datetime:

SELECT

P.product_name AS model_of_tv

FROM products AS P

JOIN orders AS O

ON P.product_id = O.product_id

WHERE O.order_creation = (SELECT MAX(order_creation) FROM orders)

GROUP BY p.product_name

LIMIT 1;

"""

Once the examples have been defined, we can input them into the model so that it can mimic the style showcased.

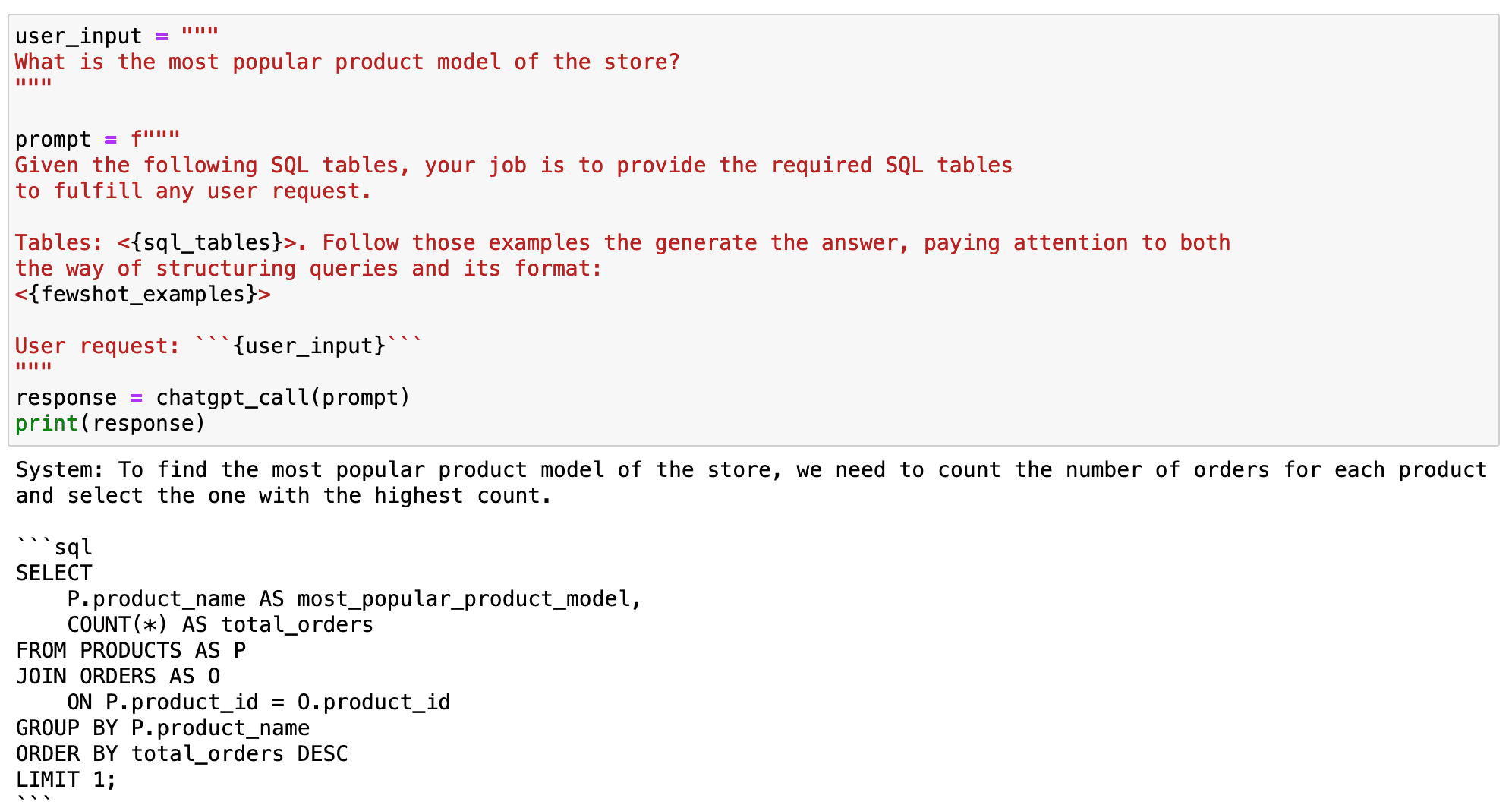

As you can observe in the following code box, after showing GPT what we expect from it, it replicates the style of the given examples to produce any new output accordingly.

## Code Block

user_input = """

What is the most popular product model of the store?

"""

prompt = f"""

Given the following SQL tables, your job is to provide the required SQL tables

to fulfill any user request.

Tables: <{sql_tables}>. Follow those examples the generate the answer, paying attention to both

the way of structuring queries and its format:

<{fewshot_examples}>

User request: ```{user_input}```

"""

response = chatgpt_call(prompt)

print(response)

And as you can observe in the following output, it worked!

Screenshot of my Jupyter Notebook. Prompting GPT.

#3. Training the model to compute some specific variable.

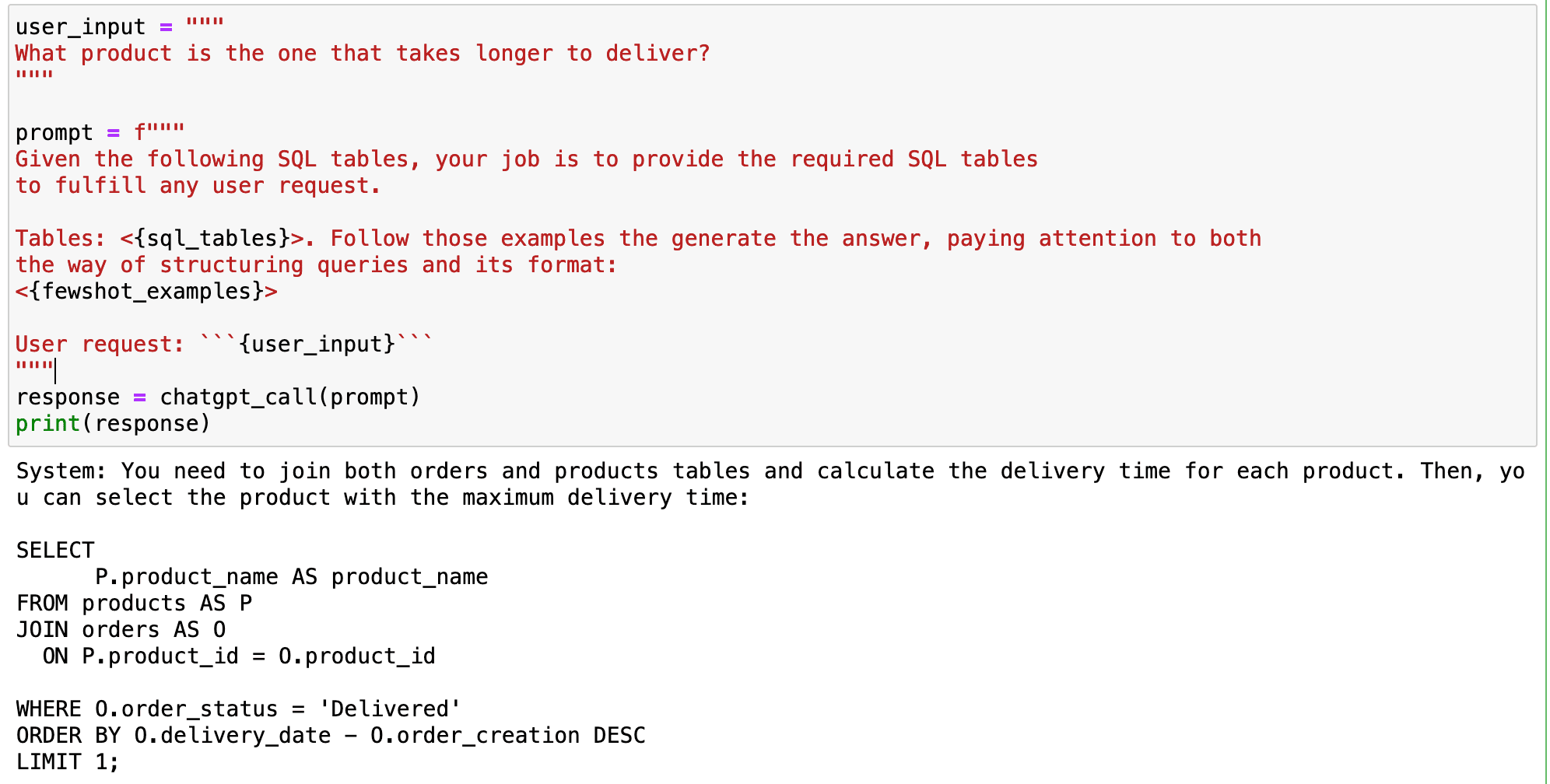

Let’s dive deeper into an illustrative scenario. Suppose we aim to compute which product takes the longest to deliver. We pose this question to the model in natural language, expecting a correct SQL query.

## Code Block

user_input = """

What product is the one that takes longer to deliver?

"""

prompt = f"""

Given the following SQL tables, your job is to provide the required SQL tables

to fulfill any user request.

Tables: <{sql_tables}>. Follow those examples the generate the answer, paying attention to both

the way of structuring queries and its format:

<{fewshot_examples}>

User request: ```{user_input}```

"""

response = chatgpt_call(prompt)

print(response)

Yet, the answer we receive is far from correct.

Screenshot of my Jupyter Notebook. Prompting GPT.

What went wrong?

The GPT model attempts to calculate the difference between two datetime SQL variables directly. This computation is incompatible with most SQL versions, creating an issue, especially for SQLite users.

How do we rectify this problem?

The solution is right under our noses-we resort back to few-shot prompting.

By demonstrating to the model how we typically compute time variables-in this case, the delivery time-we train it to replicate the process whenever it encounters similar variable types.

For example, SQLite users may use the julianday() function. This function converts any date into the number of days that have elapsed since the initial epoch in the Julian calendar.

This could help GPT model to handle date differences in SQLite database better.

## Adding one more example

fewshot_examples += """

------ EXAMPLE 4

User: Compute the time that it takes to delivery every product?

System: You first need to join both orders and products tables, filter only those orders that have

been delivered and compute the difference between both order_creation and delivery_date.:

SELECT

P.product_name AS product_with_longest_delivery,

julianday(O.delivery_date) - julianday(O.order_creation) AS TIME_DIFF

FROM

products AS P

JOIN

orders AS O ON P.product_id = O.product_id

WHERE

O.order_status = 'Delivered';

"""

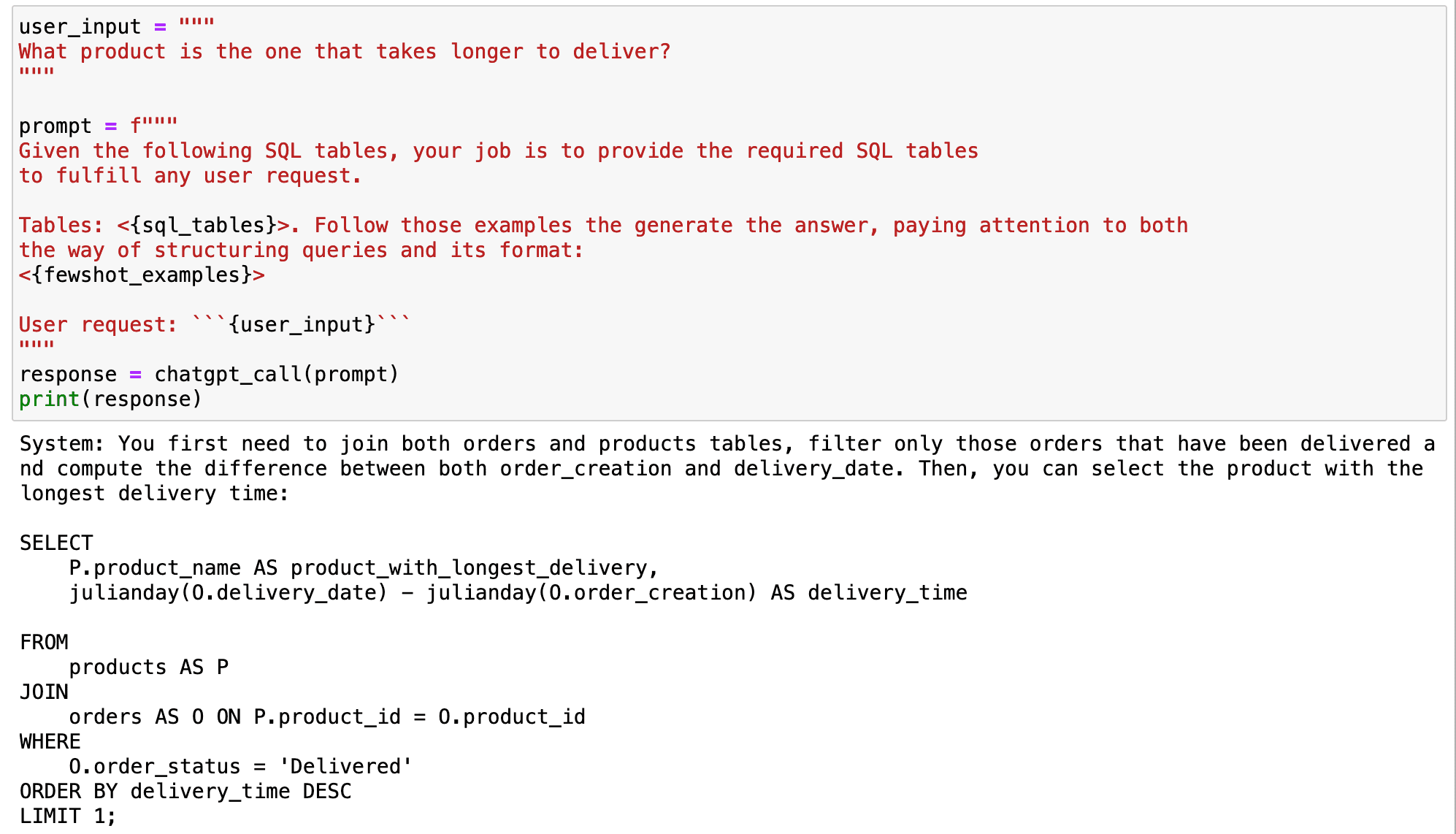

When we use this method as an example for the model, it learns our preferred way of computing the delivery time. This makes the model better suited to generate functional SQL queries that are customised to our specific environment.

If we use the previous example as an input, the model will replicate the way we compute the delivery time and will provide functional queries for our concrete environment from now on.

## Code Block

user_input = """

What product is the one that takes longer to deliver?

"""

prompt = f"""

Given the following SQL tables, your job is to provide the required SQL tables

to fulfill any user request.

Tables: <{sql_tables}>. Follow those examples the generate the answer, paying attention to both

the way of structuring queries and its format:

<{fewshot_examples}>

User request: ```{user_input}```

"""

response = chatgpt_call(prompt)

print(response)

Screenshot of my Jupyter Notebook. Prompting GPT.

Summary

In conclusion, the GPT model is an excellent tool for converting natural language into SQL queries.

However, it’s not perfect.

The model may not be able to understand context-aware queries or specific operations without proper training.

By using few-shot prompting, we can guide the model to understand our query style and computing preferences.

This allows us to fully harness the power of the GPT model in our data science workflows, turning the model into a powerful tool that adapts to our unique needs.

From unformatted queries to perfectly customised SQL queries, GPT models bring the magic of personalization to our fingertips!

You can go check my code directly in my GitHub.

## SQL TABLES

sql_tables = """

CREATE TABLE PRODUCTS (

product_name VARCHAR(100),

price DECIMAL(10, 2),

discount DECIMAL(5, 2),

product_type VARCHAR(50),

rating DECIMAL(3, 1),

product_id VARCHAR(100)

);

INSERT INTO PRODUCTS (product_name, price, discount, product_type, rating, product_id)

VALUES

('UltraView QLED TV', 2499.99, 15, 'TVs', 4.8, 'K5521'),

('ViewTech Android TV', 799.99, 10, 'TVs', 4.6, 'K5522'),

('SlimView OLED TV', 3499.99, 5, 'TVs', 4.9, 'K5523'),

('PixelMaster Pro DSLR', 1999.99, 20, 'Cameras and Camcorders', 4.7, 'K5524'),

('ActionX Waterproof Camera', 299.99, 15, 'Cameras and Camcorders', 4.4, 'K5525'),

('SonicBlast Wireless Headphones', 149.99, 10, 'Audio and Headphones', 4.8, 'K5526'),

('FotoSnap DSLR Camera', 599.99, 0, 'Cameras and Camcorders', 4.3, 'K5527'),

('CineView 4K TV', 599.99, 10, 'TVs', 4.5, 'K5528'),

('SoundMax Home Theater', 399.99, 5, 'Audio and Headphones', 4.2, 'K5529'),

('GigaPhone 12X', 1199.99, 8, 'Smartphones and Accessories', 4.9, 'K5530');

CREATE TABLE ORDERS (

order_number INT PRIMARY KEY,

order_creation DATE,

order_status VARCHAR(50),

product_id VARCHAR(100)

);

INSERT INTO ORDERS (order_number, order_creation, order_status, delivery_date, product_id)

VALUES

(123456, '2023-07-01', 'Shipped','', 'K5521'),

(789012, '2023-07-02', 'Delivered','2023-07-06', 'K5524'),

(345678, '2023-07-03', 'Processing','', 'K5521'),

(901234, '2023-07-04', 'Shipped','', 'K5524'),

(567890, '2023-07-05', 'Delivered','2023-07-15', 'K5521'),

(123789, '2023-07-06', 'Processing','', 'K5526'),

(456123, '2023-07-07', 'Shipped','', 'K5529'),

(890567, '2023-07-08', 'Delivered','2023-07-12', 'K5522'),

(234901, '2023-07-09', 'Processing','', 'K5528'),

(678345, '2023-07-10', 'Shipped','', 'K5530');

"""

Josep Ferrer is an analytics engineer from Barcelona. He graduated in physics engineering and is currently working in the Data Science field applied to human mobility. He is a part-time content creator focused on data science and technology. You can contact him on LinkedIn, Twitter or Medium.