An Overview of Python Deep Learning Frameworks


Read this concise overview of leading Python deep learning frameworks, including Theano, Lasagne, Blocks, TensorFlow, Keras, MXNet, and PyTorch.

By Madison May, indico.

I recently stumbled across an old Data Science Stack Exchange answer of mine on the topic of the “Best Python library for neural networks”, and it struck me how much the Python deep learning ecosystem has evolved over the course of the past 2.5 years. The library I recommended in July 2014, pylearn2, is no longer actively developed or maintained, but a whole host of deep learning libraries have sprung up to take its place. Each has its own strengths and weaknesses. We’ve used most of the technologies on this list in production or development at indico, but for the few that we haven’t, I’ll pull from the experiences of others to help give a clear, comprehensive picture of the Python deep learning ecosystem of 2017.

In particular, we’ll be looking at:

- Theano

- Lasagne

- Blocks

- TensorFlow

- Keras

- MXNet

- PyTorch

Theano

Description:

Theano is a Python library that allows you to define, optimize, and evaluate mathematical expressions involving multi-dimensional arrays efficiently. It works with GPUs and performs efficient symbolic differentiation.

Documentation:

http://deeplearning.net/software/theano/

Summary:

Theano is the numerical computing workhorse that powers many of the other deep learning frameworks on our list. It was built by Frédéric Bastien and the excellent research team behind the University of Montreal’s lab, MILA. Its API is quite low level, and in order to write effective Theano you need to be quite familiar with the algorithms that are hidden away behind the scenes in other frameworks. Theano is a go-to library if you have substantial academic machine learning expertise, are looking for very fine grained control of your models, or want to implement a novel or unusual model. In general, Theano trades ease of use for flexibility.
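To make the "define, optimize, and evaluate" workflow and symbolic differentiation concrete, here is a toy sketch in plain Python. It is not Theano. An expression is built as a graph of hypothetical `Var`, `Const`, `Add`, and `Mul` nodes, and differentiating it returns a new symbolic expression rather than a number. Real Theano code would use `theano.tensor.grad` and `theano.function`, which also optimize and compile the graph (often for a GPU). This sketch skips all of that.

```python
from dataclasses import dataclass


class Expr:
    """Base class for a toy symbolic expression graph (hypothetical, not Theano's API)."""

    def __add__(self, other):
        return Add(self, lift(other))

    def __mul__(self, other):
        return Mul(self, lift(other))


def lift(value):
    """Wrap plain numbers as constants so they can join the graph."""
    return value if isinstance(value, Expr) else Const(float(value))


@dataclass(frozen=True)
class Const(Expr):
    value: float

    def eval(self, env):
        return self.value

    def grad(self, wrt):
        return Const(0.0)


@dataclass(frozen=True)
class Var(Expr):
    name: str

    def eval(self, env):
        return env[self.name]

    def grad(self, wrt):
        return Const(1.0 if self.name == wrt.name else 0.0)


@dataclass(frozen=True)
class Add(Expr):
    left: Expr
    right: Expr

    def eval(self, env):
        return self.left.eval(env) + self.right.eval(env)

    def grad(self, wrt):
        # Sum rule: d(a + b) = da + db, returned as a new graph
        return Add(self.left.grad(wrt), self.right.grad(wrt))


@dataclass(frozen=True)
class Mul(Expr):
    left: Expr
    right: Expr

    def eval(self, env):
        return self.left.eval(env) * self.right.eval(env)

    def grad(self, wrt):
        # Product rule: d(a * b) = da * b + a * db, returned as a new graph
        return Add(Mul(self.left.grad(wrt), self.right),
                   Mul(self.left, self.right.grad(wrt)))


x = Var("x")
y = x * x + x * 3              # symbolic y = x^2 + 3x; nothing is computed yet
dy_dx = y.grad(x)              # another symbolic expression, equivalent to 2x + 3
print(y.eval({"x": 2.0}))      # 10.0
print(dy_dx.eval({"x": 2.0}))  # 7.0
```

Because the gradient is itself a graph, a library like Theano can simplify it before any numbers flow through it. That is part of why graph compilation takes time, and why the resulting functions can be fast.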

Pros:

- Flexible

- Performant if used properly

Cons:

- Substantial learning curve

- Lower level API

- Compiling complex symbolic graphs can be slow

Resources:

- Installation guide

- Official Theano tutorial

- Theano slideshow and practice exercises

- From linear regression to CNNs with Theano

- Introduction to Deep Learning with Python & Theano (MNIST video tutorial)

Lasagne

Description:

Lightweight library for building and training neural networks in Theano.

Documentation:

http://lasagne.readthedocs.org/

Summary:

Since Theano aims first and foremost to be a library for symbolic mathematics, Lasagne offers abstractions on top of Theano that make it more suitable for deep learning. It’s written and maintained primarily by Sander Dieleman, a current DeepMind research scientist. Instead of specifying network models in terms of function relationships between symbolic variables, Lasagne allows users to think at the Layer level, offering building blocks like “Conv2DLayer” and “DropoutLayer” for users to work with. Lasagne requires little sacrifice in terms of flexibility while providing a wealth of common components to help with layer definition, layer initialization, model regularization, model monitoring, and model training.
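The "think at the Layer level" idea can be sketched without Theano. In the toy below, each layer is built from the layer feeding into it, and asking the last layer for its output walks back up the chain. The class names echo Lasagne's, but these are hypothetical plain-Python stand-ins on lists of floats, not Lasagne's implementation. The `deterministic` flag mimics the common convention of switching dropout off at evaluation time.

```python
import random


class InputLayer:
    """Hypothetical toy layer: passes its inputs through unchanged."""

    def get_output(self, inputs, deterministic=False):
        return list(inputs)


class DenseLayer:
    """Hypothetical toy fully connected layer with a ReLU nonlinearity."""

    def __init__(self, incoming, weights, bias):
        self.incoming = incoming
        self.weights = weights  # one row of input weights per output unit
        self.bias = bias

    def get_output(self, inputs, deterministic=False):
        x = self.incoming.get_output(inputs, deterministic)
        # ReLU(W x + b), computed one output unit at a time
        return [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(self.weights, self.bias)]


class DropoutLayer:
    """Hypothetical toy dropout layer using inverted dropout."""

    def __init__(self, incoming, p=0.5, seed=0):
        self.incoming = incoming
        self.p = p
        self.rng = random.Random(seed)

    def get_output(self, inputs, deterministic=False):
        x = self.incoming.get_output(inputs, deterministic)
        if deterministic or self.p == 0:
            return x  # dropout is disabled at evaluation time
        keep = 1.0 - self.p
        # Zero each unit with probability p and rescale survivors by 1 / keep
        return [xi / keep if self.rng.random() < keep else 0.0 for xi in x]


l_in = InputLayer()
l_hidden = DenseLayer(l_in, weights=[[1.0, -1.0], [0.5, 0.5]], bias=[0.0, 1.0])
l_out = DropoutLayer(l_hidden, p=0.5)
print(l_out.get_output([3.0, 1.0], deterministic=True))  # [2.0, 3.0]
```

The model is described entirely by which layer feeds which. Initialization, regularization, and training utilities can then be written against layers rather than against raw symbolic variables.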

Pros:

- Still very flexible

- Higher layer of abstraction than Theano

- Docs and code contain an assortment of pasta puns

Cons:

- Smaller community

Resources:

Blocks

Description:

A Theano framework for building and training neural networks.

Documentation:

http://blocks.readthedocs.io/en/latest/

Summary:

Similar to Lasagne, Blocks is a shot at adding a layer of abstraction on top of Theano to facilitate cleaner, simpler, more standardized definitions of deep learning models than writing raw Theano. It’s written by the University of Montreal’s lab, MILA, some of the same folks who contributed to the building of Theano and its first high level interface to neural network definitions, the now-defunct pylearn2. It’s a bit more flexible than Lasagne at the cost of having a slightly more difficult learning curve to use effectively. Among other things, Blocks has excellent support for recurrent neural network architectures, so it’s worth a look if you’re interested in exploring that genre of model. Alongside TensorFlow, Blocks is the library of choice for many of the APIs we’ve deployed to production at indico.
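For readers new to recurrent models, here is the core computation a simple recurrent layer performs. The same weights are applied at every time step, and each hidden state depends on the previous one. This is a scalar, plain-Python sketch with a hypothetical `simple_rnn` function, not Blocks' API. Theano-based libraries typically express this loop symbolically, for example with `theano.scan`, so that it can be differentiated and compiled.

```python
import math


def simple_rnn(inputs, w_in, w_rec, b, h0=0.0):
    """Toy Elman-style recurrence: h_t = tanh(w_in * x_t + w_rec * h_{t-1} + b).

    Hypothetical helper for illustration; real recurrent layers use weight
    matrices and vector-valued hidden states.
    """
    h = h0
    states = []
    for x in inputs:
        # The same (w_in, w_rec, b) are reused at every time step
        h = math.tanh(w_in * x + w_rec * h + b)
        states.append(h)
    return states


print(len(simple_rnn([1.0, 0.0, -1.0], w_in=0.5, w_rec=0.9, b=0.0)))  # 3
```

Because the loop length follows the input sequence, a symbolic library has to represent the loop itself in the graph. That is where good RNN support becomes a real differentiator between frameworks.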

Pros:

- Still very flexible

- Higher layer of abstraction than Theano

- Very well tested

Cons:

- Substantial learning curve

- Smaller community

Resources:

- Official installation guide

- Arxiv paper on the design of the Blocks library

- A reddit discussion on the differences between Blocks and Lasagne

- Blocks’ sister library for data pipelines, Fuel

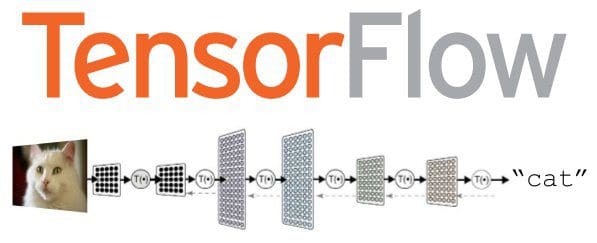

TensorFlow

Description:

An open source software library for numerical computation using data flow graphs.

Documentation:

https://www.tensorflow.org/api_docs/python/

Summary:

TensorFlow is a blend between lower level, symbolic computation libraries like Theano, and higher level, network specification libraries like Blocks and Lasagne. Although it’s one of the newer members of the Python deep learning library collection, it has likely garnered the largest active community because it’s backed by the Google Brain team. It offers support for running machine learning models across multiple GPUs, provides utilities for efficient data pipelining, and has built-in modules for the inspection, visualization, and serialization of models. More recently, the TensorFlow team decided to incorporate support for Keras, the next deep learning library on our list. The community seems to agree that although TensorFlow has its shortcomings, the sheer size of its community and the massive amount of momentum behind the project mean that learning TensorFlow is a safe bet. Consequently, TensorFlow is our deep learning library of choice today at indico.
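"Data flow graph" means that you first describe the computation, then ask for a particular result while feeding in concrete inputs. Only the part of the graph that result depends on is executed. The sketch below imitates that workflow in plain Python, loosely in the spirit of TensorFlow 1.x's placeholders and `Session.run`. The `Node`, `placeholder`, and `run` names here are hypothetical and are not TensorFlow's API.

```python
class Node:
    """A node in a toy dataflow graph: an operation plus the nodes it reads from."""

    def __init__(self, op, *inputs, name=None):
        self.op = op          # callable applied to input values; None for placeholders
        self.inputs = inputs
        self.name = name


def placeholder(name):
    """A graph input whose value is supplied at run time (hypothetical helper)."""
    return Node(None, name=name)


def run(fetch, feed_dict):
    """Evaluate `fetch`, computing each upstream node at most once.

    Only nodes that `fetch` depends on are executed; placeholders take
    their values from `feed_dict`.
    """
    cache = {}

    def evaluate(node):
        if node in cache:
            return cache[node]
        if node.op is None:
            value = feed_dict[node.name]
        else:
            value = node.op(*(evaluate(n) for n in node.inputs))
        cache[node] = value
        return value

    return evaluate(fetch)


a = placeholder("a")
b = placeholder("b")
total = Node(lambda u, v: u + v, a, b)
squared = Node(lambda u, v: u * v, total, total)  # shared node, evaluated once
unused = Node(lambda v: 1 / v, b)                 # never executed unless fetched
print(run(squared, {"a": 5.0, "b": 0.0}))         # 25.0, with no division by zero
```

Separating graph construction from execution is what lets a framework place nodes on different devices, prune unneeded work, and serialize the model independently of the Python code that built it.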

Pros:

- Backed by software giant Google

- Very large community

- Low level and high level interfaces to network training

- Faster model compilation than Theano-based options

- Clean multi-GPU support

Cons:

- Initially slower on many benchmarks than Theano-based options, although TensorFlow is catching up.

- RNN support is still outclassed by Theano

Resources:

- Official TensorFlow website

- Download and setup guide

- indico’s take on TensorFlow

- A collection of TensorFlow tutorials

- A Udacity machine learning course taught using TensorFlow

- TensorFlow MNIST tutorial

- TensorFlow data input

Keras

Description:

Deep learning library for Python. Convnets, recurrent neural networks, and more. Runs on Theano or TensorFlow.

Documentation:

Summary:

Keras is probably the highest level, most user friendly library of the bunch. It’s written and maintained by François Chollet, another member of the Google Brain team. It allows users to choose whether the models they build are executed on Theano’s or TensorFlow’s symbolic graph. Keras’ user interface is Torch-inspired, so if you have prior experience with machine learning in Lua, Keras is definitely worth a look. Thanks in part to excellent documentation and its relative ease of use, the Keras community is quite large and very active. Recently, the TensorFlow team announced plans to ship with Keras support built in, so soon Keras will be a subset of the TensorFlow project.
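As we understand Keras of this era, the backend choice lives in a `keras.json` file under `~/.keras/`, and a `KERAS_BACKEND` environment variable overrides it. The sketch below encodes that precedence so the choice is easy to reason about. The helper names and the `"tensorflow"` default are our assumptions, and this is not Keras' own code. Check the Keras documentation for your installed version.

```python
import json
import os

# Assumed default; Keras has shipped with different defaults over time.
DEFAULT_BACKEND = "tensorflow"


def load_keras_config(path=None):
    """Return the parsed keras.json config, or None if the file does not exist."""
    if path is None:
        path = os.path.join(os.path.expanduser("~"), ".keras", "keras.json")
    if not os.path.exists(path):
        return None
    with open(path) as f:
        return json.load(f)


def resolve_keras_backend(config=None, environ=None, default=DEFAULT_BACKEND):
    """Hypothetical helper: KERAS_BACKEND env var > config "backend" key > default."""
    environ = os.environ if environ is None else environ
    backend = default
    if config is not None:
        backend = config.get("backend", backend)
    return environ.get("KERAS_BACKEND", backend)


print(resolve_keras_backend({"backend": "theano"}, environ={}))  # theano
print(resolve_keras_backend({"backend": "theano"},
                            environ={"KERAS_BACKEND": "tensorflow"}))  # tensorflow
```

The practical upshot is that the same model definition can run on either backend by changing configuration rather than code.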

Pros:

- Your choice of a Theano or TensorFlow backend

- Intuitive, high level interface

- Easier learning curve

Cons:

- Less flexible, more prescriptive than other options

Resources:

- Official installation guide

- Keras users Google group

- Repository of Keras examples

- Instructions for using Keras with Docker

- Repository of Keras tutorials by application area

MXNet

Description:

MXNet is a deep learning framework designed for both efficiency and flexibility.

Documentation:

http://mxnet.io/api/python/index.html#python-api-reference

Summary:

MXNet is Amazon’s library of choice for deep learning, and is perhaps the most performant library of the bunch. It has a data flow graph similar to those of Theano and TensorFlow, offers good support for multi-GPU configurations, has higher level model building blocks similar to those of Lasagne and Blocks, and can run on just about any hardware you can imagine (including mobile phones). Python support is just the tip of the iceberg: MXNet also offers interfaces to R, Julia, C++, Scala, MATLAB, and JavaScript. Choose MXNet if you’re looking for performance that’s second to none, but be prepared to deal with a few of MXNet’s quirks to get there.
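Multi-GPU support in frameworks like MXNet usually means data parallelism. Each device computes gradients on its own slice of the batch, and the partial gradients are then combined. In MXNet that combining step is handled by its key-value store (KVStore). The sketch below simulates the idea on a one-variable linear regression in plain Python, with lists standing in for devices. The function names are hypothetical. It shows that summing per-shard gradients reproduces the full-batch gradient.

```python
def squared_error_grad(w, b, xs, ys):
    """Gradient of sum((w*x + b - y)^2) with respect to (w, b) for one shard."""
    gw = gb = 0.0
    for x, y in zip(xs, ys):
        err = w * x + b - y
        gw += 2 * err * x
        gb += 2 * err
    return gw, gb


def data_parallel_grad(w, b, xs, ys, num_devices):
    """Split the batch across simulated devices, then reduce their gradients.

    Each shard's gradient could be computed on a separate GPU; summing the
    partial gradients and dividing by the batch size gives the mean gradient.
    """
    shards = [(xs[i::num_devices], ys[i::num_devices]) for i in range(num_devices)]
    partials = [squared_error_grad(w, b, sx, sy) for sx, sy in shards]
    batch_size = len(xs)
    # Reduction step: sum the per-device gradients, then average over the batch
    gw = sum(p[0] for p in partials) / batch_size
    gb = sum(p[1] for p in partials) / batch_size
    return gw, gb


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
print(data_parallel_grad(0.5, 0.0, xs, ys, num_devices=2))  # (-22.0, -8.0)
```

Because the reduction is a plain sum, adding devices changes where the work happens but not the result, up to floating-point rounding. The engineering challenge a framework takes on is making that reduction fast.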

Pros:

- Blazing fast benchmarks

- Extremely flexible

Cons:

- Smallest community

- Steeper learning curve than Theano

Resources:

- Official getting started guide

- indico’s intro to MXNet

- Repository of MXNet examples

- Amazon’s CTO’s take on MXNet

- MXNet Arxiv paper

PyTorch

Description:

Tensors and dynamic neural networks in Python with strong GPU acceleration.

Documentation:

Summary:

Released just over a week ago, PyTorch is the new kid on the block in our list of deep learning frameworks for Python. It’s a loose port of Lua’s Torch library to Python, and is notable because it’s backed by the Facebook Artificial Intelligence Research team (FAIR), and because it’s designed to handle dynamic computation graphs, a feature absent from the likes of Theano, TensorFlow, and their derivatives. The jury is still out on what role PyTorch will play in the Python deep learning ecosystem, but all signs point to PyTorch being a very respectable alternative to the other frameworks on our list.
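"Dynamic computation graphs" (often called define-by-run) means the graph is recorded while ordinary Python code executes. Loops and `if` statements can therefore change the graph from one call to the next. Here is a toy scalar reverse-mode autodiff in that spirit, in contrast to the symbolic Theano-style sketch. The `Value` class and `f` function are hypothetical. The `backward()` and `.grad` names echo PyTorch's autograd, but this is not PyTorch's implementation.

```python
class Value:
    """Toy scalar that records operations as they run (define-by-run autodiff)."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward_fn = None  # pushes this node's grad into its parents

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def backward_fn():
            self.grad += out.grad
            other.grad += out.grad

        out._backward_fn = backward_fn
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def backward_fn():
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward_fn = backward_fn
        return out

    def backward(self):
        # Order the recorded graph so each node follows its parents,
        # then apply the chain rule from the output back to the inputs
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            if node._backward_fn is not None:
                node._backward_fn()


def f(x, n):
    # Ordinary Python control flow decides the graph's shape on each call
    y = x
    for _ in range(n):
        y = y * x
    if x.data < 0:
        y = y + x * 3
    return y


x = Value(2.0)
y = f(x, 2)            # records y = x * x * x while it runs
y.backward()
print(y.data, x.grad)  # 8.0 12.0
```

Since nothing is compiled ahead of time, debugging is just Python debugging, and models with input-dependent structure (variable-length sequences, trees) need no special graph constructs.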

Pros:

- Organizational backing from Facebook

- Clean support for dynamic graphs

- Blend of high level and low level APIs

Cons:

- Much less mature than alternatives (in their own words — “We are in an early-release Beta. Expect some adventures.”)

- Limited references / resources outside of the official documentation

Resources:

- Official PyTorch homepage

- PyTorch twitter feed

- Repository of PyTorch examples

- Repository of PyTorch tutorials

Bio: Madison May is a developer, designer, and engineer, and is the CTO of indico Data Solutions.

Original. Reposted with permission.
