The Data Science Project Playbook

Keep your development team from getting mired in high-complexity, low-return projects by following this practical playbook.

By Matthew Coffman, High Alpha.

This post is a follow-up to "Four Big Ideas Shaping Product Strategy Today".

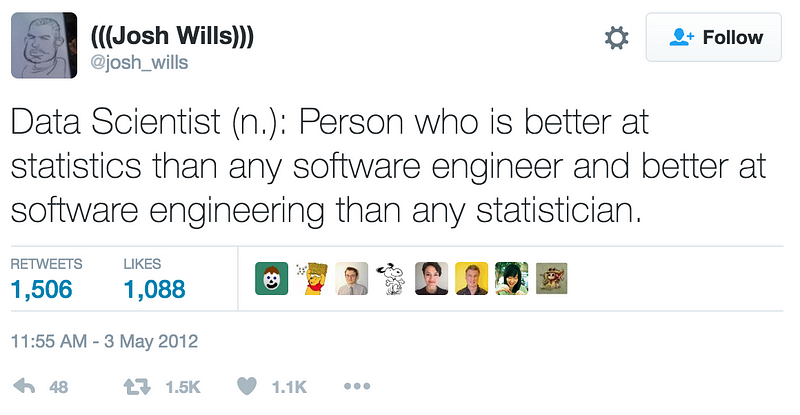

Josh Wills, head of data engineering at Slack, takes a practical view of data science.

Given how often we hear and talk about machine learning and AI as emerging ways for startups to differentiate themselves, I’ve been looking for small first steps that startups can take to find and build valuable data science projects on their own. I recently attended MLconf 2016, an event that brought together a good mix of academics, product leaders, and practicing data scientists.

It was inspiring and humbling to see how bigger, more established companies are attacking these challenges. The talks were a mix of recipes, reflections, and advice. Because my background is more in engineering, some of the content was over my head. Overall, though, I learned a great deal from the presenters and other attendees, and I walked away with some thoughts on how we as startup product leaders might best tackle data science challenges. I have tried to organize those thoughts into a playbook others can use to get started.

Step One: Understand the Data Science Landscape

Data science, machine learning, and AI have clearly reached critical mass as a standalone industry. There is no shortage of platforms, tools, and algorithms from a variety of vendors to tackle just about any application. Finding experts who are available to tackle your challenges, on the other hand, is a different matter. The big companies are all at war to lure away each other’s data scientists, which doesn’t leave much opportunity for the rest of us who are looking to build the next great chatbot or insight-driven application.

If you’re lucky enough to already have a data scientist on your payroll, make this person your partner in planning and executing your projects. At the same time, keep in mind that data scientists often don’t have the same expertise and experience as other engineers in building and scaling all the other complex parts of an application. Involve both data scientists and engineers in project planning to give each project the best chance of success.

Without access to a subject matter expert, how can product leaders still pursue meaningful data science-driven features for their applications? I advocate an extremely practical approach. As with most other product planning processes, expect a number of trade-offs. Fortunately, the highly competitive market for tools and platforms means that almost any ambitious feature can be built. For a product leader, then, the focus needs to be on finding the right feature and weighing its implications.

Step Two: Identify the MVDP

Josh Wills, head of data engineering at Slack, gave a particularly interesting talk at the conference. Slack takes a distinctive view of product: the company focuses on selling not a product but a solution to a problem. Every ounce of effort is directed toward solving discrete business problems for its customers.

As product leaders, we often use the concept of a minimum viable product to identify the least amount of work required to establish that we have solved a customer’s problem. Josh advocates Minimum Viable Data Products (MVDPs) as a way to balance “the vision of what’s possible with what is necessary.” Slack chose a small set of features (channel recommendations, for example), then looked for the lowest-effort, most easily measured way to validate that each one made the customer’s experience better.
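To make the idea concrete, here is a minimal sketch of what a low-effort first version of a channel-recommendation feature might look like. It is a hypothetical illustration, not Slack’s actual method: it assumes the only data available is a mapping from each user to the set of channels they belong to, and it recommends the channels most often joined by users who share at least one channel with the target user. The function name, parameters, and example data are all made up for illustration.

```python
from collections import Counter


def recommend_channels(memberships, user, k=3):
    """Hypothetical co-membership baseline for channel recommendations.

    Ranks channels the user has not joined by how many "neighbor" users
    (users who share at least one channel with `user`) belong to them.
    """
    mine = memberships.get(user, set())
    counts = Counter()
    for other, channels in memberships.items():
        if other == user or not (channels & mine):
            continue  # skip the user themselves and users with no channel in common
        for channel in channels - mine:
            counts[channel] += 1
    # Highest neighbor count first; ties broken alphabetically so output is deterministic.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [channel for channel, _ in ranked[:k]]


# Made-up example data: user -> set of channels they belong to.
memberships = {
    "ana": {"general", "data-eng"},
    "ben": {"general", "data-eng", "ml-papers"},
    "cai": {"data-eng", "ml-papers", "oncall"},
    "dee": {"random"},
}
print(recommend_channels(memberships, "ana"))  # ['ml-papers', 'oncall']
```

A baseline like this is cheap to build and easy to measure (for example, by the share of recommended channels that users actually join). If it demonstrably helps customers, a more sophisticated model can replace it later.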

Minimum viable data products need the following to be successful:

- Real value to customers — something that enhances or deepens their relationship with the product

- Available and sufficient data — even the best algorithm can’t perform without data

- Delivery practicality — in other words, can the team deliver the capability with available resources and off-the-shelf solutions?

Product leaders can start by brainstorming features and prioritizing those with the most value to customers. Then, working with engineering leaders (and potentially data science professionals), discuss whether the data and resources exist to deliver each feature.
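One lightweight way to run this exercise is to have the team rate each brainstormed feature on the three criteria above and keep only the features that clear all three. The sketch below assumes a hypothetical 1-to-5 rating scale and a passing threshold of 3; the scale, the threshold, the `Candidate` type, and the example features are illustrative choices, not part of the playbook.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A brainstormed feature with hypothetical 1-5 ratings agreed on by the team."""
    name: str
    customer_value: int  # does it enhance or deepen the customer's relationship with the product?
    data_readiness: int  # is enough relevant data available today?
    practicality: int    # can we deliver it with current resources and off-the-shelf tools?


def shortlist(candidates, min_score=3):
    """Keep candidates that clear every criterion, highest customer value first.

    A feature that fails any single criterion is dropped, because an MVDP
    needs all three to succeed.
    """
    viable = [
        c for c in candidates
        if min(c.customer_value, c.data_readiness, c.practicality) >= min_score
    ]
    return sorted(viable, key=lambda c: (-c.customer_value, c.name))


ideas = [
    Candidate("channel recommendations", 4, 4, 4),
    Candidate("meeting summarizer", 5, 2, 2),
    Candidate("search ranking tweaks", 3, 5, 4),
]
print([c.name for c in shortlist(ideas)])
# ['channel recommendations', 'search ranking tweaks']
```

Using the minimum of the three ratings, rather than their sum, reflects the list above: a feature with high customer value but no usable data is still not a viable MVDP.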

Don’t be afraid to reduce scope: the goal here is to build something that can quickly prove a feature is valuable to customers. Once that value is established, additional layers of complexity can be added on top. With data science projects in particular, it’s important to guard against too much complexity up front so that the project actually gets off the ground.

Step Three: Find Engineer-Friendly Solutions

Our engineering and product teams are excellent at building and delivering features, but they may not yet have the experience and expertise to do all of this on their own. A data scientist brings a higher-level understanding of what a given dataset makes possible, which tool or technique is right for building a feature, and, equally important, how to put it into production. Fortunately, the internet is full of courses, learning materials, applications, and APIs that can help companies launch data science features even if they don’t have their own data scientist.

Today, almost every algorithm and technique can be pulled off the shelf. The real focus for our engineering teams should be on data preparation and loading; training and selecting models, algorithms, and tools; and deploying those tools to production. Teams shouldn’t be building anything from scratch, because doing so wastes precious resources.

With the minimum viable data product identified, it’s time to find the most practical way to build the feature. While no tool or platform is appropriate for every use case, here are some good starting points for your team:

- General-purpose machine learning platforms/prediction services: Google Prediction API, Amazon Machine Learning API, Microsoft Azure Machine Learning API, and BigML are examples of services whose APIs let your engineers feed data into either pre-built or custom-fit models that can quickly be tested and integrated into your application. This type of service is a good fit for predicting user behavior, tagging users or products in big data sets, prioritizing data sets, and more.

- Specific-purpose artificial intelligence platforms: this area seems to have the most momentum, and searching the Internet for your use case will likely turn up all kinds of new applications aimed at developers solving specific problems. Key vendors include IBM Watson (speech recognition, image recognition, translation) and Google Cloud (speech, text, image, and other services), with new startups emerging every day.

- Blogs, directories, and ML community news: as with most other areas of development, the Internet is a healthy starting point, since other teams have shared their successes and failures with data science projects there. I recommend KDnuggets and O’Reilly as good places to start exploring.

Whatever gets built will most likely combine several tools and platforms. That’s why it helps to do only the work your team needs to deliver that minimum customer value. As long as all parties are aligned around that goal, data science projects are less likely to grow out of control.

Step Four: Measure and Iterate

As with any other feature, it needs to be clear from the beginning how customer satisfaction will be measured. Given the additional complexity of data science projects, it’s even more important to create a tight loop between customer feedback and feature iteration. Because these features depend so heavily on data and potentially complicated models, it can be difficult for teams to isolate exactly why a given feature isn’t as effective as planned. The product leader plays an important role in estimating how much work each iteration will take and will likely have to make judgment calls about the value of additional work. Sometimes it may be necessary to abandon a feature altogether if it will require too much work or if its results remain unpredictable.
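When a feature is tested against a control group, one common way to check whether a change in a simple yes/no metric (for example, the share of users who join a recommended channel) is more than noise is a two-proportion z-test. The sketch below is one possible approach, not a method the playbook prescribes; it assumes users are randomly split between a control group and a group that sees the feature, and the function name and numbers in the example are hypothetical.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Compare the success rate of a control group (A) with a group that
    sees the new feature (B).

    Returns (lift, z, p_value), where lift = rate_B - rate_A and p_value is
    the two-sided p-value under the normal approximation.
    """
    rate_a = successes_a / n_a
    rate_b = successes_b / n_b
    # Pooled rate under the null hypothesis that both groups share one rate.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in outcomes; the test is undefined")
    z = (rate_b - rate_a) / se
    # For a standard normal Z, P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_b - rate_a, z, p_value


# Hypothetical numbers: 120 of 1,000 control users vs. 150 of 1,000 users
# shown recommendations joined a suggested channel.
lift, z, p = two_proportion_z_test(120, 1000, 150, 1000)
print(f"lift={lift:.3f}  z={z:.2f}  p={p:.3f}")
```

The normal approximation is only reasonable with fairly large groups. More importantly, the test says only whether a difference is likely to be real; whether a lift of that size justifies another iteration remains the product leader’s judgment call.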

A good product leader stays closely engaged with both the customer and the data. As new data science-driven features are tested with customers, watching the feedback from both of these sources is critical.

Conclusion: No Going Back

Josh Wills made a powerful observation: for many companies, data science efforts are just one part of a portfolio of product investments, and in most cases one or two of those efforts will pay for all the rest. Getting started with data science is really hard; he called it an act of faith. He said companies like Facebook, Google, and Amazon are already well past that initial hump, and data science now drives almost everything they do. Machine learning and data science are the tools those companies use to create value, and user experience becomes a function of delivering insights and opportunities to make customers’ lives easier through automation.

With a practical point of view, product leaders can begin to incorporate data science features into their own applications. While catching up with the big companies may be a challenge, we need to stay focused on the needs of our own customers and work to make their experience better in the most practical way possible.

Bio: Matthew Coffman leads Product at High Alpha, a venture studio focused on conceiving, launching, and scaling next-generation enterprise cloud companies. He works with their portfolio companies to build teams, plan technology, and solve problems that will help them grow more quickly. He is excited to be part of the Indianapolis technology community!

Original. Reposted with permission.

