Fast Big Data: Apache Flink vs Apache Spark for Streaming Data

Real-time stream processing has gained momentum in recent years, and two of the major tools enabling it are Apache Spark and Apache Flink. Learn, with the help of a case study, about data processing, data flow, and data management using these tools.

The demand for faster data processing has been increasing, and real-time streaming data processing appears to be the answer. While Apache Spark is still used in many organizations for big data processing, Apache Flink has been coming up fast as an alternative. In fact, many think that it has the potential to replace Apache Spark because of its ability to process streaming data in real time. Of course, the jury is still out on whether Flink can replace Spark, because Flink has yet to be tested widely. But real-time processing and low data latency are two of its defining characteristics. At the same time, it should be noted that Apache Spark will probably not fall out of favor, because its batch processing capabilities will remain relevant.

Case for Streaming Data Processing

For all the merits of batch-based processing, there appears to be a strong case for real-time streaming data processing. Streaming data processing makes it possible to set up and load a data warehouse quickly. A streaming processor with low data latency delivers insights on the data sooner, so you have more time to find out what is going on. Beyond quicker processing, there is another significant benefit: you have more time to design an appropriate response to events. For example, in anomaly detection, lower latency and quicker detection enable you to identify the best response, which is key to preventing damage in cases such as fraudulent attacks on a secure website or failures of industrial equipment. In this way, you can prevent substantial loss.
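The anomaly detection case can be made concrete with a minimal sketch. It is plain Python, not the Flink or Spark API, and the class name, the `threshold` and `warmup` parameters, and the sample readings are all hypothetical. Each reading is checked the moment it arrives against the running mean and standard deviation of the earlier readings (maintained with Welford's online algorithm), so a response can be triggered without waiting for a batch to complete.

```python
import math


class RunningAnomalyDetector:
    """Flags a value that lies far from the mean of the values seen so far."""

    def __init__(self, threshold: float = 3.0, warmup: int = 5):
        self.threshold = threshold  # allowed distance from the mean, in standard deviations
        self.warmup = warmup        # number of readings to collect before flagging anything
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations from the mean

    def observe(self, value: float) -> bool:
        """Check one reading as it arrives, then fold it into the statistics."""
        is_anomaly = False
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))
            if std > 0 and abs(value - self.mean) > self.threshold * std:
                is_anomaly = True

        # Welford's update: no past readings need to be stored.
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (value - self.mean)
        return is_anomaly


detector = RunningAnomalyDetector()
readings = [10.0, 10.5, 9.5, 10.2, 9.8, 10.1, 50.0, 10.0]  # made-up sensor values
flags = [detector.observe(reading) for reading in readings]
print(flags)
```

In this sketch the spike of 50.0 is flagged on arrival. Note that a flagged value is still folded into the statistics, which is a simplification; a real detector might exclude it.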

What is Apache Flink?

Apache Flink is a big data processing tool known for processing data quickly, with low latency and high fault tolerance, on large-scale distributed systems. Its defining feature is its ability to process streaming data in real time.

Apache Flink started off as an academic open source project, and back then it was known as Stratosphere. Later, it became a part of the Apache Software Foundation incubator. To avoid a naming conflict with another project, the name was changed to Flink. The name is appropriate because "flink" means agile or nimble in German. Even the logo chosen, a squirrel, is appropriate because a squirrel represents the virtues of agility, nimbleness and speed.

After it joined the Apache Software Foundation, Flink rose rather quickly as a big data processing tool, and within 8 months it had started to capture the attention of a wider audience. People’s growing interest in Flink was reflected in the attendance at several meetings in 2015. A number of people attended the sessions on Flink at the Strata Conference in London in May 2015 and at the Hadoop Summit in San Jose in June 2015. More than 60 people attended the Bay Area Apache Flink meet-up hosted at the MapR headquarters in San Jose in August 2015.

The image below gives the Lambda architecture of Flink.

Comparison between Spark and Flink

Though there are a few similarities between Spark and Flink, for example in their APIs and components, these similarities do not matter much when it comes to data processing. Given below is a comparison between Flink and Spark.

Data processing

Spark processes data in batch mode, while Flink processes streaming data in real time. Spark handles a stream as a series of small batches (micro-batches) of data, represented as RDDs, while Flink can process data row by row as it arrives. So, while some minimum data latency is always present with Spark, this is not the case with Flink.
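The latency difference between the two processing models can be illustrated with a small simulation. This is a sketch in plain Python, not Spark or Flink code. The function names, the one-second `batch_interval`, and the sample events are assumptions made for illustration, and the time spent on the processing itself is ignored in the batch model.

```python
from typing import List, Sequence, Tuple

Event = Tuple[float, str]  # (arrival time in seconds, payload)


def micro_batch_latencies(events: Sequence[Event], batch_interval: float) -> List[float]:
    """Latency per event when results are emitted only at the end of each batch interval."""
    latencies = []
    for arrival, _payload in events:
        # An event waits until the interval it arrived in has closed.
        batch_index = int(arrival // batch_interval)
        emit_time = (batch_index + 1) * batch_interval
        latencies.append(emit_time - arrival)
    return latencies


def per_record_latencies(events: Sequence[Event], processing_time: float = 0.0) -> List[float]:
    """Latency per event when each event is processed as soon as it arrives."""
    return [processing_time for _ in events]


events = [(0.25, "a"), (0.5, "b"), (1.75, "c")]  # made-up arrival times
print(micro_batch_latencies(events, batch_interval=1.0))
print(per_record_latencies(events))
```

Under these assumptions, every event in the batch model waits for its interval to close, so its latency depends on where in the interval it arrived. In the row-by-row model, latency is limited only by the per-record processing time.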

Iterations

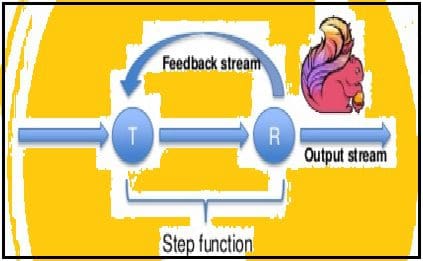

Spark supports iteration over data in batches, whereas Flink can iterate over its data natively by using its streaming architecture. The image below shows how iterative processing takes place.