Data Science Basics: 3 Insights for Beginners

For data science beginners, 3 elementary issues are given overview treatment: supervised vs. unsupervised learning, decision tree pruning, and training vs. testing datasets.

1. What is the difference between supervised and unsupervised learning?

In supervised learning, the learning algorithm is provided outcome data in advance, in the form of a pre-labeled set of instances. It is from this set that the algorithm is expected to learn what to do when it encounters future, previously unseen instances. Classification is a form of supervised learning.

As an example, take the biological taxonomic hierarchy. Organisms are grouped into successfully more specific ranks of domain, kingdom, phylum, etc. If an algorithm was to learn the defining features of the most specific of the subgroups, species, based on the observance of pre-labeled member instances, it could then make a decision as to where future instances should be placed.

If, for instance, an algorithm had built up a robust model and was then presented with what we would recognize to be a fox, it would be able to inspect the fox's collective descriptive attributes (number of legs, teeth type, eye position, etc.) and make a determination of the unlabeled instance's species (if that were the goal of the model).

The trade-off here is that pre-labeling of training data (what the algorithm is fed to construct its understanding of a problem - the model) comes at a cost: the time and trouble needed to perform the labeling. The benefit is that many classification algorithms are very effective when combined with adequate amounts of properly pre-labeled data.

Support vector machines, decision trees, regression, and a whole host of other algorithms fall under supervised learning.

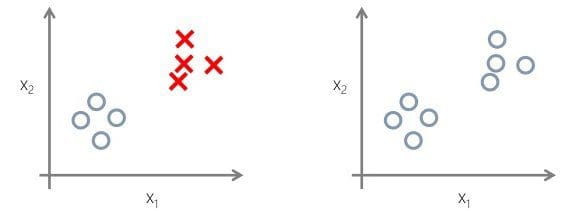

Unsupervised learning differs in that it is not provided with pre-labeled training data in advance. The learning algorithm instead is expected to search for any sensible pattern among the numerous instance attributes. I have a feeling that when the general public hears the term "data mining," this is what it generally thinks of: heaps of Big Data being searched randomly by Big Brother for meaningful patterns. While some data mining is constructed in this fashion (to say nothing of a whole host of statistical methods used to validate potential findings of relevance in the "randomness"), that's certainly not the norm. Clustering is a form of unsupervised learning.

To contrast the above example, unsupervised learning is like having a data set of biological organisms with all of their defining attributes, but no class attribute among them (i.e. no pre-labeling of species). A clustering algorithm would then attempt to group like instances together, attempting to maximize the similarity of grouped instances while minimizing the similarity of ungrouped instances. The grand concept is that, though foxes are not labeled as foxes, they share a number of similar attribute values which would - hopefully - make them identifiable as very similar to one another, while very different from snakes.

The trade-off here is that no pre-labeling - and none of the time associated with it - is required. The problem can be that different classes may not be as easily distinguishable as one assumes (think wolves vs. dogs).

This is a very high-level, but factually correct, overview of supervised and unsupervised learning. As you will soon see, there are all sorts of questions - technical, theoretical, and philosophical - that accompany all types of learning techniques. Knowing how to identify and differentiate 2 of the major classes of learning algorithm, however, is essential at the start of your journey.

2. What is the difference between a pruned decision tree and an unpruned tree?

Decision trees are created by making meaningful decisions as to where to mark boundaries on ranges of attribute values in order to split the instances into 2 or more subcategories, each being represented by different branches of a tree. This process continues, with branches being recursively split into smaller, more specific, branches on different attributes, with the tree leaves being classes. A subsequent walk of the tree with any un-labeled instance would lead to an unambiguous classification.

After a decision tree model is built, it is often pruned. This means that branches which do not add any value to data classification, or branches which, if removed, do not result in a considerable reduction in training data classification accuracy - this accuracy reduction threshold would be pre-specified - are removed, and its sub-trees are combined. The effects of this pruning process can be measured on training data, but effects on unseen test data (or real world data), remain unknown at the time of model training, parameter tuning, and tree pruning.

An unpruned decision tree can lead to overfitting. Overfitting occurs when a data model describes random error or noise, and does not describe the underlying data relationships. Overfitting more accurately fits known data, and in turn is not as good at predicting new data. As a result, this produces too many class outcomes to be useful.

Also, overfitting does not allow for meaningful information to be learned from a model. A tree that is pruned but does not fit the data so well can still be useful as there would be fewer, more meaningful classes. Fewer classes mean that more instances are grouped together, a situation in which there is a better chance that meaningful patterns will emerge and information will be extracted.

Any time that instances are grouped together in fewer classes there is a better chance of patterns being recognized. This is the reason that pruned decision trees, which avoid the overfitting prone to unpruned trees, could be a better choice for learning.

As discussed with supervised vs. unsupervised learning above, you can see that there are obvious trade-offs to pruning a tree vs. deciding against it.

3. What is the difference between a training set and a test set?

They have been mentioned above. What are they, and how do they differ?

A training set is the data set that is used to build a data mining model. The model learns from the training data, and uses what it does learn on subsequent unseen instances. Training data should never be used for testing, since results would not be meaningful.

A test set is an approximation of real world data, used to see how the data mining model would perform on unseen data. This is data that has not been used during training, as this would defeat the purpose.

In real life, coming by well-crafted datasets which have been split into training and testing sets is difficult, especially for real world problems. Often a single set of data is purposefully and statistically split into training and testing sets in order to accomplish the goals of these 2 steps of data mining.

Related: